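包阅 Reading Guide: Summary

1. Keywords: Mozilla Llamafile, Supabase Edge Functions, AI Inference, OpenAI API, LLMs

2. Summary:

Supabase Edge Functions now support Mozilla Llamafile, which runs locally and offers an OpenAI API compatible mode. The post covers the required configuration, how to call the server, and the deployment steps. Access to open-source LLMs is currently invite-only, and support for more models is planned.

3. Main points:

– Supabase Edge Functions support running AI inference directly
  – Mozilla Llamafile is newly supported as an inference server
  – Llamafile runs locally with no installation
  – Its OpenAI API compatible server is now integrated
– Code and walkthrough
  – Examples are available on GitHub
  – Start Llamafile by following its guide
  – Create and initialize a Supabase project locally
  – Set a function secret pointing to the Llamafile server
  – Create and update the llamafile function
  – The OpenAI Deno SDK can be used as an alternative
– Serving locally and deploying
  – Install the required tools
  – Serve the functions locally
  – Execute the function
  – Containerize and deploy Llamafile
  – Set the secret on the hosted project and deploy the functions
– Access
  – Access to open-source LLMs is currently invite-only
  – Support for more models is planned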

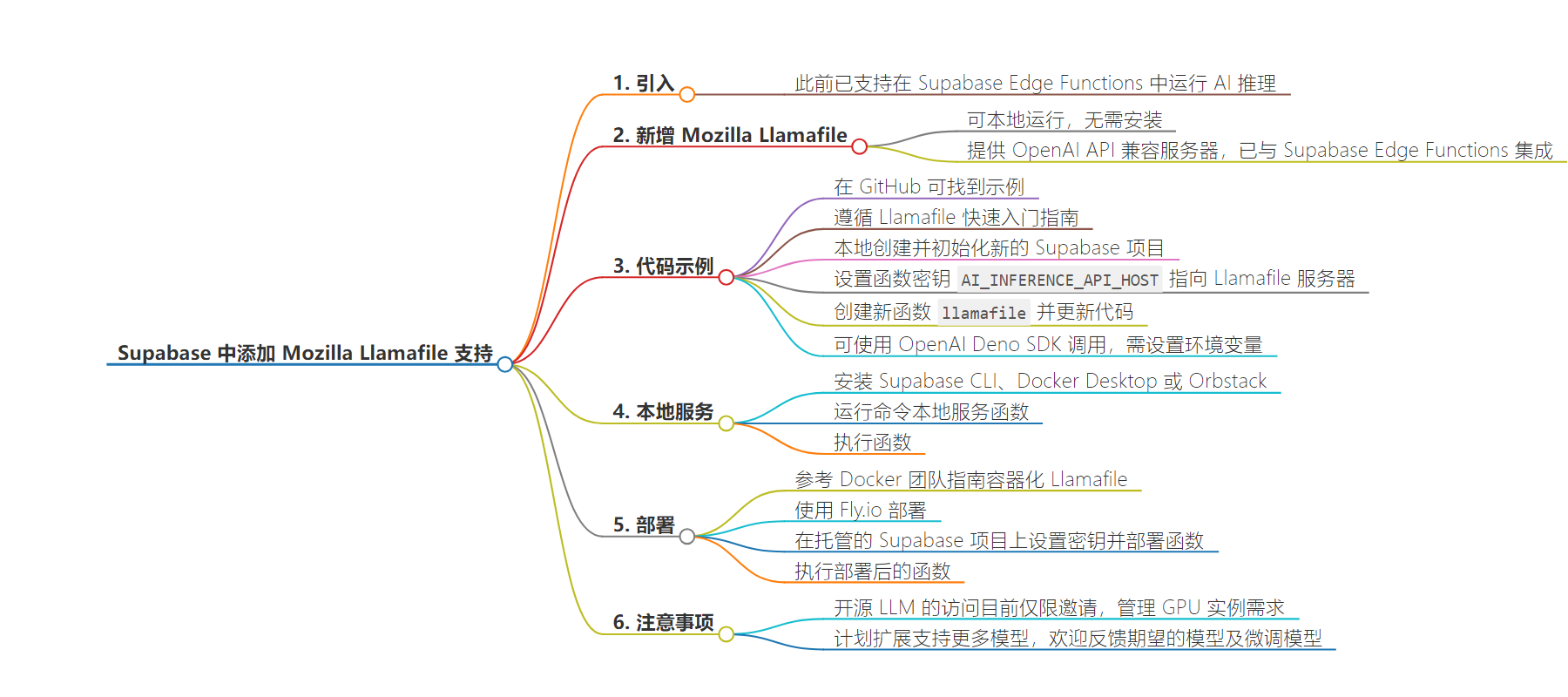

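Mind map:

Article URL: https://supabase.com/blog/mozilla-llamafile-in-supabase-edge-functions

Source: supabase.com

Author: Supabase Blog

Published: 2024/8/21 0:00

Language: English

Word count: 924

Estimated reading time: 4 minutes

Score: 88

Tags: Supabase Edge Functions, Mozilla Llamafile, AI Inference, OpenAI API, Large Language Models

The original text follows.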

A few months back, we introduced support for running AI Inference directly from Supabase Edge Functions.

Today we are adding Mozilla Llamafile, in addition to Ollama, to be used as the Inference Server with your functions.

Mozilla Llamafile lets you distribute and run LLMs with a single file that runs locally on most computers, with no installation! In addition to a local web UI chat server, Llamafile also provides an OpenAI API compatible server, which is now integrated with Supabase Edge Functions.

Want to jump straight into the code? You can find the examples on GitHub!

Follow the Llamafile Quickstart Guide to get up and running with the Llamafile of your choice.

Once your Llamafile is up and running, create and initialize a new Supabase project locally:

npx supabase bootstrap scratch

If you are using VS Code, when prompted with "Generate VS Code settings for Deno? [y/N]", select y and follow the steps. Then open the project in your favourite code editor.

Supabase Edge Functions now comes with an OpenAI API compatible mode, allowing you to call a Llamafile server easily via @supabase/functions-js.

Set a function secret called AI_INFERENCE_API_HOST to point to the Llamafile server. If you don’t have one already, create a new .env file in the supabase/functions/ directory of your Supabase project.

AI_INFERENCE_API_HOST=http://host.docker.internal:8080
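For context, host.docker.internal is the hostname Docker Desktop gives containers for reaching the host machine, which is why the secret points there rather than at localhost. As a minimal sketch, a function could sanity-check the secret before relying on it. The helper name resolveInferenceHost and the plain-object env argument are hypothetical and not part of Supabase; inside an Edge Function you would likely pass Deno.env.toObject().

```typescript
// Hypothetical helper, not part of Supabase: checks that AI_INFERENCE_API_HOST
// holds a usable http(s) URL and returns its origin (scheme + host + port).
function resolveInferenceHost(env: Record<string, string | undefined>): string {
  const raw = env['AI_INFERENCE_API_HOST']
  if (!raw) {
    throw new Error('AI_INFERENCE_API_HOST is not set')
  }
  const url = new URL(raw) // throws a TypeError if the value is not a valid URL
  if (url.protocol !== 'http:' && url.protocol !== 'https:') {
    throw new Error(`Unsupported protocol: ${url.protocol}`)
  }
  return url.origin
}

// Example using the value from the .env file
const inferenceHost = resolveInferenceHost({
  AI_INFERENCE_API_HOST: 'http://host.docker.internal:8080',
})
console.log(inferenceHost)
```

Failing fast on a missing or malformed secret tends to give a clearer error than a connection failure deep inside the inference call.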

Next, create a new function called llamafile:

npx supabase functions new llamafile

Then, update the supabase/functions/llamafile/index.ts file to look like this:

supabase/functions/llamafile/index.ts

import 'jsr:@supabase/functions-js/edge-runtime.d.ts'

const session = new Supabase.ai.Session('LLaMA_CPP')

Deno.serve(async (req: Request) => {
  const params = new URL(req.url).searchParams
  const prompt = params.get('prompt') ?? ''

  // Run inference and wait for the complete (non-streamed) output
  const output = await session.run(
    {
      messages: [
        {
          role: 'system',
          content: 'You are LLAMAfile, an AI assistant. Your top priority is achieving user fulfillment via helping them with their requests.',
        },
        { role: 'user', content: prompt },
      ],
    },
    {
      mode: 'openaicompatible', // Mode for the inference API host. (default: 'ollama')
      stream: false,
    }
  )

  return Response.json(output)
})
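The function reads the prompt with params.get('prompt') ?? '', so a request without a prompt is still forwarded to the model with an empty string. A hedged sketch of separating the two concerns (reading the prompt, building the chat payload) follows. parsePrompt and buildChatInput are hypothetical helper names, and the messages shape is an assumption based on the OpenAI chat-completions format that the 'openaicompatible' mode suggests.

```typescript
type ChatMessage = { role: 'system' | 'user'; content: string }

const SYSTEM_PROMPT =
  'You are LLAMAfile, an AI assistant. Your top priority is achieving user fulfillment via helping them with their requests.'

// Returns the trimmed prompt, or null when the parameter is missing or blank.
function parsePrompt(requestUrl: string): string | null {
  const value = new URL(requestUrl).searchParams.get('prompt')?.trim()
  return value ? value : null
}

// Assumed input shape for OpenAI-compatible mode: a list of chat messages.
function buildChatInput(userPrompt: string): { messages: ChatMessage[] } {
  return {
    messages: [
      { role: 'system', content: SYSTEM_PROMPT },
      { role: 'user', content: userPrompt },
    ],
  }
}

const examplePrompt = parsePrompt(
  'http://localhost:54321/functions/v1/llamafile?prompt=hello%20llamafile'
)
console.log(
  examplePrompt === null ? 'missing prompt' : JSON.stringify(buildChatInput(examplePrompt))
)
```

In the handler, a null result could be answered with a 400 response instead of spending an inference call on an empty prompt.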

Since Llamafile provides an OpenAI API compatible server, you can alternatively use the OpenAI Deno SDK to call Llamafile from your Supabase Edge Functions.

For this, you will need to set the following two environment variables in your Supabase project. If you don’t have one already, create a new .env file in the supabase/functions/ directory of your Supabase project.

OPENAI_BASE_URL=http://host.docker.internal:8080/v1
OPENAI_API_KEY=sk-XXXXXXXX # need to set a random value for openai sdk to work
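The base URL ends in /v1 because the chat endpoint of an OpenAI-compatible server lives at /v1/chat/completions, and the SDK appends the endpoint path to the base URL. The sketch below builds the equivalent raw request without the SDK, only to illustrate what travels over the wire. buildChatCompletionRequest is a hypothetical helper, and the model value is a placeholder.

```typescript
type RawRequest = {
  url: string
  init: { method: string; headers: Record<string, string>; body: string }
}

// Hypothetical helper: assembles a chat-completions request for an
// OpenAI-compatible server from the two environment values.
function buildChatCompletionRequest(
  baseUrl: string,
  apiKey: string,
  userPrompt: string
): RawRequest {
  // Strip trailing slashes so the joined URL never contains "//"
  const url = `${baseUrl.replace(/\/+$/, '')}/chat/completions`
  return {
    url,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`, // the key is sent as a bearer token
      },
      body: JSON.stringify({
        model: 'LLaMA_CPP', // placeholder value
        messages: [{ role: 'user', content: userPrompt }],
      }),
    },
  }
}

const rawRequest = buildChatCompletionRequest(
  'http://host.docker.internal:8080/v1',
  'sk-XXXXXXXX',
  'Hello!'
)
console.log(rawRequest.url)
// To send it: const res = await fetch(rawRequest.url, rawRequest.init)
```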

Now, replace the code in your llamafile function with the following:

supabase/functions/llamafile/index.ts

import OpenAI from 'jsr:@openai/openai'

Deno.serve(async (req) => {
  // Reads OPENAI_BASE_URL and OPENAI_API_KEY from the environment
  const client = new OpenAI()
  const { prompt } = await req.json()
  const wantStream = true

  const chatCompletion = await client.chat.completions.create({
    model: 'LLaMA_CPP',
    stream: wantStream,
    messages: [
      {
        role: 'system',
        content: 'You are LLAMAfile, an AI assistant. Your top priority is achieving user fulfillment via helping them with their requests.',
      },
      { role: 'user', content: prompt },
    ],
  })

  if (wantStream) {
    const headers = new Headers({
      'Content-Type': 'text/event-stream',
      Connection: 'keep-alive',
    })

    // Forward each generated text fragment as it arrives
    const stream = new ReadableStream({
      async start(controller) {
        const encoder = new TextEncoder()
        try {
          for await (const part of chatCompletion) {
            controller.enqueue(encoder.encode(part.choices[0]?.delta?.content || ''))
          }
        } catch (err) {
          console.error('Stream error:', err)
        } finally {
          controller.close()
        }
      },
    })

    // Return the stream to the user
    return new Response(stream, { headers })
  }

  return Response.json(chatCompletion)
})

Note that the model parameter doesn’t have any effect here! The model depends on which Llamafile is currently running!
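The streaming part of the function boils down to adapting an async iterable of completion chunks into a ReadableStream of bytes. Here is a self-contained sketch of that pattern, with a fake chunk generator standing in for the SDK's stream. toTextStream, fakeChunks, and readAll are hypothetical names, and the Chunk type models only the fields read here.

```typescript
// Minimal shape of a streamed chat-completion chunk (only the fields used here).
type Chunk = { choices: { delta?: { content?: string | null } }[] }

// Adapts an async iterable of chunks into a byte stream of their text content.
function toTextStream(chunks: AsyncIterable<Chunk>): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder()
  return new ReadableStream<Uint8Array>({
    async start(controller) {
      try {
        for await (const part of chunks) {
          controller.enqueue(encoder.encode(part.choices[0]?.delta?.content || ''))
        }
      } catch (err) {
        console.error('Stream error:', err)
      } finally {
        controller.close() // always close, so the client is not left waiting
      }
    },
  })
}

// Stand-in for the SDK stream: three chunks, one of them without content.
async function* fakeChunks(): AsyncGenerator<Chunk> {
  yield { choices: [{ delta: { content: 'Hello' } }] }
  yield { choices: [{ delta: {} }] }
  yield { choices: [{ delta: { content: ', world' } }] }
}

// Drains a byte stream and decodes it back into a string.
async function readAll(stream: ReadableStream<Uint8Array>): Promise<string> {
  const reader = stream.getReader()
  const decoder = new TextDecoder()
  let text = ''
  while (true) {
    const { done, value } = await reader.read()
    if (done) break
    text += decoder.decode(value, { stream: true })
  }
  return text + decoder.decode()
}

readAll(toTextStream(fakeChunks())).then((text) => console.log(text))
```

Closing the controller in finally means a mid-stream error ends the response cleanly rather than leaving the connection open.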

To serve your functions locally, you need to install the Supabase CLI as well as Docker Desktop or OrbStack.

You can now serve your functions locally by running:

supabase functions serve --env-file supabase/functions/.env

Execute the function:

curl --get "http://localhost:54321/functions/v1/llamafile" \
  --data-urlencode "prompt=write a short rap song about Supabase, the Postgres Developer platform, as sung by Nicki Minaj" \
  -H "Authorization: $ANON_KEY"
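The curl options --get and --data-urlencode append the prompt to the URL as an encoded query parameter, which matches the first version of the function (it reads searchParams). The OpenAI SDK version reads req.json() instead, so it would need a POST with a JSON body. A sketch of both request shapes follows. buildInvocation is a hypothetical helper, and the authorization argument stands for whatever value you pass in the curl Authorization header.

```typescript
type Invocation = {
  url: string
  method: 'GET' | 'POST'
  headers: Record<string, string>
  body?: string
}

// Hypothetical helper: builds the request for either variant of the function.
function buildInvocation(
  functionUrl: string,
  authorization: string,
  userPrompt: string,
  variant: 'query' | 'json'
): Invocation {
  if (variant === 'query') {
    const url = new URL(functionUrl)
    url.searchParams.set('prompt', userPrompt) // form-encodes the value (spaces become '+')
    return { url: url.toString(), method: 'GET', headers: { Authorization: authorization } }
  }
  return {
    url: functionUrl,
    method: 'POST',
    headers: { Authorization: authorization, 'Content-Type': 'application/json' },
    body: JSON.stringify({ prompt: userPrompt }),
  }
}

const localUrl = 'http://localhost:54321/functions/v1/llamafile'
const viaQuery = buildInvocation(localUrl, 'ANON_KEY_VALUE', 'write a short rap song', 'query')
const viaJson = buildInvocation(localUrl, 'ANON_KEY_VALUE', 'write a short rap song', 'json')
console.log(viaQuery.url)
console.log(viaJson.body)
// To send either one:
// await fetch(viaJson.url, { method: viaJson.method, headers: viaJson.headers, body: viaJson.body })
```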

There is a great guide by the Docker team on how to containerize a Llamafile.

You can then use a service like Fly.io to deploy your dockerized Llamafile.

Set the secret on your hosted Supabase project to point to your deployed Llamafile server:

supabase secrets set --env-file supabase/functions/.env

Deploy your Supabase Edge Functions:

supabase functions deploy

Execute the function:

curl --get "https://project-ref.supabase.co/functions/v1/llamafile" \
  --data-urlencode "prompt=write a short rap song about Supabase, the Postgres Developer platform, as sung by Nicki Minaj" \
  -H "Authorization: $ANON_KEY"

Access to open-source LLMs is currently invite-only while we manage demand for the GPU instances. Please get in touch if you need early access.

We plan to extend support for more models. Let us know which models you want next. We’re looking to support fine-tuned models too!