Baoyue Reading Guide Summary

Keywords: AI-Assisted Coding, Security, Code Volume, Vulnerabilities, Supply Chain Risks

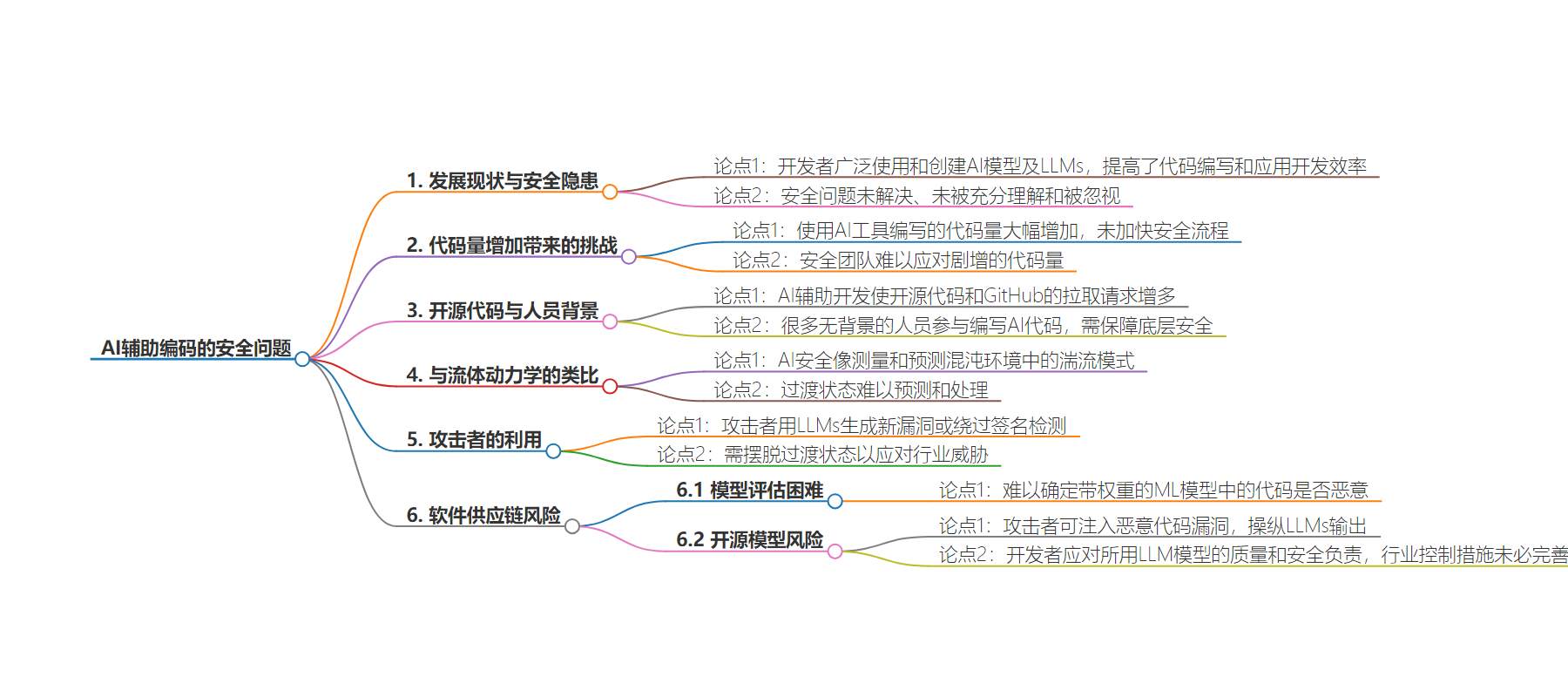

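Summary: This article examines the security problems raised by AI-assisted coding, even as it makes writing code and building applications easier. It points out that the surge in code volume makes security harder to manage, that attackers use AI to generate new vulnerabilities, and that developers struggle to detect malicious code. It stresses the need to address these security and supply chain risks.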

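Main content:

– The popularity of AI-assisted coding and its security risks

– Developers widely use AI models and large language models, and their rapid development brings security problems that have not been adequately addressed or understood.

– Growing code volume and security challenges

– Writing code with these tools greatly increases output, but security processes have not kept pace, and security teams struggle to cope with the surge in code.

– Open source code and security problems

– AI-assisted development increases the amount of open source code, and newcomers are taking part without sufficient security awareness.

– Attackers use LLMs to generate new vulnerabilities that evade detection.

– Threats in the software supply chain

– It is hard to determine whether AI-generated code is malicious.

– Attackers can inject malicious code and manipulate output.

– Developers should take responsibility for the quality and safety of the models they use, but industry controls are not necessarily in place.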


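Article URL: https://thenewstack.io/ai-assisted-coding-a-double-edged-sword-for-security/

Source: thenewstack.io

Author: B. Cameron Gain

Published: 2024/7/24 16:22

Language: English

Word count: 1171

Estimated reading time: 5 minutes

Rating: 88

Tags: AI-assisted coding, security, large language models, Copilot, GPT

The original article follows.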


PARIS — Developers are using and creating AI models and large language models (LLMs) on a wide scale, both to generate code and to build LLM-based applications. LLMs have exploded in popularity, but despite the enthusiasm, the security issues arising from this rapid advance remain unsolved, not fully understood and, ultimately, overlooked.

Some observers, especially in the security business, argue that we are moving far too fast, adopting these tools before security is properly implemented and managed. This mirrors the mistake made with cloud native technologies such as Kubernetes, which were widely adopted before their security concerns were fully addressed. And it is hard to stop anyone from using a cutting-edge technology until every security issue is resolved.

“The problem is the volume of code — using tools like Copilot and GPT to write code means you’re writing much more code than before, which is great for productivity but doesn’t speed up the security process. We’re essentially clogging up the pipes with all this new code,” Dan Lorenc, co-founder and CEO of Chainguard, said during his keynote at the recent Linux Foundation AI_dev conference. “Security was already struggling to keep up with developers, and now the amount of code being written has increased tenfold… No security team is prepared to handle 100 times the amount of code.”

The difficulties security teams face in reviewing code and monitoring security through observability are well known. Those teams look for bugs and vulnerabilities and test new features, but "this approach won't work with 100 times the amount of code and 100 times the number of features, and 10 times the number of developers," Lorenc told me a few days ahead of the conference.

AI-assisted development has led to an explosion in available open source code and in pull requests on GitHub, for example. As Lorenc noted, AI repositories on GitHub often gain hundreds of thousands of stars over a single weekend, and many people are writing AI code even without a background in it. "It's great to see new people are writing and consuming code, which is wonderful, but we can't forget about securing all the layers underneath — nothing changes. But you have to prepare for the new generations of people who don't know about all those little problems," Lorenc said. "You have to make it so they don't need to know about security — telling every developer that they must know about security has never worked."

During his keynote, Lorenc compared the present dilemma with AI-generated code and models to fluid dynamics, a very difficult subject, as I heard when I was in college. Rather than resembling the more predictable laminar flow, AI security is like measuring and predicting the turbulent flow patterns that arise in chaotic environments. As developers use AI tools to write more and more code, they are not using those same techniques to help review that code, which has led to the present situation: more water is "flowing through the pipe than we can handle," Lorenc said.

“The transitional state here, when something first begins to transition from that one model to another, this is actually the toughest to reason about. This is the toughest type of system to model. In fluid dynamics, that transition doesn’t really happen at a set time, either,” Lorenc said. “There are some constants and ratios you can plug in to try to predict and guess which state of that you’re in, but a lot of engineering work goes into ensuring you know which regime you’re operating in, whether it’s laminar or turbulent. When you’re here in the middle, you don’t really know what to do.”

"Already attackers have begun to use LLMs to generate new vulnerabilities or permutations on existing vulnerabilities to bypass signature detection," Lorenc said. "We have to get out of that transitional state in order to fully understand and be able to work together as an industry again," he added. "When that transition completes, though, and you get back over to the turbulent one, it makes folks feel really uncomfortable because scientists and physicists can't actually explain how this works."
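Lorenc's point about permutations is easiest to see against the simplest kind of signature: an exact fingerprint of a known-bad payload. The toy sketch below assumes a hash-based signature list; the `KNOWN_BAD` set, the `matches_signature` helper and both payload strings are hypothetical, and production scanners match far more flexible patterns. Still, any signature keyed to surface form can be sidestepped by a rewording that preserves behavior, which is exactly the kind of variant an LLM can produce in bulk.

```python
import hashlib

# Hypothetical signature list: SHA-256 fingerprints of known-bad snippets.
KNOWN_BAD = {
    hashlib.sha256(b"os.system(user_input)").hexdigest(),
}


def matches_signature(payload: bytes) -> bool:
    """Return True only if the payload's exact fingerprint is already known."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD


original = b"os.system(user_input)"
# Same behavior, different bytes: only whitespace inside the call changed.
variant = b"os.system( user_input )"

print(matches_signature(original))  # True: exact match on a known payload
print(matches_signature(variant))   # False: the permutation slips past
```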


Developers can make diligent efforts to vet AI-generated code, but more work is needed before vulnerability detection and remediation can become effective. During their talk "Dark Side of AI: The Hidden Supply Chain Risks in Open," Jossef Kadouri and Tzachi Zornshtain, who are both heads of software supply chain at Checkmarx, described specific threat vectors.

When ML models are distributed as weights (the parameters tuned to improve the results of a specific LLM's neural network), it is very difficult to determine whether the code is malicious. "I'm not saying the developer used to check the code that they were downloading — most of them weren't doing that, but at least they had the option," Zornshtain said. "When you're getting an ML model, is it good or is it bad? It's actually a bit more problematic for us to apply some of the changes there."
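The speakers do not name a model format, but one concrete way a downloaded model can hide malicious code is that many checkpoints, including those written by PyTorch's `torch.save`, are Python pickles, and unpickling can import and call arbitrary functions. A minimal sketch, assuming a pickle-based model file, is to walk its opcodes statically with the standard library's `pickletools` and flag imports from risky modules before anything is loaded. The helper names and the deny-list are hypothetical and illustrative only; a deny-list is easy to evade, so this is a first filter, not a guarantee.

```python
import pickle
import pickletools

# Illustrative deny-list: importing from these during unpickling could run
# shell commands or arbitrary Python. Not exhaustive.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "sys"}

# Opcodes that push a string onto the unpickling stack.
STRING_OPCODES = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def imported_globals(data: bytes) -> list[tuple[str, str]]:
    """List the (module, name) pairs a pickle would import, without loading it."""
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Older protocols encode "module name" as one space-separated arg.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Simplification: assumes module and name were pushed directly,
            # not fetched back from the memo.
            found.append((recent_strings[-2], recent_strings[-1]))
        elif opcode.name in STRING_OPCODES:
            recent_strings.append(arg)
    return found


def is_suspicious(data: bytes) -> bool:
    """Flag the pickle if it imports anything from a deny-listed module."""
    return any(module.split(".")[0] in SUSPICIOUS_MODULES
               for module, _name in imported_globals(data))


benign = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
print(imported_globals(benign))  # [] since plain data needs no imports
print(is_suspicious(benign))     # False
```

A pickle whose `__reduce__` returns `os.system` would surface as an import of `system` from `posix` (or `nt` on Windows) and be flagged, all without executing the file.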

Who among us, when writing a program or generating code, has not at least asked ChatGPT for its opinion or used a tool like GitHub Copilot? However, the same input to ChatGPT or another LLM does not always produce the same output, because these models generate their responses probabilistically and can also hallucinate. When developers build a service on open source LLMs, an attacker can upload a malicious model to the same place and "trick you to use it," Zornshtain said. Bad actors can also inject malicious code or vulnerabilities into LLMs and use social engineering to manipulate the output of specific LLMs, to name just two risks, he said.
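A basic control against being tricked into loading a swapped or look-alike model is to pin the exact artifact that was vetted: record its SHA-256 digest and refuse any file that does not match. A minimal sketch, assuming the expected digest comes from a trusted record made when the model was first reviewed; `sha256_file` and `verify_model` are hypothetical helpers, not part of any model hub's API. Pinning only proves you received the same bytes you reviewed; it says nothing about whether those bytes were safe to begin with.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large model files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: Path, expected_sha256: str) -> None:
    """Raise before loading if the file differs from the pinned, vetted artifact."""
    actual = sha256_file(path)
    if actual != expected_sha256.lower():
        raise ValueError(
            f"{path}: digest mismatch (expected {expected_sha256}, got {actual}); "
            "refusing to load"
        )
```

In use, a call such as `verify_model(Path("model.bin"), pinned_digest)` (both names hypothetical) would run before the file is handed to any loader.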

Meanwhile, developers using open source packages or models should take responsibility for the quality and safety of the models they use, Zornshtain said. "That being said, as an industry providing those solutions, do we have the right controls in place? Not necessarily," Zornshtain said. "We know where the safety concerns lie, but are those checks being performed today? Based on what we are seeing, the answer isn't necessarily what we want."
