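Reading Guide Summary

1. Keywords: code review, causes of slowness, improvement techniques, social aspects, performance optimization

2. Summary: This article examines why code reviews are slow, covering factors such as people, rules, and complexity. It describes ways to improve them and pitfalls to watch out for, and emphasizes optimizing the code review process from both a social-cultural and a technical angle.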

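3. Main content:

– Definition and causes of slow code reviews

  – Defined as the time from sending a review request to getting approval from all required reviewers

  – Causes include too many reviewers, overly strict guidelines, large code changes, many back-and-forth iterations, and slow feedback

– Social aspects

  – Lack of incentives to prioritize reviews

  – Review styles are subjective and varied

  – How ownership is defined affects the review process

– What to improve

  – Consider code complexity, e.g., lines of change, number of references, over-engineering

  – Focus on iteration time, since it affects feedback, developer experience, and more

– What not to improve directly

  – Avoid forcing a shorter lead time for changes

  – Test coverage percentage as an absolute number is not the best metric

– How to improve

  – Set a review service level objective (SLO)

  – Treat gigantic code reviews separately

  – Account for cross-team reviews

  – Pay attention to business days, time zones, etc.

  – Improve the review SLO: prioritize reviews, schedule time for them, use tools, and monitor and adjust regularly

  – Define a revision SLO and how to handle rewrites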


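Article URL: https://thenewstack.io/the-anatomy-of-slow-code-reviews/

Source: thenewstack.io

Author: Ankit Jain

Published: 2024/7/18 13:38

Language: English

Word count: 1753

Estimated reading time: 8 minutes

Rating: 85

Tags: code review, software development, social and cultural factors, review guidelines, code complexity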

The original article follows.


Almost every software developer complains about slow code reviews, but it can be hard to pin down what’s causing them. Sometimes the right owners were never identified, but often the cause is a lack of communication. In this post, we will explore what can slow code reviews down and look at techniques for improving them.

Code Review Time

Total code review time can be defined as the time between sending a review request and getting approval from all the required reviewers. This process can quickly develop bottlenecks in most companies where inter-team code reviews are common.
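To make this definition concrete, here is a minimal Python sketch. It assumes a hypothetical data model in which each review has a request timestamp and one approval timestamp per required reviewer; real code-hosting tools expose this data differently. The review only counts as done when the slowest required reviewer approves:

```python
from datetime import datetime, timedelta
from typing import Optional


def total_review_time(
    requested_at: datetime,
    approvals: dict[str, Optional[datetime]],
) -> Optional[timedelta]:
    """Time from the review request until every required reviewer approved.

    `approvals` maps each required reviewer to their approval time, or None
    if they have not approved yet. Returns None while any approval is missing.
    """
    if not approvals or any(t is None for t in approvals.values()):
        return None
    # The slowest required reviewer determines when the review is complete.
    return max(approvals.values()) - requested_at


requested = datetime(2024, 7, 15, 9, 0)
approvals = {
    "alice": datetime(2024, 7, 15, 11, 30),  # hypothetical reviewers
    "bob": datetime(2024, 7, 16, 14, 0),
}
print(total_review_time(requested, approvals))  # 1 day, 5:00:00
```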

To understand what’s causing bottlenecks, here are some questions worth asking:

- Do we have too many reviewers for each change?

- Do we have overly strict review guidelines?

- Are the code changes too big to review?

- Are there too many back-and-forth iterations?

- Are reviewers taking a long time to provide feedback?

- Are authors taking a long time to implement feedback?

- Are reviewers often out of the office or in different time zones?

When considering these questions and the corresponding answers, it’s clear that many code review challenges are social or cultural.

The Social Aspects

Incentives

In a typical engineering environment, there are no incentives to prioritize code reviews. One exception is when the reviewer is also responsible for the timely launch of a product or feature associated with the code review.

In cases where there are no incentives, it makes sense for a developer to prioritize finishing their work over reviewing others’ changes.

A question worth asking: Is there a way to account for code review efforts in the performance cycle?

Review Styles

Code reviews are inherently subjective. Every developer, shaped by their experiences and preferences, approaches reviews differently. Some may focus on the nitty-gritty details of syntax and style, while others prioritize architectural decisions and code efficiency. Although these variations bring a well-rounded perspective to the codebase, they can also slow down the review process.

A question worth asking: Are code review guidelines well-defined and documented?

Ownership

While well-defined ownership is essential for maintaining quality and accountability, it can also introduce hurdles in the review process. If ownership is too fine-grained, a small set of developers may receive a large share of the reviews and become a bottleneck. If it is too coarse, identifying the right reviewer to provide good feedback may be difficult.

A question worth asking: How many directories or repositories have three or fewer reviewers?

What to Improve

Knowing what to improve is more important than learning how to improve. Unlike CI/CD system performance, code review performance depends on people, not machines. Therefore, instead of looking at “code review time” as a whole, break it down into distinct areas where you can make improvements, keeping the social and cultural aspects of the process in mind. This can help you optimize code review times.

Code Complexity

Code complexity can be challenging to measure. A more scientific metric is cyclomatic complexity, introduced by Thomas J. McCabe in 1976. It measures the number of linearly independent paths through a program’s source code. However, from a code review standpoint, the most important thing to consider is whether the code is easy to understand—or its “reviewability.”
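For reference, McCabe’s metric is usually defined on a program’s control-flow graph as M = E - N + 2P (edges, nodes, and connected components). A common shortcut, sketched below for Python source, counts decision points and adds one. The set of node types counted here is a simplifying assumption; real tools differ in the details, so treat the numbers as illustrative:

```python
import ast

# Node types treated as decision points in this simplified count.
_BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.While, ast.ExceptHandler, ast.comprehension)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds one extra path per additional operand.
            complexity += len(node.values) - 1
    return complexity


example = '''
def shipping_cost(weight, express, member):
    if weight <= 0:
        raise ValueError("weight must be positive")
    cost = 5.0 if weight < 1 else 5.0 + weight
    if express and not member:
        cost *= 2
    return cost
'''
print(cyclomatic_complexity(example))  # 5
```

Here the two `if` statements, the conditional expression, and the `and` each add one path to the baseline of one.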

To simplify, we can use some proxies to define code complexity:

- Lines of change: The fewer lines there are to review, the easier the review tends to be.

- Number of references: The number of places that use the methods or services being modified.

- Over-engineering: Are you trying to make code too generic or building for a distant future scenario? This may also be hard to determine and require more judgment from the reviewers.
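The first proxy is easy to automate. This sketch assumes the change is available as git-style unified diff text (for example, the output of `git diff`) and counts added and removed lines inside hunks, ignoring the file headers. The function name is hypothetical:

```python
def lines_of_change(diff_text: str) -> tuple[int, int]:
    """Return (added, removed) line counts from git-style unified diff text.

    Only lines inside hunks (after an "@@" header) are counted, so the
    "--- a/file" and "+++ b/file" headers are ignored.
    """
    added = removed = 0
    in_hunk = False
    for line in diff_text.splitlines():
        if line.startswith("diff "):
            in_hunk = False  # a new file section begins with its own headers
        elif line.startswith("@@"):
            in_hunk = True
        elif in_hunk and line.startswith("+"):
            added += 1
        elif in_hunk and line.startswith("-"):
            removed += 1
    return added, removed


sample = """diff --git a/app.py b/app.py
index 1111111..2222222 100644
--- a/app.py
+++ b/app.py
@@ -1,2 +1,3 @@
 import os
-print("hi")
+print("hello")
+print("world")
"""
print(lines_of_change(sample))  # (2, 1)
```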

Iteration Time

If code review is a turn-based exchange of feedback between the code change author and the reviewers, then “iteration time” can be defined as how long it takes for each exchange to reach a satisfactory conclusion.

In a code review process, it’s hard to know beforehand how many iterations it will take before the change is ready to be merged. However, reducing the time for each iteration can improve the overall time for code review.
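One way to measure this, sketched under the assumption that a review’s history is a list of timestamped events tagged with who acted (`"author"` or `"reviewer"`, a hypothetical format), is to split the timeline into turns and record how long each side took to respond:

```python
from datetime import datetime, timedelta

Event = tuple[datetime, str]  # (timestamp, "author" or "reviewer")


def turn_times(events: list[Event]) -> list[tuple[str, timedelta]]:
    """Return (responding party, time taken) for each hand-off in a review.

    Consecutive events by the same party are merged into one turn, and the
    wait is measured from that party's first event in the turn.
    """
    turns = []
    prev_ts, prev_actor = None, None
    for ts, actor in sorted(events):
        if actor != prev_actor:
            if prev_actor is not None:
                turns.append((actor, ts - prev_ts))
            prev_ts, prev_actor = ts, actor
    return turns


events = [
    (datetime(2024, 7, 15, 9, 0), "author"),     # review requested
    (datetime(2024, 7, 15, 15, 0), "reviewer"),  # first comments
    (datetime(2024, 7, 16, 10, 0), "author"),    # revision pushed
    (datetime(2024, 7, 16, 12, 0), "reviewer"),  # approval
]
for who, wait in turn_times(events):
    print(who, wait)
```

Because the turns add up to the total review time, shortening any one of them shortens the whole review, even when the number of iterations is unknown in advance.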

Here’s why iteration time is essential:

- Accelerated feedback loop: The quicker the turnaround time, the faster developers can address issues, iterate on improvements, and merge their code into the main codebase.

- Improve developer experience: When developers experience swift and constructive feedback during code reviews, they feel empowered to do their best work. This satisfaction boosts individual morale and increases retention rates and overall team cohesion.

- Stay context-aware: When it takes a long time to respond to a code review, the author and the reviewer may lose some of the change’s context and background. This can have severe implications and cause defects.

- Encourage good behavior: Slow code reviews discourage developers from going the extra mile and performing refactors or code cleanups — because that would add more time to the approval process.

What Not To Improve (Directly)?

Lead Time for Changes

One of the key DORA metrics is “lead time for changes.” It measures the time from code commit to code deployment, and a shorter lead time is generally associated with better software delivery performance. Along with the CI and CD processes, code reviews play a significant role in improving this metric.

It’s tempting to focus on reducing the total time for code approval. However, enforcing strict timelines for approving a code change is unrealistic and might push reviewers to approve a PR prematurely just to meet the target.

Instead, most complaints about slow code reviews can be resolved by benchmarking and improving review response times. Google’s code review guidelines also emphasize response time as a critical indicator:

“It’s even more important for individual responses to come quickly than for the process to happen rapidly.”

Test Coverage Percentage

Although it’s a standard way of measuring code health, test coverage percentage as an absolute number is not the best metric. High test coverage can give a false sense of security, implying the software is well-tested and bug-free. It also has diminishing returns: as test coverage increases, the effort to cover the last few lines of code can be disproportionately high compared with the benefits.

Actual test effectiveness is much harder to measure. Effective testing requires thoughtful consideration of edge cases, failure modes, and user scenarios that coverage metrics do not capture.
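A small hypothetical example of that false sense of security: the test below executes every line of `average`, so a line-coverage tool would report 100%, yet the empty-input edge case is never exercised:

```python
def average(values: list[float]) -> float:
    return sum(values) / len(values)


def test_average() -> None:
    # Executes every line of `average`, so line coverage is 100%...
    assert average([1.0, 2.0, 3.0]) == 2.0


test_average()

# ...but the untested empty-list edge case still fails.
try:
    average([])
except ZeroDivisionError:
    print("average([]) raises ZeroDivisionError despite full line coverage")
```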

How to Improve

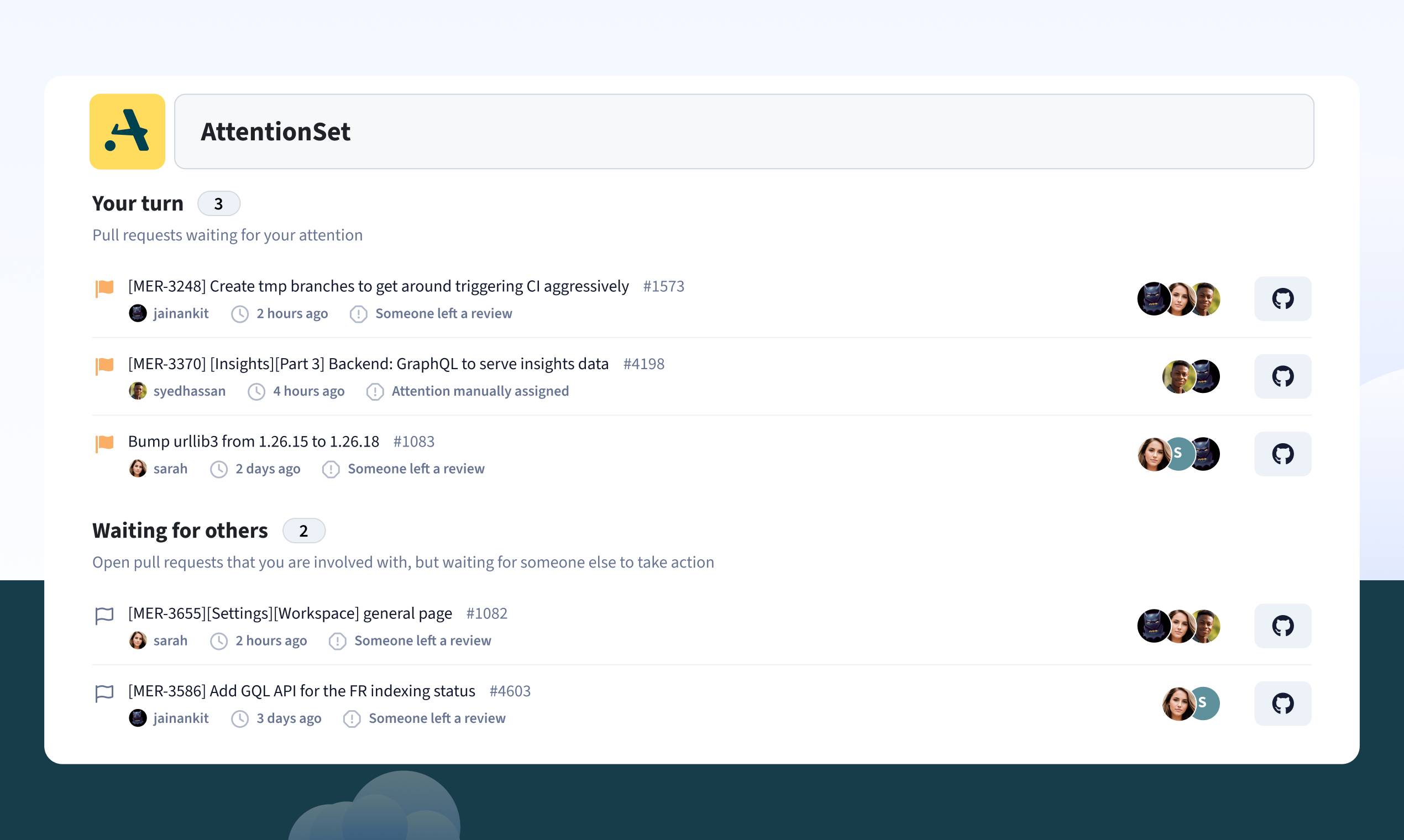

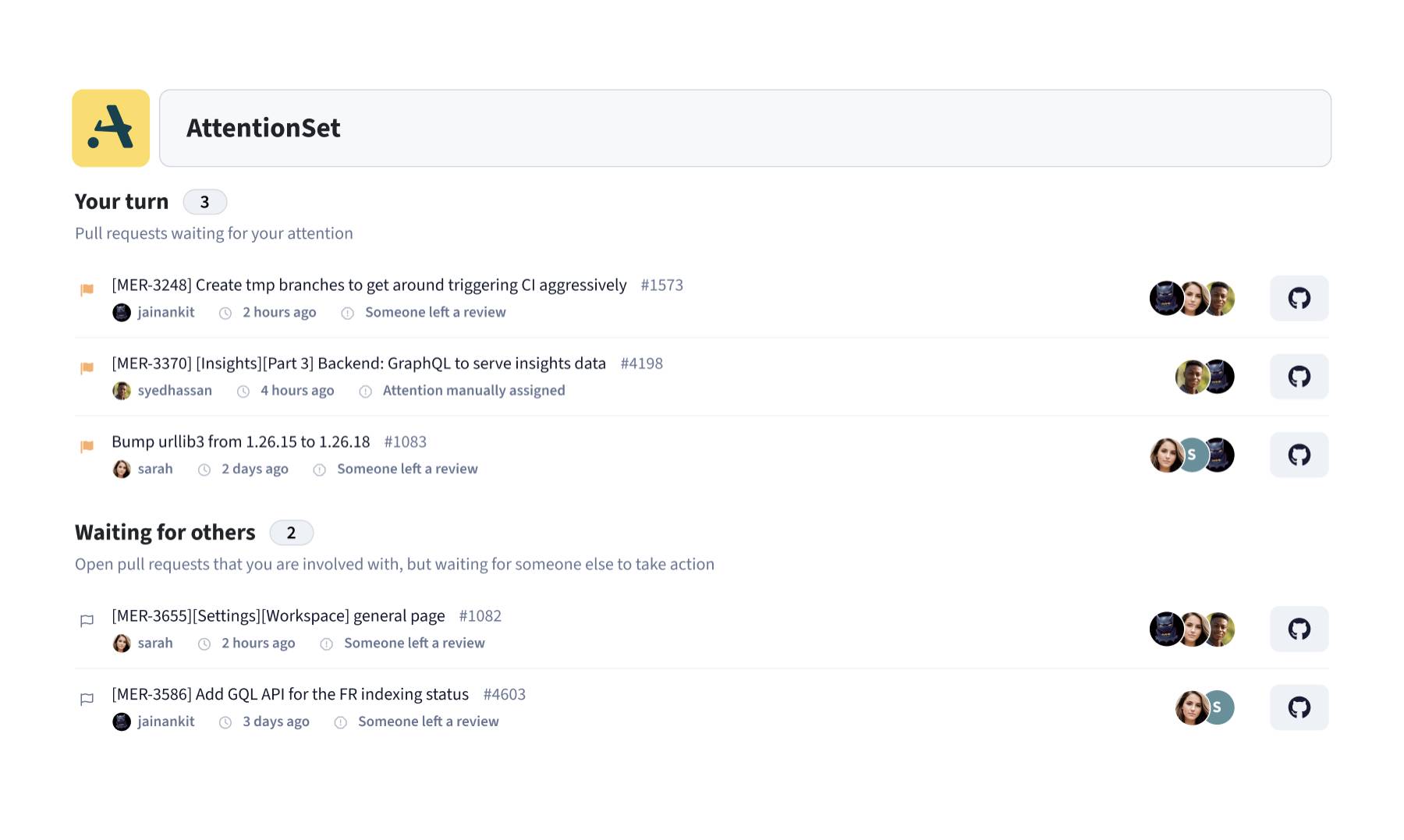

Review SLO

A service level objective (SLO) for code review can be defined as the suggested amount of time the reviewer should take to respond to a code change. Note that this is not the time it takes for a PR to be approved; instead, it’s just the time it takes to get a response.

Consider the code review SLO as an agreement between the author and the reviewer for a pull request. With this agreement, the author is incentivized to create PRs that fall within the bounds of the SLO agreement, and reviewers are incentivized to stay on top of the PRs.
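A minimal sketch of checking this SLO, assuming a hypothetical 24-hour target measured in wall-clock time (business days and time zones are ignored here). The clock stops at the reviewer’s first response, whether a comment or an approval, not at final approval:

```python
from datetime import datetime, timedelta
from typing import Optional

REVIEW_SLO = timedelta(hours=24)  # hypothetical target


def meets_review_slo(
    requested_at: datetime,
    first_response_at: Optional[datetime],
    now: datetime,
    slo: timedelta = REVIEW_SLO,
) -> bool:
    """True if the reviewer responded (comment or approval) within the SLO.

    A review with no response yet only counts as a miss once the SLO window
    has elapsed.
    """
    if first_response_at is None:
        return now - requested_at <= slo
    return first_response_at - requested_at <= slo


req = datetime(2024, 7, 15, 9, 0)
print(meets_review_slo(req, datetime(2024, 7, 15, 17, 0), now=datetime(2024, 7, 17)))  # True
print(meets_review_slo(req, None, now=datetime(2024, 7, 17)))  # False
```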

Gigantic Code Reviews

But wait a second: not all code reviews are the same! How can we expect someone to review a 1,000-line change in the same amount of time as a two-line change? It’s essential to be realistic when benchmarking iteration time, so the benchmark must take the size of the review into account. For instance, a good default could be “code changes with fewer than 200 lines require a response within one business day.”

Larger changes can then be left out of the SLO. This also encourages developers to prepare smaller changes that are easier to review.
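The size-based default above could be encoded as a simple rule. This sketch assumes the 200-line threshold from the example and returns a target in business days, or `None` for changes that fall outside the SLO; the names and review data are hypothetical:

```python
from typing import Optional

SMALL_CHANGE_LIMIT = 200  # lines of change, from the example default


def review_slo_days(lines_changed: int) -> Optional[int]:
    """Business days allowed for a first response, or None if out of scope.

    Changes at or above the limit are excluded from the SLO rather than
    being given a longer target.
    """
    return 1 if lines_changed < SMALL_CHANGE_LIMIT else None


# Hypothetical open reviews: (review id, lines changed)
open_reviews = [("r1", 12), ("r2", 180), ("r3", 1000)]
tracked = [rid for rid, size in open_reviews if review_slo_days(size) is not None]
print(tracked)  # ['r1', 'r2']
```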

Cross-Team Reviews

Software engineering is a team-based activity. It’s common for developers working in the same team to respond faster to one another’s code reviews because they’ve already built a social contract. Therefore, benchmarking inter-team and intra-team reviews differently can also be valuable.
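As a sketch of benchmarking the two groups separately, assuming each response is recorded as a hypothetical `(author_team, reviewer_team, response_hours)` tuple, the median response time can be computed per group:

```python
from collections import defaultdict
from statistics import median

# Hypothetical records: (author_team, reviewer_team, response_hours)
responses = [
    ("payments", "payments", 2.0),
    ("payments", "payments", 5.0),
    ("payments", "platform", 20.0),
    ("search", "platform", 30.0),
    ("search", "search", 3.0),
]


def median_response_by_kind(records):
    """Median response time in hours, split into intra- and inter-team reviews."""
    buckets = defaultdict(list)
    for author_team, reviewer_team, hours in records:
        kind = "intra-team" if author_team == reviewer_team else "inter-team"
        buckets[kind].append(hours)
    return {kind: median(hours) for kind, hours in buckets.items()}


print(median_response_by_kind(responses))  # {'intra-team': 3.0, 'inter-team': 25.0}
```

The median is used rather than the mean so that a few very slow reviews do not dominate the benchmark; that choice is an assumption, not a requirement.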

Other Considerations

- Business days: To get meaningful data, track working days and account for company and regional holidays.

- Time zones: Similarly, account for time zones when measuring response times. For instance, if you’re in California and your code reviewer is in Australia, a review you send at 10:00 a.m. on a Friday arrives in the early hours of Saturday for the reviewer, so expect a reply on their Monday morning at the earliest.
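Both considerations can be folded into the measurement itself. The sketch below counts only working hours (assumed to be 9:00 to 17:00, Monday to Friday, minus a supplied set of holidays) in the reviewer’s time zone. It uses fixed UTC offsets to stay self-contained; real code should use `zoneinfo` time zones so daylight-saving changes are handled. Sydney is an assumed reviewer location for the California-to-Australia example:

```python
from datetime import date, datetime, time, timedelta, timezone

# Fixed offsets for illustration only (no daylight-saving handling).
CALIFORNIA = timezone(timedelta(hours=-7))  # PDT
SYDNEY = timezone(timedelta(hours=10))      # AEST

WORK_START, WORK_END = time(9), time(17)  # assumed working hours


def business_hours_between(start: datetime, end: datetime, tz: timezone,
                           holidays: frozenset[date] = frozenset()) -> float:
    """Working hours elapsed between two aware datetimes, measured in `tz`.

    Weekends, the given holidays, and time outside WORK_START..WORK_END
    are skipped.
    """
    start, end = start.astimezone(tz), end.astimezone(tz)
    total = timedelta()
    day = start.date()
    while day <= end.date():
        if day.weekday() < 5 and day not in holidays:
            open_at = datetime.combine(day, WORK_START, tzinfo=tz)
            close_at = datetime.combine(day, WORK_END, tzinfo=tz)
            lo, hi = max(start, open_at), min(end, close_at)
            if hi > lo:
                total += hi - lo
        day += timedelta(days=1)
    return total / timedelta(hours=1)


sent = datetime(2024, 7, 19, 10, 0, tzinfo=CALIFORNIA)  # Friday 10:00 a.m.
reply = datetime(2024, 7, 22, 10, 0, tzinfo=SYDNEY)     # Monday 10:00 a.m.
print(business_hours_between(sent, reply, SYDNEY))  # 1.0
print((reply - sent) / timedelta(hours=1))          # 55.0 wall-clock hours
```

Measured this way, the Friday request answered on the reviewer’s Monday morning counts as one working hour rather than 55 wall-clock hours.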

Improving the Review SLO

- Prioritize code reviews: Encourage the team to consider code reviews an integral part of the development process, not an afterthought.

- Schedule time for reviews: Team members should block off specific times during the workday for code reviews. This ensures that reviews are done promptly and that feedback is provided to the developer as quickly as possible.

- Use tools to speed up feedback: Implement tools to help automate some aspects of the code review process, like flagging potential issues. These tools can help identify issues in the code that may take a human reviewer longer to spot.

- Regularly monitor and adjust SLOs: This ensures the team always aims for a realistic target in their code review process.
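For the monitoring step, one option is to track both the share of reviews answered within the target and a high percentile of response times. This is a sketch with hypothetical data and a hypothetical 24-hour target:

```python
from statistics import quantiles


def slo_report(response_hours: list[float], target_hours: float) -> dict[str, float]:
    """Summarize recent first-response times against a review SLO target.

    Needs at least two data points (statistics.quantiles requires this
    before Python 3.13).
    """
    within = sum(1 for h in response_hours if h <= target_hours)
    # With n=10 there are nine cut points; the last one is the 90th percentile.
    p90 = quantiles(response_hours, n=10, method="inclusive")[-1]
    return {"attainment": within / len(response_hours), "p90_hours": p90}


# Hypothetical first-response times (hours) from the last sprint.
recent = [2, 3, 4, 5, 6, 8, 10, 12, 20, 30]
print(slo_report(recent, target_hours=24))  # {'attainment': 0.9, 'p90_hours': 21.0}
```

How to adjust is a judgment call: attainment near 100% for several cycles may mean the target is looser than needed, while persistently low attainment suggests revisiting the target or reviewer capacity.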

Revision SLO

Similar to a review SLO, a revision SLO can be defined as the suggested amount of time the author of a code change should take to respond after the reviewer has left comments or approved the code change.

Rewrites

In many cases, the revision SLO should be very brief — for instance, if the reviewer’s feedback is minimal or if the author is responding to the reviewer with clarifications. However, in some cases, the reviewer’s feedback may require a significant rewrite or introduce a dependency on another change.

In such cases, it might make sense to withdraw the code review request and resubmit it once the changes are implemented. This strategy reduces the cognitive load for both the author and the reviewer, especially if SLOs are well-defined.
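The revision SLO can be tracked in a similar way. This sketch assumes a hypothetical 8-hour revision target in wall-clock time and a hypothetical `needs_rewrite` flag set when feedback calls for a major rework; in that case the request is treated as withdrawn, and the clock would start again on resubmission:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

REVISION_SLO = timedelta(hours=8)  # hypothetical target for minor feedback


@dataclass
class Feedback:
    left_at: datetime                 # when the reviewer left comments
    author_replied_at: Optional[datetime]
    needs_rewrite: bool = False       # reviewer asked for a major rework


def revision_slo_status(fb: Feedback, now: datetime) -> str:
    """Classify one round of feedback against the revision SLO."""
    if fb.needs_rewrite:
        # Withdraw the request instead of holding it open; a resubmitted
        # change starts a fresh review cycle.
        return "withdrawn"
    waited = (fb.author_replied_at or now) - fb.left_at
    if waited <= REVISION_SLO:
        return "met" if fb.author_replied_at else "pending"
    return "missed"


left = datetime(2024, 7, 15, 10, 0)
print(revision_slo_status(Feedback(left, datetime(2024, 7, 15, 12, 0)), now=datetime(2024, 7, 16)))  # met
print(revision_slo_status(Feedback(left, None), now=datetime(2024, 7, 15, 11, 0)))  # pending
print(revision_slo_status(Feedback(left, None), now=datetime(2024, 7, 16)))  # missed
print(revision_slo_status(Feedback(left, None, needs_rewrite=True), now=datetime(2024, 7, 16)))  # withdrawn
```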

Conclusion

Improving the code review process is more than just enhancing the technical aspects or speeding up the timeline. It requires a balanced approach that considers the human element.

Effective strategies include implementing review and revision service level objectives (SLOs) tailored to the nature of each code review, introducing efficient tools, and establishing a culture that values and prioritizes code reviews as an integral part of the development lifecycle. Moreover, fostering a culture that encourages smaller, more manageable changes and acknowledges the importance of cross-team reviews can lead to significant improvements.
