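Baoyue Reading Guide Summary

1. Keywords: Mosaic AI Model Training, GenAI models, Fine-Tuning, enterprise data, model quality

2. Summary: Databricks has announced that Mosaic AI Model Training's support for fine-tuning GenAI models has entered Public Preview. The service adapts models using enterprise data and is designed to be simple, fast, and integrated. Its benefits include higher quality and lower cost. The article also covers evaluation methods and how to get started.

3. Main points:

– Mosaic AI Model Training's support for fine-tuning GenAI models is now in Public Preview

– Principle: connecting the intelligence of general-purpose LLMs to enterprise data is the key to building high-quality GenAI systems

– Role: specializes models for specific tasks and similar needs, and can be combined with RAG

– Model training

– Customers have trained a large number of custom AI models, and the lessons learned are built into the Mosaic AI Model Training service

– Characteristics: simple, fast, integrated, tunable, sovereign

– Benefits

– Include higher quality, lower cost and latency, and consistent format and style

– Combining RAG and fine-tuning

– For example, Celebal Tech improved summarization quality by combining the two

– Evaluation

– Several evaluation methods, such as monitoring prompts and using the Playground

– Getting started

– Available through the Databricks UI or programmatically in Python, with several onboarding resources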

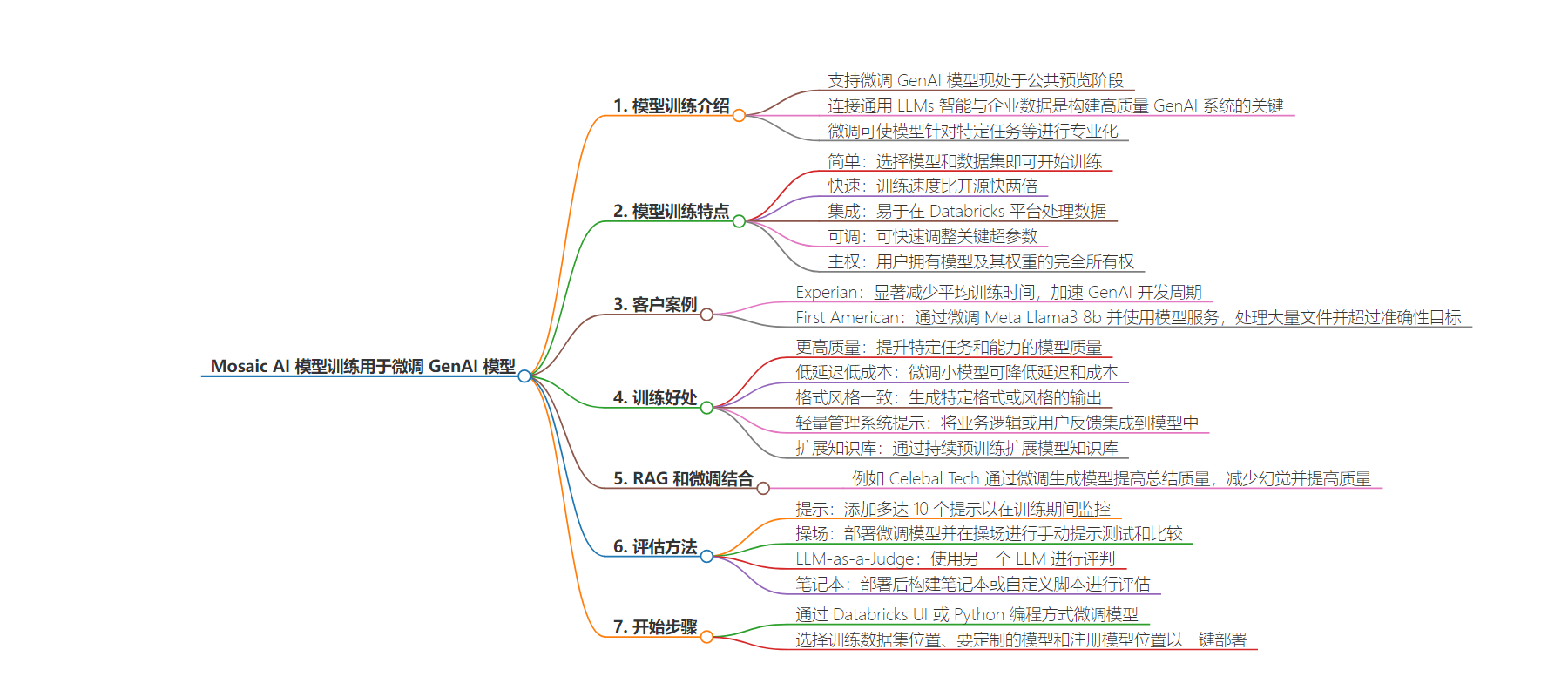

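Mind map:

Article URL: https://www.databricks.com/blog/introducing-mosaic-ai-model-training-fine-tuning-genai-models

Source: databricks.com

Author: Databricks

Published: 2024/7/22 16:30

Language: English

Word count: 1043

Estimated reading time: 5 minutes

Score: 91

Tags: AI model training, fine-tuning, generative AI, Databricks, Mosaic AI

The original article follows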


Today, we’re thrilled to announce that Mosaic AI Model Training’s support for fine-tuning GenAI models is now available in Public Preview. At Databricks, we believe that connecting the intelligence in general-purpose LLMs to your enterprise data – data intelligence – is the key to building high-quality GenAI systems. Fine-tuning can specialize models for specific tasks, business contexts, or domain knowledge, and can be combined with RAG for more accurate applications. This forms a critical pillar of our Data Intelligence Platform strategy, which enables you to adapt GenAI to your unique needs by incorporating your enterprise data.

Model Training

Our customers have trained over 200,000 custom AI models in the last year, and we’ve distilled the lessons into Mosaic AI Model Training, a fully managed service. Fine-tune or pretrain a wide range of models – including Llama 3, Mistral, DBRX, and more – with your enterprise data. The resulting model is then registered to Unity Catalog, providing full ownership and control over the model and its weights. Additionally, easily deploy your model with Mosaic AI Model Serving in just one click.

We’ve designed Mosaic AI Model Training to be:

- Simple: Select your base model and training dataset, and start training immediately. We handle the GPU and efficient training complexities so you can focus on the modeling.

- Fast: Iterate quickly with a proprietary training stack that is up to 2x faster than open source. From fine-tuning on a few thousand examples to continued pre-training on billions of tokens, our training stack scales with you.

- Integrated: Easily ingest, transform, and preprocess your data on the Databricks platform, and pull directly into training.

- Tunable: Quickly tune the key hyperparameters, namely learning rate and training duration, to build the highest quality model.

- Sovereign: You have full ownership of the model and its weights. You control permissions and have access to lineage, which tracks the training dataset as well as downstream consumers.
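As a rough illustration of the "Tunable" point, the two hyperparameters named above (learning rate and training duration) can be laid out as a small sweep. This is only a sketch: the candidate values, the `"1ep"`-style duration notation, and the `build_sweep` helper are assumptions made for the example, not settings recommended by the post.

```python
from itertools import product

# Hypothetical candidate values; the post names only the two knobs
# (learning rate and training duration), not specific settings.
LEARNING_RATES = [1e-6, 5e-6, 1e-5]
TRAINING_DURATIONS = ["1ep", "3ep"]  # assumed notation for "number of epochs"


def build_sweep(learning_rates, durations):
    """Return one config dict per (learning rate, duration) combination."""
    return [
        {"learning_rate": lr, "training_duration": duration}
        for lr, duration in product(learning_rates, durations)
    ]


sweep = build_sweep(LEARNING_RATES, TRAINING_DURATIONS)
for config in sweep:
    print(config)
```

Each dictionary could then be passed to whatever mechanism launches a run, so that runs differ only in these two settings and are easy to compare.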

“At Experian, we are innovating in the area of fine-tuning for open source LLMs. The Mosaic AI Model Training reduced the average training time of our models significantly, which allowed us to accelerate our GenAI development cycle to multiple iterations per day. The end result is a model that behaves in a fashion that we define, outperforms commercial models for our use cases, and costs us significantly less to operate.” – James Lin, Head of AI/ML Innovation, Experian

Benefits

Mosaic AI Model Training allows you to adapt open source models to perform well on specialized enterprise tasks to achieve higher quality. Benefits include:

- Higher quality: Improve model quality on specific tasks and capabilities, whether that be summarization, chatbot behavior, tool use, multilingual conversation, or more.

- Lower latency at lower costs: Large, general intelligence models can be expensive and slow in production. Many of our customers find that fine-tuning small models (<13B parameters) can dramatically reduce latency and cost while maintaining quality.

- Consistent, structured formatting or style: Generate outputs that follow a specific format or style, like entity extraction or creating JSON schemas in a compound AI system.

- Lightweight, manageable system prompts: Integrate business logic or user feedback into the model itself. It can be hard to incorporate end-user feedback into a complex prompt, and small prompt changes can cause regressions on other questions.

- Expand the knowledge base: With Continued Pretraining, extend a model’s knowledge base, whether that be particular topics, internal documents, languages, or updated recent events past the model’s original knowledge cut-off. Stay tuned for future blogs on the benefits of continued pretraining!

“With Databricks, we could automate tedious manual tasks by using LLMs to process one million+ files daily for extracting transaction and entity data from property records. We exceeded our accuracy goals by fine-tuning Meta Llama3 8b and using Mosaic AI Model Serving. We scaled this operation massively without the need to manage a large and expensive GPU fleet.” – Prabhu Narsina, VP Data and AI, First American

RAG and Fine-Tuning

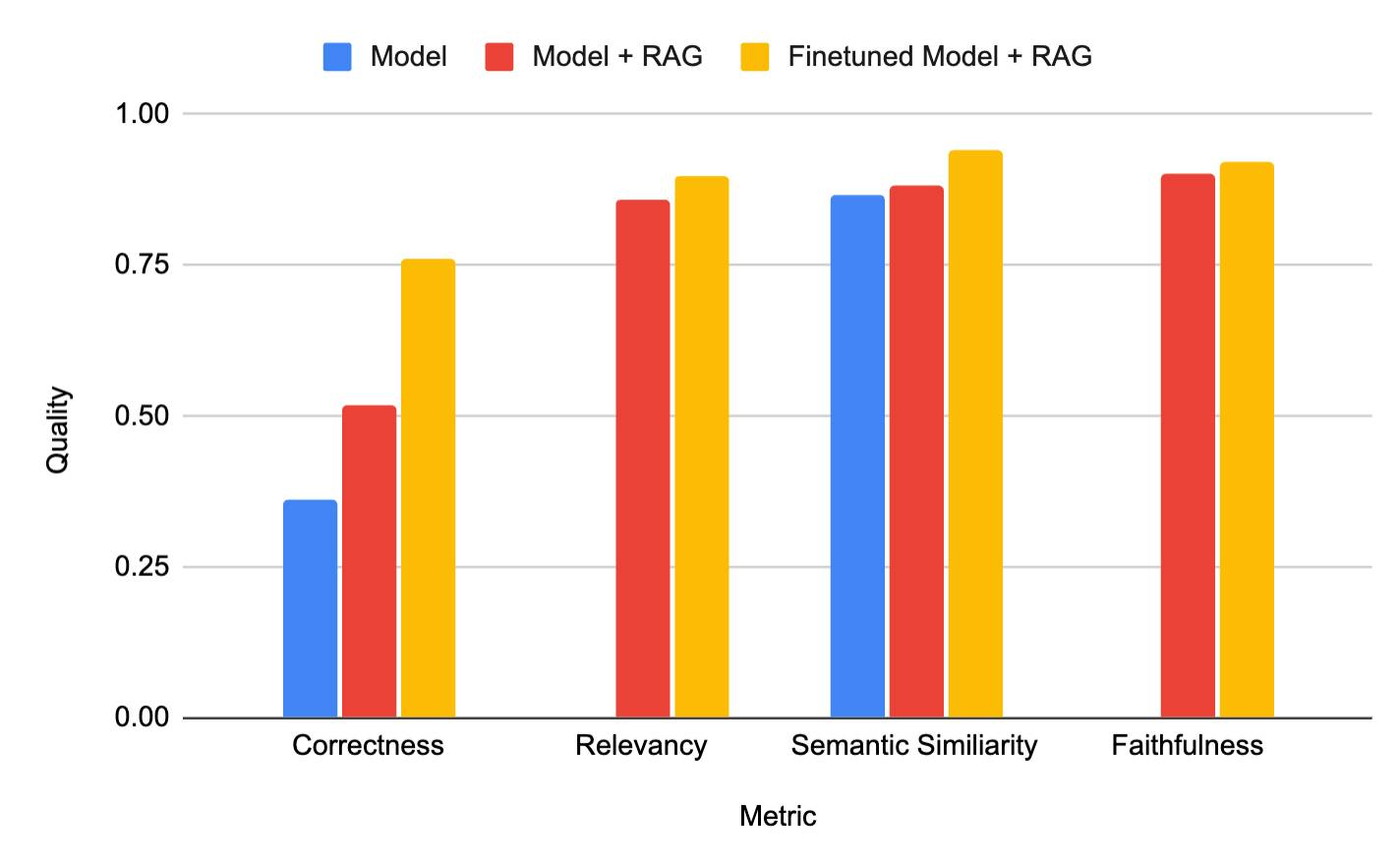

We often hear from customers: should I use RAG or fine-tune models in order to incorporate my enterprise data? With Retrieval Augmented Fine-tuning (RAFT), you can combine both! For example, our customer Celebal Tech built a high-quality domain-specific RAG system by fine-tuning their generation model to better summarize retrieved context, reducing hallucinations and improving overall quality (see Figure 1).

Figure 1: Combining a fine-tuned model with RAG (yellow) produced the highest quality system for customer Celebal Tech. Adapted from their blog.

“We felt we hit a ceiling with RAG – we had to write a lot of prompts and instructions, it was a hassle. We moved on to fine-tuning + RAG and Mosaic AI Model Training made it so easy! It not only adopted the model for Data Linguistics and Domain, but it also reduced hallucinations and increased speed in RAG systems. After combining our Databricks fine-tuned model with our RAG system, we got a better application and accuracy with the usage of less tokens.” – Anurag Sharma, AVP Data Science, Celebal Technologies
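To make the idea of fine-tuning on retrieved context more concrete, here is a simplified sketch of how a single training example might be assembled. It is not Celebal Tech's pipeline and it omits parts of the full RAFT recipe. The helper names, the prompt wording, and the `prompt`/`response` field names are all assumptions for illustration.

```python
import json


def build_raft_record(question, retrieved_chunks, answer):
    """Format one training example whose prompt embeds retrieved context."""
    context = "\n\n".join(
        f"[Document {i}]\n{chunk}"
        for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    prompt = (
        "Answer the question using only the context below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
    # The "prompt"/"response" field names are an assumption for illustration.
    return {"prompt": prompt, "response": answer}


def to_jsonl(records):
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


record = build_raft_record(
    question="What is the notice period?",
    retrieved_chunks=[
        "Either party may terminate with 30 days notice.",
        "Fees are billed monthly.",
    ],
    answer="The notice period is 30 days.",
)
print(to_jsonl([record]))
```

Because the model is trained on the same prompt layout the RAG system uses at inference time, it learns to answer from the supplied context rather than from memory alone.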

Evaluation

Evaluation methods are critical to helping you iterate on model quality and base model choices during fine-tuning experiments. From visual inspection checks to LLM-as-a-Judge, we’ve designed Mosaic AI Model Training to connect seamlessly with the other evaluation systems within Databricks:

- Prompts: Add up to 10 prompts to monitor during training. We’ll periodically log the model’s outputs to the MLflow dashboard, so you can manually check the model’s progress during training.

- Playground: Deploy the fine-tuned model and interact with the playground for manual prompt testing and comparisons.

- LLM-as-a-Judge: With MLflow Evaluation, use another LLM to judge your fine-tuned model on an array of existing or custom metrics.

- Notebooks: After deploying the fine-tuned model, build notebooks or custom scripts to run custom evaluation code on the endpoint.
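The "Notebooks" option above could look something like the following minimal sketch of custom evaluation code. The `evaluate` helper, the containment metric, and the `fake_endpoint` stand-in are hypothetical. In practice `fake_endpoint` would be replaced by a call to the deployed model's endpoint, which is not shown here.

```python
from typing import Callable


def evaluate(generate: Callable[[str], str], examples: list[dict]) -> float:
    """Return the fraction of examples whose output contains the expected text."""
    if not examples:
        raise ValueError("examples must not be empty")
    hits = 0
    for example in examples:
        output = generate(example["prompt"])
        # Case-insensitive containment: a deliberately simple metric.
        if example["expected"].lower() in output.lower():
            hits += 1
    return hits / len(examples)


def fake_endpoint(prompt: str) -> str:
    """Stand-in for a call to the deployed model; replace with a real client."""
    canned = {"Capital of France?": "The capital is Paris."}
    return canned.get(prompt, "I don't know.")


examples = [
    {"prompt": "Capital of France?", "expected": "Paris"},
    {"prompt": "Capital of Peru?", "expected": "Lima"},
]
score = evaluate(fake_endpoint, examples)
print(f"containment accuracy: {score:.2f}")
```

Passing the model call in as a function keeps the scoring logic independent of any particular client, so the same loop can compare a base model against its fine-tuned version.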

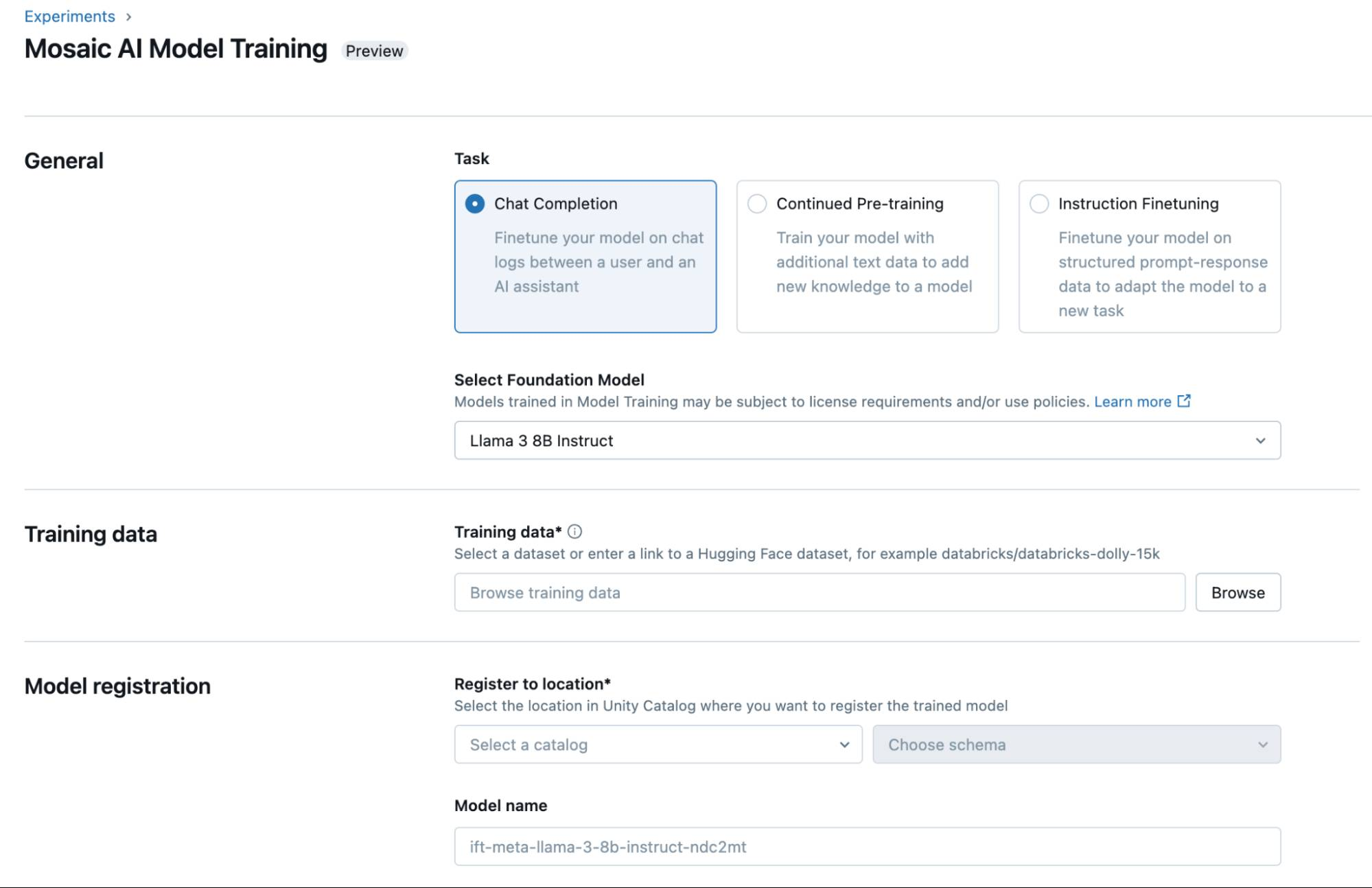

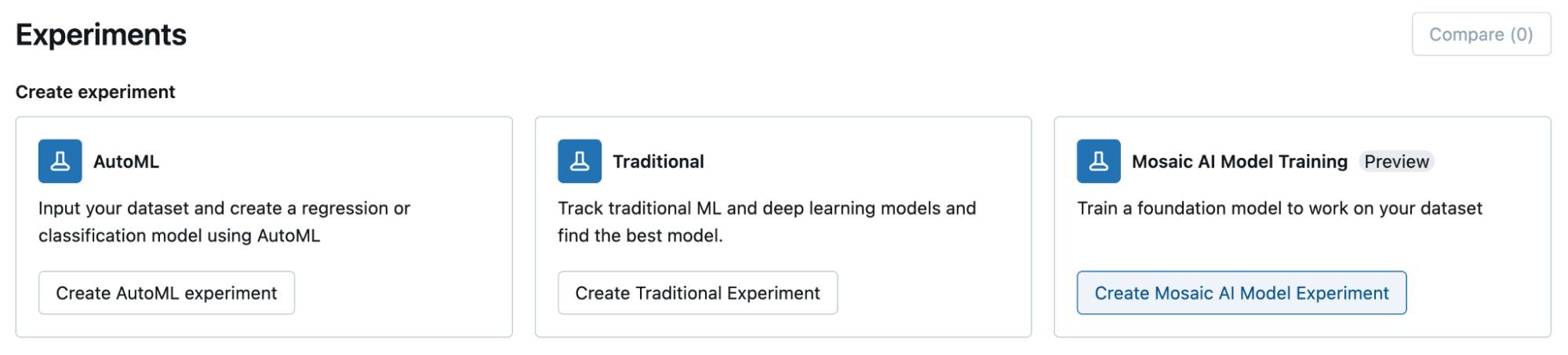

Get Started

You can fine-tune your model via the Databricks UI or programmatically in Python. To get started, select the location of your training dataset in Unity Catalog or a public Hugging Face dataset, the model you would like to customize, and the location to register your model for 1-click deployment.
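As a hedged sketch of the programmatic path, the three choices described above (training data location, base model, and registration location) can be collected into one set of arguments before launching a run. The import path `databricks.model_training.foundation_model`, the `create` entry point, and the parameter names are assumptions here and should be checked against the current documentation. The model name, table, and schema are placeholders.

```python
def build_run_args(model, train_data_path, register_to,
                   training_duration=None, learning_rate=None):
    """Collect the arguments for a fine-tuning run, dropping unset options."""
    args = {
        "model": model,
        "train_data_path": train_data_path,
        "register_to": register_to,
        "training_duration": training_duration,
        "learning_rate": learning_rate,
    }
    return {key: value for key, value in args.items() if value is not None}


def launch(run_args):
    """Start the run; needs a Databricks environment with the SDK installed."""
    # Assumed import path and entry point; verify against the documentation.
    from databricks.model_training import foundation_model as fm
    return fm.create(**run_args)


run_args = build_run_args(
    model="meta-llama/Meta-Llama-3-8B-Instruct",  # placeholder model name
    train_data_path="main.my_schema.train_table",  # placeholder Unity Catalog table
    register_to="main.my_schema",  # placeholder catalog.schema
    training_duration="3ep",  # assumed notation for three epochs
)
print(run_args)
# launch(run_args)  # uncomment inside a Databricks workspace
```

Leaving the learning rate unset in this example means the service's default would apply, assuming the API treats omitted options that way.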

- Watch our Data and AI Summit presentation on Mosaic AI Model Training

- Read our documentation (AWS, Azure) and visit our pricing page

- Try our dbdemo to quickly see how to get high-quality models with Mosaic AI Model Training

- Take our tutorial