Reading Guide Summary

1. `LLMs`、`Agents`、`Scrum Team`、`Productivity Benefits`、`AI Tooling`

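2. This article explores how LLMs and agents can be applied in Scrum teams and the productivity benefits they may bring. It also points out problems with their use, such as the volume of information they produce getting in the way of delivering value, and stresses focusing on their value in specific problem spaces.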

3.

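– Scrum.org COO Eric Naiburg says that AI, as a team member, can bring productivity benefits to Scrum masters, product owners and developers by reducing cognitive load.

– For example, it can advise Scrum masters on team facilitation, performance and flow optimization.

– It helps developers decompose and understand stories, and simplifies prototyping, testing and other work.

– Thoughtworks’ Birgitta Böckeler shared experiments using LLMs in engineering scenarios.

– These included using LLMs to understand tickets and the codebase of the open-source project Bahmni.

– She tried building LLM-based AI agents with Autogen, but the results were unreliable.

– TitanML’s Meryem Arik says using LLMs with RAG as a “research assistant” is a common enterprise use case, and emphasized the advantages of custom solutions.

– Research from the Upwork Research Institute indicates that AI tools may reduce productivity.

– However, Böckeler believes AI technology still has value in specific problem spaces.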

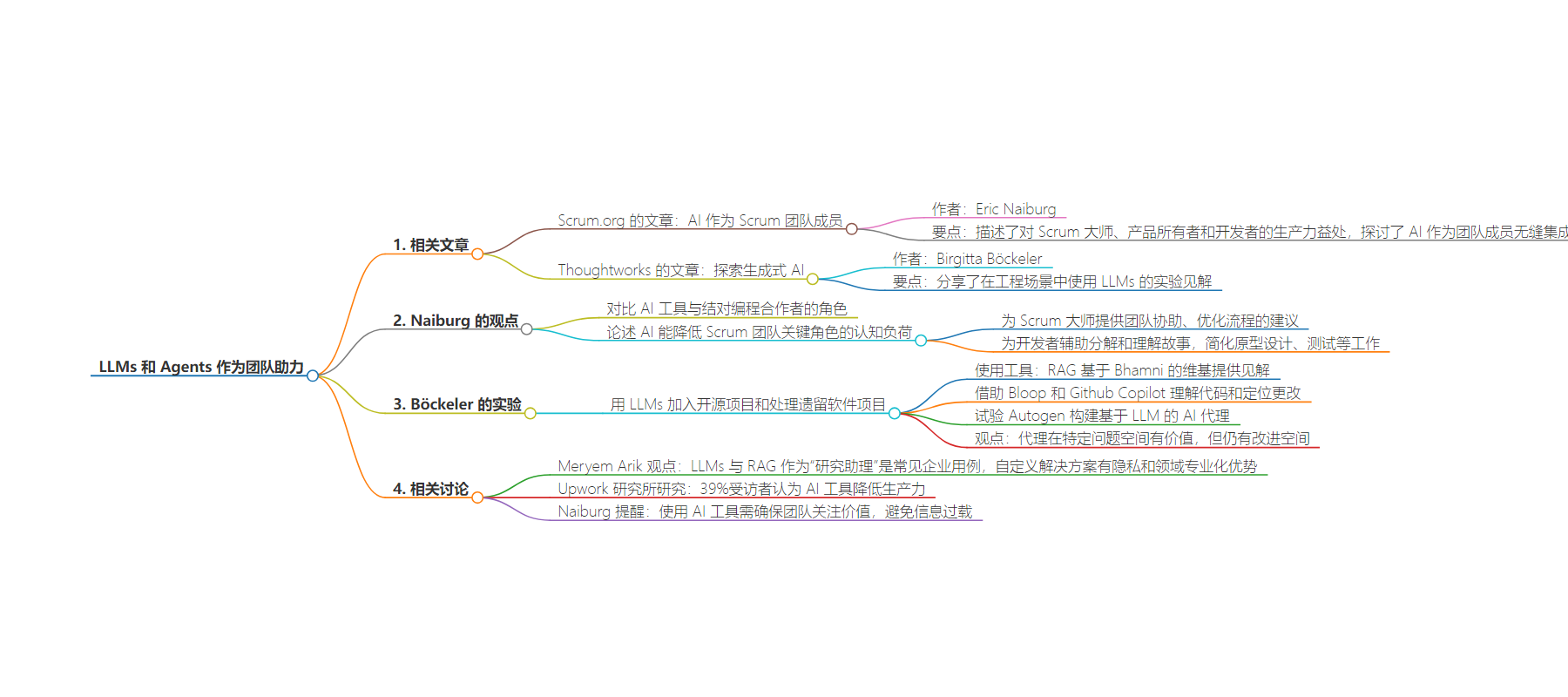

思维导图:

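Source: infoq.com

Author: Rafiq Gemmail

Published: 2024/8/27 0:00

Language: English

Word count: 953 words

Estimated reading time: 4 minutes

Rating: 87

Tags: AI in software development, large language models, AI agents, Scrum teams, AI integration

The original article follows: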


Scrum.org recently published an article titled AI as a Scrum Team Member by its COO, Eric Naiburg. Naiburg described the productivity benefits for Scrum masters, product owners, and developers, challenging the reader to “imagine AI integrating seamlessly” as a “team member” into the Scrum team. Thoughtworks’ global lead for AI-assisted software delivery, Birgitta Böckeler, also recently published an article titled Exploring Generative AI, in which she shared insights from experiments using LLMs (Large Language Models) in engineering scenarios where they may have a multiplier effect for software delivery teams.

Naiburg compared the role of AI tooling to that of a pair-programming collaborator. Using a definition of AI spanning from LLM integrations to analytical tools, he wrote about how AI can be used to reduce cognitive load across the key roles of a Scrum team. He discussed the role of the Scrum master and explained that AI provides an assistant capable of advising on team facilitation, team performance and optimisation of flow. Naiburg gave the example of engaging with an LLM for guidance on driving engagement in ceremonies:

AI can suggest different facilitation techniques for meetings. If you are having difficulty with Scrum Team members engaging in Sprint Retrospectives, for example, just ask the AI, “I am having a problem getting my Scrum Team to fully engage in Sprint Retrospectives any ideas?”

Naiburg wrote that AI gives developers an assistant within the team to help decompose and understand stories. He also called out the benefit of using AI to simplify prototyping, testing, code generation, code review and the synthesis of test data.

Focusing on the developer persona, Böckeler described her experiment using LLMs to onboard onto an open-source project and deliver a story against a legacy software project. To understand the capabilities and limits of AI tooling, she used LLMs to work on a ticket from the backlog of the open-source project Bahmni. Böckeler wrote about using LLMs to comprehend the ticket and the codebase, and to understand the bounded context of the project.

Böckeler’s main tools included an LLM using RAG (Retrieval Augmented Generation) to provide insights based on the content of Bahmni’s wiki. She gave the LLM a prompt containing the user story and asked it to “explain the Bahmni and healthcare terminology” that the story mentioned. Böckeler wrote:

I asked more broadly, “Explain to me the Bahmni and healthcare terminology in the following ticket: …”. It gave me an answer that was a bit verbose and repetitive, but overall helpful. It put the ticket in context, and explained it once more. It also mentioned that the relevant functionality is “done through the Bahmni HIP plugin module”, a clue to where the relevant code is.
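The article does not say how the wiki bot works internally. As a rough illustration of the retrieval-augmented step, here is a minimal sketch under stated assumptions: the wiki excerpts are invented, bag-of-words cosine similarity stands in for a real embedding model, and the helper names (`retrieve`, `build_prompt`) are hypothetical. It only assembles a grounded prompt; sending it to a model is left out.

```python
import math
import re
from collections import Counter

# Hypothetical wiki excerpts; these are NOT real Bahmni wiki pages.
WIKI_CHUNKS = [
    "The HIP plugin module lets Bahmni act as a Health Information Provider.",
    "Patient registration captures demographics and assigns an identifier.",
    "Lab orders are sent from the clinical module to the lab system.",
]

# A tiny stop-word list so common words do not dominate the similarity score.
STOP_WORDS = {"a", "an", "and", "are", "as", "for", "from", "in", "of", "the", "to"}


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts without stop words (stand-in for an embedding)."""
    return Counter(w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = bag_of_words(query)
    return sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)[:k]


def build_prompt(ticket: str, chunks: list[str], k: int = 2) -> str:
    """Ground the question in retrieved wiki context before it goes to an LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(ticket, chunks, k))
    return (
        "Answer using only the wiki context below.\n"
        f"Context:\n{context}\n\n"
        f"Explain to me the Bahmni and healthcare terminology in the following ticket: {ticket}"
    )


ticket = "Add consent handling to the HIP plugin for health information requests"
print(build_prompt(ticket, WIKI_CHUNKS))
```

The model then answers from the retrieved passages rather than from its training data alone, which is what lets a wiki-backed bot point at project-specific details such as a plugin module.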

Speaking on the InfoQ Podcast in June, Meryem Arik, co-founder/CEO at TitanML, described LLMs with RAG acting as a “research assistant” as among “the most common use cases that we see as a 101 for enterprise.” While Böckeler did not name her RAG implementation beyond describing it as a “Wiki-RAG-Bot”, Arik spoke at length about the privacy and domain-specialisation benefits of a custom solution built on a range of open models. She said:

So actually, if you’re building a state-of-the-art RAG app, you might think, okay, the best model for everything is OpenAI. Well, that’s not actually true. If you’re building a state-of-the-art RAG app, the best generative model you can use is OpenAI. But the best embedding model, the best re-ranker model, the best table parser, the best image parser, they’re all open source.
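Arik’s point is architectural: a RAG app is a chain of separate models (embedding, re-ranking, generation), and each can be chosen independently. The sketch below models that with `typing.Protocol` interfaces so any stage can be swapped. The toy classes are hypothetical stand-ins; no real open-source model or OpenAI API is called, and the table and image parsers she mentions are omitted.

```python
import re
from dataclasses import dataclass
from typing import Protocol


class Embedder(Protocol):
    def embed(self, text: str) -> list[float]: ...


class Reranker(Protocol):
    def rerank(self, query: str, docs: list[str]) -> list[str]: ...


class Generator(Protocol):
    def generate(self, prompt: str) -> str: ...


class LetterFrequencyEmbedder:
    """Toy embedding model: a 26-dimensional vector of letter counts."""

    def embed(self, text: str) -> list[float]:
        vec = [0.0] * 26
        for ch in text.lower():
            if "a" <= ch <= "z":
                vec[ord(ch) - ord("a")] += 1.0
        return vec


class WordOverlapReranker:
    """Toy re-ranker: orders passages by the number of words shared with the query."""

    def rerank(self, query: str, docs: list[str]) -> list[str]:
        q = set(re.findall(r"[a-z]+", query.lower()))
        return sorted(docs, key=lambda d: len(q & set(re.findall(r"[a-z]+", d.lower()))), reverse=True)


class FirstPassageGenerator:
    """Toy generative model: answers with the top-ranked context passage."""

    def generate(self, prompt: str) -> str:
        # Line 0 is "Context:", line 1 is the highest-ranked passage.
        return prompt.splitlines()[1]


@dataclass
class RagPipeline:
    embedder: Embedder
    reranker: Reranker
    generator: Generator
    docs: list[str]

    def answer(self, query: str, k: int = 3) -> tuple[str, list[str]]:
        q = self.embedder.embed(query)
        # Stage 1: vector retrieval of k candidates by dot-product similarity.
        scored = sorted(
            self.docs,
            key=lambda d: sum(x * y for x, y in zip(q, self.embedder.embed(d))),
            reverse=True,
        )
        # Stage 2: the re-ranker reorders the candidates against the query.
        candidates = self.reranker.rerank(query, scored[:k])
        # Stage 3: the generative model answers from the re-ranked context.
        prompt = "Context:\n" + "\n".join(candidates) + f"\n\nQuestion: {query}"
        return self.generator.generate(prompt), candidates


pipeline = RagPipeline(
    LetterFrequencyEmbedder(),
    WordOverlapReranker(),
    FirstPassageGenerator(),
    [
        "Embedding models map text to vectors.",
        "Re-rankers reorder retrieved passages.",
        "Table parsers extract rows from PDFs.",
    ],
)
reply, ranked = pipeline.answer("Which model reorders retrieved passages?")
print(reply)
```

Because the pipeline depends only on the three method signatures, replacing a toy stage with a self-hosted open model or a hosted API would touch one class, not the whole app.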

To understand the code and target her changes, Böckeler wrote that she “fed the JIRA ticket text” into two tools used for code generation and comprehension, Bloop and GitHub Copilot. She asked both tools to help her “find the code relevant to this feature.” Both gave her a similar set of pointers, which she described as “not 100% accurate” but offering a “generally useful direction.” Exploring the possibilities of autonomous code generators, Böckeler also experimented with Autogen to build LLM-based AI agents that port tests across frameworks. She explained:

Agents in this context are applications that are using a Large Language Model, but are not just displaying the model’s responses to the user, but are also taking actions autonomously, based on what the LLM tells them.
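That definition describes a loop: the application asks the model what to do, performs the action itself, and feeds the result back. Below is a minimal sketch of such a loop, loosely themed on porting a test file. It is not Autogen’s API: the `ACTION argument` text protocol, the READ/WRITE tools, and the scripted fake LLM are all hypothetical. A real agent would call an actual model, validate each action, and sandbox tool execution.

```python
from typing import Callable, Iterator

# A tool takes the action's argument string and returns an observation string.
Tool = Callable[[str], str]
# An "LLM" here is any function from the conversation so far to the next reply.
LLM = Callable[[list[str]], str]


def scripted_llm(replies: list[str]) -> LLM:
    """Hypothetical stand-in for a real model: returns canned replies in order."""
    it: Iterator[str] = iter(replies)

    def llm(history: list[str]) -> str:
        # A real model would condition on `history`; this stub ignores it.
        return next(it, "DONE")

    return llm


def make_file_tools(files: dict[str, str]) -> dict[str, Tool]:
    """Hypothetical READ/WRITE tools over an in-memory 'file system'."""

    def read(path: str) -> str:
        return files.get(path, f"no such file: {path}")

    def write(arg: str) -> str:
        path, _, content = arg.partition(" ")
        files[path] = content
        return f"wrote {len(content)} chars to {path}"

    return {"READ": read, "WRITE": write}


def run_agent(llm: LLM, tools: dict[str, Tool], task: str, max_steps: int = 5) -> list[str]:
    """Ask the LLM for an action, execute it, feed back the result, repeat."""
    history = [f"TASK: {task}"]
    for _ in range(max_steps):  # bound the loop: agents can wander or fail
        reply = llm(history)
        history.append(f"LLM: {reply}")
        action, _, arg = reply.partition(" ")
        if action == "DONE":
            break
        tool = tools.get(action)
        observation = tool(arg) if tool else f"unknown action: {action}"
        history.append(f"OBSERVATION: {observation}")
    return history


files = {"legacy_test.txt": "assertEquals(1, add(0, 1))"}
llm = scripted_llm([
    "READ legacy_test.txt",
    "WRITE new_test.txt expect(add(0, 1)).toBe(1)",
    "DONE",
])
for line in run_agent(llm, make_file_tools(files), "Port legacy_test.txt to the new framework"):
    print(line)
```

The `max_steps` cap and the "unknown action" observation are the kind of guardrails that matter when, as reported below, an agent fails more often than it succeeds.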

Böckeler reported that her agent worked “at least once,” but it “also failed a bunch of times, more so than it worked.” InfoQ recently reported on a controversial study by the Upwork Research Institute that pointed to a perception among those surveyed that AI tools decrease productivity, with 39% of respondents stating that “they’re spending more time reviewing or moderating AI-generated content.” Naiburg called out the need to ensure that teams remain focused on value and not just on the output of AI tools:

One word of caution – the use of these tools can increase the volume of “stuff”. For example, some software development bots have been accused of creating too many lines of code and adding code that is irrelevant. That can also be true when you get AI to refine stories, build tests or even create minutes for meetings. The volume of information can ultimately get in the way of the value that these tools provide.

Commenting on her experiment with Autogen, Böckeler offered a reminder that the technology still has value in “specific problem spaces,” saying:

These agents still have quite a way to go until they can fulfill the promise of solving any kind of coding problem we throw at them. However, I do think it’s worth considering what the specific problem spaces are where agents can help us, instead of dismissing them altogether for not being the generic problem solvers they are misleadingly advertised to be.