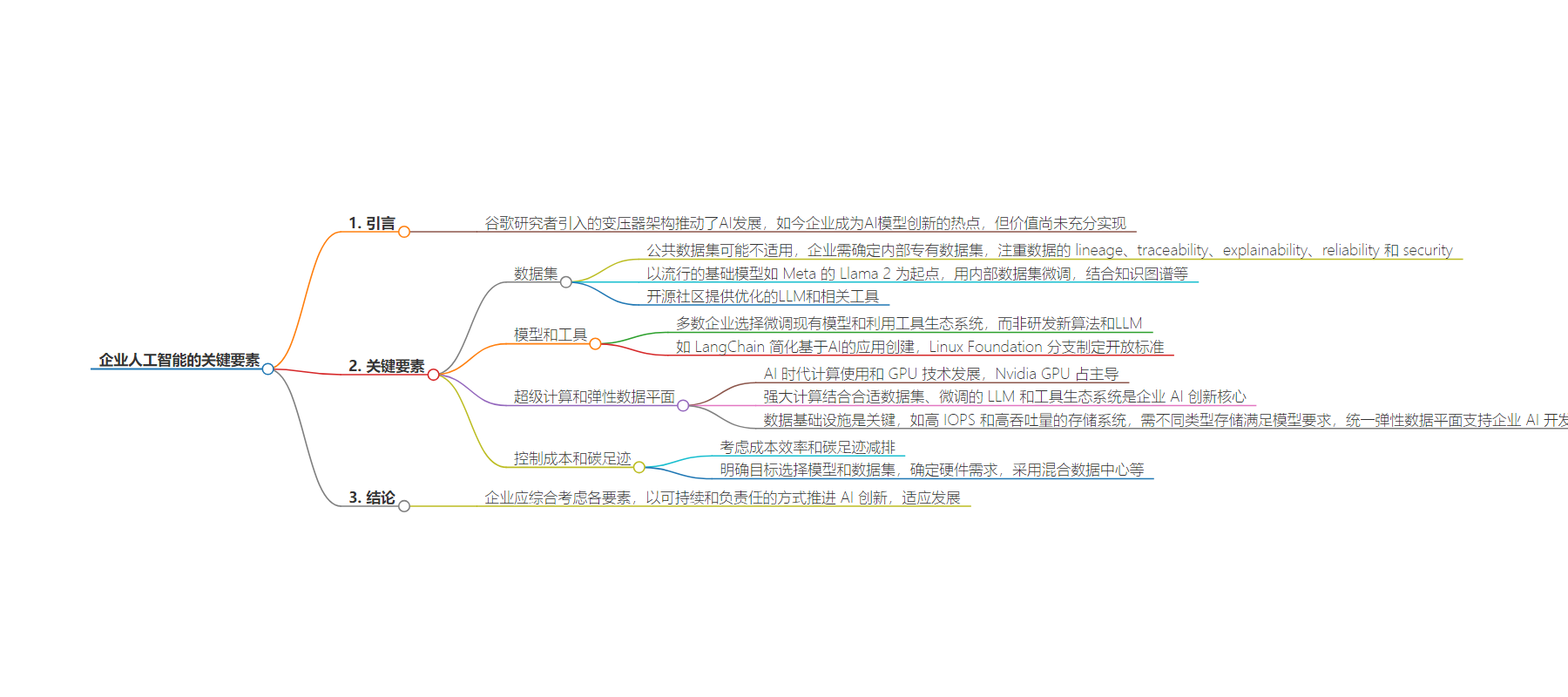

包阅导读总结

1.

关键词:Enterprise AI、Data Machine、LLMs、Data Infrastructure、Cost Control

2.

总结:本文探讨企业 AI 发展,指出 Transformer 架构推动了 AI 进步,如今企业成为创新温床。但要充分利用 LLMs,企业需处理好多方面,包括合适的数据、模型、工具、强大的计算和弹性数据平面,同时要控制成本和碳足迹。

3.

主要内容:

– Transformer 与 AI 发展:七年前 Google 研究者引入的 Transformer 架构使如今的大型语言模型和生成式 AI 应用成为可能。

– 企业 AI 创新:各行业企业抓住 AI 发展契机,尝试创新并创造价值,但价值尚未充分实现。

– 面临的挑战:需考虑众多因素,如技术、数据等,以确保生成式 AI 应用可靠并产生实际价值。

– 关键要素:

– 数据集、模型和工具:企业需确定适合的内部数据集,可对现有模型微调,开源社区提供了相关工具和优化的 LLMs。

– 超级计算和弹性数据平面:AI 发展推动计算使用和 GPU 技术提升,强大的数据基础设施是关键,需具备低延迟、大规模并行等特性,统一弹性数据平面能加速企业 AI 开发。

– 控制成本和碳足迹:考虑成本效率和碳减排,采用混合方式,明确需求并选择合适硬件,优化的数据中心有助于可持续的 AI 创新。

思维导图:

文章地址:https://thenewstack.io/enterprise-ai-requires-a-lean-mean-data-machine/

文章来源:thenewstack.io

作者:Bharti Patel

发布时间:2024/6/18 18:18

语言:英文

总字数:1268字

预计阅读时间:6分钟

评分:86分

标签:人工智能,机器学习,企业,数据,大型语言模型

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Eight Google researchers jolted AI into a new stage of evolution when they introduced transformers at a key machine learning conference seven years ago. Transformer architecture is the innovative AI neural network that makes today’s large language models (LLMs), and the generative AI applications built on top of them, possible. The work stands on the shoulders of many, including AI giants like Turing Award winner Geoffrey Hinton and living legend Fei Fei Li, the latter recognized for insisting big data was central to unlocking AI’s power. While research among the hyperscalers and academics remains as dynamic as ever, the other hotbed of AI model innovation today is the enterprise itself.

Enterprises across every vertical have smartly taken stock of this watershed moment in AI’s living history, seizing the day to refine LLMs in inventive new ways and create new kinds of value with them. But, as yet, that value is largely unrealized.

Right now, halfway through 2024, to take full advantage of LLMs, enterprise innovators first have to wrap their minds around a lot of moving parts. Having the right technology under the hood and tuning it to an enterprise’s unique needs will help ensure generative AI applications are capable of producing reliable results — and real-world value.

Datasets, Models and Tools

Data, of course, is AI’s fuel, and massive public datasets feed the power of LLMs. But these public datasets may not include the correct data for what an enterprise innovator is trying to accomplish. The hallucinations and bias born through them also conflict with the quality control enterprises require. Lineage, traceability, explainability, reliability and security of data all matter more to enterprise users. They have to be responsible with data use or risk costly lawsuits, reputation problems, customer harm, and damage to their products and solutions. That means they must determine what internal proprietary datasets should feed model customization and application development, where those datasets live and how best to clean and prepare them for model consumption.

The LLMs we’ve heard most about are considered foundation models: The ones built by OpenAI, Google, Meta and others that are trained on enormous volumes of internet data — some high-quality data and some so poor it counts as misinformation. Foundation models are built for massive parallelism, adaptable to a wide variety of different scenarios, and require significant guardrails. Meta’s Llama 2, “a family of pretrained and fine-tuned LLMs, ranging in scale from 7B to 70B parameters,” is a popular starting point for many enterprises. It can be fine-tuned with unique internal datasets and combined with the power of knowledge graphs, vector databases, SQL for structured data, and more. Fortunately, there’s a robust activity in the open source community to offer new optimized LLMs.

The open source community has also become particularly helpful in offering the tools that serve as connective tissue for generative AI ecosystems. LangChain, for example, is a framework that simplifies the creation of AI-based applications, with an open source Python library specifically designed to optimize the use of LLMs. Additionally, a Linux Foundation offshoot is developing open standards for retrieval-augmented generation (RAG), which is vital for bringing enterprise data into pre-trained LLMs and reducing hallucinations. Enterprise developers can access many tools using APIs, which is a paradigm shift that’s helping to democratize AI development.

While some enterprises will have a pure research division that may investigate developing new algorithms and LLMs, most will not be reinventing the wheel. Fine-tuning existing models and leveraging a growing ecosystem of tools will become the fastest path to value.

Super-Compute and an Elastic Data Plane

The current AI era, and the generative AI boom in particular, are driving a spectacular rise in compute usage and in the advancement of GPU technology. This is due to the complex and sheer number of calculations that AI training and AI inference require, albeit distinctions exist in the way those processes consume compute. It’s impossible not to mention Nvidia GPUs here, which supply about 90% of the AI chip market and will likely continue dominance with the recently announced and wildly powerful GB200 Grace Blackwell Superchip, capable of real-time, trillion-parameter inference and training.

The combination of the right datasets, fine-tuned LLMs, and a robust tool ecosystem, alongside of this powerful compute, is core to enabling enterprise AI innovation. But the technological backbone giving form to all of it is data infrastructure — storage and management systems that can unify a data ecosystem. The data infrastructure that has been foundational in cloud computing is now also foundational to AI’s existence and growth.

Today’s LLMs need volume, velocity, and variety of data at a rate not seen before, and that creates complexity. It’s not possible to store the kind of data LLMs require on cache memory. High IOPS and high throughput storage systems that can scale for massive datasets are a required substratum for LLMs where millions of nodes are needed. With superpower GPUs capable of lightning-fast read storage read times, an enterprise must have a low-latency, massively parallel system that avoids bottlenecks and is designed for this kind of rigor. For example, Hitachi Vantara’s Virtual Storage Platform One offers a new way of approaching data visibility across block, file and object. Different kinds of storage need to be readily available to meet different model requirements, including flash, on-site, and in the cloud. Flash can offer denser footprints, aggregated performance, scalability, and efficiency to accelerate AI model and application development that’s conscious of carbon footprint. And flash reduces power consumption, crucial to reap the benefits of generative AI in a sustainable present and future.

Ultimately, data infrastructure providers can best support enterprise AI developers by putting a unified elastic data plane and easy-to-deploy appliances with generative AI building blocks, along with suitable storage and compute, in developers’ hands. A unified elastic data plane is a lean machine, offering extremely efficient handling of data, with data plane nodes near to wherever the data is, easy access to disparate data sources, and improved control over data lineage, quality, and security. With appliances, models can sit on top and can be continuously trained. This kind of approach will speed enterprise development of value-generating AI applications across domains.

Controlling Costs and Carbon Footprint

It’s crucial that these technological underpinnings of the AI era be built with cost efficiency and reduction of carbon footprint in mind. We know that training LLMs and the expansion of generative AI across industries are ramping up our carbon footprint at a time when the world desperately needs to reduce it. We know too that CIOs consistently name cost-cutting as a top priority. Pursuing a hybrid approach to data infrastructure helps ensure that enterprises have the flexibility to choose what works best for their particular requirements and what is most cost-effective to meet those needs.

Most importantly, AI innovators should be clear about what exactly they want to achieve and which model and datasets they need to achieve it, then size hardware requirements like flash, SSDs and HDDs accordingly. It may be advantageous to rent from hyperscalers or use on-premise machines. Generative AI needs energy-efficient, high-density storage to reduce power consumption.

Hybrid data centers with high levels of automation, an elastic data plane and optimized appliances for AI application-building will help move AI innovation forward in a socially responsible and sustainable way that still respects the bottom line. How your business approaches this could determine whether it evolves with the next phases of AI or becomes a vestige locked in the past.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.