Baoyue Reading Guide Summary

1. Keywords: AI security architecture, attack surface, training, inference, distributed

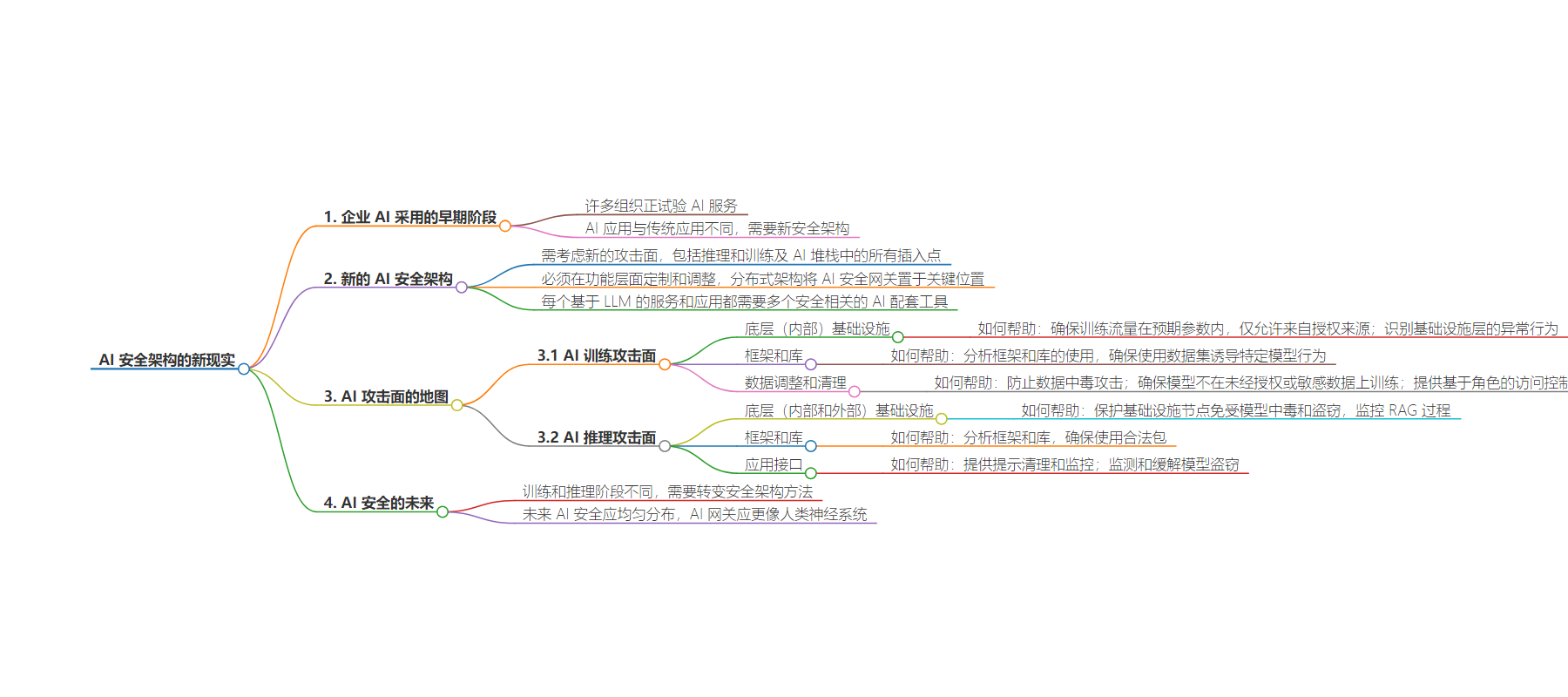

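2. Summary: This article examines the security architecture that enterprises need in the early stages of AI adoption. It argues that legacy technologies are no longer sufficient and that a new architecture is needed to cover the new attack surface, spanning both training and inference. It stresses that AI security must be customized and tuned at the functional level, and that future AI security must be deployed in a distributed fashion.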

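3. Main points:

- New requirements for AI security architecture
  - Enterprises are in the early stages of AI adoption, and legacy security technologies fall short.
  - A new architecture must account for the new attack surface of AI infrastructure, including inference and training.
- Mapping the AI attack surface
  - AI training attack surface
    - Attack insertion points include the infrastructure, frameworks and libraries, and data tuning and cleansing.
    - An AI gateway can police training traffic, analyze framework usage and prevent data poisoning.
  - AI inference attack surface
    - It covers the infrastructure, frameworks and libraries, and the application interface.
    - An AI gateway can protect nodes, analyze frameworks and provide prompt sanitization.
- The distributed future of AI security
  - AI security is different: the training and inference phases have distinct needs and risks.
  - Effective protection requires deploying AI gateways in a distributed fashion so that they operate like a nervous system.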

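Article URL: https://thenewstack.io/the-new-realities-of-ai-security-architecture/

Source: thenewstack.io

Author: Liam Crilly

Published: 2024/6/18 18:43

Language: English

Total word count: 1124 words

Estimated reading time: 5 minutes

Score: 81

Tags: Sponsored: NGINX, Sponsored post: contributed

The original article follows.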

We are in the early stages of enterprise AI adoption. Many organizations are experimenting with AI services delivered via API and running their own AI infrastructure and models. AI applications are unlike legacy applications and require a new security architecture. Old technologies such as filtering, firewalls and data loss prevention (DLP) are no longer sufficient.

A new architecture must take into account the new attack surface of AI infrastructure. This includes inference and training and all the insertion points in the AI stack — additional model tuning via retrieval-augmented generation (RAG) or other modalities, for example.

What’s more, AI security must be customized and tuned at the functional level so that the responsible systems detect and block threats and bad intent without blocking normal behaviors that might inadvertently raise a red flag. The resulting distributed architecture will put AI security gateways at key positions in the infrastructure stack. Each LLM-based service and application will need a number of security-focused AI companion tools to protect enterprises adequately.

In other words, your LLM needs an AI to secure it. This new architecture is like nothing we have seen before and will require a significant shift in the way we do security.

A Map of the AI Attack Surface

To effectively secure AI, we must first map the expanded attack surface it presents. This encompasses both the inference and training phases of AI models. For most AI applications, each phase includes multiple steps, dependencies and inputs. Each phase also requires different types of AI defenses suitable to its function and location in the stack.

AI Training Attack Surface

Training is the process of preparing AI models to shape their behavior and capabilities for specific (or sometimes general) use cases. Possible attack insertion points in this portion of AI infrastructure and application development include:

- Underlying (internal) infrastructure: AI training is performed mainly on GPUs and other processors built for parallel processing of programming instructions. This infrastructure is deployed on familiar deployment architectures, such as containers built on Kubernetes. At this layer, infrastructure teams will also deploy ingress controllers and load balancers to manage and shape traffic.

- How an AI gateway can help: Ensure training traffic is within expected parameters and only permitted from authorized sources. Identify anomalous behavior at the infrastructure layer and deliver resilience and continuity should infrastructure components be compromised.

- Frameworks and libraries: To train AI models, teams deploy frameworks and toolchains such as PyTorch and TensorFlow, which rely on large external software libraries. For example, PyTorch dependencies are mostly managed and pulled from the Python Package Index (PyPI).

- How an AI gateway can help: Analyze the use of frameworks and libraries to ensure that teams use only legitimate packages and do not introduce compromised or unauthorized dependencies.

- Data tuning and cleansing: Data training involves using a set of labeled examples to teach machine learning models to recognize patterns and make predictions. The quality and quantity of training data are critical to the accuracy and effectiveness of these models. High-quality training data ensures that the model can generalize well in response to new, unseen data, while poor-quality data can lead to inaccurate or biased predictions. Data pipelines often include not only ingestion of labeled examples but also modifications or edits to the dataset to induce specific model behaviors.

- How an AI gateway can help: Prevent data poisoning attacks by insiders or outsiders tampering with data sources or seeking to inject bad or corrupt data into training databases. Ensure that models are not trained on unauthorized or sensitive (PII) data. Provide role-based access control to the data infrastructure.
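The data-pipeline checks in the last bullet can be sketched as a small pre-ingestion filter. This is only an illustration under stated assumptions, not how any particular AI gateway works: the `AUTHORIZED_SOURCES` set, the `screen_record` function, the source names and the two regular expressions are all hypothetical, and real PII detection needs far more than pattern matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allowlist of data sources permitted to feed the training set.
AUTHORIZED_SOURCES = {"internal-warehouse", "labeling-vendor-a"}

# Deliberately simple, illustrative PII patterns (email and US-style SSN).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class Verdict:
    allowed: bool
    reasons: list = field(default_factory=list)


def screen_record(source: str, text: str) -> Verdict:
    """Decide whether one record may enter the training database."""
    reasons = []
    # Reject data arriving from a source that is not on the allowlist.
    if source not in AUTHORIZED_SOURCES:
        reasons.append(f"unauthorized source: {source}")
    # Flag text that looks like it contains sensitive data.
    for name, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            reasons.append(f"possible PII: {name}")
    return Verdict(allowed=not reasons, reasons=reasons)
```

Calling `screen_record("unknown-scraper", "Contact me at a@example.com")` would return a verdict with `allowed=False` and two reasons, one for the source and one for the email address. A record is admitted only when no check fires, so a rejected record can be logged with every reason it was held back.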

AI Inferencing Attack Surface

Inferencing is the process that occurs when a trained AI model makes predictions or decisions based on new, unseen data. It involves feeding the new data into the model, performing computations based on the learned parameters and generating an output such as classification, prediction or generated text. Inference is also involved in the output of generative AI models for consumer applications, like ChatGPT.

- Underlying (internal and external) infrastructure: Many of the same infrastructure elements used for training are also used for AI inferencing. Inferencing is less compute-intensive but is often more continuous, and is more likely to be distributed. Increasingly, infrastructure is distributed to the edge to improve latency, with key values and common responses cached closer to users. This further extends and more widely distributes the attack surface. There are also additional components for inferencing scenarios. For example, RAG, a technique used to deliver more relevant and accurate results by including documents alongside prompts, has its own data and compute infrastructure.

- How an AI gateway can help: It can protect all the different infrastructure nodes against model poisoning and model theft while monitoring RAG processes to ensure that internal resources are not being hijacked or misused.

- Frameworks and libraries: Use cases are largely the same across training and inferencing except for added external exposure (public applications or APIs).

- How an AI gateway can help: As with model training, an AI gateway can analyze frameworks and libraries to ensure that teams are using only legitimate packages and not introducing compromised or unauthorized dependencies.

- Application interface: Unlike training, in the inference stage, the AI system is exposed to the public or to API consumers. This means the application ingests prompts and then responds accordingly. This is the most exposed attack surface. It can also affect the underlying infrastructure because it can be viewed as an entry point for other types of attacks or techniques used to compromise internal systems. This can also be a highly distributed exposure because AI services may power components of other applications and thus may be exposed to abuse in that manner.

- How an AI gateway can help: Provide prompt sanitization and monitoring, and communicate with the model-training AI gateway to ensure that signs of model drift or bias inducement are not emerging at runtime. Model theft monitoring and mitigation are also critical functions.
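To make "prompt sanitization and monitoring" more concrete, here is a minimal sketch of what such a step might look like at the application interface. Everything named here is an assumption made for illustration: the `sanitize_prompt` function, the `MAX_PROMPT_CHARS` limit and the two suspicious-phrase patterns are hypothetical, and phrase matching alone is not an adequate defense against prompt injection.

```python
import re
import unicodedata

MAX_PROMPT_CHARS = 4000  # hypothetical length limit

# Hypothetical phrases that may signal a prompt-injection attempt.
SUSPICIOUS_PATTERNS = {
    "instruction_override": re.compile(
        r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE
    ),
    "system_prompt_probe": re.compile(
        r"reveal (your |the )?system prompt", re.IGNORECASE
    ),
}


def sanitize_prompt(prompt: str):
    """Return (cleaned_prompt, findings); findings feed the monitoring side."""
    findings = []
    # Normalize Unicode so compatibility characters compare consistently.
    cleaned = unicodedata.normalize("NFKC", prompt)
    # Drop control and format characters (category C*) except newline and tab.
    stripped = "".join(
        ch for ch in cleaned
        if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    if stripped != cleaned:
        findings.append("control characters removed")
    cleaned = stripped
    # Enforce the length limit.
    if len(cleaned) > MAX_PROMPT_CHARS:
        cleaned = cleaned[:MAX_PROMPT_CHARS]
        findings.append("prompt truncated")
    # Record suspicious phrases without blocking; policy is decided elsewhere.
    for name, pattern in SUSPICIOUS_PATTERNS.items():
        if pattern.search(cleaned):
            findings.append(f"suspicious phrase: {name}")
    return cleaned, findings
```

The sketch returns findings rather than rejecting the prompt outright, which keeps the blocking decision separate from detection. That separation fits the article's point that normal behavior should not be blocked just because it trips a simple rule.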

The Future of AI Security Must Be Evenly Distributed

AI security is different. Training and inference are two distinct phases, each with different needs and risks. Compared to legacy application architectures, AI applications have different behaviors, deployment patterns and novel attack surfaces. This requires a fundamental shift in our approach to security architecture.

To effectively protect against the wide range of potential attacks on AI models’ training and inferencing stages, the logical path forward is to put AI where the AI is. That is, the AI gateway can’t be a perimeter checkpoint. It must be more like a human nervous system — distributed to be close to the action, customized to specific attributes and constantly communicating.
