Baoyue Reading Guide Summary

1. `React 19, Vector Database, Netlify, JetBrains, Frontend Survey`

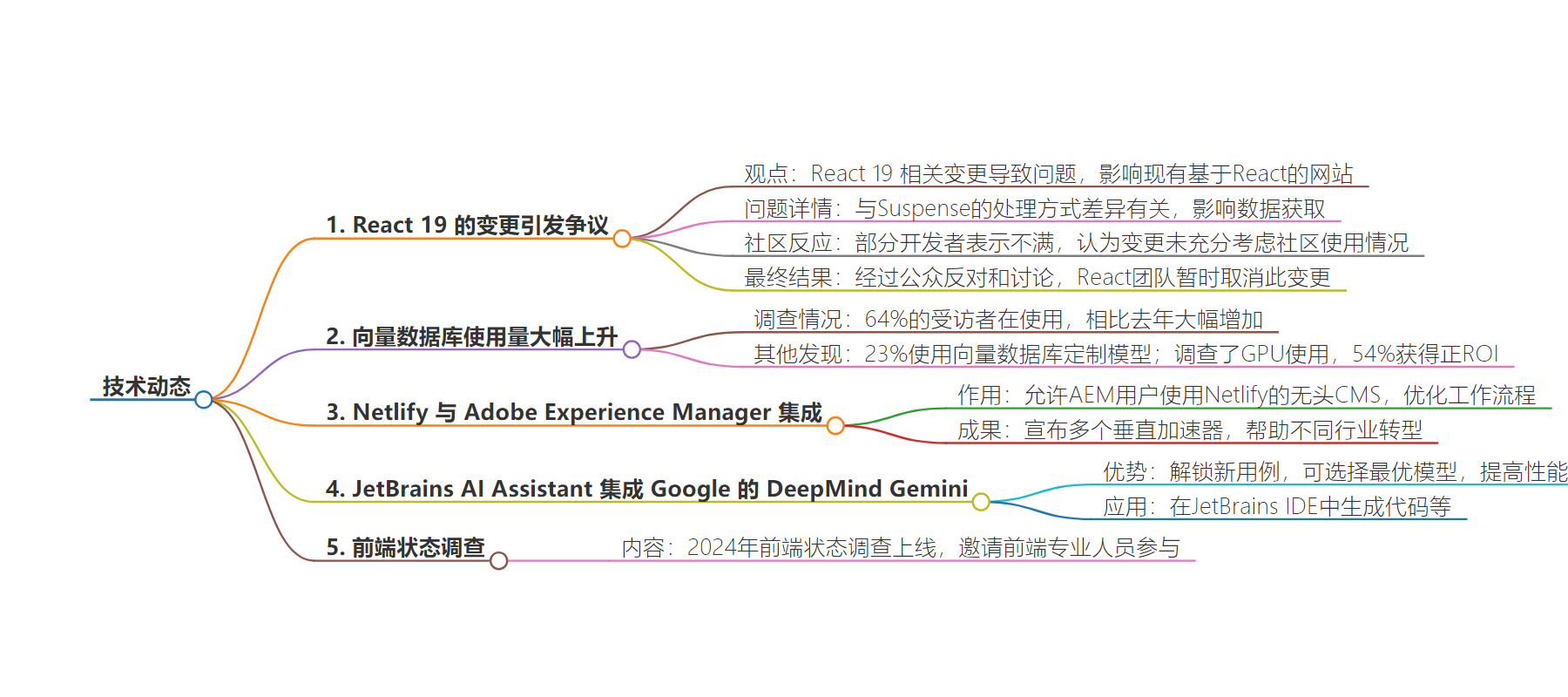

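2. This article covers the React 19 change that angered developers and was eventually withdrawn by the React team, the sharp growth in vector database use, Netlify's integration with Adobe Experience Manager, JetBrains AI Assistant's integration of new models, and the State of the Frontend survey.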

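3.

– React 19 change

– The change risked slowing down existing sites built with React, specifically in how Suspense is handled.

– It drew community pushback, and the team eventually withdrew the change.

– Vector databases

– Use has grown sharply.

– A majority of respondents use one; some use them to customize models.

– Netlify

– Integrates with Adobe Experience Manager.

– Announced several vertical accelerators to ease the architectural transition.

– JetBrains

– AI Assistant integrates Google's DeepMind Gemini language model.

– Unlocks new use cases and improves performance.

– Frontend survey

– The State of the Frontend Survey 2024 is online.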


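Article URL: https://thenewstack.io/react-19-change-angers-some-devs-vector-database-use-jumps/

Source: thenewstack.io

Author: Loraine Lawson

Published: 2024/6/21 16:50

Language: English

Word count: 1,244

Estimated reading time: 5 minutes

Score: 81

Tags: Artificial Intelligence, Frontend Development, JavaScript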

The original article follows


React 19 almost slowed down the internet last week — or at least existing sites built with React, according to Henrique Yuji of Code Miner, a software boutique that runs an interesting blog by the same name. The problem related to Suspense and a difference in how it’s handled in React 18 and 19.

“Suspense is a React component that lets you display a fallback until its children have finished loading, either because these children components are being lazy loaded, or because they’re making use of a Suspense-enabled data fetching mechanism,” Yuji explained.


He detailed a series of tweets that uncovered the problem, starting with one by frontend tech lead and software engineer Dominik Dorfmeister, who noted that there seemed to be a difference between React 18 and 19 in how Suspense handles parallel fetching. Dorfmeister, one of the core maintainers of TanStack Query, offered proof with links to React 18 and 19 sandboxes. He also wrote an extensive blog post about the Suspense discovery.

“Yeah this feels like a bad move, especially since it would take existing applications and use cases and automatically make them worse,” responded Tanner Linsley, creator of React Query and TanStack.

It turned out that React 19 disables parallel rendering of siblings within the same Suspense boundary, wrote Yuji, adding, “which essentially introduces data fetching waterfalls for data that is fetched inside these siblings.”
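One rough way to picture the difference between parallel fetching and a waterfall is a toy model with a virtual clock. The sketch below is not React's implementation: `timeToContent`, `makeSiblings`, and the `prerenderSiblings` flag are hypothetical names, and the 100 ms latencies are invented for illustration. It only assumes that a sibling's fetch starts the first time that sibling is rendered.

```typescript
// Toy model with a virtual clock. This is NOT React's implementation;
// all names and latencies here are illustrative assumptions.
interface Sibling {
  name: string;
  latencyMs: number;        // how long the simulated fetch takes
  startedAt: number | null; // virtual time at which the fetch was kicked off
}

function makeSiblings(latencies: number[]): Sibling[] {
  return latencies.map((latencyMs, i) => ({
    name: `Sibling${i + 1}`,
    latencyMs,
    startedAt: null,
  }));
}

// Returns the virtual time (ms) at which every sibling under one boundary
// has its data, so content can replace the fallback.
function timeToContent(siblings: Sibling[], prerenderSiblings: boolean): number {
  let now = 0;
  for (;;) {
    const pendingFinishTimes: number[] = [];
    for (const s of siblings) {
      // Rendering a sibling kicks off its fetch the first time it is reached.
      const startedAt = s.startedAt ?? now;
      s.startedAt = startedAt;
      const finishesAt = startedAt + s.latencyMs;
      if (finishesAt > now) {
        pendingFinishTimes.push(finishesAt); // this sibling "suspends"
        if (!prerenderSiblings) break;       // stop at the first suspended sibling
      }
    }
    if (pendingFinishTimes.length === 0) return now;
    // The fallback stays up until the next pending fetch resolves, then retry.
    now = Math.min(...pendingFinishTimes);
  }
}

// Two siblings, each with a 100 ms fetch.
const parallelMs = timeToContent(makeSiblings([100, 100]), true);
const waterfallMs = timeToContent(makeSiblings([100, 100]), false);
console.log({ parallelMs, waterfallMs }); // { parallelMs: 100, waterfallMs: 200 }
```

In this model, rendering every sibling before waiting lets both fetches overlap, while stopping at the first suspended sibling delays the second fetch until the first has resolved, which is the waterfall Yuji describes.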

Yuji does a good job explaining how Suspense came to be used on the client for data fetching and why the change is problematic for people who rely on that pattern.

He also criticized the React team for mentioning the change only in a single-line bullet point, and noted a disconnect between React’s community and the React team. He particularly called out Meta and Vercel for being a bit tone deaf to how React is used by the wider community.

“This is not the first time there’s been pushback from the community towards changes that are introduced to React without much regard to how React is used outside Meta and Vercel. The push from React’s team and especially Vercel to make RSCs (React Server Components) a fundamental part of building with React is one such case,” he wrote. “It’s clear that there’s a misalignment between what React’s maintainers think is best for the future of React and the community’s opinions on the subject.”

But all’s well that ends well … at least, as long as the lesson is learned.

“After a lot of public pushback, heated discussions, and probably a good deal of talking behind the scenes, the React team backed out and decided to hold off on this change for now,” Yuji wrote.

Vector Database Use Jumps 44%

Vector database use is on the rise in a big way, according to Retool’s 2024 State of AI report: Sixty-four percent of respondents this year say they are using a vector database, compared to only 20% in last year’s survey.

The survey also found that 23% are customizing models by using vector databases or RAG.

The survey also looked at GPUs and found that, of the 68% of respondents who are directly using GPUs, a minority of 19% own or operate those GPUs in-house. The majority (57%) primarily rent from major cloud providers and 19% are customers of emerging providers.

Fifty-four percent reported achieving a positive ROI from their investments in GPUs, while 5% said they are not getting a positive ROI. Twenty-five percent responded that they did not know.

Somewhat tellingly, 48% think they are allocating GPUs correctly, but many just don’t know. Respondents who feel their company allocates GPUs correctly are more likely (73%) to report seeing positive ROI on their GPU investment.

Netlify Adds Adobe Experience Manager Integration

Web development platform Netlify now integrates with Adobe Experience Manager, a content management system for websites and mobile apps. This will allow AEM users to use Netlify’s headless CMS and shift legacy monolithic web applications toward composable sites, the company said, which fits in nicely with Netlify’s composable web messaging.

It will streamline workflows and reduce the number of touchpoints needed across multichannel projects, Netlify said.

“AEM users have long been needing a tool to migrate legacy monolithic web applications towards composable,” Matt Biilmann, co-founder and chief executive officer at Netlify, said in the press release. “With the AEM integration, users can go to market and build web frontends much faster, while connecting tools across workflows to reduce bottlenecks. The end result is high-performing digital experiences built in a fast, flexible and painless manner.”

Netlify and its partner ecosystem also announced several vertical accelerators designed “to ease the transition from monolithic to composable architecture,” the company added. Those are:

- The GEAR Accelerator by Valtech is designed for the manufacturing industry. It’s focused on aftermarket commerce via customer portals and enhances B2B functionalities with Netlify’s architecture.

- XCentium’s Composable Accelerator is designed for the financial services industry, enabling rapid deployment and cost savings with pre-built templates, multi-language support and technology integration.

- The CAFE Accelerator by Apply Digital, which helps enterprises accelerate project timelines with a suite of tools and integrations. It’s designed for rapid proofs of concept and solution-led projects, Netlify noted.

JetBrains AI Assistant Integrates Google’s DeepMind Gemini

Devtools company JetBrains’ AI Assistant is now leveraging Google’s DeepMind Gemini language model, which will unlock a number of new use cases for the AI Assistant, the company said.

Google DeepMind Gemini is a family of multimodal large language models developed by Google DeepMind. It’s the successor to LaMDA and PaLM 2. It includes:

- Gemini Ultra, which is optimized for complex tasks like code and reasoning, with support for multiple languages;

- Gemini Pro, a general performance model that’s natively multimodal, with an updated long context window of up to two million tokens;

- Gemini Flash, a lightweight model optimized for speed and efficiency; and

- Gemini Nano, a model optimized for on-device tasks.

The JetBrains AI Assistant incorporates Ultra and Pro, which the company saw previewed during their AI Hackathon with Google. That led JetBrains to decide to integrate Gemini, which the company claims makes its AI Assistant the only tool that chooses the best large language model for each problem.

The AI Assistant chooses the optimal model for each task. Gemini brings a long context window, advanced reasoning and “impressive performance” to the JetBrains offering, the company said. It will help in use cases where cost efficiency at high volume and low latency are important, it added.

The AI Assistant is available within JetBrains IDEs and can generate code, suggest fixes for particular problems, or refactor functions. It can also generate tests, documentation and commit messages. JetBrains’s research has found that on average, developers report savings of up to 8 hours per week with the AI Assistant.

State of the Frontend Survey

The State of the Frontend Survey 2024 is online. If you’re a frontend professional, the survey team is asking you to take this 10-minute survey. It’ll be used to create a free report on industry trends. In 2022, the survey collected opinions from approximately 4,000 frontend developers.
