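Baoyue Reading Guide Summary

1. Generative AI, software development, developer enthusiasm, industry challenges, future impact

2. This article examines the use of generative AI in software development, including what drives developers' enthusiasm, the problems it may bring, such as the exploitation of open source resources and the devaluing of engineering skills, and its effects on different developers and the challenges ahead.

3.

– Developer enthusiasm for generative AI

  – Data shows developers are adopting GenAI tools in their work

  – Drivers of the enthusiasm include a push from investors and pressure on engineers

– Problems with generative AI

  – Criticized for its dependence on venture capital; the way it obtains resources is contested

  – May devalue engineering skills and knowledge

  – Affects development style and makes work harder to manage

– Impact on different developers

  – Junior developers are more likely to trust AI; technical education may be falling short

  – Experienced developers worry about tool use and problem-solving ability

– Outlook and response

  – Consider how to improve media literacy in response to the trend

  – Explore directions for the technology and possible solutions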

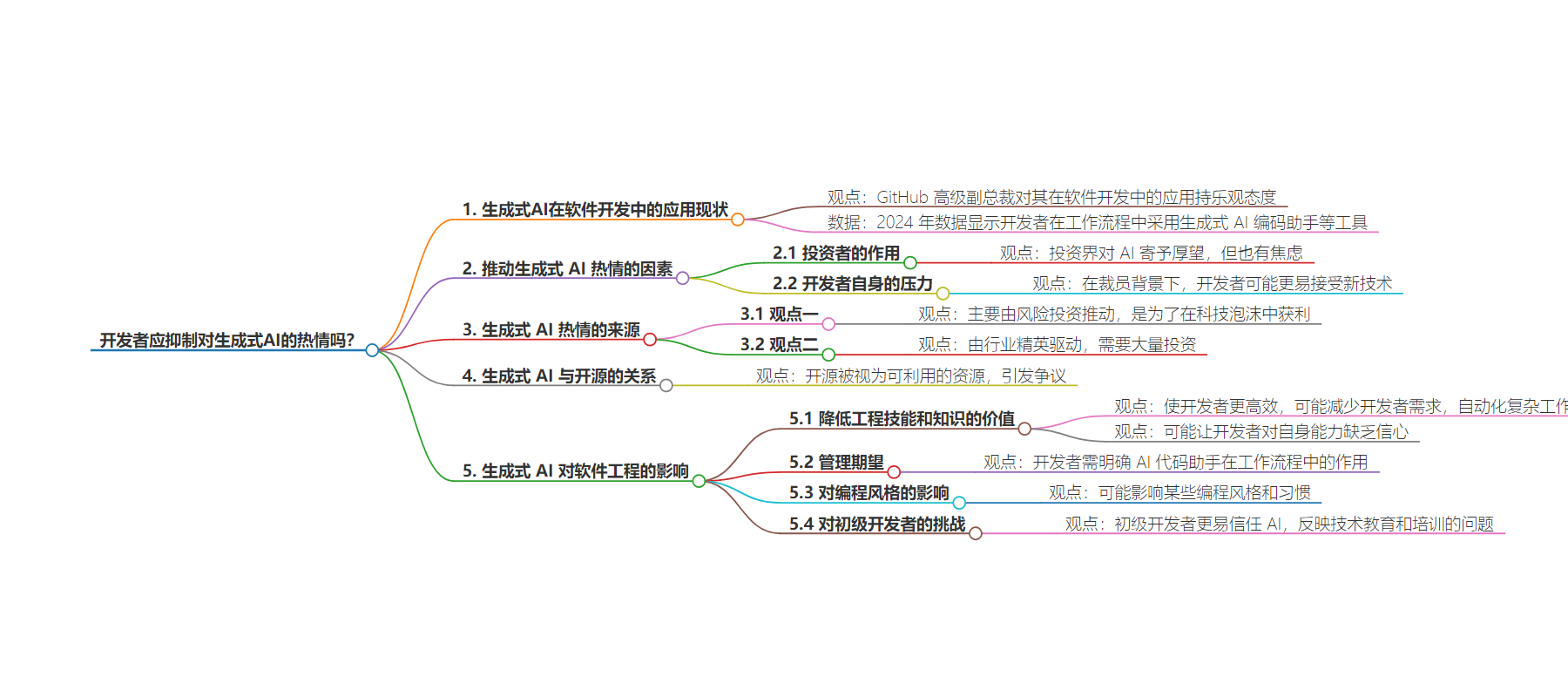

思维导图:

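Article URL: https://thenewstack.io/should-developers-curb-their-enthusiasm-for-generative-ai/

Source: thenewstack.io

Author: Richard Gall

Published: 2024/8/19 19:46

Language: English

Word count: 2029

Estimated reading time: 9 minutes

Score: 89

Tags: generative AI, software development, AI tools, developer productivity, venture capital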


Back in February, following the general release of Copilot Enterprise, GitHub’s senior vice president of product Mario Rodriguez was (unsurprisingly) bullish about the use of generative AI in software development.

“We’re past the point where AI is hype on this … We have crossed the chasm,” Rodriguez told The New Stack. “This is not an early adopter thing anymore.”

Six months later, it's hard to disagree with him. Data released in 2024 shows that developers are embracing GenAI coding assistants and other GenAI tools in their workflows.

But what’s driving this enthusiasm? Is it really all organic, bottom-up engagement with this technology? Or are there other imperatives at play?

There’s clearly a big bet on AI in the investment world. Surprisingly cautious noises from the likes of Goldman Sachs followed by cratering tech stocks this summer suggest there’s anxiety in certain parts of the industry, making the drive for adoption particularly important for those who hold the proverbial purse strings.

And aside from the goals of investors, is there not also something in the increasing pressure placed on software engineers, who may be feeling more vulnerable after a period of seemingly continuous layoff announcements?

Is AI Enthusiasm Coming From Devs — or VCs?

Alison Buki, a software engineer at EdTech company Teachable, has a blunt view of the situation. “There are tinkerers and researchers who have a lot of passion for a wide array of machine learning topics,” she told The New Stack in an email interview.

“But the overwhelming push to commercialize ‘AI’ — which is in itself a marketing term — has been largely driven by venture capital and the need to cash out on the next tech bubble.”

A key point bolstering Buki’s argument is what she describes as the field’s “top-heavy” nature — in other words, “the scale of financial, labor and computing resources required to train and deploy commercial large language models.”

This is a point made by researchers such as Kate Crawford, who, in her book “Atlas of AI,” mapped out the sprawling infrastructure required to make AI systems work. Of course, as these tools become more ubiquitous and embedded in our professional milieu, it’s not easy to appreciate scale of this kind, but it’s nevertheless worth remembering how even the most rudimentary tools actually work.

Dwayne Monroe, a senior cloud architect at Cloudreach, made a similar point to Buki’s in an email interview with The New Stack.

“Although there are individual boosters, the drive is coming from industry elites,” he wrote. Like Buki, he noted the massive amount of investment required at the very foundations of this technology. “Microsoft has a multibillion-dollar investment in OpenAI,” he told TNS, and is therefore “keen to achieve a return on this mounting investment.”

Mining the Open Source Commons

Seen in this way, then, the emergence of generative AI in the context of software engineering doesn’t so much represent a hopeful, exciting new horizon for developers and other technologists, but instead signals a return to industry tensions of the past, best typified in Microsoft’s disdain for open source software (which it has admittedly worked hard to correct over the last decade).

Of course, this time, open source isn’t treated as anathema, but instead a resource to be extracted and leveraged. This frustrates Paul Biggar, founder of CircleCI, Darklang and co-founder of Tech for Palestine (a coalition of people in tech supporting projects for Palestinian freedom).

“All this open source code,” he told The New Stack. “One could argue about how specifically copyright law is worded or whatever, but this was clearly not intended to allow the mass ripping off of the source code by AI companies.”

What should be done though? Biggar is ambivalent, his reservations about copyright tempered by his own generally positive experiences with GitHub Copilot. And indeed, to outright reject a technology might seem churlish and lacking in nuance.

However, Monroe isn’t worried about that. In fact, he’s direct and forthright: “Software developers should resist Copilot and competing products because they aren’t needed, were built using code stolen from GitHub and other sources, and are running in sprawling data centers that are ecological disasters.”

Devaluing Engineering Skills and Knowledge

If AI is disempowering software developers, how is it doing it? There’s undoubtedly a crude version of the story: make developers more productive so you don’t need as many and, eventually, hope that the technology gets to a place where even fairly complex work can be automated away.

However, according to research engineer Danny McClanahan, there may be something more subtle and pernicious happening.

“Very often LLMs are specifically marketed to people who don’t feel confident in their speech — code and writing is speech too — which I find really disgustingly manipulative and on a par with the Axie Infinity fraud employed by cryptocurrency advocates,” they said to The New Stack in a direct message via X (formerly Twitter).

“People are convinced they’re not smart enough,” McClanahan added. Some developers “feel they need to make use of some external tool to be on par with others because they think programming is supposed to be easier than it is because they are constantly being forced to produce things under absurd timelines and don’t understand that those are entirely unfair expectations.”

This kind of framing is completely absent from the current conversation around GenAI. However, placed in the context of discussions about burnout and recent concerns with developer productivity, it’s not difficult to see how various anxieties across the industry create a particularly fertile environment for generative AI to be sold as the future.

Expectation Management

It’s true that many anxieties that seem raw today aren’t necessarily new. Developers have, after all, always had to manage seemingly conflicting demands, between productivity and speed on the one hand and quality on the other. However, while the ostensible narrative is that AI is making this easier, it’s possible that it is, in fact, making it more difficult.

Kate Holterhoff, a developer analyst at RedMonk, described in an email interview with TNS “expectation setting where developers need to see where AI code assistants can factor into their workflows.”

The implication here is that there’s a degree of management — partly practical and perhaps also rhetorical — as developers try to adjust to the introduction of this technology into their working lives.

At first glance, maybe this doesn’t sound that remarkable. However, when you consider that the industry has spent the last few years talking about platform engineering and “golden paths,” it’s odd that people are committing time and energy to making something work that, more often than not, doesn’t.

It was curious to see in Stack Overflow’s 2024 Developer Survey that 81% of participants who use AI-assisted tools in their work cited productivity as one of its top benefits, yet only 30% saw improved accuracy as one of these benefits. While it may be possible that the gains are so substantial that a lack of accuracy isn’t such a big deal, it’s still important to ask exactly what additional work these tools are creating.

Disrupting the Development of Style

OK, so maybe they won’t create more work for everyone. However, some instances or even styles of programming could be particularly impacted by an increasing reliance on generative AI tools.

This was a point raised by Biggar. “It’s really bad for ‘I’m making a form in React,’” he offered as an example. “You watch a good React programmer and what they’re doing … they’re just like typing and they’re cutting and pasting, and they don’t stop.”

Style might seem like a trivial matter, but it certainly isn’t. As Biggar seems to suggest in choosing React as an example, style is something that emerges from our relationships with the tools we use and the way we think about the work we do.

The question then is how we develop our own respective styles. How do we get to know more about the tools we work with?

As Biggar put it, generative AI “makes it easy not to learn your tools.” That might not seem like a major issue, especially if you’re an experienced software engineer, but it could make it harder for developers to solve problems. Often, a key part of fixing a complex or tricky issue is going back to the fundamentals of our understanding, returning to the tools we’ve used or relied upon to work out what might have happened.

The CrowdStrike outage in July was a lesson in attending to our tools and the importance of being on top of the details. Is a culture where we embrace convenient abstractions and convincing fabrications really going to help us ensure these sorts of failures don’t happen again?

The Challenges for Junior Developers

It’s possible that this is just doom-mongering. But what of the future of software engineering? What does this mean for junior developers? It’s concerning to see the data in the Stack Overflow Developer Survey suggest less experienced developers are more likely to trust AI — clearly, something is failing in technical education and training. The hype machine is winning out over sober analysis.

“I think what people need to understand about text generation is that a computer using language correctly doesn’t equate to thought,” Buki said.

It seems strange that it’s so hard for that message to get through. Indeed, one also wonders whether the attempt at nuance and the use of language that eagerly emphasizes “augmentation” is just muddying the waters and making it harder for those willing to make strong, forthright arguments — like Monroe’s — to be heard.

One solution, if the generative AI trend is to continue, is to think in terms of media literacy. This is a term that might sound a bit strange in the context of programming, but it’s one that McClanahan uses when referring to skills like “[understanding] which types of blog posts and Stack Overflow questions are actually useful and which are advertising” and “looking through unfamiliar source code.”

Emphasizing these skills in engineering — alongside a more frank discussion about the limitations of LLMs, as Buki urges — could go a long way in giving developers new to the industry a better chance of success. There’s a danger that AI enthusiasm will lead the industry to forget the qualities and skills of a really good software developer.

Making Our Skepticism Public

This is why we need to make our skepticism more public. Yes, many of us are crying out for stability — optimism even; that’s possibly why so many developers are eager to unlock the benefits, potential and opportunities (insert the marketing term of your choice) of generative AI. Cynicism just doesn’t look like a smart strategy when the onward march of the trend appears inevitable.

However, failing to voice reservations and dissent would be a mistake. From the copyright implications to the environmental impact of training LLMs, many important questions need to be acknowledged and addressed rather than subsumed into an attitude that just says, “Let’s worry about that later.”

Huge challenges posed by AI can’t be tackled by one individual; as Buki said, it “requires collective effort on the level of labor organization and government regulation.” But curbing our AI enthusiasm and sharing our fears or cynicism with peers and colleagues can help everyone gain clarity and purpose in the face of seemingly endless hype and professional vulnerability.
