包阅导读总结

1. 关键词:Streamkap、Streaming ETL、Apache Kafka、Apache Flink、实时数据处理

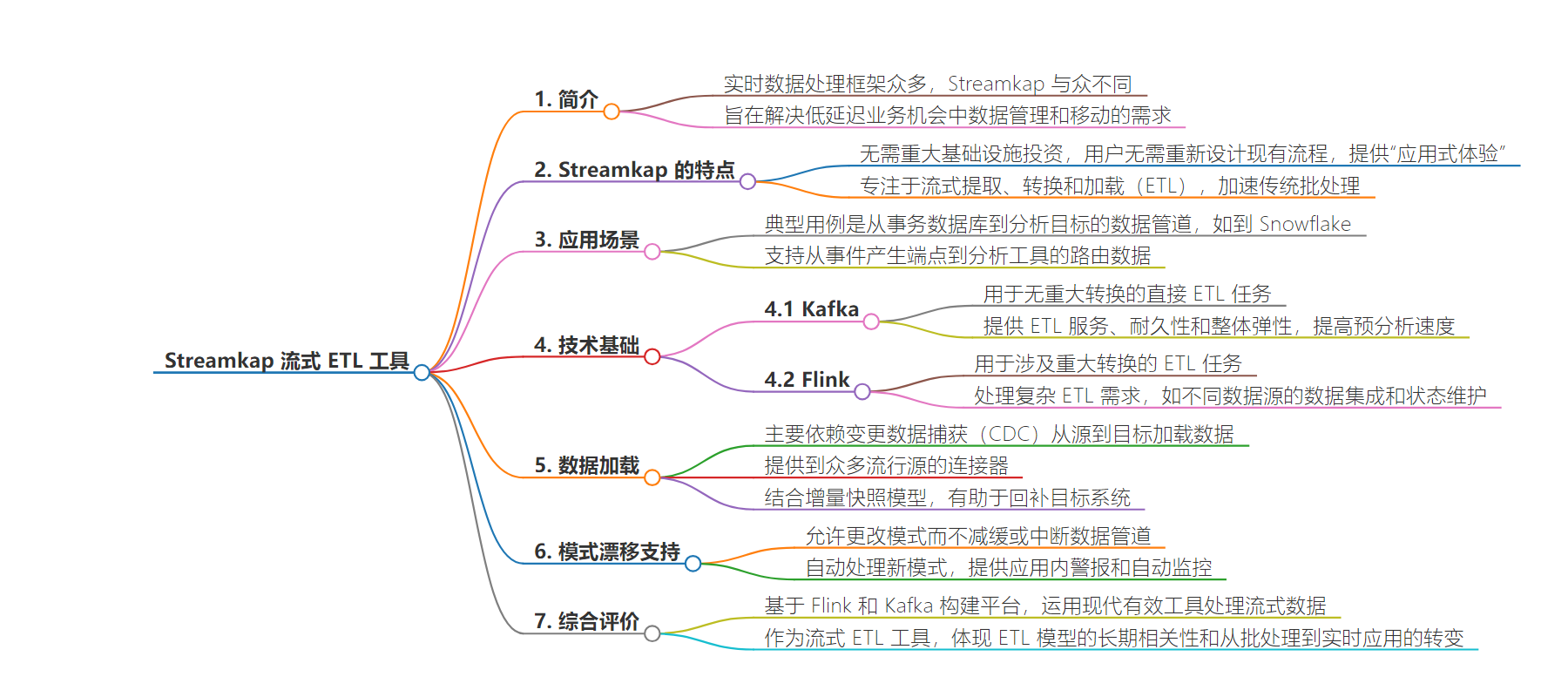

2. 总结:

Streamkap 是一家 2022 年的初创公司,获得了资金。它基于 Apache Kafka 和 Flink,提供流媒体 ETL 工具,无需重大基础设施投资,能加速传统批处理,支持多种数据处理场景,具有诸多优势特性。

3. 主要内容:

– Streamkap 简介:

– 2022 年成立,获种子和预种子资金

– 与其他选择在两方面有区别

– 特点与优势:

– 基于 Apache Kafka 和 Flink,无需重大基础设施投资,提供类应用体验

– 专注流媒体 ETL,加速传统批处理

– 支持从交易数据库到分析目标的数据管道

– 依靠 Kafka 进行简单 ETL 工作,Flink 处理复杂变换

– 主要依靠 CDC 加载数据,包含增量快照模型

– 支持模式漂移,提供警报和自动监控

– 技术与应用:

– 结合 MySQL 和网站低延迟事件数据进行实时个性化

– 能适应模式变化,确保数据持续流动

– 因 Kafka 具备高弹性,减少回补频率

思维导图:

文章地址:https://thenewstack.io/so-long-batch-etl-streamkap-unveils-streaming-etl-tool/

文章来源:thenewstack.io

作者:Jelani Harper

发布时间:2024/8/12 17:49

语言:英文

总字数:1000字

预计阅读时间:4分钟

评分:88分

标签:流式ETL,Apache Kafka,Flink,实时数据处理,变更数据捕获

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

The number of real-time data processing frameworks for organizations accelerating the time required to manage — or just move — data for low latency business opportunities is legion. Common options include streaming data platforms, real-time databases, time-series databases and a wealth of other tooling designed to address these needs.

Streamkap, a 2022 startup that recently garnered $3.3 million in seed and pre-seed funding, is distinguished from nearly each of these choices in two pivotal ways. First, although it’s architected atop both Apache Kafka and Apache Flink, the platform doesn’t entail a significant infrastructural investment.

Users don’t need to redesign existing processes for it. Instead, it delivers what Streamkap CEO Paul Dudley termed an “application-like experience.”

Second, Streamkap specializes in delivering streaming extract, transform and load (ETL) — which isn’t synonymous with streaming data processing — to drastically expedite traditional batch processing while fitting neatly into that same, familiar paradigm.

“Consumers are starting to have the expectation that the experiences they get are powered by real-time data, so businesses have to figure out ways to do that,” Dudley said. “Rather than having to shift their whole architecture to enable it, we’re trying to make it easy to remove what is often the biggest bottleneck, which is their batch ETL.”

Streamkap for Pipelining Data

Although Streamkap can support routing data from event-producing endpoints to analytics tools, its quintessential use case is pipelining data from transactional databases to analytical targets (like Snowflake). Its Kakfa and Flink foundation, coupled with its reliance on change data capture (CDC) and platform features for accommodating schema changes, make this a reality for the enterprise.

“Streamkap is powered by streaming architecture, but it doesn’t require a company to completely re-architect their whole data stack,” Dudley remarked. “They just get faster data.”

Kafka and Flink

In general, Streamkap relies on Kafka for straightforward ETL jobs without significant transformations, and Flink for those that do involve such transformations. Kafka’s publisher subscriber model is essential to Streamkap’s ETL service, durability and overall resiliency while increasing the speed of this pre-analytics process.

According to Dudley, this Kafka attribute endows Streamkap with “a ton of resilience and a distributed log. So, if there are failures on the source or destination side, our system can be the resilient center of that and replay data; if the destination fails, we’re there, retaining data.”

As such, Kafka is a vital component of the pipelines Streamkap builds. Flink is no less so, particularly as the sophistication of the ETL requirements increases.

Dudley described a use case in which a fashion retailer integrates data from MySQL with low latency event data from its website to provide real-time personalization — similar to that of TikTok — for site viewers. “That’s powered by Flink, because you’re joining data from two different data sources and you have to maintain state to effectively do that, so Flink is the tool,” Dudley noted.

However, because of the low infrastructure management requirements Streamkap has, users need not concern themselves about the particulars of which streaming data resource is involved in their application. The ETL tool masks this complexity, giving users “low latency data that’s easy to access,” Dudley said.

Change Data Capture

Streamkap predominantly relies on CDC for loading data from source to target. It offers connectors to a number of popular sources (including data lakes, cloud data warehouses, databases and more), many of which are based on CDC.

According to Dudley, CDC is optimal for the subsecond latency data delivery characteristic of streaming data, because “the log of a database that we’re reading from for change data capture is effectively a streaming source. The source itself is event-driven. Every new change to the database gets written to that log as an event. That lends itself very well to streaming.”

Streamkap also incorporates an incremental snapshot model, which is useful for ensuring all the data, and the data’s changes, have been moved from source to target. It’s particularly useful for its assistance in the backfill process for target systems.

“For a backfill, you want to be able to capture your new streaming events in parallel with it, so that those things aren’t dependent on one another, and make sure that the backfill is not putting too much load on the source database,” Dudley explained. “We do both of those things.” Moreover, because of the resiliency afforded by Kafka, users don’t have to backfill as frequently as they do with batch ETL processing methods.

Schema Drift Support

In addition to its historical snapshots, Streamkap also provides a schema drift support feature that allows for changes to schema without slowing or breaking data pipelines. When developers want to alter schema by say, adding a column, those changes are smoothly propagated to the downstream data model in a streamlined manner.

“What our system does, it automatically accounts for new columns, or new changes in data type, and ensures your data continues flowing without having to stop if a change gets made,” Dudley mentioned.

Streamkap also provides in-app alerting capabilities paired with automatic monitoring to notify users of changes. These mechanisms are “designed to keep customers up to date if there’s been changes on the actual pipeline itself, if there’s any issues,” Dudley revealed.

In Proper Perspective

By scaffolding its platform atop Flink and Kafka, Streamkap employs some of the most modern and effectual tools for transforming and transporting streaming data. The fact that it’s positioned as a streaming ETL tool illustrates the longstanding nature of the ETL model, its continuing enterprise relevance and its inevitable transition from batch to real-time applicability.

Batch processing may yet endure across the data ecosystem, particularly for organizations still using legacy systems. However, the need to hasten the data integration requirement so that it occurs at the pace of contemporary consumer and business demands is readily apparent — and manifest in Streamkap’s recent funding, if not its very presence.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.