包阅导读总结

1. `LLMs、安全更新、网络应用、漏洞、提示注入`

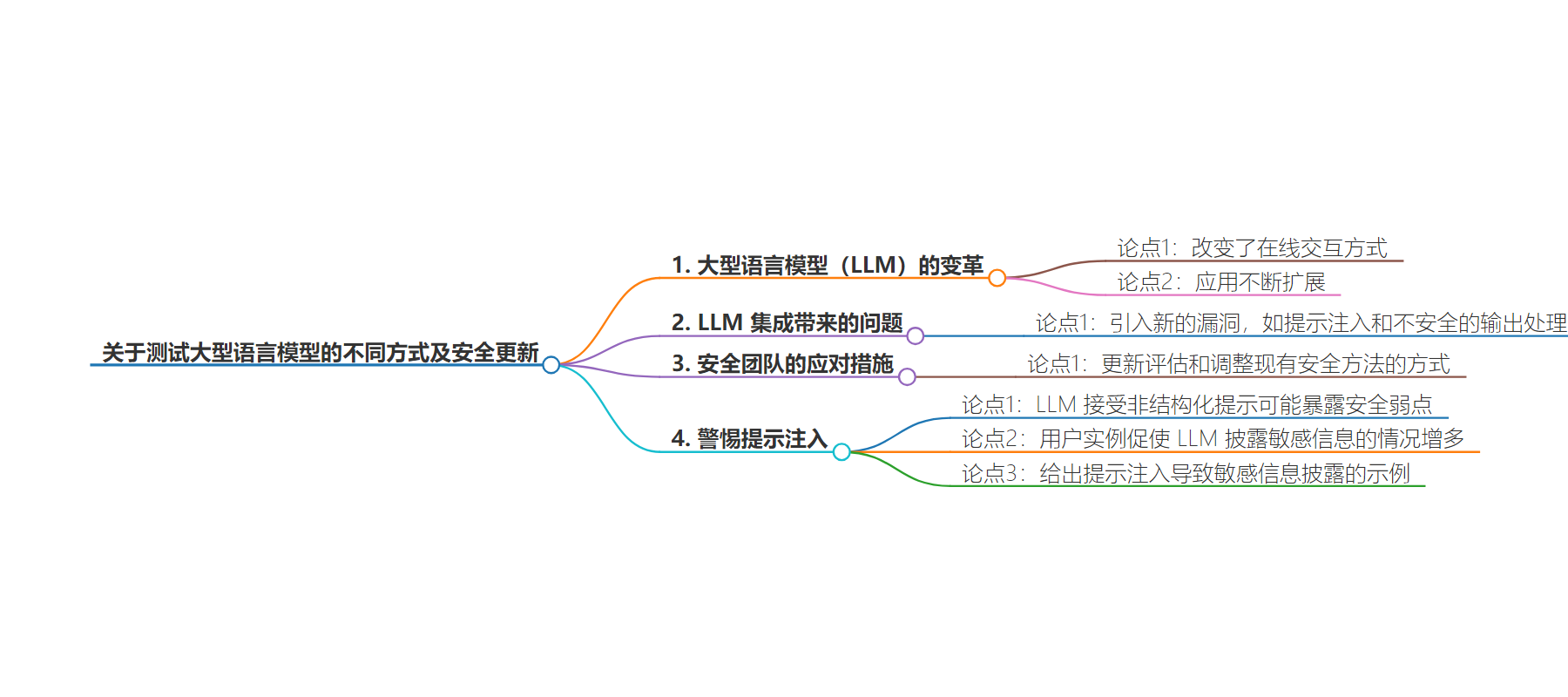

2. 文本主要介绍了网络大型语言模型(LLMs)正改变我们的在线交互方式,虽有商业潜力但也带来新漏洞,如提示注入等,最新网络快照报告建议安全团队更新评估和适应LLMs的安全方法。

3.

– 网络大型语言模型(LLM)使人们能以更自然和对话的方式与应用和系统交互,应用不断扩展。

– 带来变革性商业潜力。

– 但集成也引入新漏洞,如提示注入和不安全的输出处理。

– 虽然可按传统网络应用评估方式评估基于网络的LLM应用,但最新报告建议安全团队更新评估方法。

– 提示注入能暴露安全弱点并导致信息被利用。

– 通用LLMs流行,用户促使其披露敏感信息的情况增多。

思维导图:

文章来源:cloud.google.com

作者:Christian Elston,Jennifer Guzzetta

发布时间:2024/8/20 0:00

语言:英文

总字数:388字

预计阅读时间:2分钟

评分:85分

标签:LLMs,网络安全,提示注入攻击,概率性测试,AI 安全

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Web-based large-language models (LLM) are revolutionizing how we interact online. Instead of well-defined and structured queries, people can engage with applications and systems in a more natural and conversational manner — and the applications for this technology continue to expand.

While LLMs offer transformative business potential for organizations, their integration can also introduce new vulnerabilities, such as prompt injections and insecure output handling. Although web-based LLM applications can be assessed in much the same manner as traditional web applications, in our latest Cyber Snapshot Report we recommend that security teams update their approach to assessing and adapting existing security methodologies for LLMs.

Beware of prompt injections

An LLMs ability to accept non-structured prompts, in an attempt to “understand” what the user is asking, can expose security weaknesses and lead to exploitation. As general purpose LLMs rise in popularity, so do the number of user instances prompting the LLM to disclose sensitive information like usernames and passwords. Here is an example of this type of sensitive information disclosure from prompt injection: