Baoyue Reading Guide Summary

1. Keywords: GenOps, Microservices, DevOps, Generative AI, Operational Platforms

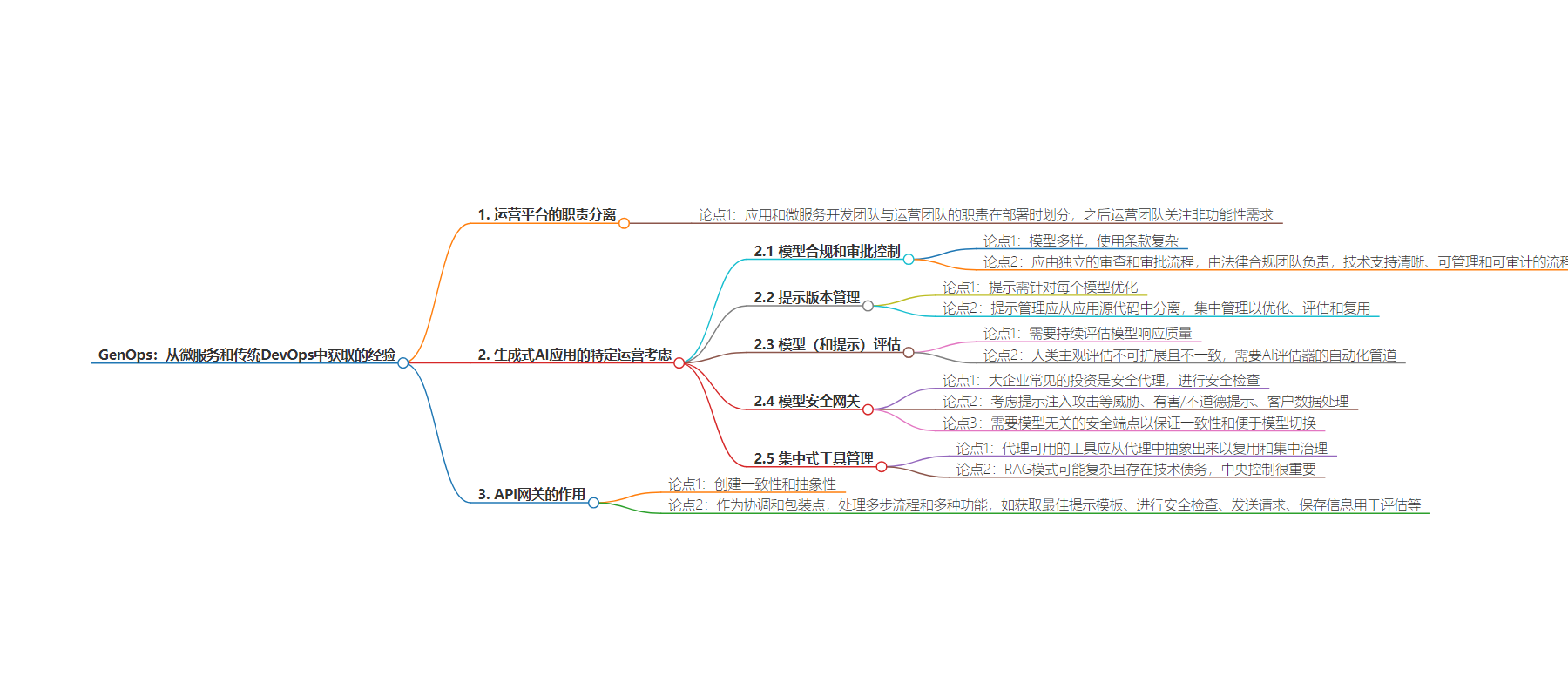

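2. Summary: The article discusses GenOps lessons drawn from microservices and traditional DevOps. It argues that the responsibilities of application and microservice development teams should be clearly separated from those of operations teams, and it sets out operational considerations specific to generative AI applications, such as model compliance approval and prompt version management.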

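3. Main points:

- Operational platforms should clearly separate the responsibilities of development and operations teams
- Operational considerations specific to generative AI apps
  - Model compliance and approval controls
    - Many models exist, with complex usage terms
    - A dedicated process should determine whether usage terms are acceptable
  - Prompt version management
    - Prompts need to be optimized for each model
    - Prompts should be managed centrally so they can be optimized, evaluated and reused
  - Model (and prompt) evaluation
    - Model response quality needs ongoing evaluation
    - Human evaluation does not scale; automated pipelines driven by AI evaluators are needed
  - Model security gateway
    - Common security considerations
    - A model-agnostic security endpoint is needed
  - Centralized tool management
    - Tools should be abstracted out of agents for centralized governance
- The role of the API gateway
  - Creates consistency and abstraction
  - Can act as a coordination and packaging point that handles multi-step processes and monitoring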


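Source: cloud.google.com

Author: Sam Weeks

Published: 2024/8/30 0:00

Language: English

Word count: 2024

Estimated reading time: 9 minutes

Rating: 89

Tags: GenOps, Generative AI, AI operations, Microservices, DevOps

The original article follows.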


All operational platforms should create a clear point of separation between the roles and responsibilities of app and microservice development teams and those of the operational teams. With microservice-based applications, responsibilities are handed over at the point of deployment, and the focus switches to non-functional requirements such as reliability, scalability, infrastructure efficiency, networking and security.

Many of these requirements are just as important for a generative AI app, and I believe there are some additional considerations specific to generative agents and apps that require dedicated operational tooling:

1. Model compliance and approval controls

There are a lot of models out there. Some are open-source, some are licensed. Some provide intellectual property indemnity, some do not. All have specific and complex usage terms that have large potential ramifications but take time and the right skillset to fully understand.

It’s not reasonable or appropriate to expect our developers to have the time or knowledge to factor in these considerations during model selection. Instead, an organization should have a separate model review and approval process, owned by legal and compliance teams, to determine whether a model’s usage terms are acceptable. On a technical level, this process should be supported by clear, governable and auditable approval/denial processes that cascade down into development environments.
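As a rough illustration of how approval decisions might cascade down into development environments, the sketch below keeps a deny-by-default registry with an append-only audit trail. `ModelApprovalRegistry`, `Decision` and any model IDs are hypothetical names, not part of a real product; in practice the registry would be backed by a governed service rather than an in-memory object.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Decision(Enum):
    APPROVED = "approved"
    DENIED = "denied"


@dataclass
class AuditEntry:
    model_id: str
    decision: Decision
    reviewer: str
    reason: str
    timestamp: datetime


@dataclass
class ModelApprovalRegistry:
    """Hypothetical registry owned by legal/compliance; dev tooling only reads it."""
    _decisions: dict[str, Decision] = field(default_factory=dict)
    audit_log: list[AuditEntry] = field(default_factory=list)

    def record(self, model_id: str, decision: Decision, reviewer: str, reason: str) -> None:
        # The current decision is updated, but every decision is also appended
        # to the audit log so the history stays auditable.
        self._decisions[model_id] = decision
        self.audit_log.append(
            AuditEntry(model_id, decision, reviewer, reason, datetime.now(timezone.utc))
        )

    def is_approved(self, model_id: str) -> bool:
        # Models with no recorded decision are treated as not approved (deny by default).
        return self._decisions.get(model_id) == Decision.APPROVED

    def require_approved(self, model_id: str) -> None:
        # Intended to be called by development environments before a model is used.
        if not self.is_approved(model_id):
            raise PermissionError(f"model {model_id!r} is not approved for use")
```

Development tooling would call `require_approved` before granting access to a model, so a model that legal and compliance have not yet reviewed is blocked by default.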

2. Prompt version management

Prompts need to be optimized for each model. Do we want our app teams focusing on prompt optimization, or on building great apps? Prompt management is a non-functional concern: prompts should be taken out of the app source code and managed centrally, where they can be optimized, periodically evaluated, and reused across apps and agents.
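A minimal sketch of centrally managed prompts, assuming each prompt is stored per (prompt name, model ID) pair and versioned by simple append. `PromptRegistry` is a hypothetical class, and Python's `string.Template` stands in for whatever templating a real prompt store would use.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical central store: prompts versioned per (prompt name, model id)."""
    _versions: dict[tuple[str, str], list[str]] = field(default_factory=dict)

    def publish(self, name: str, model_id: str, template: str) -> int:
        # Append a new version; earlier versions stay available for evaluation or rollback.
        versions = self._versions.setdefault((name, model_id), [])
        versions.append(template)
        return len(versions)  # 1-based version number

    def get(self, name: str, model_id: str, version: Optional[int] = None) -> Template:
        versions = self._versions[(name, model_id)]
        if version is None:
            # Default to the latest version unless a specific one is pinned.
            return Template(versions[-1])
        if not 1 <= version <= len(versions):
            raise ValueError(f"no version {version} for {name!r} on {model_id!r}")
        return Template(versions[version - 1])
```

Because versions are never overwritten, an evaluation pipeline can pin an older version for comparison while apps pick up the latest one.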

3. Model (and prompt) evaluation

As with an MLOps platform, there is a clear need for ongoing assessment of model response quality, enabling a data-driven approach to evaluating and selecting the best model for a particular use case. The key difference is that assessing Gen AI models is inherently more qualitative than the quantitative skew or drift detection used for traditional ML models.

Subjective, qualitative assessments performed by humans are clearly not scalable, and they introduce inconsistency when performed by multiple people. Instead, we need consistent automated pipelines powered by AI evaluators, which, although imperfect, will provide consistency in the assessments and a baseline for comparing models against each other.
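The sketch below shows the shape of such a pipeline under simple assumptions: every candidate model answers the same prompt set, and a single evaluator function scores each response between 0.0 and 1.0. The `judge` is a placeholder for a call to an AI evaluator model, and `evaluate` and `best_model` are hypothetical helpers.

```python
from statistics import mean
from typing import Callable

# A judge scores a (prompt, response) pair from 0.0 to 1.0. In practice this would
# call an AI evaluator model; here it is any callable (assumption).
Judge = Callable[[str, str], float]
Model = Callable[[str], str]


def evaluate(models: dict[str, Model], prompts: list[str], judge: Judge) -> dict[str, float]:
    """Run every model on the same prompt set and score each response with the same judge."""
    scores = {}
    for name, model in models.items():
        scores[name] = mean(judge(p, model(p)) for p in prompts)
    return scores


def best_model(scores: dict[str, float]) -> str:
    # Highest mean score wins; on a tie, max() keeps the first name it encountered.
    return max(scores, key=scores.__getitem__)
```

Holding the prompt set and the judge fixed across models is what provides the consistent baseline; the comparison between models matters more than the absolute scores.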

4. Model security gateway

The single most common operational feature I hear large enterprises investing time in is a security proxy that runs safety checks before passing a prompt on to a model (and the reverse: a check on the generated response before passing it back to the client).

Common considerations:

- Prompt injection attacks and other threats captured by the OWASP Top 10 for LLMs
- Harmful or unethical prompts
- Customer PII or other data requiring redaction before it is sent on to the model and other downstream systems
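For the redaction point, a deliberately simple sketch might replace matches with typed placeholders before the prompt leaves the gateway. The two regular expressions are illustrative assumptions and will miss many real-world PII formats; a production gateway would more likely call a dedicated PII detection service.

```python
import re

# Illustrative patterns only (assumption): regexes miss many real PII formats.
_PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact_pii(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in _PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact_pii("Contact jane.doe@example.com or +44 20 7946 0958")` returns `"Contact [EMAIL] or [PHONE]"`.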

Some models have built-in security controls; however, relying on them creates inconsistency and increased complexity. Instead, a model-agnostic security endpoint abstracted above all models is required to create consistency and allow for easier model switching.
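A minimal sketch of a model-agnostic security endpoint, assuming each model is exposed as a plain `prompt -> response` callable and each check returns either `None` or a reason for blocking. `SecurityGateway`, `BlockedError` and `naive_injection_check` are hypothetical, and the keyword check is a toy stand-in for real prompt-injection detection.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Model = Callable[[str], str]
# A check returns None if the text is acceptable, otherwise a reason for blocking it.
Check = Callable[[str], Optional[str]]


class BlockedError(Exception):
    pass


@dataclass
class SecurityGateway:
    """Hypothetical proxy: the same checks run whichever model sits behind it."""
    input_checks: list[Check] = field(default_factory=list)
    output_checks: list[Check] = field(default_factory=list)

    def _run(self, checks: list[Check], text: str, stage: str) -> None:
        for check in checks:
            reason = check(text)
            if reason is not None:
                raise BlockedError(f"{stage} blocked: {reason}")

    def call(self, model: Model, prompt: str) -> str:
        self._run(self.input_checks, prompt, "prompt")       # before the model
        response = model(prompt)
        self._run(self.output_checks, response, "response")  # before the client
        return response


def naive_injection_check(text: str) -> Optional[str]:
    # Deliberately simplistic keyword heuristic, for illustration only.
    if "ignore previous instructions" in text.lower():
        return "possible prompt injection"
    return None
```

Switching models only means passing a different callable to `call`; the input and output checks stay exactly the same.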

5. Centralized tool management

Finally, the tools available to an agent should be abstracted out of the agent to allow for reuse and centralized governance. This is the right separation of responsibilities, especially for data retrieval patterns where access to data needs to be controlled.
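One way to sketch this separation, assuming tools are plain Python callables and permissions are granted per agent: the agent never holds the tool itself, only the right to invoke it through a central registry that checks access and records every call. `ToolRegistry` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolRegistry:
    """Hypothetical central registry: tools live here; agents are only granted access."""
    _tools: dict[str, Callable[..., object]] = field(default_factory=dict)
    _grants: dict[str, set[str]] = field(default_factory=dict)  # agent -> tool names
    call_log: list[tuple[str, str]] = field(default_factory=list)  # (agent, tool)

    def register(self, name: str, fn: Callable[..., object]) -> None:
        self._tools[name] = fn

    def grant(self, agent: str, tool: str) -> None:
        if tool not in self._tools:
            raise KeyError(f"unknown tool {tool!r}")
        self._grants.setdefault(agent, set()).add(tool)

    def invoke(self, agent: str, tool: str, *args, **kwargs) -> object:
        # Access is checked centrally on every call, keeping data access governed.
        if tool not in self._grants.get(agent, set()):
            raise PermissionError(f"agent {agent!r} may not use tool {tool!r}")
        self.call_log.append((agent, tool))  # keeps usage visible to operators
        return self._tools[tool](*args, **kwargs)
```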

RAG patterns can become numerous and complex, and in practice they are often not particularly robust or well maintained, which can cause significant technical debt. Central control is therefore important to keep data access patterns as clean and visible as possible.

Outside of these specific considerations, a prerequisite already discussed is the API gateway itself, which creates consistency and abstraction above these generative AI-specific services. Used to their fullest, API gateways can act as much more than a simple API endpoint: they can be a coordination and packaging point for a series of interim API calls and logic, security features, and usage monitoring.

For example, a published API for sending a request to a model can be the starting point for a multi-step process:

- Retrieving and ‘hydrating’ the optimal prompt template for that use case and model
- Running security checks through the model safety service
- Sending the request to the model
- Persisting prompt, response and other information for use in operational processes such as model and prompt evaluation pipelines.
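The four steps above could be chained in a single handler along these lines. This is a sketch under stated assumptions: prompt templates are keyed by (use case, model ID), the safety service is reduced to a boolean function, and an in-memory list stands in for persistent storage. `GenerateEndpoint` and its fields are hypothetical names, not the API of any gateway product.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Callable


@dataclass
class GenerateEndpoint:
    """Hypothetical published API handler chaining the four steps."""
    templates: dict[tuple[str, str], str]    # (use_case, model_id) -> prompt template
    models: dict[str, Callable[[str], str]]  # model_id -> model client
    safety_check: Callable[[str], bool]      # True if safe; stand-in for the safety service
    store: list[dict] = field(default_factory=list)  # stand-in for persistent storage

    def handle(self, use_case: str, model_id: str, **variables: str) -> str:
        # 1. Retrieve and hydrate the prompt template for this use case and model.
        prompt = Template(self.templates[(use_case, model_id)]).substitute(**variables)
        # 2. Run security checks on the hydrated prompt.
        if not self.safety_check(prompt):
            raise ValueError("prompt rejected by safety check")
        # 3. Send the request to the model.
        response = self.models[model_id](prompt)
        # 4. Persist prompt and response for evaluation pipelines.
        self.store.append({"use_case": use_case, "model": model_id,
                           "prompt": prompt, "response": response})
        return response
```

Keeping these steps behind one published API means app teams call a single endpoint, while templates, safety rules and models can change centrally.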