包阅导读总结

1. 关键词:Iceberg、Data Lake、Table Management、Versioning、Schema Evolution

2. 总结:本文介绍了 Apache Iceberg 在数据湖管理中的革新,包括其核心概念、关键特征与优势,对比传统格式的差异,实际应用案例及最佳实践等,强调其为现代数据分析和机器学习带来便利。

3. 主要内容:

– Apache Iceberg 概述

– 定义及核心概念:如不可变性、版本控制、模式演化、事务支持等

– 与传统数据湖表格式对比的优势

– 关键特征和好处

– 确保数据完整性和一致性

– 支持模式演化和版本控制

– 提供性能优化技术

– 支持时间旅行和数据版本化

– 与数据处理框架集成

– 与传统格式比较

– 传统格式的局限性

– Iceberg 如何解决这些局限

– 各自的适用用例

– 实际用例

– 数据仓库

– 分析

– 机器学习

– 成功案例

– 最佳实践和考虑因素

– 数据建模和设计策略

– 性能优化技术

– 与数据处理框架集成

– 安全和数据隐私考虑

– 选择合适的 Iceberg 实现的标准

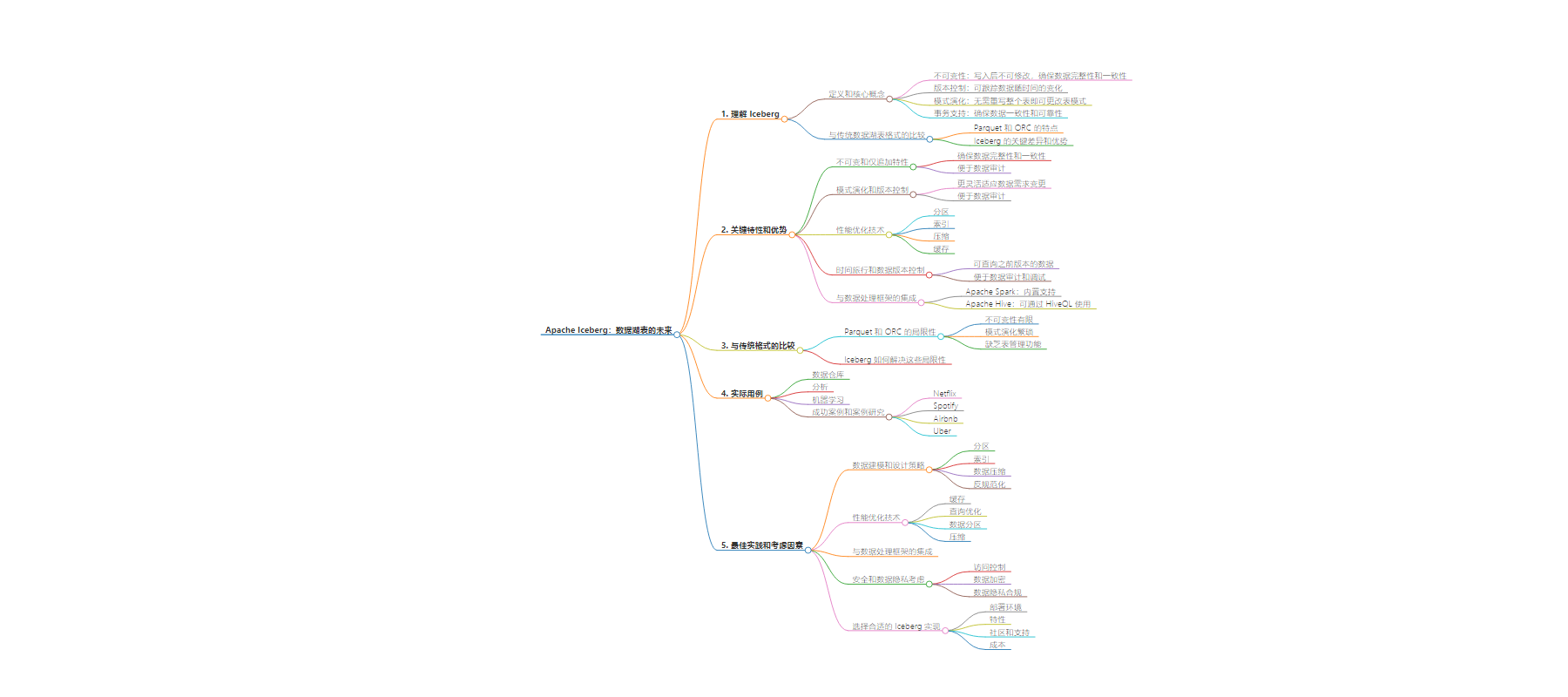

思维导图:

文章地址:https://www.javacodegeeks.com/2024/09/iceberg-the-future-of-data-lake-tables.html

文章来源:javacodegeeks.com

作者:Eleftheria Drosopoulou

发布时间:2024/9/5 9:18

语言:英文

总字数:1507字

预计阅读时间:7分钟

评分:87分

标签:Apache Iceberg,数据湖管理,模式演化,数据完整性,版本控制

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Apache Iceberg has emerged as a revolutionary technology in the realm of data lake management. Its innovative approach to table management offers a host of benefits, making it a compelling choice for modern data analytics and machine learning applications.

This article will delve into the key features, benefits, and practical applications of Iceberg, providing a comprehensive overview for data engineers and analysts seeking to harness its power. We will explore how Iceberg addresses the limitations of traditional data lake table formats, empowering organizations to build scalable, efficient, and reliable data pipelines.

1. Understanding Iceberg

Definition and Core Concepts

Apache Iceberg is a table format designed specifically for data lakes. Unlike traditional data lake table formats, Iceberg offers a more structured and managed approach to data storage and management.

Key concepts of Iceberg:

- Immutability: Iceberg tables are immutable, meaning once data is written to a table, it cannot be modified. This ensures data integrity and consistency.

- Versioning: Iceberg supports versioning, allowing you to track changes to your data over time. This is useful for data auditing and time travel queries.

- Schema evolution: Iceberg allows you to evolve the schema of a table without having to rewrite the entire table. This makes it easier to adapt to changing data requirements.

- Transaction support: Iceberg supports ACID transactions, ensuring data consistency and reliability.

Comparison with Traditional Data Lake Table Formats

Parquet and ORC are two commonly used data lake table formats. While they offer efficient storage and compression, they lack some of the features provided by Iceberg.

| Feature | Iceberg | Parquet | ORC |

|---|---|---|---|

| Immutability | Yes | No | No |

| Versioning | Yes | No | No |

| Schema evolution | Yes | Limited | Limited |

| Transactions | Yes | No | No |

| Table management | Built-in | No | No |

Key differences and advantages of Iceberg:

- Immutability: Iceberg’s immutability ensures data integrity and consistency, making it easier to audit and track changes to your data.

- Versioning: Iceberg’s versioning feature allows you to track changes to your data over time, making it easier to revert to previous versions if needed.

- Schema evolution: Iceberg’s schema evolution capabilities make it easier to adapt to changing data requirements without having to rewrite the entire table.

- Transactions: Iceberg’s support for ACID transactions ensures data consistency and reliability, making it suitable for mission-critical applications.

- Table management: Iceberg provides built-in table management features, such as table partitioning, indexing, and optimization, simplifying data lake management.

2. Key Features and Benefits

Iceberg’s immutable and append-only nature ensures data integrity and consistency. Once data is written to a table, it cannot be modified. New data is added as new files, creating a history of the table’s state over time.

| Feature | Iceberg | Traditional Data Lake Formats |

|---|---|---|

| Immutability | Yes | No |

| Append-only | Yes | No |

| Data integrity | Ensures data integrity | May have issues with data integrity |

| Data auditing | Easy to audit data changes | Can be difficult to audit data changes |

Schema Evolution and Versioning

Iceberg supports schema evolution, allowing you to add or remove columns from a table without having to rewrite the entire table. This makes it easier to adapt to changing data requirements. Additionally, Iceberg’s versioning feature allows you to track changes to your data over time, making it easier to revert to previous versions if needed.

| Feature | Iceberg | Traditional Data Lake Formats |

|---|---|---|

| Schema evolution | Yes | Limited |

| Versioning | Yes | No |

| Flexibility | More flexible | Less flexible |

| Data auditing | Easier to audit data changes | Can be difficult to audit data changes |

Performance Optimization Techniques

Iceberg offers various performance optimization techniques to improve query performance and reduce storage costs. These include:

| Technique | Description |

|---|---|

| Partitioning | Dividing data into smaller partitions based on specific criteria. |

| Indexing | Creating indexes to improve query performance. |

| Compression | Compressing data to reduce storage costs. |

| Caching | Storing frequently accessed data in memory for faster retrieval. |

Time Travel and Data Versioning

Iceberg’s time travel feature allows you to query data from previous versions of a table. This is useful for data auditing, analysis, and debugging.

| Feature | Iceberg | Traditional Data Lake Formats |

|---|---|---|

| Time travel | Yes | No |

| Data versioning | Yes | No |

| Data auditing | Easier to audit data changes | Can be difficult to audit data changes |

| Debugging | Can be used for debugging | Limited debugging capabilities |

Integration with Data Processing Frameworks

Iceberg integrates seamlessly with popular data processing frameworks, such as Apache Spark and Apache Hive. This makes it easy to use Iceberg as a data lake table format in your existing data pipelines.

| Framework | Integration |

|---|---|

| Apache Spark | Built-in support |

| Apache Hive | Can be used with HiveQL |

| Other frameworks | May require custom integrations |

3. Comparison with Traditional Formats

Limitations of Parquet and ORC

Parquet and ORC, while efficient storage formats, have certain limitations:

- Immutability: Parquet and ORC are not inherently immutable, making it difficult to track changes to data over time.

- Schema evolution: While Parquet and ORC support schema evolution to some extent, it can be cumbersome and may require rewriting the entire table.

- Table management: Parquet and ORC lack built-in table management features, making it more challenging to manage data lakes.

How Iceberg Addresses These Limitations

Iceberg addresses the limitations of Parquet and ORC by offering:

- Immutability: Iceberg’s immutable nature ensures data integrity and consistency, making it easier to track changes to data over time.

- Schema evolution: Iceberg’s schema evolution capabilities allow you to add or remove columns from a table without having to rewrite the entire table.

- Table management: Iceberg provides built-in table management features, such as partitioning, indexing, and optimization, simplifying data lake management.

Use Cases for Each Format

- Parquet and ORC:

- Suitable for general-purpose data storage in data lakes.

- Good for batch processing and analytics workloads.

- May be sufficient for simpler data lake use cases.

- Iceberg:

- Ideal for complex data lakes with evolving data requirements.

- Suitable for data warehousing, machine learning, and real-time analytics.

- Provides a more structured and managed approach to data lake management.

4. Practical Use Cases

Data Warehousing

Iceberg is widely used in data warehousing applications due to its ability to handle large datasets, support complex queries, and provide a structured approach to data management. Many organizations have adopted Iceberg to replace traditional data warehouse solutions, such as Teradata and Netezza.

Analytics

Iceberg’s time travel and versioning features make it ideal for analytical workloads. Analysts can use Iceberg to track changes to data over time, compare different versions of data, and perform historical analysis.

Machine Learning

Iceberg is increasingly being used for machine learning applications. Its ability to handle large datasets, support schema evolution, and integrate with popular data processing frameworks makes it a valuable tool for training and deploying machine learning models.

Success Stories and Case Studies

- Netflix: Netflix uses Iceberg to manage its vast dataset of movie and TV show metadata, enabling real-time recommendations and personalized experiences.

- Spotify: Spotify uses Iceberg to store and manage user data, song metadata, and playlist information, supporting its music streaming and recommendation services.

- Airbnb: Airbnb uses Iceberg to manage its data lake, enabling data-driven decision-making and personalization.

- Uber: Uber uses Iceberg to store and manage ride data, driver information, and location data, supporting its real-time ride-hailing platform.

5. Best Practices and Considerations

Data Modeling and Design Strategies

| Strategy | Description |

|---|---|

| Partitioning: | Divide your data into smaller partitions based on specific criteria to improve query performance and scalability. |

| Indexing: | Create indexes on frequently queried columns to improve query performance. |

| Data compression: | Use appropriate compression formats to reduce storage costs and improve query performance. |

| Denormalization: | Denormalize your data to reduce the number of joins required for queries, but be careful not to introduce data redundancy. |

Performance Optimization Techniques

| Technique | Description |

|---|---|

| Caching: | Use caching to store frequently accessed data in memory for faster retrieval. |

| Query optimization: | Optimize your queries to avoid expensive operations like full table scans and nested loops. |

| Data partitioning: | Partition your data to improve query performance and scalability. |

| Compression: | Use appropriate compression formats to reduce storage costs and improve query performance. |

Integration with Data Processing Frameworks

| Framework | Integration |

|---|---|

| Apache Spark | Built-in support |

| Apache Hive | Can be used with HiveQL |

| Apache Flink | Can be used with Flink SQL |

| Other frameworks | May require custom integrations |

Security and Data Privacy Considerations

| Consideration | Description |

|---|---|

| Access controls: | Implement fine-grained access controls to restrict access to sensitive data based on user roles and permissions. |

| Data encryption: | Encrypt sensitive data at rest and in transit to protect it from unauthorized access and disclosure. |

| Data privacy compliance: | Ensure compliance with relevant data privacy regulations, such as GDPR and CCPA. |

Choosing the Right Iceberg Implementation

| Criteria | Factors to Consider |

|---|---|

| Deployment environment: | Consider your deployment environment (on-premises, cloud, hybrid) and choose an implementation that is compatible. |

| Features: | Assess the features offered by different implementations, such as support for specific data processing frameworks, advanced query capabilities, and security features. |

| Community and support: | Evaluate the size and activity of the community surrounding the implementation, as well as the availability of support resources. |

| Cost: | Consider the cost of the implementation, including licensing fees, hardware requirements, and operational costs. |

6. Wrapping Up

Iceberg offers a structured, scalable, and high-performance approach to data lake table management. Its key features, including immutability, schema evolution, versioning, and integration with data processing frameworks, make it ideal for modern data analytics and machine learning applications.