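Baoyue Reading Guide Summary

1. `Amazon MemoryDB`, `Vector Search`, `AWS`, `Generative AI`, `Managed Database`

2. Summary:

AWS has announced the general availability of vector search for Amazon MemoryDB. The feature offers ultra-low latency and the fastest vector search performance among vector databases on AWS, supports a range of generative AI use cases, and works with services such as Amazon Bedrock and Amazon SageMaker. First previewed at re:Invent 2023, it now adds new features and is available for MemoryDB version 7.1 in a single-shard configuration in all regions where the database is offered. The article also surveys the other AWS database services that support vector search.

3. Main points:

– Vector search for Amazon MemoryDB

  – Generally available; MemoryDB is an in-memory database with Multi-AZ availability

  – Ultra-low latency and the fastest performance, with high recall

  – MemoryDB launched in 2021 and is Redis-compatible

– Use cases

  – Generative AI workloads such as RAG, anomaly detection, document retrieval, and real-time recommendations

  – These use cases can be implemented with the existing MemoryDB API

  – Stores millions of vectors with millisecond-level queries

  – New features include VECTOR_RANGE and SCORE

– Comparison with other services

  – AWS has other databases that support vector search

  – All cloud providers have introduced similar capabilities to compete

  – Advice on choosing a database is included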

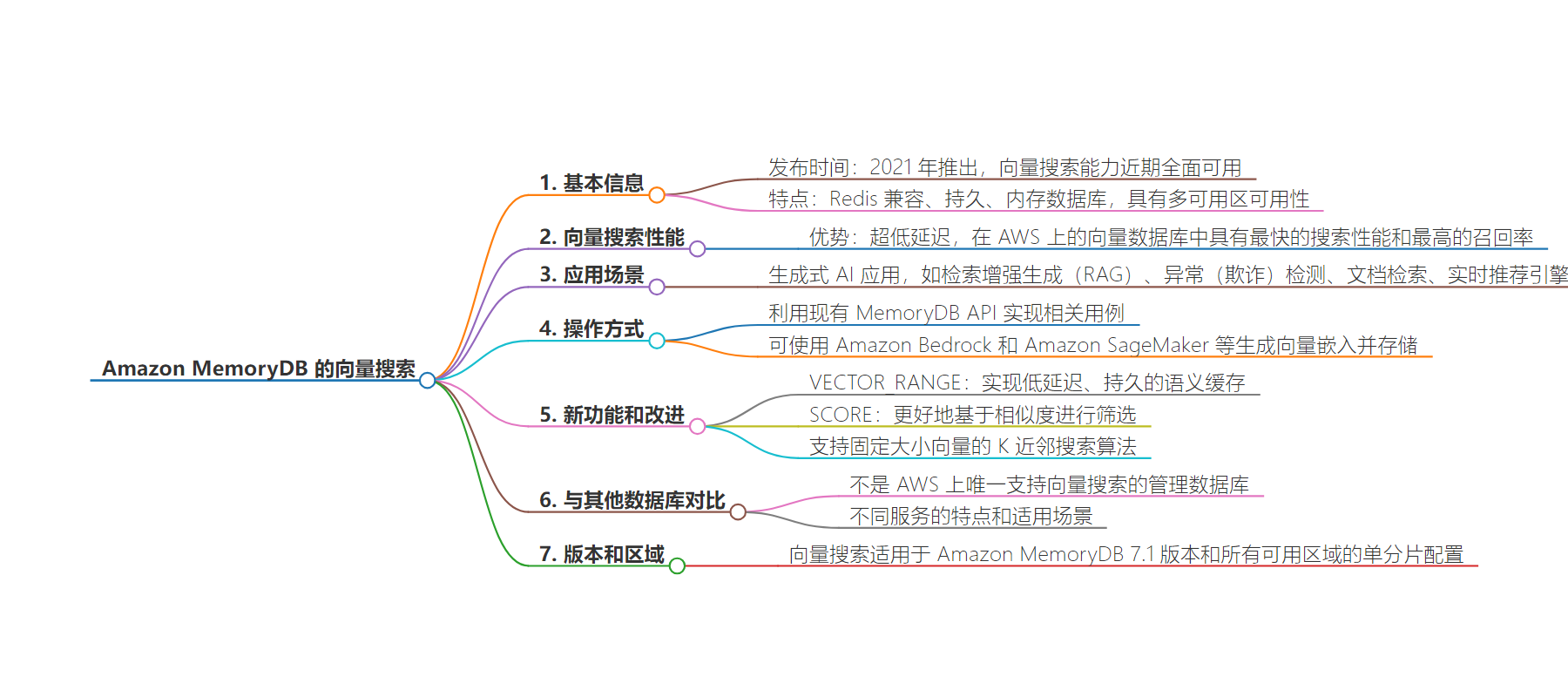

思维导图:

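Source: infoq.com

Author: Renato Losio

Published: 2024/8/11 0:00

Language: English

Word count: 538

Estimated reading time: 3 minutes

Score: 90

Tags: AWS, Amazon MemoryDB, vector search, generative AI, Redis

The original article follows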


AWS recently announced the general availability of vector search for Amazon MemoryDB, the managed in-memory database with Multi-AZ availability. The new capability provides ultra-low latency and the fastest vector search performance at the highest recall rates among vector databases on AWS.

Launched in 2021, Amazon MemoryDB is a Redis-compatible, durable, in-memory database. It is now the recommended managed choice for vector search on AWS in scenarios like generative AI applications where peak performance is the most important selection criterion. Channy Yun, principal developer advocate at AWS, writes:

With vector search for Amazon MemoryDB, you can use the existing MemoryDB API to implement generative AI use cases such as Retrieval Augmented Generation (RAG), anomaly (fraud) detection, document retrieval, and real-time recommendation engines. You can also generate vector embeddings using artificial intelligence and machine learning (AI/ML) services like Amazon Bedrock and Amazon SageMaker and store them within MemoryDB.

Developers can generate vector embeddings using managed services like Amazon Bedrock and SageMaker and store them within MemoryDB for real-time semantic search for RAG, low-latency durable semantic caching, and real-time anomaly detection.
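The retrieval step of RAG described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `toy_embed` is a hypothetical word-count stand-in for an embedding model such as those served by Amazon Bedrock or SageMaker, and `InMemoryVectorStore` is a hypothetical in-process class, not the MemoryDB API.

```python
import math
from collections import Counter

# Hypothetical fixed vocabulary; a real embedding model returns dense vectors.
VOCAB = ["refund", "invoice", "password", "login", "shipping", "delivery"]


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: vocabulary word counts."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in VOCAB]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical store that keeps each document next to its embedding."""

    def __init__(self):
        self._items = []  # list of (doc_id, text, vector)

    def add(self, doc_id, text):
        self._items.append((doc_id, text, toy_embed(text)))

    def top_k(self, query, k):
        """Return the k most similar documents as (score, doc_id, text)."""
        query_vec = toy_embed(query)
        scored = [
            (cosine_similarity(query_vec, vec), doc_id, text)
            for doc_id, text, vec in self._items
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


def build_prompt(store, question, k=2):
    """Prepend the retrieved passages to the question, as RAG does."""
    hits = store.top_k(question, k)
    context = "\n".join(f"- {text}" for _, _, text in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("doc1", "reset your password from the login page")
store.add("doc2", "refund requests need the original invoice")
store.add("doc3", "shipping and delivery take three days")
```

Calling `build_prompt(store, "how do I get a refund for my invoice", k=1)` would place the refund passage in the context that is sent to the language model. In a real deployment the store and the similarity search would live in the database rather than in the application process.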

Vector search for MemoryDB supports storing millions of vectors with single-digit millisecond query and update latencies at the highest throughput levels, with over 99% recall. Yun adds:

With vector search for MemoryDB, you can detect fraud by modeling fraudulent transactions based on your batch ML models, then loading normal and fraudulent transactions into MemoryDB to generate their vector representations through statistical decomposition techniques such as principal component analysis (PCA).

[Figure: fraud detection with vector search for MemoryDB]

Source: AWS blog
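One way to use such stored transaction vectors is a nearest-neighbor vote, sketched below. The quote does not say how a new transaction is scored, so the voting rule is an assumption, as are the function names and the two-dimensional sample vectors, which stand in for the output of a dimensionality reduction step such as PCA.

```python
import math
from collections import Counter


def knn_label(query, labelled_vectors, k=3):
    """Label a vector by majority vote among its k nearest stored neighbors."""
    nearest = sorted(
        labelled_vectors,
        key=lambda item: math.dist(query, item[0]),  # Euclidean distance
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical, already-reduced transaction vectors with known labels.
transactions = [
    ([0.1, 0.2], "normal"),
    ([0.2, 0.1], "normal"),
    ([0.0, 0.3], "normal"),
    ([2.9, 3.1], "fraud"),
    ([3.2, 2.8], "fraud"),
    ([3.0, 3.0], "fraud"),
]

incoming = [3.1, 2.9]
print(knn_label(incoming, transactions))
```

With these sample values the incoming vector sits next to the three fraud examples, so the vote flags it. A production system would tune `k` and the decision rule against labelled history rather than rely on a simple majority.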

The new capability was released in preview at re:Invent 2023 and the recent general availability introduces new features and improvements. These include VECTOR_RANGE, which allows the database to operate as a low-latency, durable semantic cache, and SCORE, which better filters on similarity. Vector fields support K-nearest neighbor searching (KNN) of fixed-sized vectors using the flat search (FLAT) and hierarchical navigable small worlds (HNSW) algorithms.
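To make these terms concrete, here is a minimal pure-Python sketch of an exact (flat) KNN search, a radius-style range query, and the usual way recall is computed for an approximate index. It does not call MemoryDB; the function names and sample data are hypothetical, Euclidean distance is an arbitrary choice, and HNSW itself is not implemented.

```python
import heapq
import math


def flat_knn(index, query, k):
    """Exact search: measure every stored vector, keep the k closest.

    Returns a list of (distance, key) pairs, nearest first.
    """
    return heapq.nsmallest(
        k, ((math.dist(vec, query), key) for key, vec in index.items())
    )


def range_search(index, query, radius):
    """Return every (distance, key) within `radius` of the query, nearest first."""
    hits = []
    for key, vec in index.items():
        distance = math.dist(vec, query)
        if distance <= radius:
            hits.append((distance, key))
    return sorted(hits)


def recall_at_k(approx_keys, exact_keys):
    """Share of the true nearest neighbors that an approximate result found."""
    exact = set(exact_keys)
    if not exact:
        raise ValueError("exact_keys must not be empty")
    return len(exact & set(approx_keys)) / len(exact)


# Hypothetical two-dimensional index; real embeddings have many more dimensions.
index = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [0.0, 2.0], "d": [5.0, 5.0]}
query = [0.1, 0.0]

exact = [key for _, key in flat_knn(index, query, 2)]
print(exact)                           # the two closest keys
print(range_search(index, query, 1.0))  # everything within distance 1.0
print(recall_at_k(["a", "d"], exact))   # an approximate result that missed one
```

A semantic cache follows the range-query pattern: if a new prompt's embedding falls within a chosen radius of a cached prompt, the cached answer is reused, otherwise the model is called. An approximate index such as HNSW trades some recall for speed, and recall is measured against the flat result as shown.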

MemoryDB is not the only managed database on AWS supporting vector search. Among the different services targeting generative AI workloads, OpenSearch, Aurora PostgreSQL, RDS PostgreSQL, Neptune, and DocumentDB have introduced vector-related functionalities in the past year. Vinod Goje, software engineering manager at Bank of America, comments:

I’ve been watching the vector database market, which has been growing rapidly with numerous new products emerging (…) Experts believe the market is becoming overcrowded, making it difficult for new products to stand out amidst the plethora of existing options.

Shayon Sanyal and Graham Kutchek, database specialist solutions architects at AWS, detail the key considerations when choosing a database for generative AI applications. They suggest:

If you’re already using OpenSearch Service, Aurora PostgreSQL, RDS for PostgreSQL, DocumentDB or MemoryDB, leverage their vector search capabilities for your existing data. For graph-based RAG applications, consider Amazon Neptune. If your data is stored in DynamoDB, OpenSearch can be an excellent choice for vector search using zero-ETL integration. If you are still unsure, use OpenSearch Service.

All cloud providers have recently introduced vector search capabilities to compete with vector databases like Pinecone and serverless Momento Cache. For example, InfoQ previously reported on Google BigQuery and Microsoft Vector Search.

Vector search is available for Amazon MemoryDB version 7.1 in a single-shard configuration in all regions where the database is available.