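包阅 Reading Guide Summary

1. Keywords:

– AI development tools, Astro, OpenAI, Waku, Node.js

2. Summary:

A recent Databricks survey found that 70% of companies deploying GenAI use tools and vector databases, with Plotly Dash the most popular tool and LangChain ranked third. Astro 4.14 was released with a Content Layer API that supports large-scale sites. OpenAI launched fine-tuning for GPT-4o and is offering free training tokens. The new version of Waku supports the React server actions API, and Node.js plans to remove Corepack.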

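3. Main content:

– AI development tools survey:

– Databricks surveyed more than 10,000 global customers; 70% of companies deploying GenAI use tools and vector databases to augment base models.

– Plotly Dash is the most popular tool, followed by Hugging Face Transformers, with LangChain in third place.

– Astro 4.14 released:

– Includes experimental releases of the Content Layer API and IntelliSense inside content files.

– The API builds on content collections, loads content more efficiently, and supports large-scale sites.

– OpenAI updates:

– Launched fine-tuning for GPT-4o, which developers can use with custom datasets.

– Offers every organization one million free training tokens per day through September 23.

– Waku supports a new API:

– Waku, a minimalistic React framework, released v0.12 with full support for the React server actions API.

– Node.js plans:

– Members of its PMWG plan to remove Corepack completely, which has drawn controversy.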

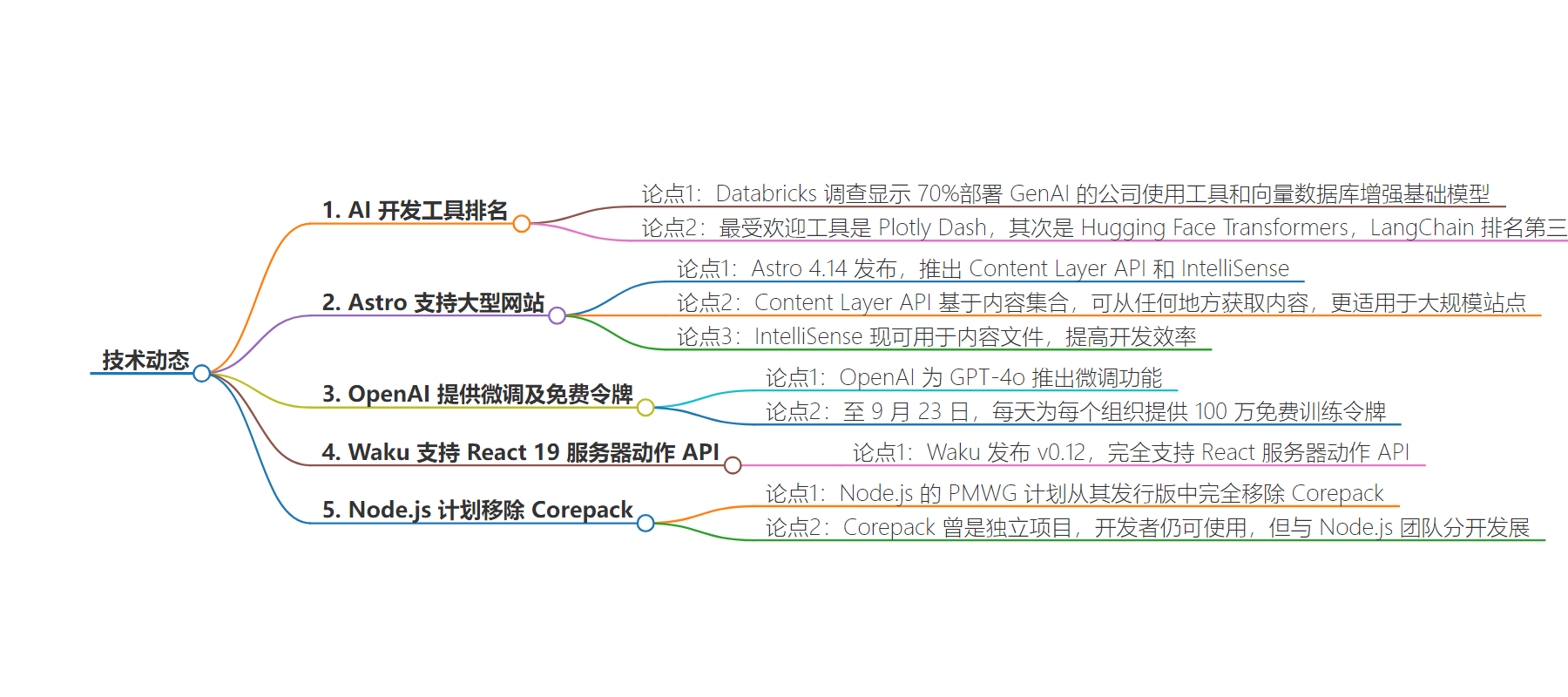

思维导图:

Article URL: https://thenewstack.io/ai-dev-tools-ranked-and-astro-adds-support-for-large-sites/

Source: thenewstack.io

Author: Loraine Lawson

Published: 2024/8/23 16:43

Language: English

Word count: 1,025

Estimated reading time: 5 minutes

Score: 90

Tags: AI development tools, LangChain, Astro, OpenAI, Waku

The original article follows.

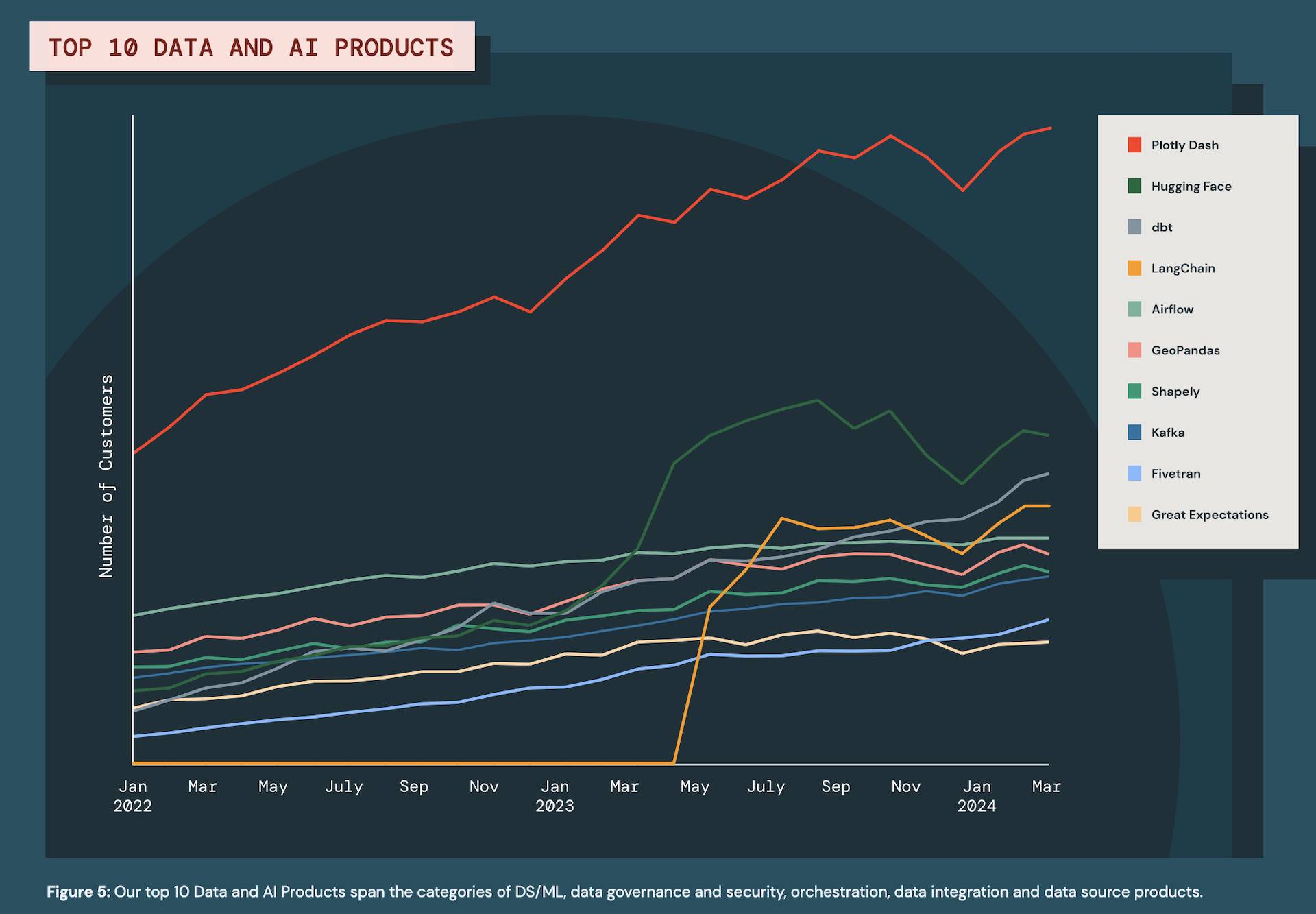

Seventy percent of companies deploying GenAI use tools and vector databases to augment base models, according to a recent survey by Databricks. The company surveyed more than 10,000 global customers using its Databricks Data Intelligence Platform and published the results this month in its State of Data + AI report.

LangChain is one of the most widely used data and AI products, but it’s not the only one — in fact, it ranked third in this survey. The most popular tool is Plotly Dash, a low-code platform that supports building, scaling and deploying data applications.

Graph courtesy of Databricks

It’s followed by Hugging Face Transformers, which rose in the ranks from number four a year ago.

“Many companies use the open source platform’s pretrained transformer models together with their enterprise data to build and fine-tune foundation models,” the report noted about Hugging Face. “This supports a growing trend we’re seeing with RAG applications.”

Finally, the report cites LangChain.

“When companies build their own modern LLM applications and work with specialized transformer-related Python libraries to train the models, LangChain enables them to develop prompt interfaces or integrations to other systems,” the report stated.

Astro Content Layer API Supports Large Scale Sites

Astro 4.14 was released last week with the first experimental releases of the Content Layer API and IntelliSense inside content files.

The Content Layer API builds upon content collections, which were introduced in Astro 2.0 and offer a way to manage local content in Astro projects.

“While this has helped hundreds of thousands of developers build content-driven sites with Astro, we’ve heard your feedback that you want more flexibility and power in managing your content,” the release post stated. “The new Content Layer API builds upon content collections, taking them beyond local files in src/content/ and allowing you to fetch content from anywhere, including remote APIs.”

The new collections will work alongside existing collections, so content can be migrated to the new API at the developer’s own pace.

The driver behind the Content Layer API — which is more performant in how it loads content — is to support scaling Astro for sites with hundreds of thousands of pages, Astro stated.

“It caches content locally to avoid the need to keep hitting APIs and has dramatically improved handling of local Markdown and MDX files,” the release post noted. The post also includes build time and memory comparisons for a site with 10,000 pages built on a MacBook Air M1.
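The idea of caching fetched content so that a remote API is not hit repeatedly can be illustrated with a minimal TypeScript sketch. This is not Astro's Content Layer API: `Entry`, `Source`, `createCachedLoader`, and the fake remote source are hypothetical names chosen for illustration, and the cache here lives only in memory, whereas a real build tool would persist it between builds.

```typescript
// Hypothetical shape of a content entry; not Astro's actual type.
interface Entry {
  id: string;
  title: string;
}

// A source is any async function that returns entries,
// for example a wrapper around a call to a remote API.
type Source = () => Promise<Entry[]>;

// Wraps a source so that it is invoked at most once.
// The first call starts the fetch; every later call reuses the same promise.
// Note: a failed fetch is cached too in this simple sketch.
function createCachedLoader(source: Source): () => Promise<Entry[]> {
  let pending: Promise<Entry[]> | undefined;
  return () => {
    if (pending === undefined) {
      pending = source();
    }
    return pending;
  };
}

// Stand-in for a remote API that counts how often it is hit.
let remoteCalls = 0;
const fakeRemoteSource: Source = async () => {
  remoteCalls += 1;
  return [
    { id: "post-1", title: "Hello" },
    { id: "post-2", title: "World" },
  ];
};

// Calling loadPosts() several times triggers only one remote call.
const loadPosts = createCachedLoader(fakeRemoteSource);
```

Caching the promise rather than the resolved array means that two overlapping calls share one request instead of racing each other.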

IntelliSense, a set of code editing features that help developers code more efficiently, is now available inside content files in Astro. This feature is also experimental.

“This feature helps you write content files more efficiently by providing completions, validation, hover information, and more for frontmatter keys and values,” the post explained. “These tools are based on your content schemas, and available directly in your editor.”

The new release also deprecates support for dynamic prerender values and incorporates a new injectTypes integration API.

“This API allows integrations to inject types into the user’s project, making it easier to provide type definitions for your integration’s features,” the post stated.

OpenAI Offers Fine Tuning, Free Tokens

This week OpenAI launched fine-tuning for GPT-4o, which it noted is one of the most requested features from developers.

“Developers can now fine-tune GPT-4o with custom datasets to get higher performance at a lower cost for their specific use cases,” the company stated. “Fine-tuning enables the model to customize structure and tone of responses, or to follow complex domain-specific instructions.”

This allows programmers to produce strong results for their applications with as few as a dozen examples in their training data set, it noted.

OpenAI is also offering a million training tokens per day for free for every organization through September 23.

The blog post outlines how to get started on any tier level by visiting the fine-tuning dashboard, clicking create, and selecting gpt-4o-2024-08-06 from the base model drop-down.
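As a rough illustration of what a custom dataset can look like, the sketch below serializes chat-style training examples to JSON Lines, one conversation per line, which is the general shape OpenAI's fine-tuning guide describes for chat models. The helper name `toJsonl`, the example conversation, and the rule that every example must end with an assistant message are assumptions of this sketch; check OpenAI's documentation for the exact requirements before uploading a file.

```typescript
// One chat message in a training example.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// One training example: a short conversation ending with the desired reply.
interface TrainingExample {
  messages: ChatMessage[];
}

// Serializes examples as JSON Lines (one JSON object per line).
// Assumption of this sketch: each example must end with an assistant
// message, since that message is the reply the model should learn.
function toJsonl(examples: TrainingExample[]): string {
  const lines: string[] = [];
  examples.forEach((example, index) => {
    const last = example.messages[example.messages.length - 1];
    if (last === undefined || last.role !== "assistant") {
      throw new Error("Example " + index + " must end with an assistant message");
    }
    lines.push(JSON.stringify(example));
  });
  return lines.join("\n");
}

// Hypothetical training data for a support bot with a terse tone.
const examples: TrainingExample[] = [
  {
    messages: [
      { role: "system", content: "You answer in one short sentence." },
      { role: "user", content: "How do I reset my password?" },
      { role: "assistant", content: "Use the Forgot password link on the sign-in page." },
    ],
  },
];

const jsonl = toJsonl(examples);
```

The resulting string can be written to a `.jsonl` file and uploaded as training data through the fine-tuning dashboard mentioned above.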

Waku Supports React 19 Server Actions API

Waku, a minimalistic React framework, released v0.12 this week. It fully supports the React server actions API, including inline server actions created in server components, server actions imported from separate files, and the invocation of server actions via element action props, according to the framework’s blog.

“Server actions allow you to define and securely execute server-side logic directly from your React components without the need for manually setting up API endpoints, sending POST requests to them with fetch, or managing pending states and errors,” the blog noted.
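A minimal sketch of what an inline server action looks like follows. The function name `addEntry` and the in-memory `guestbook` array are hypothetical, and the `"use server"` directive only has an effect when the code is processed by a framework that supports React server actions, such as Waku; in a plain TypeScript runtime it is an inert string.

```typescript
// Hypothetical in-memory store standing in for a database.
const guestbook: string[] = [];

// A server action is an async function marked with the "use server" directive.
// In a supporting framework, a component can call it like a normal function
// and the framework runs it on the server, without a hand-written API endpoint.
async function addEntry(message: string): Promise<number> {
  "use server";
  const trimmed = message.trim();
  if (trimmed.length === 0) {
    throw new Error("Message must not be empty");
  }
  guestbook.push(trimmed);
  // Return the new number of entries so the caller can update its UI.
  return guestbook.length;
}
```

An action passed to a form's `action` prop receives the submitted form data as its argument instead of a plain string, so a form-based variant would read the message from that object.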

Node.js to Remove Corepack

Node.js’ PMWG (Package Maintenance Working Group) members were originally discussing enabling Corepack by default when the conversation did a 180-degree turn — now the group plans to remove Corepack completely from its distribution, according to Sarah Gooding, head of content marketing at developer security platform Socket.

Node.js is an open source, cross-platform JavaScript runtime environment that executes JavaScript code outside of a web browser. Corepack is a Node.js tool that helps manage package managers such as npm, Yarn, and pnpm within Node.js projects.
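Corepack decides which package manager a project expects by reading the `packageManager` field in `package.json`, which has the form `name@version` with an optional `+hash` suffix. The sketch below parses such a value. It is an illustration only, not Corepack's implementation; `PackageManagerSpec` and `parsePackageManager` are hypothetical names, and the version numbers are made-up sample values.

```typescript
// Parsed form of a packageManager value such as "pnpm@9.7.0".
interface PackageManagerSpec {
  name: string;
  version: string;
  hash?: string; // optional integrity suffix, e.g. "sha224.abc123"
}

// Splits "name@version" or "name@version+hash" into its parts.
// Throws if the name or version is missing.
function parsePackageManager(field: string): PackageManagerSpec {
  const at = field.lastIndexOf("@");
  if (at <= 0 || at === field.length - 1) {
    throw new Error("Expected the form name@version, got: " + field);
  }
  const name = field.slice(0, at);
  const rest = field.slice(at + 1);
  const plus = rest.indexOf("+");
  if (plus === -1) {
    return { name: name, version: rest };
  }
  if (plus === 0) {
    throw new Error("Missing version in: " + field);
  }
  return { name: name, version: rest.slice(0, plus), hash: rest.slice(plus + 1) };
}

// Sample values as they might appear in a package.json file.
const pnpmSpec = parsePackageManager("pnpm@9.7.0");
const yarnSpec = parsePackageManager("yarn@3.2.3+sha224.abc123");
```

Pinning the package manager this way lets every contributor and CI job use the same tool and version, which is the main convenience Corepack users cite.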

The roadmap for removal is on GitHub.

Not everyone is happy about the decision. Those who are happily using Corepack see its removal as a step backward. The blog post quotes one developer who said npm has become unusable, noting that it’s slow, gives confusing error messages, and is sometimes just plain wrong.

Gooding explained that Corepack actually existed as a separate project from Node.js previously. Developers can still use it, but the team thought it should evolve separately from Node.js. This is apparently in part because the Corepack maintainers were not working with the team to develop it within Node.js.

Gooding quotes software engineer Jordan Harband, who said that for the better part of a decade, Yarn and Corepack maintainers have usually not attended the meetings where decisions are made, prioritizing collaboration on GitHub or Twitter instead.
