Baoyue Reading Guide Summary

1. Keywords: Kolmogorov–Arnold Networks, Interpretable, Neural Network, Physics Modeling, Functions

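2. Summary: University researchers have created KAN, a new type of interpretable neural network inspired by the Kolmogorov-Arnold representation theorem. It performs well on physics modeling tasks, achieving higher accuracy with fewer parameters, and it can be visualized and edited. However, it is harder to train than traditional neural networks. The source code has been published on GitHub.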

3. Main content:

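– Researchers create a new type of neural network, KAN
  – From MIT, Caltech, and Northeastern University

– Features of KAN
  – Inspired by the Kolmogorov-Arnold representation theorem
  – Learns an activation function for each input and sums the outputs
  – Outperforms perceptron-based models
  – Higher accuracy with fewer parameters
  – Visualization helps users discover symbolic formulas

– Structure and advantages of KAN
  – Similar to an MLP, but learns spline functions instead of weights
  – Can learn features of the data and optimize them
  – Follows the same scaling laws as MLPs

– Interactivity of KAN
  – Provides an interface that lets users interpret and edit the model
  – Functions can be simplified or replaced

– Comparison with traditional neural networks
  – KANs are trickier to train, needing more hyperparameter tuning and extra tricks
  – Traditional neural networks are easier to train and work under a broader range of conditions

– The KAN source code is available on GitHub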

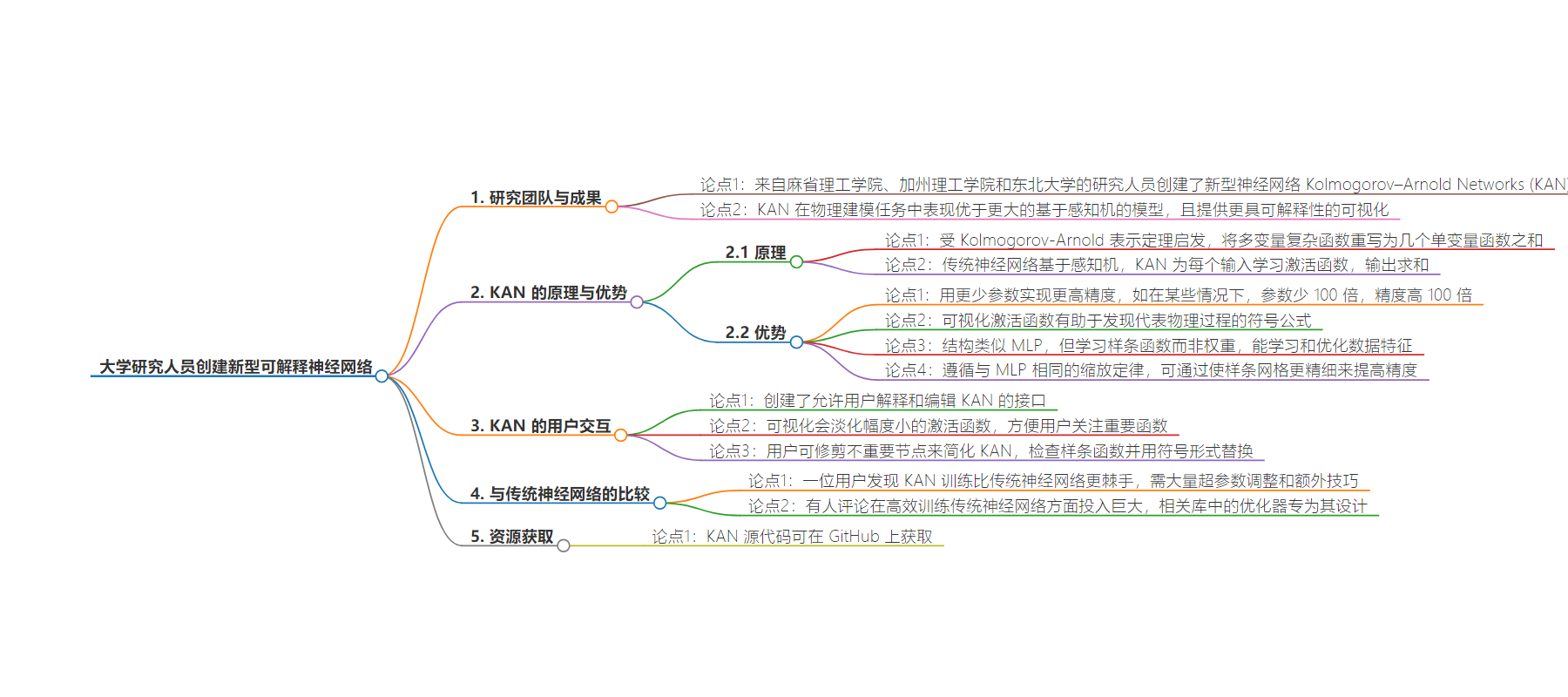


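Source: infoq.com

Author: Anthony Alford

Published: 2024/8/20 0:00

Language: English

Word count: 535

Estimated reading time: 3 minutes

Rating: 84

Tags: neural networks, interpretable AI, physics modeling, Kolmogorov-Arnold networks, machine learning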

The original article follows:


Researchers from Massachusetts Institute of Technology, California Institute of Technology, and Northeastern University created a new type of neural network: Kolmogorov–Arnold Networks (KAN). KAN models outperform larger perceptron-based models on physics modeling tasks and provide a more interpretable visualization.

KANs were inspired by the Kolmogorov-Arnold representation theorem. The theorem states that any continuous function of multiple variables can be rewritten using sums and compositions of continuous functions of a single variable. Today's neural networks are based on the perceptron, which learns a set of weights used to create a linear combination of its inputs that is passed to an activation function. KANs instead learn an activation function for each input, and the outputs of those functions are summed. The researchers compared the performance of KANs with that of traditional multilayer perceptron (MLP) neural networks on several modeling problems in physics and mathematics. They found that KANs achieved better accuracy with fewer parameters; in some cases, they achieved 100x better accuracy with 100x fewer parameters. The researchers also showed that visualizing a KAN's activation functions helped users discover symbolic formulas that could represent the physical process being modeled. According to the research team:
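The theorem and the perceptron-versus-KAN contrast above can be written in symbols. The notation is illustrative. The first line is the standard statement of the Kolmogorov-Arnold theorem for a continuous f on [0,1]^n. The last line simplifies a KAN layer by leaving out how each edge function φ_ji is parameterized.

```latex
% Kolmogorov-Arnold representation theorem, for continuous f : [0,1]^n -> R
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)

% One MLP (perceptron) layer: learned weights w_{ji}, bias b_j, fixed activation \sigma
y_j = \sigma\left( \sum_{i} w_{ji} \, x_i + b_j \right)

% One KAN layer: a learned univariate function \phi_{ji} on every edge, outputs summed
y_j = \sum_{i} \phi_{ji}(x_i)
```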

The reason why large language models are so transformative is because they are useful to anyone who can speak natural language. The language of science is functions. KANs are composed of interpretable functions, so when a human user [works with] a KAN, it is like communicating with it using the language of functions.

KANs have a structure similar to MLPs, but instead of learning a weight for each input, they learn a spline function. The research team showed that, thanks to their layered structure, KANs can learn features in the data. Thanks to the splines, they can "also optimize these learned features to great accuracy." The team also showed that KANs follow the same scaling laws as MLPs, in which accuracy improves as the parameter count increases. They found that they could increase a trained KAN's number of parameters, and thus its accuracy, "by simply making its spline grids finer."
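Here is a minimal pure-Python sketch of one KAN layer in which every edge carries its own learnable univariate function. It is not the researchers' implementation, and all class and function names are hypothetical. Each edge uses a piecewise-linear spline (a degree-1 B-spline) for brevity, rather than the cubic B-splines the KAN paper uses by default. Training is replaced by simple interpolation of a target function. The `refine` method illustrates the "finer spline grids" idea: each spline is resampled onto a denser grid, which adds parameters without changing what the layer computes.

```python
import math
from typing import Callable, List, Optional, Sequence


class LinearSpline:
    """A learnable univariate function: a piecewise-linear spline on a uniform grid.

    Simplification (assumption): degree-1 B-splines (hat functions), so the
    coefficient at each knot is simply the function's value at that knot.
    """

    def __init__(self, lo: float, hi: float, num_intervals: int,
                 coeffs: Optional[Sequence[float]] = None) -> None:
        self.lo, self.hi, self.n = lo, hi, num_intervals
        self.knots = [lo + (hi - lo) * k / num_intervals for k in range(num_intervals + 1)]
        # One trainable coefficient per knot; all zeros gives a flat function.
        self.coeffs = list(coeffs) if coeffs is not None else [0.0] * (num_intervals + 1)

    def __call__(self, x: float) -> float:
        # Clamp to the grid range, then interpolate between the two neighbouring knots.
        x = min(max(x, self.lo), self.hi)
        t = (x - self.lo) / (self.hi - self.lo) * self.n
        i = min(int(t), self.n - 1)
        frac = t - i
        return (1.0 - frac) * self.coeffs[i] + frac * self.coeffs[i + 1]

    def refined(self, factor: int) -> "LinearSpline":
        # Grid refinement: sample this spline on a grid `factor` times finer.
        # The new spline represents the same function but has more coefficients
        # that further training could adjust.
        fine = LinearSpline(self.lo, self.hi, self.n * factor)
        fine.coeffs = [self(k) for k in fine.knots]
        return fine


class KANLayer:
    """y_j = sum_i phi_ji(x_i): one learnable spline per (input i, output j) edge."""

    def __init__(self, n_in: int, n_out: int, lo: float = -1.0, hi: float = 1.0,
                 num_intervals: int = 5) -> None:
        self.phi: List[List[LinearSpline]] = [
            [LinearSpline(lo, hi, num_intervals) for _ in range(n_in)] for _ in range(n_out)
        ]

    def __call__(self, x: Sequence[float]) -> List[float]:
        # No weight matrix: each edge applies its own function, then outputs are summed.
        return [sum(phi(xi) for phi, xi in zip(row, x)) for row in self.phi]

    def num_params(self) -> int:
        return sum(len(phi.coeffs) for row in self.phi for phi in row)

    def refine(self, factor: int) -> None:
        # Replace every edge spline with a finer-grid copy of itself.
        self.phi = [[phi.refined(factor) for phi in row] for row in self.phi]


def fit_by_interpolation(spline: LinearSpline, target: Callable[[float], float]) -> None:
    """Hypothetical stand-in for gradient training: set each coefficient to target(knot)."""
    spline.coeffs = [target(k) for k in spline.knots]
```

For example, `KANLayer(2, 1)` with the default 5-interval grid has 2 × 6 = 12 coefficients. After `refine(4)` it has 2 × 21 = 42 coefficients and still produces the same outputs. Fitting a spline on the finer grid reduces its approximation error. This mirrors the effect the team described, although the toy does not reproduce their results.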

The researchers created an interface that allows human users to interpret and edit the KAN. The visualization will "fade out" activation functions with small magnitudes, allowing users to focus on the important functions. Users can simplify the KAN by pruning unimportant nodes. They can also examine the spline functions and, if desired, replace them with symbolic forms, such as trigonometric or logarithmic functions.
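The pruning and symbolic-replacement steps can be sketched in plain Python. This is a minimal illustration, not the researchers' interface. The function names (`l1_norm`, `keep_hidden_nodes`, `fit_symbolic`) are hypothetical, and the rules are assumptions based on a reading of the KAN paper:

– An activation's magnitude is its mean absolute output over sample inputs.
– A hidden node is kept only if its strongest incoming and strongest outgoing edges both exceed a threshold (0.01 here).
– A spline is replaced by whichever candidate `c * f(a * x + b) + d` best fits its sampled values.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Fn = Callable[[float], float]


def l1_norm(phi: Fn, xs: Sequence[float]) -> float:
    """Magnitude score of one activation function: mean |phi(x)| over sample inputs."""
    return sum(abs(phi(x)) for x in xs) / len(xs)


def keep_hidden_nodes(in_scores: Sequence[Sequence[float]],
                      out_scores: Sequence[Sequence[float]],
                      threshold: float = 1e-2) -> List[int]:
    """Return indices of hidden nodes to keep (assumed pruning rule).

    in_scores[i]  holds the L1 norms of the edges feeding node i;
    out_scores[i] holds the L1 norms of the edges leaving node i.
    A node survives only if its strongest incoming AND strongest outgoing
    edge both exceed the threshold; every other node is pruned.
    """
    return [i for i, (ins, outs) in enumerate(zip(in_scores, out_scores))
            if max(ins) > threshold and max(outs) > threshold]


def _linear_fit(us: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of ys ~ c * us + d; returns (c, d)."""
    n = len(us)
    mu, my = sum(us) / n, sum(ys) / n
    suu = sum((u - mu) ** 2 for u in us)
    if suu < 1e-12:  # constant feature: the best fit is just the mean
        return 0.0, my
    c = sum((u - mu) * (y - my) for u, y in zip(us, ys)) / suu
    return c, my - c * mu


def fit_symbolic(xs: Sequence[float], ys: Sequence[float], candidates: Dict[str, Fn],
                 a_grid: Sequence[float], b_grid: Sequence[float]
                 ) -> Optional[Tuple[float, str, float, float, float, float]]:
    """Pick the candidate f minimising the squared error of y ~ c * f(a * x + b) + d.

    Grid search over (a, b), closed-form least squares for (c, d).
    Returns (sse, name, a, b, c, d), or None if no candidate could be evaluated.
    """
    best = None
    for name, f in candidates.items():
        for a in a_grid:
            for b in b_grid:
                try:
                    us = [f(a * x + b) for x in xs]
                except (ValueError, OverflowError):  # e.g. log of a negative number
                    continue
                c, d = _linear_fit(us, ys)
                sse = sum((c * u + d - y) ** 2 for u, y in zip(us, ys))
                if best is None or sse < best[0]:
                    best = (sse, name, a, b, c, d)
    return best
```

For instance, suppose a learned edge produces samples of 2 sin(3x + 0.5) + 1. The search matches it to the `sin` candidate with near-zero error, provided the grids contain a = 3 and b = 0.5. A grid search keeps the sketch short. When the true parameters fall between grid points, a finer search over (a, b) would be needed.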

In a Hacker News discussion about KANs, one user shared his own experience comparing KANs to traditional neural networks (NN):

My main finding was that KANs are very tricky to train compared to NNs. It’s usually possible to get per-parameter loss roughly on par with NNs, but it requires a lot of hyperparameter tuning and extra tricks in the KAN architecture. In comparison, vanilla NNs were much easier to train and worked well under a much broader set of conditions. Some people commented that we’ve invested an incredible amount of effort into getting really good at training NNs efficiently, and many of the things in ML libraries (optimizers like Adam, for example) are designed and optimized specifically for NNs. For that reason, it’s not really a good apples-to-apples comparison.

The KAN source code is available on GitHub.