Baoyue Reading Guide Summary

1.

– `GitHub Models`

– `Microsoft`

– `Generative AI`

– `Azure`

– `Developers`

2.

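GitHub Models is a new developer-facing platform from Microsoft. It offers pre-trained generative AI models, with features such as model discovery, model testing, and GitHub integration. It aims to give generative AI developers a convenient path to production, is compatible with the Azure AI SDK, and is Microsoft's latest attempt to attract developers to Azure.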

3.

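– Introducing GitHub Models

– A new addition to the GitHub Marketplace that makes it easy for developers to use the latest generative AI models.

– Pre-trained generative AI models that can be used for a variety of tasks.

– Key features include model discovery, model testing, and integration with GitHub.

– Why it was launched

– Innovation in generative AI is moving fast, and developers struggle to get access to models.

– Existing platforms fall short: models are hard to obtain and use, and they demand substantial compute resources.

– Advantages

– Gives generative AI developers a convenient path to production.

– Tightly integrated with the GitHub CLI and Codespaces.

– Compatible with the Azure AI SDK, easing the move from prototype to production.

– Summary

– Microsoft's latest attempt to attract developers to Azure.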

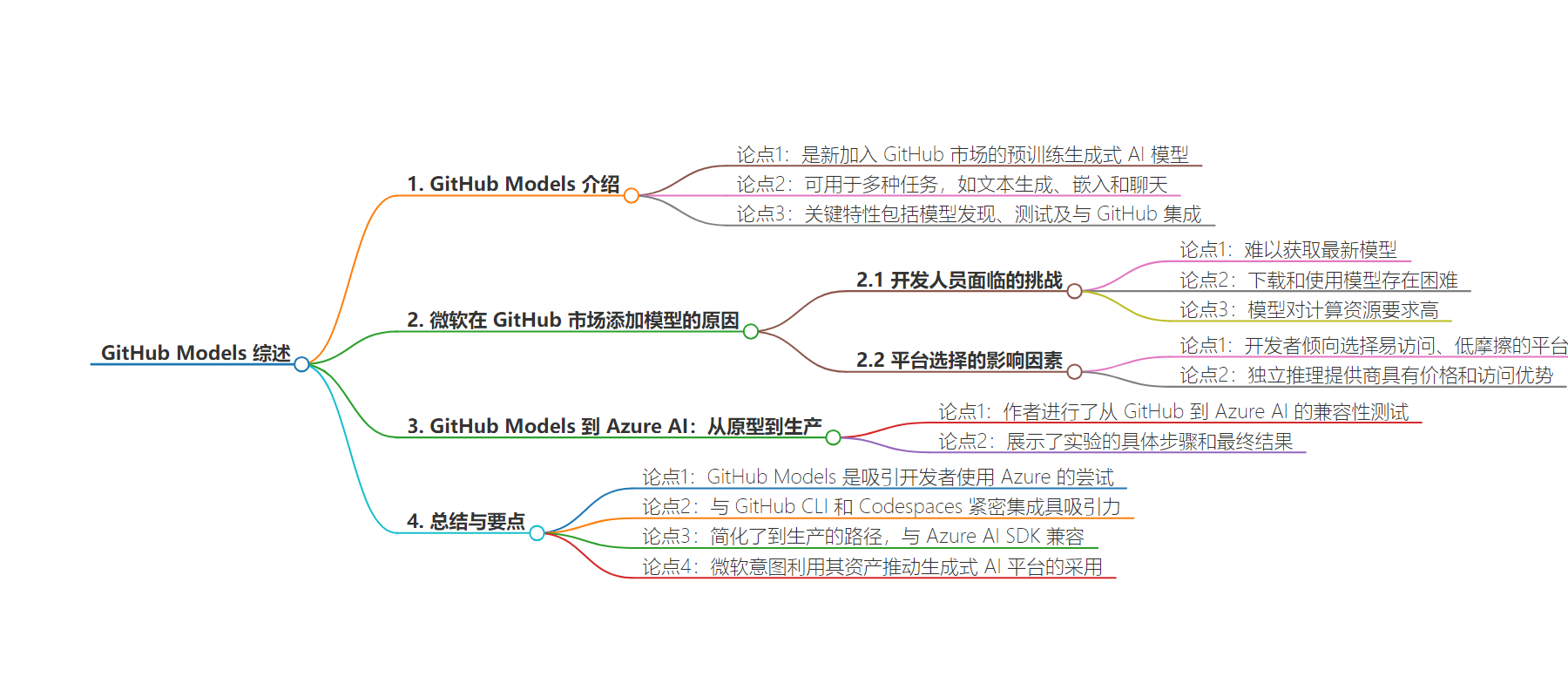

思维导图:

Article URL: https://thenewstack.io/github-models-review-of-microsofts-new-ai-engineer-platform/

Source: thenewstack.io

Author: Janakiram MSV

Published: 2024/8/8 13:55

Language: English

Word count: 1173

Estimated reading time: 5 minutes

Rating: 82

Tags: GitHub Models platform, generative AI, Microsoft, Azure AI, AI development

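The original article follows.

This content was reposted on a user's recommendation to share knowledge and views. In case of infringement, please contact media@ilingban.com for removal.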

Last week, GitHub announced the launch of GitHub Models, a new addition to its existing Marketplace that makes it easy for developers to consume the latest generative AI models.

I got early access to GitHub Models, and here is my review and analysis of the offering.

What Are GitHub Models?

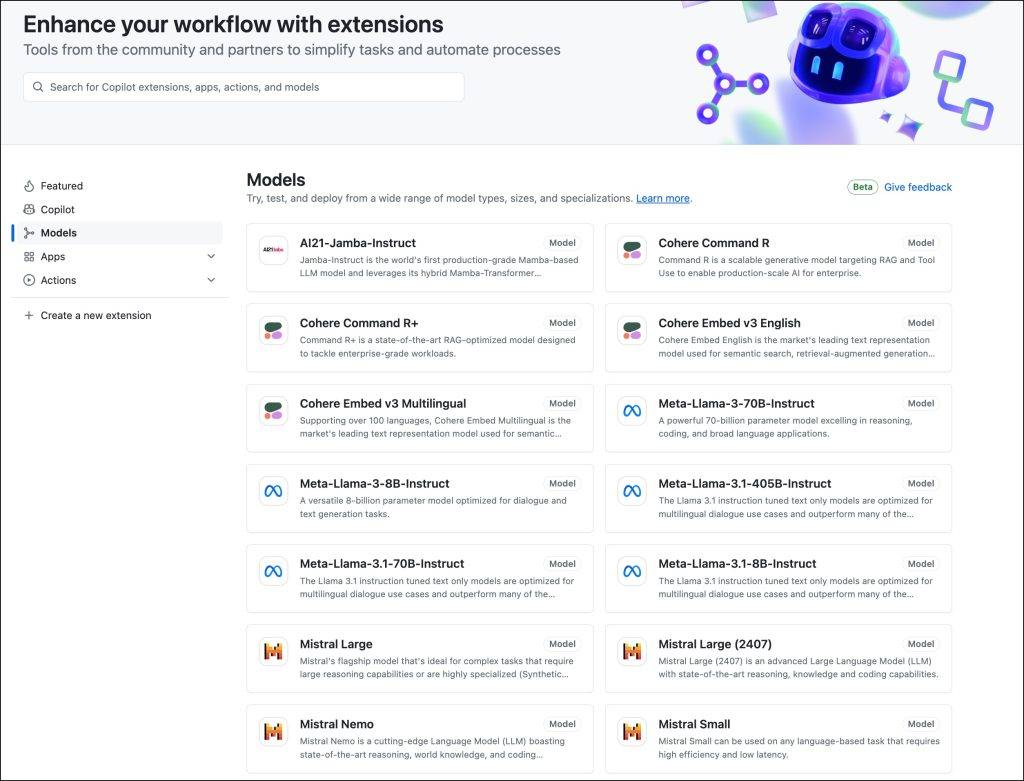

GitHub Models are pre-trained generative AI models that can be used for a variety of tasks, such as text generation, embeddings, and chat. These models are made available through the GitHub Marketplace, allowing developers to browse, search, and select models that fit their specific needs.

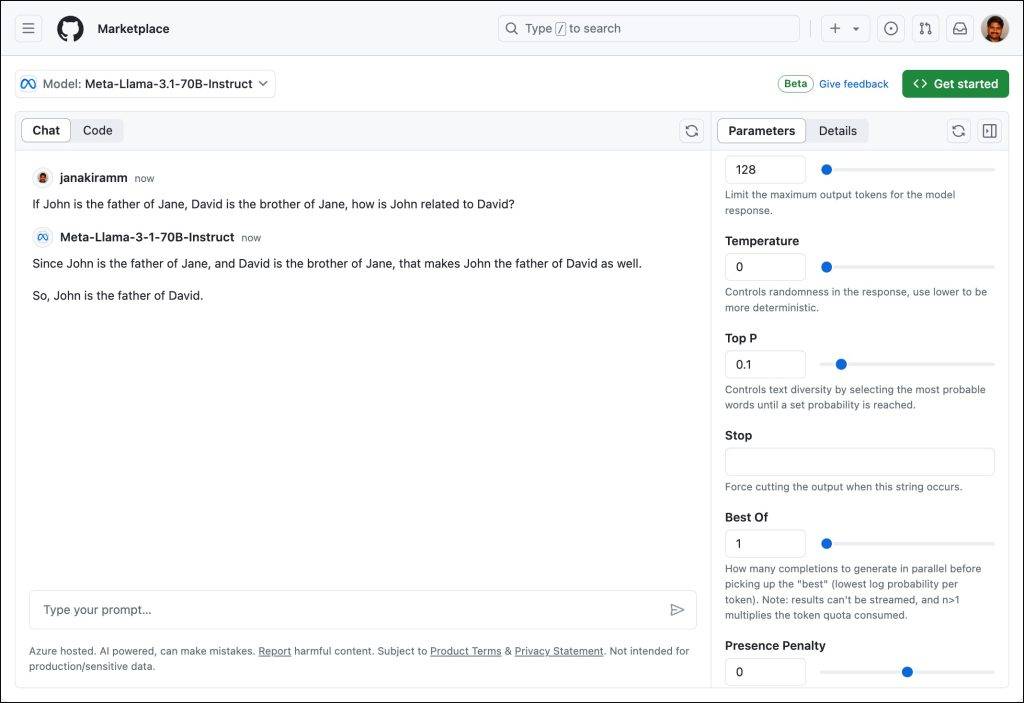

GitHub Models has three key features. Model discovery lets developers find and explore AI models through the GitHub Marketplace, with filters for model type, size, and specialization. Model testing lets you experiment with models in the built-in playground, adjusting parameters and submitting prompts to see how the model responds. Finally, GitHub integration connects the models to GitHub repositories, the CLI, and Codespaces, allowing developers to automate their workflows and collaborate more effectively.

Why Microsoft Added Models to the GitHub Marketplace

No week goes by without the announcement of a new large language model. Innovation in the generative AI domain is accelerating at breakneck speed. It’s becoming tough for developers to keep up with the rapid pace of development.

One of the challenges is access to the latest and greatest models launched by companies such as Meta and Mistral. Though Hugging Face has emerged as the de facto platform for publishing AI models, downloading and consuming them is still a challenge for many developers. Most of these models demand high-end compute resources that are not easily available. This has led many developers to rely on inference-as-a-service platforms such as Runpod, Replicate, Groq, Fireworks AI, and Perplexity Labs to quickly build applications that talk to the models. However, inference providers sometimes take a while to add a new model to their catalogs.


On the other hand, model providers such as Anthropic, AI21 Labs, Cohere, Google DeepMind, and Meta are collaborating with cloud providers to make their models available through cloud-based managed offerings. For example, as soon as Meta announced the Llama 3 herd of models, they became available in Amazon Bedrock, Azure AI, and Google Vertex AI. The model providers are also working closely with independent inference providers like Groq and Fireworks AI to publish their models.

When developers have a choice, they always choose platforms that are accessible and have less friction. Compared to the generative AI platforms of the public clouds, independent inference providers are cheap and easily accessible: they offer a generous free tier and an API that is compatible with the popular OpenAI SDK. These factors are drawing developers away from the cloud platforms and toward this new breed of inference platforms, not only for early prototypes but even for production-grade applications.
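To make "compatible with the OpenAI SDK" concrete: such providers accept the same chat-completions request shape as OpenAI's API, so switching providers is mostly a matter of changing the base URL, key, and model id. The sketch below builds (but does not send) such a request using only the standard library. The base URL, key, and model id in the usage are hypothetical placeholders; each provider documents its own values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str, temperature: float = 0.7) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        # OpenAI-compatible providers typically expose POST {base_url}/chat/completions
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # bearer-token auth, as in OpenAI's API
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

For example, `build_chat_request("https://inference.example.com/v1", "sk-test", "example-model", "Hello")` yields a request that `urllib.request.urlopen` could send; pointing it at another compatible provider changes only the arguments, not the code.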

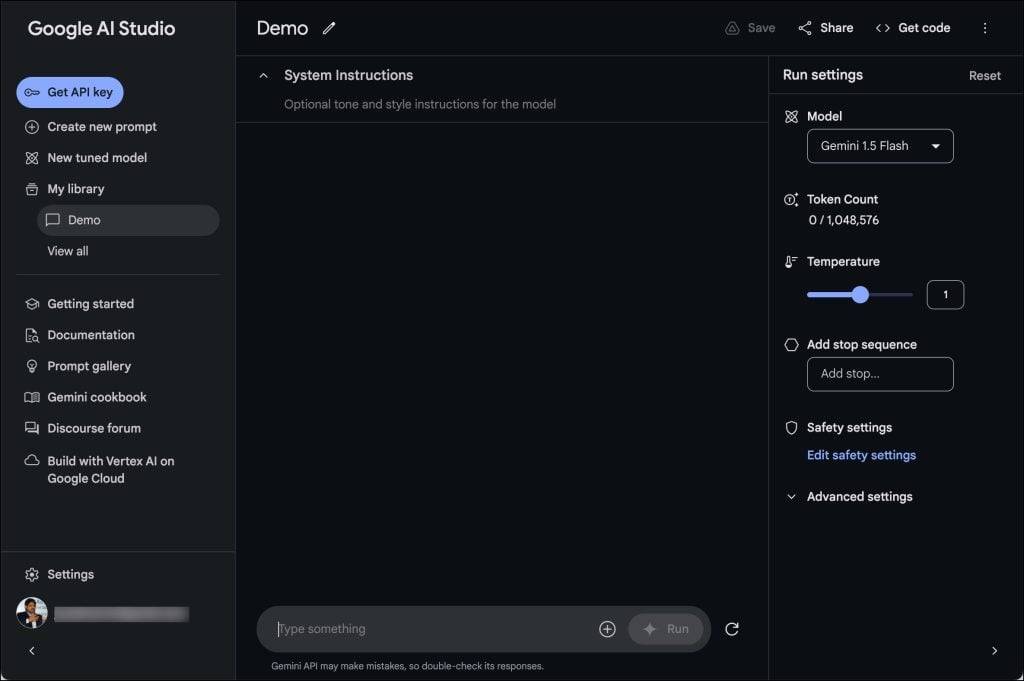

Google realized this early and started to offer the Gemini family of models through Google AI Studio. Accessing models through AI Studio is much simpler than using Google Cloud's managed AI platform, Vertex AI. But Google still has work to do to bring AI Studio and Vertex AI to parity. Currently, developers must deal with disparate SDKs and APIs, which makes it hard to move from AI Studio to Vertex AI.

When it comes to Azure, a developer faces a long, complex workflow before writing their first prompt targeting an LLM. To deploy a model, you must first create a resource group and an Azure AI Studio instance, then configure a project for the deployment. Most importantly, this path is open only to developers who have an active Azure subscription.


This is exactly what Microsoft wants to target with GitHub Models: provide an on-ramp and a happy path to production for generative AI developers. Developers can start experimenting with GitHub Models and seamlessly move to Azure AI for production. Because both use the same, 100% compatible SDK, moving from prototype to production takes changing only a couple of lines.

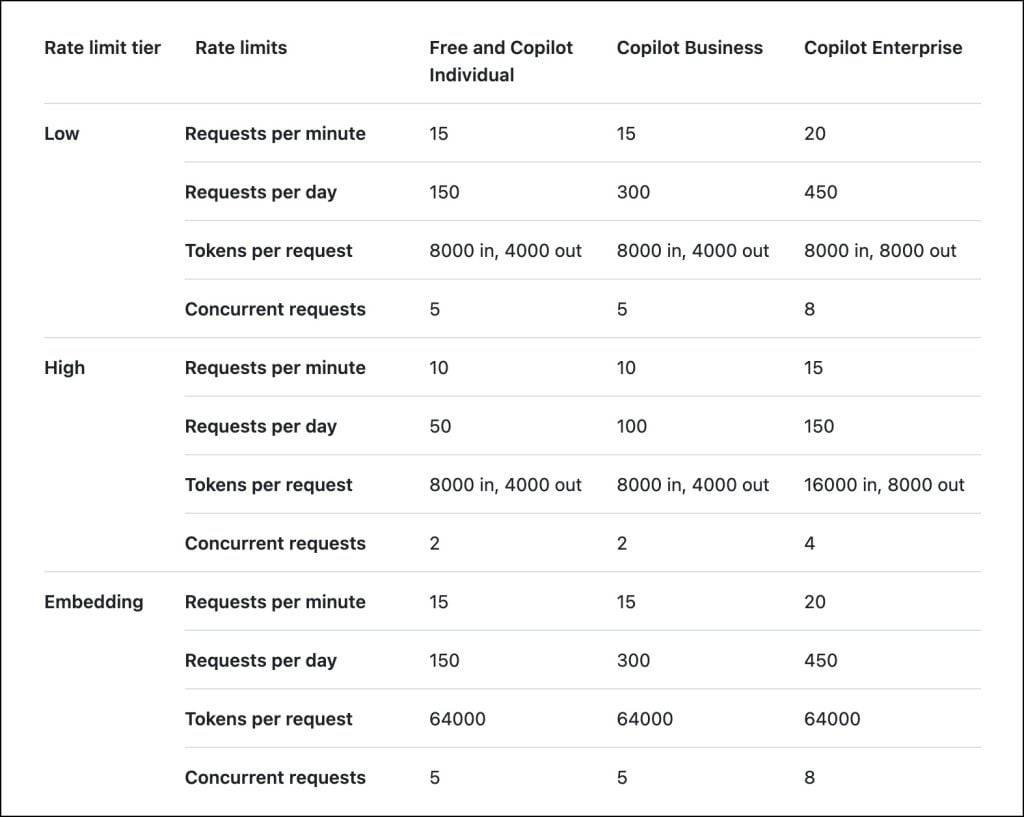

The GitHub Models platform has generous rate limits for developers on free and Copilot subscriptions. These limits are sufficient for experimentation and for building prototypes based on RAG and AI assistant use cases.

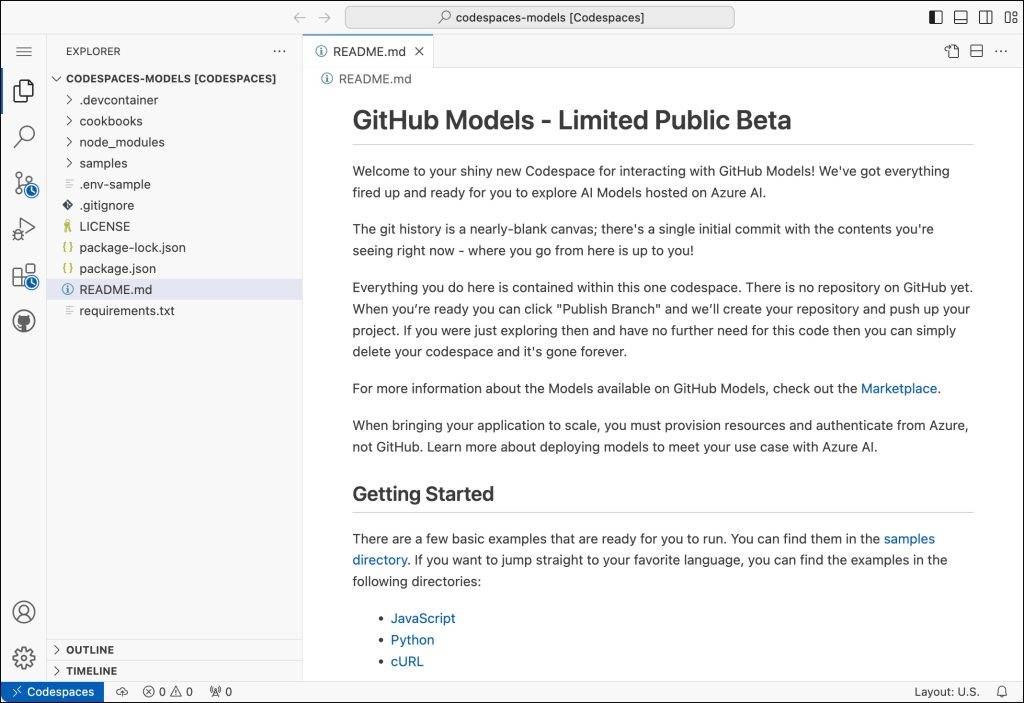

The tight integration with Codespaces is another advantage developers get when using GitHub Models. They can quickly copy and paste the code from the Playground to start building applications. The ability to access a model, experiment with it, and export the code to an editor without leaving the browser tab is one of the key advantages of GitHub Models.

GitHub Models to Azure AI: Prototype to Production

As soon as I got access to the limited beta of GitHub Models, the first thing I tested was SDK compatibility: how easily code written against GitHub Models moves to Azure AI.

My goal was to access Llama 3.1 70B on GitHub Models, experiment with it, and then use the same code to target the Llama 3.1 70B model deployment running in Azure AI.

Here are the high-level steps from this experiment:

First, I accessed the model, tried a few prompts and changed some parameters, like temperature.

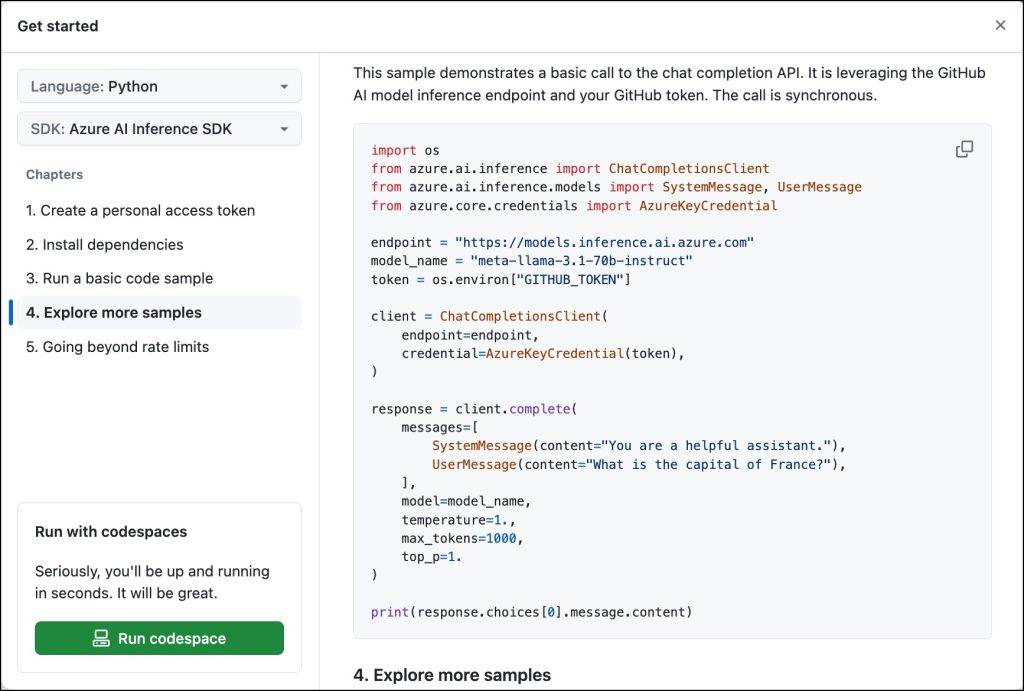

I selected the “Get started” button and copied the playground’s generated Python code.
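The playground's Python code is built on the `azure-ai-inference` package. The block below is a reconstruction of that kind of sample, not a copy of the author's code: the endpoint `https://models.inference.ai.azure.com` and the model id `Meta-Llama-3.1-70B-Instruct` are the values GitHub Models' launch-era samples used, so treat them as assumptions and prefer whatever your own playground emits. `ask` is a hypothetical helper name; its `temperature` argument is the same parameter adjusted in the playground.

```python
import os

# Assumed values from launch-era GitHub Models samples; check your playground's code.
GITHUB_MODELS_ENDPOINT = "https://models.inference.ai.azure.com"
MODEL_NAME = "Meta-Llama-3.1-70B-Instruct"


def ask(prompt: str, temperature: float = 0.7) -> str:
    """Send one prompt to the model and return the reply text."""
    # Imported lazily so this module still loads where the SDK is not installed.
    from azure.ai.inference import ChatCompletionsClient
    from azure.ai.inference.models import SystemMessage, UserMessage
    from azure.core.credentials import AzureKeyCredential

    client = ChatCompletionsClient(
        endpoint=GITHUB_MODELS_ENDPOINT,
        credential=AzureKeyCredential(os.environ["GITHUB_TOKEN"]),  # GitHub token as the key
    )
    response = client.complete(
        messages=[
            SystemMessage(content="You are a helpful assistant."),
            UserMessage(content=prompt),
        ],
        model=MODEL_NAME,
        temperature=temperature,  # the knob tuned in the playground
        max_tokens=500,
    )
    return response.choices[0].message.content
```

Calling `ask("What is the capital of France?")` needs network access, the installed SDK, and a valid `GITHUB_TOKEN`.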

Since I wanted to run this in GitHub Codespaces, I clicked the “Run codespace” button. Within a few minutes, a codespace based on a sample repo was ready.

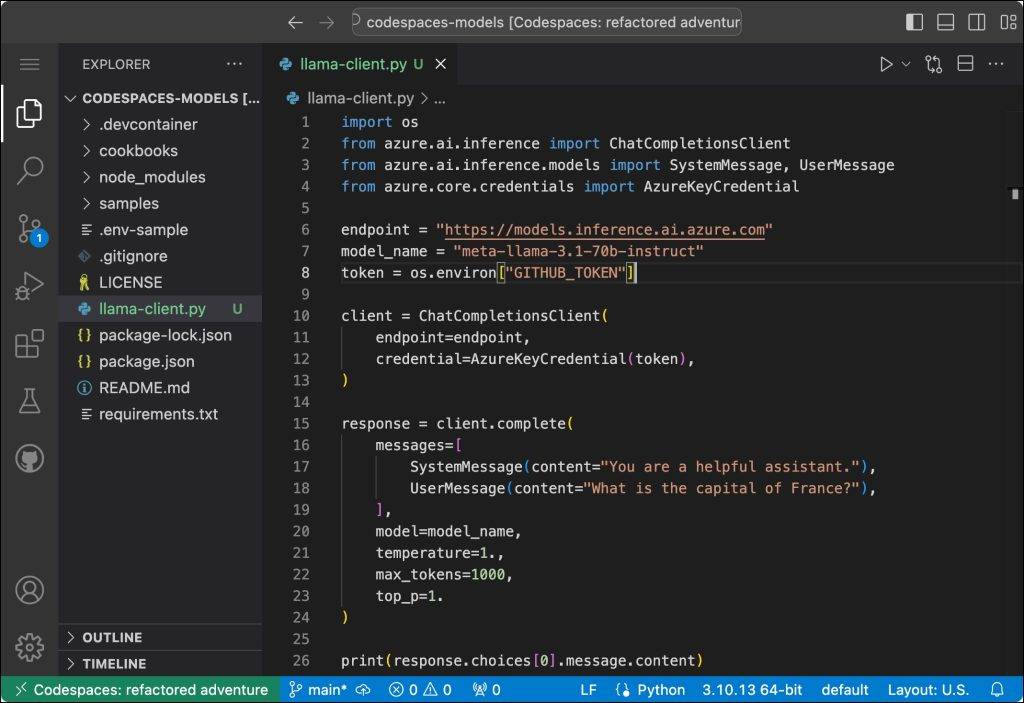

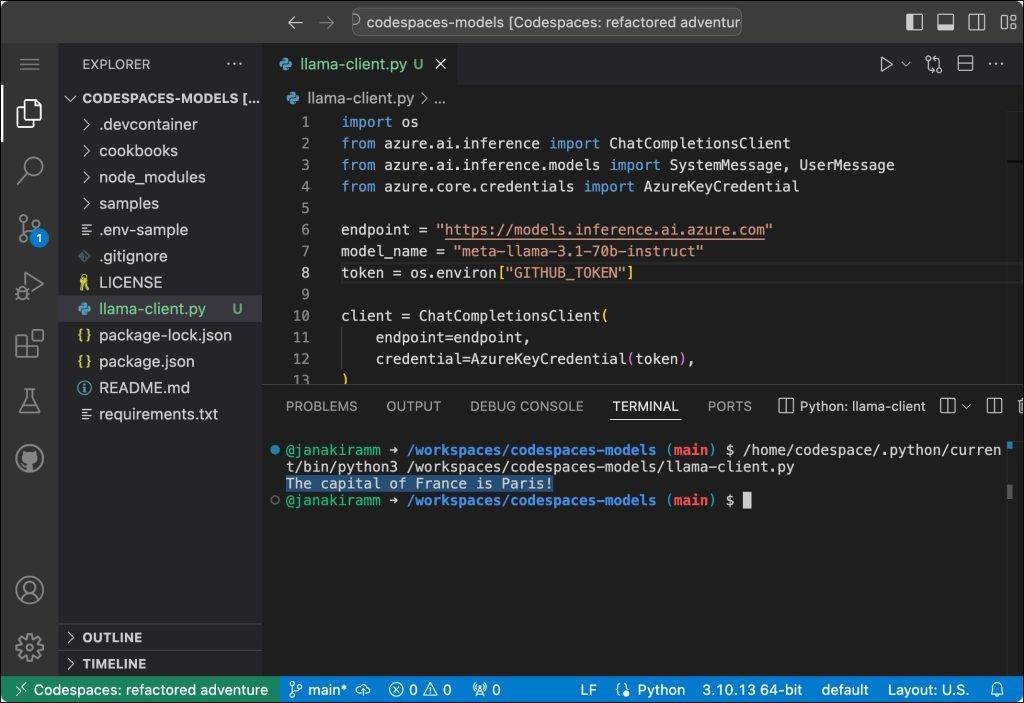

I opened the terminal and ran `pip install azure-ai-inference` to install the Python Azure AI Inference library, which turned out to be already installed. I also set the `GITHUB_TOKEN` environment variable to my GitHub token.
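Both prerequisites from this step can be checked before running the sample: the package that `pip install azure-ai-inference` provides (importable as `azure.ai.inference`) and the `GITHUB_TOKEN` variable. `preflight` is a hypothetical helper, a minimal standard-library sketch; it reports problems instead of raising, so it works even before the SDK is installed.

```python
import importlib.util
import os
from typing import List, Mapping, Optional


def preflight(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems that would stop the sample from running."""
    env = os.environ if env is None else env
    problems = []
    try:
        # find_spec imports parent packages, so a missing `azure` raises instead of returning None.
        found = importlib.util.find_spec("azure.ai.inference") is not None
    except ModuleNotFoundError:
        found = False
    if not found:
        problems.append("azure-ai-inference is not installed (pip install azure-ai-inference)")
    if not env.get("GITHUB_TOKEN"):
        problems.append("GITHUB_TOKEN is not set")
    return problems
```

`preflight()` with no argument inspects the real environment; passing a dict keeps it easy to test.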

I ran the code to test the client. The Llama 3.1 70B model responded with the correct answer.

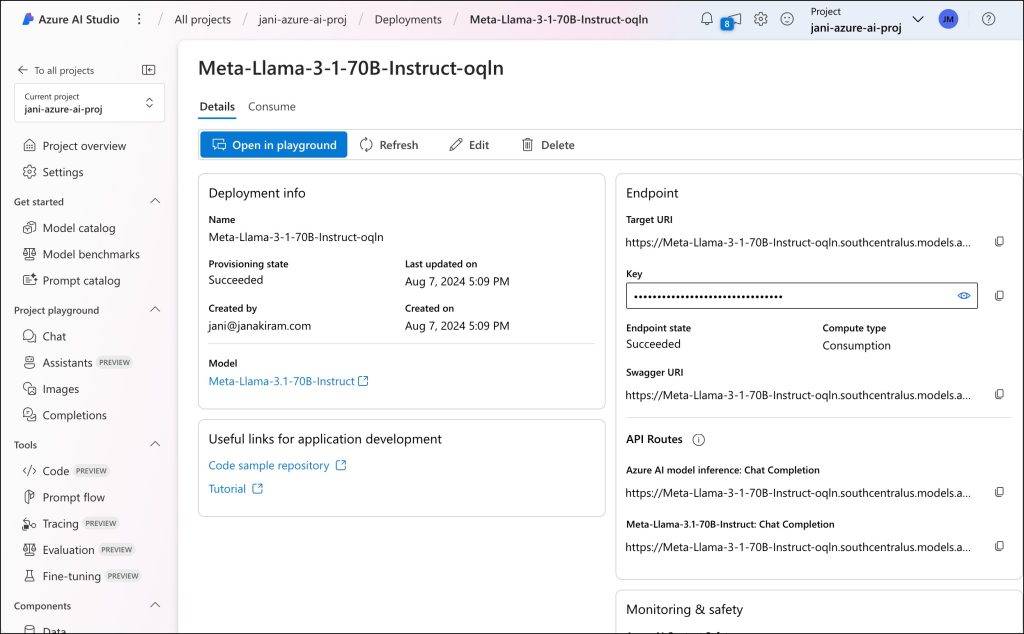

The final test was to change the endpoint and token so they pointed to my existing Azure AI environment. The screenshot below shows my Azure AI Studio, Azure AI project, and a deployment based on the Llama 3.1 70B model.

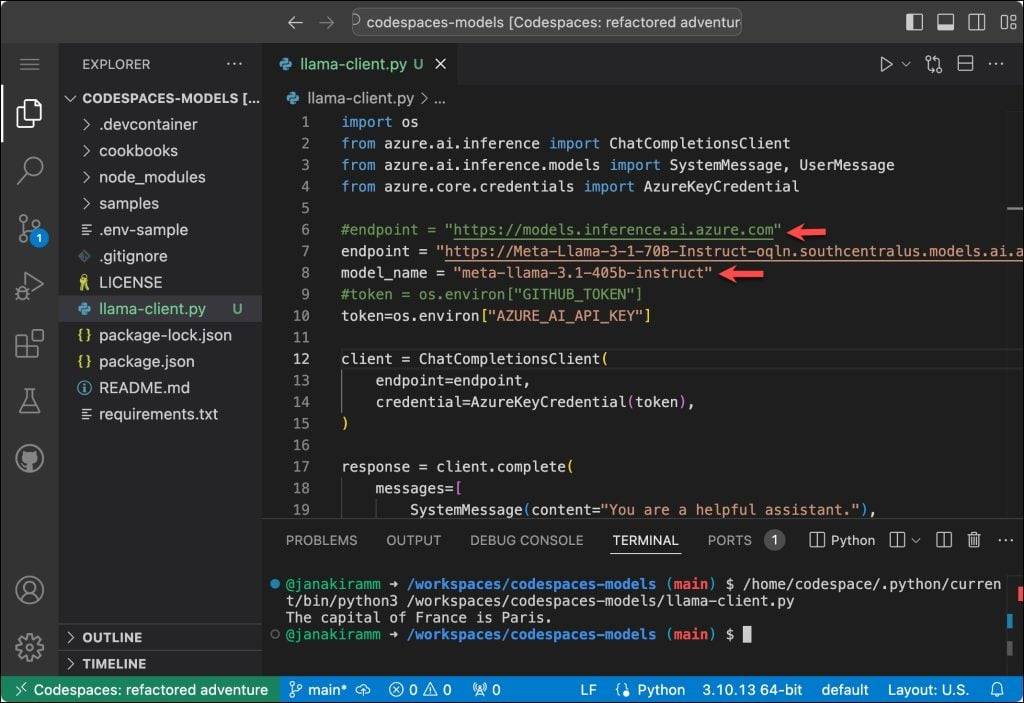

I changed two lines of the code and ran it again. This time the client was talking to the Azure AI endpoint rather than GitHub Models. I got the same response as the previous time.
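The two changed lines are the endpoint and the credential passed to the client; the rest of the code stays the same. One way to keep both targets in a single script is to select them from the environment. In this sketch, `AZURE_AI_ENDPOINT` and `AZURE_AI_KEY` are hypothetical variable names for your own Azure AI deployment's endpoint and key, and the GitHub Models endpoint is the value used in launch-era samples (an assumption).

```python
import os
from typing import Mapping, Optional, Tuple

# Assumed GitHub Models endpoint from launch-era samples; check your playground's code.
GITHUB_MODELS_ENDPOINT = "https://models.inference.ai.azure.com"


def resolve_target(env: Mapping[str, str]) -> Tuple[str, str]:
    """Return (endpoint, key): Azure AI if AZURE_AI_ENDPOINT is set, else GitHub Models."""
    # AZURE_AI_ENDPOINT / AZURE_AI_KEY are hypothetical names for your own deployment.
    if env.get("AZURE_AI_ENDPOINT"):
        return env["AZURE_AI_ENDPOINT"], env["AZURE_AI_KEY"]
    return GITHUB_MODELS_ENDPOINT, env["GITHUB_TOKEN"]


def make_client(env: Optional[Mapping[str, str]] = None):
    """Build a ChatCompletionsClient for whichever target the environment selects."""
    # Imported lazily so resolve_target works without the SDK installed.
    from azure.ai.inference import ChatCompletionsClient
    from azure.core.credentials import AzureKeyCredential

    endpoint, key = resolve_target(os.environ if env is None else env)
    # In the author's experiment, only these two values changed between the two targets.
    return ChatCompletionsClient(endpoint=endpoint, credential=AzureKeyCredential(key))
```

With this split, promoting a prototype is a configuration change rather than a code change: set the two Azure variables and rerun.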

Summary and Takeaways


GitHub Models is not just another inference platform. Its tight integration with GitHub CLI and Codespaces makes it attractive for developers to get started with state-of-the-art GenAI models. It simplifies the path to production through compatibility with the Azure AI SDK.

It’s clear that Microsoft wants to leverage all of its assets to drive the adoption of its generative AI platform. GitHub Models is the latest attempt to attract developers to Azure.
