Baoyue Reading Guide Summary

1.

AI application development, LLM solutions, managed, self-hosted, Google Cloud

2.

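This article explores the choice between self-hosted and managed solutions in AI application development. It compares the pros and cons of options such as Vertex AI and self-hosting on GKE, describes the advantages of developing AI on Google Cloud, and uses a Java application as an example to show the deployment.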

3.

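- AI application development requires clear business goals and use cases in order to make effective use of emerging tools such as LLMs
- A key decision is whether to choose a managed LLM solution (such as Vertex AI) or to self-host on GKE
- Describes the benefits of developing AI on Google Cloud, such as choice, flexibility, scalability, and end-to-end support
- Compares the pros and cons of managed and self-hosted models
- Managed solutions are easy to use but offer limited customization and may cost more
- Self-hosting on GKE gives full control but requires more expertise and maintenance
- Uses a Java application deployed on Cloud Run as an example, with models self-hosted on GKE or managed in Vertex AI; the app can also retrieve pre-configured book quotes from a Cloud SQL database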

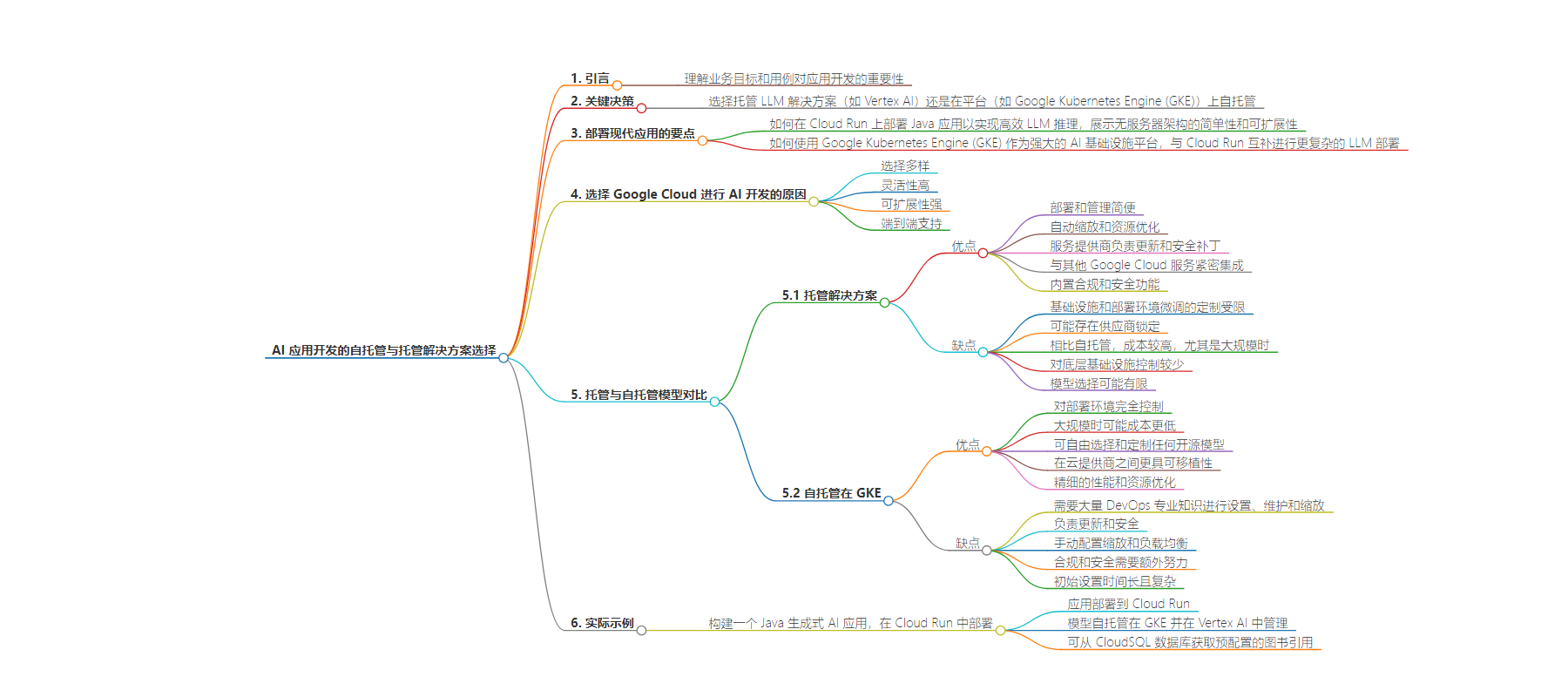

思维导图:

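Source: cloud.google.com

Authors: Dan Dobrin, Yanni Peng

Published: 2024/8/23 0:00

Language: English

Word count: 2135

Estimated reading time: 9 minutes

Score: 87

Tags: AI application development, Google Cloud Platform, managed vs. self-hosted solutions, large language models, Google Kubernetes Engine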

The original article follows.


In today’s technology landscape, building or modernizing applications demands a clear understanding of your business goals and use cases. This insight is crucial for leveraging emerging tools effectively, especially generative AI foundation models such as large language models (LLMs).

LLMs offer significant competitive advantages, but implementing them successfully hinges on a thorough grasp of your project requirements. A key decision in this process is choosing between a managed LLM solution like Vertex AI and a self-hosted option on a platform such as Google Kubernetes Engine (GKE).

In this blog post, we help developers, operations specialists, and IT decision-makers answer the critical questions of “why” and “how” to deploy modern apps for LLM inference. We’ll address the balance between ease of use and customization, helping you optimize your LLM deployment strategy. By the end, you’ll understand how to:

- deploy a Java app on Cloud Run for efficient LLM inference, showcasing the simplicity and scalability of a serverless architecture.
- use Google Kubernetes Engine (GKE) as a robust AI infrastructure platform that complements Cloud Run for more complex LLM deployments.
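On the Cloud Run side, the key contract for a Java app is to listen on the port that Cloud Run passes in the `PORT` environment variable (8080 by default). The post does not show the authors' web framework. This minimal sketch uses only the JDK's built-in `com.sun.net.httpserver` package to illustrate that contract. The `/quote` path and its placeholder response are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;

import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Map;

public class QuoteServer {

    // Cloud Run injects the listening port through the PORT environment
    // variable; fall back to 8080 (Cloud Run's default) when it is unset or blank.
    static int resolvePort(Map<String, String> env) {
        String port = env.get("PORT");
        return (port == null || port.isBlank()) ? 8080 : Integer.parseInt(port.trim());
    }

    public static void main(String[] args) throws IOException {
        int port = resolvePort(System.getenv());
        // InetSocketAddress(port) binds the wildcard address, so the server
        // accepts connections on all interfaces inside the container.
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        // Hypothetical endpoint: a real app would call a model or the database here.
        server.createContext("/quote", exchange -> {
            byte[] body = "placeholder quote".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/plain; charset=utf-8");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        System.out.println("Listening on port " + port);
    }
}
```

Because the port is read at startup rather than hard-coded, the same container image runs unchanged locally (on 8080) and on Cloud Run.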

Let’s get started!

Why Google Cloud for AI development

But first, what are some of the factors that you need to consider when looking to build, deploy and scale LLM-powered applications? Developing an AI application on Google Cloud can deliver the following benefits:

- Choice: Use managed LLMs or bring your own open-source models to Vertex AI.
- Flexibility: Deploy on Vertex AI or leverage GKE for a custom infrastructure tailored to your LLM needs.
- Scalability: Scale your LLM infrastructure as needed to handle increased demand.
- End-to-end support: Benefit from a comprehensive suite of tools and services that cover the entire LLM lifecycle.

Managed vs. self-hosted models

When weighing the choices for AI development in Google Cloud with your long-term strategic goals, consider factors such as team expertise, budget constraints and your customization requirements. Let’s compare the two options in brief.

Managed solution

Pros:

- Ease of use with simplified deployment and management
- Automatic scaling and resource optimization
- Managed updates and security patches by the service provider
- Tight integration with other Google Cloud services
- Built-in compliance and security features

Cons:

- Limited customization in fine-tuning the infrastructure and deployment environment
- Potential vendor lock-in
- Higher costs vs. self-hosted, especially at scale
- Less control over the underlying infrastructure
- Possible limitations on model selection

Self-hosted on GKE

Pros:

- Full control over deployment environment
- Potential for lower costs at scale
- Freedom to choose and customize any open-source model
- Greater portability across cloud providers
- Fine-grained performance and resource optimization

Cons:

- Significant DevOps expertise for setup, maintenance and scaling
- Responsibility for updates and security
- Manual configuration for scaling and load balancing
- Additional effort for compliance and security
- Higher initial setup time and complexity

In short, managed solutions like Vertex AI are ideal for teams that want quick deployment with minimal operational overhead, while self-hosted solutions on GKE offer full control and potential cost savings for strong technical teams with specific customization needs. Let’s take a couple of examples.

Build a gen AI app in Java, deploy in Cloud Run

For this blog post, we wrote an application that lets users retrieve quotes from famous books. Initially, the app only retrieved quotes from a database; gen AI capabilities expand that feature set by letting users retrieve quotes from a managed or self-hosted large language model.
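One way to let a database-backed feature gain LLM-backed alternatives is to put each backend behind a common interface and route each request to the backend the user picked. The following minimal sketch is written under that assumption and is not the authors' code. `QuoteRouter`, `Source` and `QuoteSource` are hypothetical names. The lambdas are stubs standing in for real Cloud SQL, Vertex AI and GKE clients.

```java
import java.util.EnumMap;
import java.util.Map;

public class QuoteRouter {

    // The three backends described in the post; the enum names are hypothetical.
    public enum Source { DATABASE, MANAGED_LLM, SELF_HOSTED_LLM }

    // Every backend only has to answer "give me a quote".
    @FunctionalInterface
    public interface QuoteSource {
        String fetchQuote();
    }

    private final Map<Source, QuoteSource> sources = new EnumMap<>(Source.class);

    // Register (or replace) the implementation for one backend; returns this for chaining.
    public QuoteRouter register(Source source, QuoteSource impl) {
        sources.put(source, impl);
        return this;
    }

    // Route the request to the chosen backend; fail loudly if it is not wired up.
    public String quote(Source source) {
        QuoteSource impl = sources.get(source);
        if (impl == null) {
            throw new IllegalStateException("No backend registered for " + source);
        }
        return impl.fetchQuote();
    }

    public static void main(String[] args) {
        // Stub backends for illustration only; real ones would query Cloud SQL,
        // call Vertex AI, or call a vLLM server running on GKE.
        QuoteRouter router = new QuoteRouter()
            .register(Source.DATABASE, () -> "quote from the database")
            .register(Source.MANAGED_LLM, () -> "quote from a managed model")
            .register(Source.SELF_HOSTED_LLM, () -> "quote from a self-hosted model");
        for (Source s : Source.values()) {
            System.out.println(s + ": " + router.quote(s));
        }
    }
}
```

With this shape, adding a model backend means registering another implementation rather than changing the request-handling code.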

The app, including its frontend, is deployed to Cloud Run, while the models are self-hosted on GKE (using vLLM for model serving) and managed in Vertex AI. The app can also retrieve pre-configured book quotes from a Cloud SQL database.
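vLLM can serve models behind an OpenAI-compatible HTTP API, which gives the Cloud Run app a plain HTTP path to the self-hosted model on GKE. The sketch below builds and sends a chat-completion request using only the JDK's `java.net.http` client. It makes four assumptions:

- The vLLM server is reachable from Cloud Run at a hypothetical `http://vllm-service:8000`. Port 8000 is vLLM's default. Exposing the GKE service to Cloud Run is not covered here.
- The model name is hypothetical.
- The `max_tokens` value is arbitrary.
- Hand-rolled JSON is acceptable for a sketch.

It does not cover the Vertex AI path and is not the authors' actual client code.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class VllmQuoteClient {

    private final HttpClient client = HttpClient.newHttpClient();
    private final URI endpoint;
    private final String model;

    // baseUrl and model are hypothetical, e.g. "http://vllm-service:8000" for a
    // Kubernetes Service in front of a vLLM Deployment on GKE.
    public VllmQuoteClient(String baseUrl, String model) {
        // Strip trailing slashes, then append the OpenAI-compatible chat path.
        this.endpoint = URI.create(baseUrl.replaceAll("/+$", "") + "/v1/chat/completions");
        this.model = model;
    }

    // Minimal JSON string escaping, enough for plain-text prompts.
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }

    // OpenAI-style chat completion body with a single user message.
    String requestBody(String prompt) {
        return "{\"model\":" + jsonString(model)
            + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(prompt) + "}]"
            + ",\"max_tokens\":256}";
    }

    HttpRequest buildRequest(String prompt) {
        return HttpRequest.newBuilder(endpoint)
            .timeout(Duration.ofSeconds(30))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(requestBody(prompt)))
            .build();
    }

    // Sends the request and returns the raw JSON response body.
    public String ask(String prompt) throws IOException, InterruptedException {
        HttpResponse<String> response =
            client.send(buildRequest(prompt), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IllegalStateException("vLLM returned HTTP " + response.statusCode());
        }
        return response.body();
    }
}
```

`ask` returns the raw JSON. In a real app, a JSON library would extract the generated text from `choices[0].message.content`, the field where OpenAI-style chat responses place it.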