包阅导读总结

1.

关键词:GreenOps、Operational Efficiency、Carbon Footprint、Kube-Green、Resource Utilization

2.

总结:本文主要介绍了 GreenOps 理念,强调了基础设施的环境成本,通过 kube-green 这一工具能更合理利用云资源,减少碳足迹和成本,还展示了其实际使用效果及未来发展。

3.

– 基础设施存在环境和经济成本,IT 部门碳排放量占比达 1.4%。

– 理解软件对环境的影响需碳感知方法,我们对资源的随意使用会增加碳足迹。

– 借助 kube-green 等工具能更有意识地使用云资源,FinOps 和 GreenOps 密切相关。

– 信息通信技术行业碳排放量巨大,创建环保软件需跨领域知识。

– 测量软件碳排放量需收集多方面数据,让软件更环保可从三方面入手。

– 减少碳排放需获取数据中心碳强度数据,降低能耗是目前较优方式。

– 软件生命周期通常包括设计、开发、发布等阶段,不同环境有不同可用性要求。

– 假设理想情况,持续激活非工作时间的开发和预生产环境会浪费大量资源。

– 通过 kube-green 配置可自动关闭和重启不必要的 Kubernetes 资源,实际使用中大幅减少了资源消耗和碳排放。

– kube-green 项目在不断发展,未来将支持更多资源和更灵活的规则。

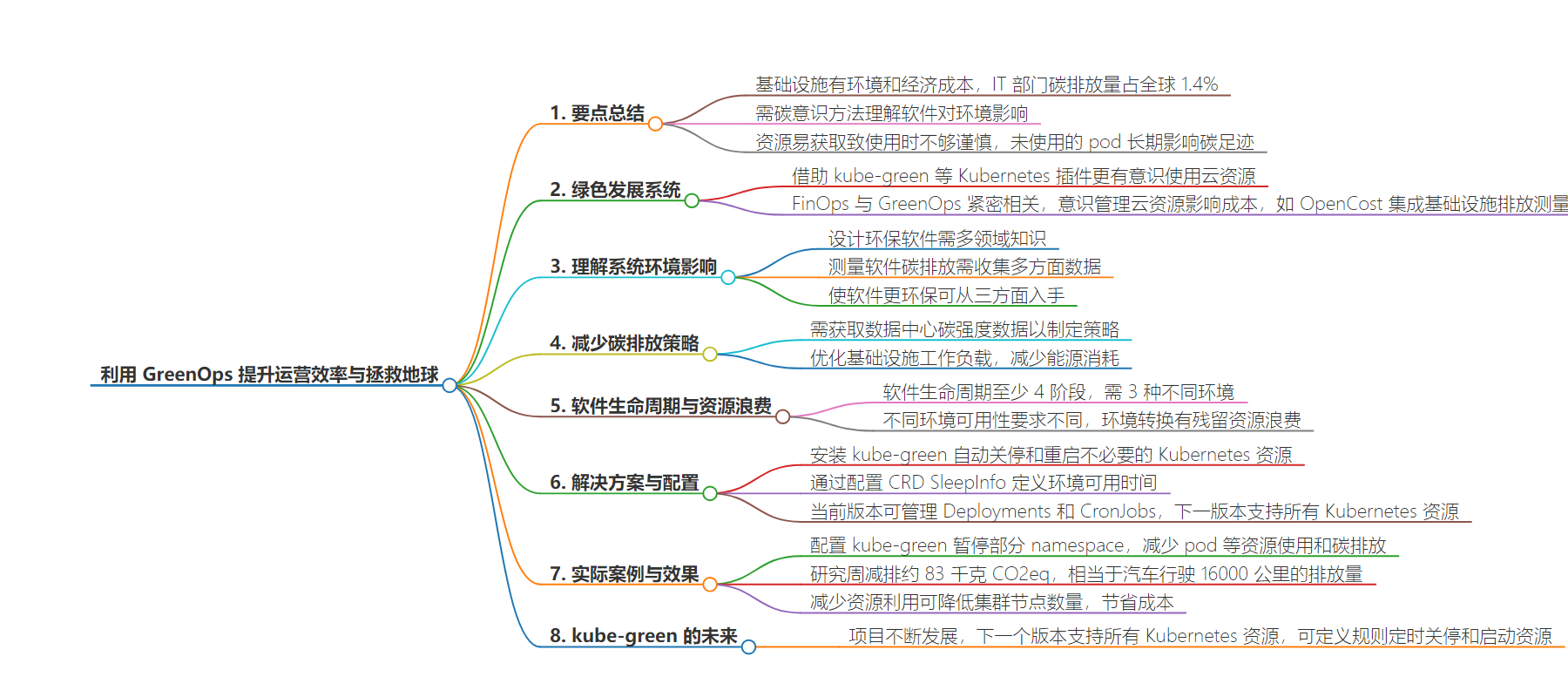

思维导图:

文章来源:infoq.com

作者:Davide Bianchi,Graziano Casto

发布时间:2024/6/26 0:00

语言:英文

总字数:1912字

预计阅读时间:8分钟

评分:86分

标签:绿色运维,运营效率,DevOps,Kubernetes,绿色软件

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Key Takeaways

- Our infrastructures come with an environmental cost as well as an economic one; the IT sector alone is responsible for 1.4% of carbon emissions worldwide.

- Understanding the impact of our software on the environment requires a carbon-aware approach.

- These days, our easy access to resources has made us a bit less mindful of how we use them. An unused pod isn’t just an unused pod; in the long run, it has a significant impact on our overall carbon footprint.

- With a simple Kubernetes addon like kube-green, you can use cloud resources more consciously, taking the first step towards a greener development of systems without needing to impact their architectures.

- FinOps and GreenOps are closely related concepts; managing cloud resources consciously also impacts costs. Nowadays, FinOps tools like OpenCost are integrated with measurements of our infrastructure emissions, providing a comprehensive overview.

Paraphrasing the words of a wise Uncle Ben (from the 2002 movie Spiderman): “With great infrastructures come great responsibilities” and these responsibilities are not only towards the end users of our systems but also towards the environment surrounding us.

The information and communication technology sector alone produces around 1.4% of overall global emissions. This equates to an estimated 1.6 billion tons of greenhouse gas emissions, making each one of us internet users responsible for about 400kg of carbon dioxide a year.

Understand the environmental impact of our systems

Creating environmentally friendly software involves using data to design programs that produce as little carbon emissions as possible. This requires knowledge from different fields like climate science, computer programming, and how electricity works.

Related Sponsored Content

When we talk about “carbon,” we mean all the stuff that contributes to global warming. To measure this, we use terms like CO2eq, which tells us how much carbon dioxide is released and carbon intensity measures how much carbon is released for each unit of electricity used.

To figure out how much carbon a software program produces, we need to gather data on how much energy it uses, how dirty that energy is, and what kind of computer it runs on. This can be tricky, even for big companies tracking their own software usage.

To make software greener, we can focus on three things: making it use less energy, raising awareness about carbon emissions, and using more efficient hardware.

To reduce carbon emissions without reducing the actual energy usage of our infrastructure, accessing data on the carbon intensity of data centers is necessary. This allows us to set up a spatial or temporal shifting strategy, thereby prioritizing the use of renewable energy sources where available.

Since putting these strategies into action involves optimizing the workloads that use our infrastructure, and considering that upgrading to more efficient hardware can be expensive and challenging, the easiest and fastest way to enhance our environmental impact is by reducing energy consumption. But how can we achieve this without compromising the availability of our systems?

Zombies are real, and they are just around the corner

In simplest terms, the software lifecycle typically involves at least 4 phases: a design phase where the system is conceptualized and experiments may be conducted, a development phase where all features to be implemented are built, and a release phase to put the developed software into production, but not before testing everything in a secure environment.

To implement a workflow as described, we’ll need at least three different environments: the first being a development environment that will be useful for both the design and implementation phases, a staging or pre-production environment to test our software before delivering it to end users, and finally the production environment.

/filters:no_upscale()/articles/greenops-operational-efficiency/en/resources/28fig1-1719225589942.jpg)

These three environments have different availability requirements: the development and pre-production environments will be useful to engineers only during working hours, while the production environment needs to be constantly up and running. Additionally, transitioning from one environment to another often leaves behind remnants that linger in our infrastructure like zombies, consuming resources without serving a useful purpose in the overall software lifecycle.

Kill them all and save the planet

Suppose we hypothetically set an ideal case where all environments have the same number of pods running on the same infrastructure with a consistent and overlapping computational demand. We can assert that by keeping the development and staging environments constantly active even outside working hours, we’re using more resources than necessary for 128 out of the total 168 hours in a week, which equates to approximately 103% more usage than what is actually required. In other words, by keeping the development and staging environments always active, engineers would effectively utilize them for only about 23% of their actual consumption.

With this simple example, it is evident how significant the amount of resources that engineering companies waste every day is. Fortunately, remedying this requires nothing more than a handful of configuration lines and the installation of a Kubernetes operator on our clusters. kube-green is an add-on for Kubernetes that, once installed, allows you to automatically shut down Kubernetes resources when they are not needed and restart them when they are, all in an automated manner simply by configuring the CRD SleepInfo.

apiVersion: kube-green.com/v1alpha1kind: SleepInfometadata: name: working-hoursspec: weekdays: "1-5" sleepAt: "20:00" wakeUpAt: "08:00" timeZone: "Europe/Rome" suspendCronJobs: true excludeRef: - apiVersion: "apps/v1" kind: Deployment name: my-deploymentWith just a few lines of configuration like those in the previous image, you can define that all namespaces on the cluster with Kube-green are available only from Monday to Friday and only during the hours from 8 AM to 8 PM in the Rome timezone.

With the current Kube-green version, both Deployments and CronJobs can be managed, but with the next version all the Kubernetes resources will be supported.

In the case of Deployments, to stop the resource, the ReplicaSet is set to zero and then reset to its previous value to restart it. Conversely, to suspend CronJobs, they are marked as suspended.

In the SleepInfo configurations, you can also specify resources that should not be suspended, such as the “my-deployment” in the example configuration provided above.

Data not just words: a real-world kube-green usage report

The following configuration of kube-green was used to suspend 48 out of 75 namespaces outside of working hours while keeping all deployments with the name api-gateway active.

apiVersion: kube-green.com/v1alpha1kind: SleepInfometadata: name: working-hoursspec: weekdays: "1-5" sleepAt: "20:00" wakeUpAt: "08:00" timeZone: "Europe/Rome" suspendCronJobs: true excludeRef: - apiVersion: "apps/v1" kind: Deployment name: api-gatewayA cluster of this size, operating without kube-green, manages an average of 1050 pods with an allocated amount of resources equal to 75 GB of memory and 45 CPUs. In terms of actual resource consumption, the cluster records usage of 45 GB of memory and 4.5 CPUs, emitting approximately 222 kg of CO2 into the environment per week.

By configuring SleepInfo on this cluster, we can reduce the total number of pods by 600, bringing it down to 450. The allocated memory decreases to 30 GB, and the CPUs decrease to only 15. With kube-green, there is also a decrease in terms of resource consumption, with memory usage reduced to 21 GB and CPUs reduced to 1, resulting in emissions of approximately 139 kg of CO2.

| without kube-green | with kube-green | difference | |

| number of pods | 1050 | 450 | 600 |

| memory consumed [Gb] | 54 | 21 | 33 |

| CPU consumed [cpu] | 4.5 | 1 | 3.5 |

| memory allocated [Gb] | 75 | 30 | 45 |

| CPU allocated [cpu] | 40 | 15 | 25 |

| CO2eq/week [kg] | 222 | 139 | 83 |

The use of kube-green on this cluster during the study week resulted in emitting approximately 83 kg of CO2eq into the environment, which is 38% less than what we would have emitted under normal circumstances. These numbers become even more impressive when projected over the entire year, resulting in approximately 4000 kg less CO2eq emitted thanks to the simple use of kube-green.

To put it into perspective, this reduction is equivalent to the carbon emissions from an average car traveling approximately 16,000 km.

/filters:no_upscale()/articles/greenops-operational-efficiency/en/resources/20fig2-1719225589942.jpg)

/filters:no_upscale()/articles/greenops-operational-efficiency/en/resources/15fig3-1719225589942.jpg)

/filters:no_upscale()/articles/greenops-operational-efficiency/en/resources/13fig4-1719225589942.jpg)

/filters:no_upscale()/articles/greenops-operational-efficiency/en/resources/11fig5-1719225589942.jpg)

In this specific use case, as evidenced by the snapshots of resource usage metrics, the use of kube-green has allowed for a significant decrease in resource utilization, enabling the downscaling of the number of nodes in the cluster. On average, 4 nodes are stopped during the night and on weekends, each of which has a size of 2 CPU cores and 8 GB of memory.

This brings several benefits, not only reducing CO2 emissions but also directly proportional cost savings for our cluster. This is because the pricing structure of clusters in major cloud providers is typically based on the number of nodes used. By scaling down the number of nodes when they are not needed, we effectively reduce the cost of operating the cluster.

The future of kube-green

The kube-green project is constantly evolving, and as mentioned earlier, we are already working on improvements that will make kube-green a better tool, hopefully making the world a better place as well.

In the next release of kube-green, all resources available in Kubernetes will be supported. Instead of directly acting on specific resources, it will be possible to define rules to schedule the shutdown and possibly the startup of any resource at defined times.

With these patches, kube-green’s support won’t be limited to just Deployments and CronJobs but will also extend to ReplicaSets, Pods, StatefulSets, and any other custom CRDs.

apiVersion: kube-green.com/v1alpha1kind: SleepInfometadata: name: working-hoursspec: weekdays: "*" sleepAt: "20:00" wakeUpAt: "08:00" suspendCronJobs: true patches: - target: group: apps kind: StatefulSet patch: - path: /spec/replicas op: add value: 0 - target: group: apps kind: ReplicaSet patch: - path: /spec/replicas op: add value: 0 excludeRef: - apiVersion: "apps/v1" kind: StatefulSet name: do-not-sleep - matchLabels: kube-green.dev/exclude: "true"We are experimenting with solutions that would allow kube-green to interact with other operators like Crossplane. This would enable scheduling the shutdown and restart of entire pieces of infrastructure managed through this tool.

Anyone who wants to share their thoughts or contribute directly to the project with ideas, code, or content can take a look at the repository on GitHub or contact us directly.

Use your resources consciously: keep what you need when you need it

These days, our easy access to resources has made us a bit less mindful of how we use them. An unused pod isn’t just an unused pod; in the long run, it has a significant impact on our overall carbon footprint.

GreenOps allows us to have a significant positive impact on the environment with a negligible impact on our architectures. With minimal additional effort, we can ensure that our clusters use resources much more efficiently and without waste.

With modern approaches, we can implement our GreenOps strategy without drastically altering the management of our workloads, while also achieving positive impacts on our infrastructure costs. GreenOps and FinOps are extremely related concepts, and often, rationalizing our resources from a sustainability perspective leads to savings on our cloud bills.

To make informed decisions, it is essential to have visibility into both the costs of our clusters and their CO2eq emissions into the environment. For this reason, many cost monitoring tools, such as OpenCost, have introduced carbon cost emissions tracking across Kubernetes and cloud spending.

In conclusion, GreenOps can be the first step in aligning our operational practices with environmental sustainability before implementing more complex strategies aimed at using more efficient hardware or preferring renewable energy sources. With GreenOps techniques, you can improve your environmental impact from day one and see immediate results. This allows you to collect data and move more consciously in the vast world of eco-friendly software.