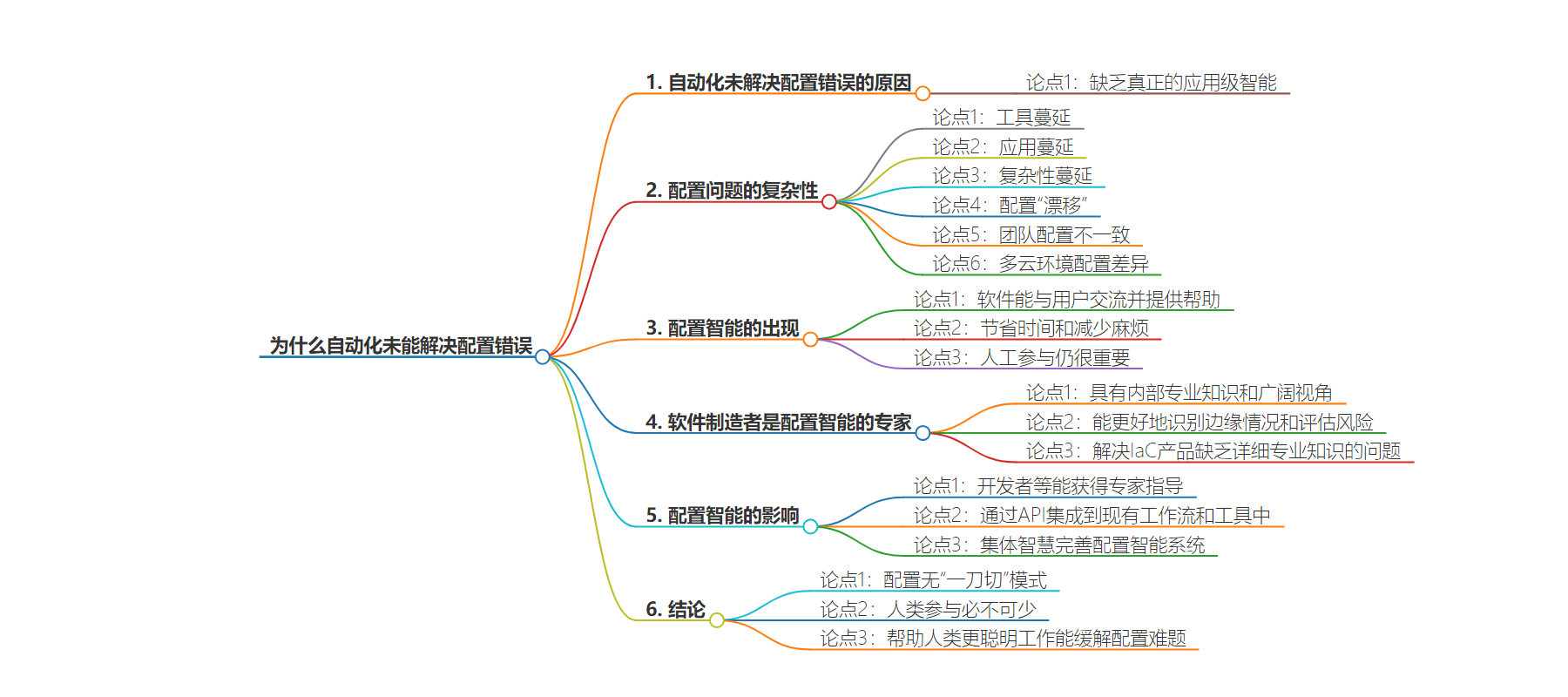

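Baoyue (包阅) Reading Guide Summary

1. Keywords: configuration errors, automation, configuration intelligence, software makers, modern apps

2. Summary: This article examines why automation has not solved configuration errors. It describes how complex configuration management has become in today's environments, stresses the emergence and importance of configuration intelligence, argues that software makers are best placed to recommend configuration optimizations, and concludes that configuring modern apps requires semantic and contextual awareness, with a human always in the loop.

3. Main points:

– Configuration errors have long troubled technology teams. Automation has not solved them because it lacks application-level intelligence, and the era of configuration intelligence is now beginning.

– Tools, applications and complexity are sprawling in modern technology environments. Configurations "drift," different teams prefer different configurations, and multicloud deployments add further complexity, so configuration management keeps getting harder.

– Configuration intelligence lets software talk to its users, help them and simplify maintenance, but human involvement is still necessary.

– Software makers are best suited to recommend configuration optimizations because they have internal expertise and a broad perspective. Configuration intelligence delivers that expert guidance to developers and other engineers via API.

– Configuring modern apps requires semantic and contextual awareness, and humans must stay involved. Configuration intelligence cannot deliver full automation, but it helps people solve configuration problems more efficiently.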

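Article URL: https://thenewstack.io/why-cant-automation-eliminate-configuration-errors/

Source: thenewstack.io

Author: Michelle Ensey

Published: 2024/8/21 13:09

Language: English

Word count: 1091

Estimated reading time: 5 minutes

Score: 90

Tags: configuration management, automation, AI technology, Infrastructure as Code, platform operations

The original article follows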

Since the advent of the Digital Age, configuration errors have persistently challenged technology teams. A host of solutions have emerged over time to attempt to tackle the problem, often under the description of Infrastructure as Code (IaC). Yet configuration problems remain one of the largest issues for network operations, security and platform operations teams. As more application development teams “shift left” and take care of configuration of infrastructure components, the challenge is only growing.

Why has automation not solved this problem? Because until now, automation lacked true application-level intelligence. And that’s changing quickly, moving us toward an era when humans can make faster, better configuration decisions and work in tandem with automation to finally make headway on the configuration conundrum – the era of configuration intelligence.

Tool Sprawl, Application Sprawl, Complexity Sprawl

And not a moment too soon. The modern technology environment is replete with tools. For example, the average security team must oversee configurations of dozens of controls. The advent of platform operations to accommodate shift left by giving developers choice — with guardrails for key application delivery components — resulted in more configurations coming under management and more complexity.

Because code is constantly shipping, configurations “drift” as modifications are made to optimize application performance and enable connections between application components and APIs (both internal and external).
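
To make "drift" concrete, here is a minimal sketch of how a team might detect it by comparing a recorded baseline against the live configuration. The function name `find_drift` and every setting shown are assumptions invented for illustration; they do not come from any particular product.

```python
from typing import Any


def find_drift(baseline: dict[str, Any], live: dict[str, Any], prefix: str = "") -> list[str]:
    """Return human-readable differences between a baseline and a live config."""
    drift: list[str] = []
    for key in sorted(set(baseline) | set(live)):
        path = f"{prefix}.{key}" if prefix else key
        if key not in live:
            drift.append(f"{path}: removed (baseline had {baseline[key]!r})")
        elif key not in baseline:
            drift.append(f"{path}: added ({live[key]!r})")
        elif isinstance(baseline[key], dict) and isinstance(live[key], dict):
            # Recurse into nested sections so the report names the exact setting.
            drift.extend(find_drift(baseline[key], live[key], path))
        elif baseline[key] != live[key]:
            drift.append(f"{path}: {baseline[key]!r} -> {live[key]!r}")
    return drift


# Hypothetical settings, invented for illustration.
baseline = {"proxy": {"timeout_s": 30, "tls": {"min_version": "1.2"}}, "replicas": 3}
live = {"proxy": {"timeout_s": 120, "tls": {"min_version": "1.2"}, "retries": 5}, "replicas": 3}

for line in find_drift(baseline, live):
    print(line)
```

A diff like this shows that something changed, but not whether the change was a sensible optimization or a mistake. That gap is the one the rest of the article addresses.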

At the same time, siloed teams may not be on the same page for configurations; different AppDev teams may have different preferred configurations depending on their use cases. With organizations deploying applications on multiple clouds, configuration must take into account the different conventions in each environment. For modern apps deployed in Kubernetes, configuration was supposed to be standardized around YAML manifests and container images. However, configuration is still held hostage to underlying infrastructure platforms.

In other words, keeping configurations straight is growing more complicated, not less. This is evidenced by the significant number of major cyberattacks exploiting misconfigured systems.

Your Apps Can Talk: Configuration Intelligence

With AI being baked into technology products at light speed, a key change is taking place in how teams maintain a secure, efficient configuration posture. Software can now talk to its users and help them!

This is both novel and critical. It means that makers of software components can snapshot a configuration, analyze the contents, see how it compares to correct configurations and provide guidance. With large language models (LLMs) and new coding assistants, this guidance can take place in any form factor — a chatbot, inline in the CLI or in an observability module.

The emergence of configuration intelligence changes the game in several ways. First, it means that anyone tasked with maintaining configurations can save a lot of time and trouble that used to involve manual, tedious but cognitively intense tasks like reading through YAML manifests or config files to identify tiny errors. Yes, some tools existed to do this before, but they mostly functioned more like “linters,” spotting obvious syntax errors. Simplifying this process drastically reduces the time needed to maintain configs manually.
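
The difference between a linter-style check and a context-aware one can be sketched in a few lines. This is an illustration only: it uses JSON rather than YAML so that it runs on the Python standard library alone, and the rule set, key names and messages are assumptions made up for the example, not rules from any real tool.

```python
import json

# Hypothetical rule data, invented for illustration.
WEAK_TLS_VERSIONS = {"1.0", "1.1"}


def syntax_check(raw: str) -> bool:
    """Linter-style check: does the text parse at all?"""
    try:
        json.loads(raw)
    except json.JSONDecodeError:
        return False
    return True


def semantic_check(config: dict) -> list[str]:
    """Context-aware check: is a well-formed config also a sensible one?"""
    findings = []
    tls = config.get("tls", {})
    if tls.get("min_version") in WEAK_TLS_VERSIONS:
        findings.append(f"tls.min_version {tls['min_version']} is outdated")
    if config.get("public") and not config.get("auth_required", False):
        findings.append("endpoint is public but auth_required is not enabled")
    return findings


raw = '{"public": true, "auth_required": false, "tls": {"min_version": "1.0"}}'
print(syntax_check(raw))  # the file is well formed
for finding in semantic_check(json.loads(raw)):
    print(finding)        # yet it still carries two risky settings
```

The sample config passes the syntax check and still produces two findings, which is the kind of problem a syntax-only tool cannot see.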

I choose the words “manually maintain” carefully, because we are not yet ready to entrust our configuration adjustments entirely to machines. The system may lack critical context, such as why an outdated encryption library is used on a test machine, or why an API is configured in a way that removes some security settings for a particularly secure partner feed. Configuration fixes work best when a human is in the loop.
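
One way to picture a human in the loop is a review step that sits between a recommendation and the change itself. The sketch below is an assumption-laden illustration: `Recommendation`, `apply_with_review`, the setting names and the exception list are all hypothetical, and the `approve` callback stands in for a real person's decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Recommendation:
    key: str          # setting the tool wants to change
    suggested: object  # value the tool proposes
    reason: str       # why the tool flagged it


def apply_with_review(
    config: dict,
    recommendations: list[Recommendation],
    approve: Callable[[Recommendation], bool],
) -> tuple[dict, list[Recommendation]]:
    """Apply only the recommendations a reviewer approves; return the rest as deferred."""
    updated = dict(config)  # copy so the caller's config is left untouched
    deferred = []
    for rec in recommendations:
        if approve(rec):
            updated[rec.key] = rec.suggested
        else:
            deferred.append(rec)
    return updated, deferred


# Hypothetical settings and exception list, invented for illustration.
config = {"encryption_lib": "legacy-1.0", "partner_feed_auth": "none"}
recs = [
    Recommendation("encryption_lib", "current-3.0", "outdated encryption library"),
    Recommendation("partner_feed_auth", "token", "authentication disabled"),
]
documented_exceptions = {"partner_feed_auth"}  # context the tool cannot know

updated, deferred = apply_with_review(
    config, recs, approve=lambda rec: rec.key not in documented_exceptions
)
print(updated)
print([rec.key for rec in deferred])
```

Here the library upgrade goes through, while the partner feed setting is deferred because a reviewer knows why it was configured that way.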

Software Makers: The OGs of Configuration Intelligence

At the same time, no one is better suited to recommend configuration optimization than the maker of the software. That’s because the maker of the software not only has the internal expertise on how it works, but also the broadest perspective on problems reported and the best information on which to train machine learning systems to identify and categorize configuration anomalies.

The maker of the software can recognize edge cases, assess risks and understand security implications of configuration settings better than anyone else. None of this knowledge is easy to transfer or to encode in IaC, build, CI/CD or development tooling beyond basic linting and syntax fixes.

The lack of detailed expertise has been a traditional problem of IaC products, which struggle to keep up with configuration recommendations across the dozens of software applications and infrastructure components they manage and automate. The lack of detailed configuration expertise also creates a cadre of in-house experts, who become key sources of institutional memory — but also major risks. When your load-balancing guru walks out the door to take another job, then everything they know that’s not clearly documented goes out the door too.

With configuration intelligence and recommendation engines, every developer, PlatformOps, NetOps and SecOps engineer has access to expert guidance. Over time, they too will become experts. When configuration intelligence is available via API, as it should be, then workers in these roles can integrate the configuration chops they need into their existing workflows and tools, essentially performing a mind meld with the software maker and their engineers and experts.

Because configuration intelligence is driven by LLMs, which by default are addressed via API, modern apps will contain a configuration meta layer riding above the app code that teams can access to ensure their bespoke architecture works well. And, over time, the collective intelligence of the crowd will better inform configuration intelligence systems to recognize more edge cases and uncover emergent patterns of problems or errors.

Conclusion: Modern Apps Must Be Semantic Apps

The reality is, there is no “one-size-fits-all” in configuration, and what works and is secure in one use case may not be in another. For modern apps to work their best, semantic and contextual awareness of configurations is key. The source of truth for that will be the software maker, leveraging internal expertise and the collective data of myriad users to capture the patterns and create the best recommendations for any given architecture and application stack.

While we may be able to identify and automate away the most egregious configuration problems, configuration will never go completely on autopilot. A human must always be in the loop due to the variance of software builds, security and compliance requirements and infrastructure needs.

But helping that human work smarter and become a configuration guru faster, and learn more on a continuous basis, will go a long way toward alleviating the timeless configuration conundrum.
