Baoyue Reading Guide Summary

1. Keywords: Data Engineering, GenAI, Data Pipelines, Data Quality, AI Challenges

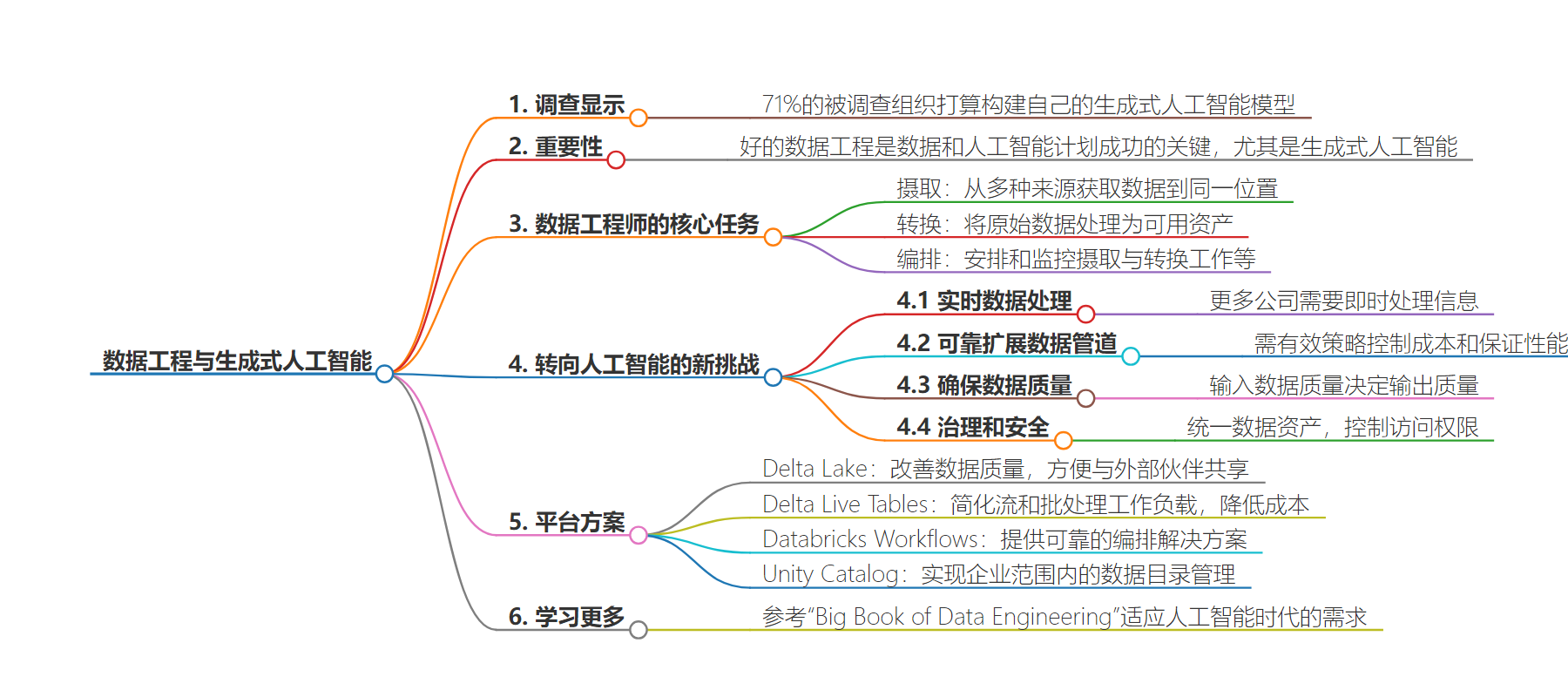

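2. Summary: An MIT Technology Review report shows that 71% of surveyed organizations intend to build their own GenAI models, and the article stresses that good data engineering is critical to their success. A data engineer's work typically spans three tasks, the AI era brings new challenges to each of them, and the article also introduces a platform and its features for addressing these challenges.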

3. Main content:

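– Background

  – An MIT Technology Review report says 71% of surveyed organizations plan to build their own GenAI models; good data is the key.

– Core tasks of data engineers

  – Data ingestion: bringing data from many sources into one place.

  – Data transformation: turning raw data into usable assets through filtering and similar steps.

  – Orchestration: scheduling and monitoring the related jobs and handling failures.

– New challenges brought by AI

  – Handling real-time data.

  – Scaling data pipelines reliably while controlling costs.

  – Ensuring data quality.

  – Governing and securing data.

– Platform features for meeting these challenges

  – Delta Lake: improves data quality and makes sharing with external partners easy.

  – Delta Live Tables: simplifies ETL work and lowers costs.

  – Databricks Workflows: provides reliable orchestration, control flow and related capabilities.

  – Unity Catalog: an enterprise-wide data catalog for managing and sharing data in one place.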


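Article URL: https://www.databricks.com/blog/data-engineering-and-genai-tools-practitioners-need

Source: databricks.com

Author: Databricks

Published: 2024/8/30 15:30

Language: English

Word count: 653 words

Estimated reading time: 3 minutes

Rating: 85

Tags: data engineering, generative AI, data pipelines, data quality, Delta Lake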

The original article follows.


A recent MIT Technology Review report shows that 71% of surveyed organizations intend to build their own GenAI models. As more of them work to leverage their proprietary data for these models, many encounter the same hard truth: The best GenAI models in the world will not succeed without good data.

This reality underscores the importance of building reliable data pipelines that can efficiently ingest or stream vast amounts of data while ensuring high data quality. In other words, good data engineering is essential to the success of every data and AI initiative, especially GenAI.

While many of the tasks involved in this effort remain the same regardless of the end workloads, there are new challenges that data engineers need to prepare for when building GenAI applications.

The Core Functions

For data engineers, the work typically spans three key tasks:

- Ingest: Getting the data from many sources – spanning on-premises or cloud storage services, databases, applications and more – into one location.

- Transform: Turning raw data into usable assets through filtering, standardizing, cleaning and aggregating. Often, companies will use a medallion architecture (Bronze, Silver and Gold) to define the different stages in the process.

- Orchestrate: Scheduling and monitoring ingestion and transformation jobs, overseeing other parts of data pipeline development, and addressing failures.
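To make the three functions and the Bronze/Silver/Gold stages concrete, here is a minimal in-memory sketch in plain Python. It is a hypothetical illustration, not Databricks code: `RAW_EVENTS`, `ingest`, `to_silver`, `to_gold` and `orchestrate` are invented names, the records are made up, and a real pipeline would run on a distributed engine with a proper scheduler rather than a Python loop.

```python
from collections import defaultdict

# Hypothetical raw records from several sources (field names and values are illustrative).
RAW_EVENTS = [
    {"source": "pos", "store": " Berlin ", "amount": "19.99"},
    {"source": "web", "store": "berlin", "amount": "5.01"},
    {"source": "web", "store": "Paris", "amount": "not-a-number"},
    {"source": "app", "store": None, "amount": "3.50"},
    {"source": "app", "store": "paris", "amount": "10.00"},
]


def ingest(*sources):
    """Ingest: land records from every source in one place (Bronze), unchanged."""
    bronze = []
    for records in sources:
        bronze.extend(records)
    return bronze


def to_silver(bronze):
    """Transform: filter, clean and standardize Bronze records into Silver."""
    silver = []
    for row in bronze:
        if not row.get("store"):
            continue  # filter: drop rows without a store
        try:
            amount = float(row["amount"])
        except (TypeError, ValueError):
            continue  # clean: drop rows whose amount cannot be parsed
        # standardize: trim whitespace and lowercase store names
        silver.append({"store": row["store"].strip().lower(), "amount": amount})
    return silver


def to_gold(silver):
    """Transform: aggregate Silver records into a business-level Gold table."""
    totals = defaultdict(float)
    for row in silver:
        totals[row["store"]] += row["amount"]
    return {store: round(total, 2) for store, total in sorted(totals.items())}


def orchestrate(steps, max_retries=2):
    """Orchestrate: run named steps in order, retrying a failed step before giving up."""
    result = None
    for name, step in steps:
        for attempt in range(max_retries + 1):
            try:
                result = step(result)
                break
            except Exception as exc:
                if attempt == max_retries:
                    raise RuntimeError(f"step {name!r} failed") from exc
    return result


gold = orchestrate([
    ("ingest", lambda _: ingest(RAW_EVENTS)),
    ("silver", to_silver),
    ("gold", to_gold),
])
print(gold)  # {'berlin': 25.0, 'paris': 10.0}
```

Each stage only reads the output of the previous one, which is the property the medallion layout is meant to give you: a failed or corrected Silver step can be rerun from Bronze without going back to the sources.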

The Shift to AI

With AI becoming more of a focus, new challenges are emerging across each of these functions, including:

- Handling real-time data: More companies need to process information immediately. This could be manufacturers using AI to optimize the health of their machines, banks trying to stop fraudulent activity, or retailers giving personalized offers to shoppers. The growth of these real-time data streams adds yet another asset that data engineers are responsible for.

- Scaling data pipelines reliably: The more data pipelines a business runs, the higher its costs. Without effective strategies to monitor and troubleshoot issues when they arise, internal teams will struggle to keep costs low and performance high.

- Ensuring data quality: The quality of the data entering the model will determine the quality of its outputs. Companies need high-quality data sets to deliver the end performance needed to move more AI systems into the real world.

- Governance and security: We hear it from businesses every day: data is everywhere. And increasingly, internal teams want to use the information locked in proprietary systems across the business for their own unique purposes. This has put new pressure on IT leaders to unify growing data estates and exert more control over which employees can access which assets.
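For the data quality challenge, one common pattern is to declare rules (often called expectations) and decide per rule whether a violating row is only counted, dropped, or stops the pipeline. The sketch below is a hypothetical pure-Python illustration of that idea; `Expectation`, `apply_expectations`, `DataQualityError` and the `"warn"`/`"drop"`/`"fail"` policy names are assumptions, not any product's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Expectation:
    """A named data quality rule plus what to do with rows that violate it (hypothetical)."""
    name: str
    check: Callable[[dict], bool]
    on_violation: str = "warn"  # one of "warn", "drop", "fail"


class DataQualityError(Exception):
    """Raised when a row violates an expectation whose policy is "fail"."""


def apply_expectations(rows, expectations):
    """Return the rows that are kept, plus a count of violations per rule."""
    kept = []
    violations = {e.name: 0 for e in expectations}
    for row in rows:
        keep = True
        for e in expectations:
            if e.check(row):
                continue
            violations[e.name] += 1  # every violation is counted, whatever the policy
            if e.on_violation == "fail":
                raise DataQualityError(f"{e.name} violated by {row!r}")
            if e.on_violation == "drop":
                keep = False
        if keep:
            kept.append(row)
    return kept, violations


rules = [
    Expectation("has_customer_id", lambda r: r.get("customer_id") is not None, "drop"),
    Expectation("non_negative_amount", lambda r: r.get("amount", 0) >= 0, "drop"),
    Expectation("known_currency", lambda r: r.get("currency") in {"USD", "EUR"}, "warn"),
]

rows = [
    {"customer_id": 1, "amount": 12.5, "currency": "USD"},
    {"customer_id": None, "amount": 3.0, "currency": "EUR"},
    {"customer_id": 2, "amount": -7.0, "currency": "USD"},
    {"customer_id": 3, "amount": 4.0, "currency": "GBP"},
]

clean, report = apply_expectations(rows, rules)
print(len(clean), report)
# 2 {'has_customer_id': 1, 'non_negative_amount': 1, 'known_currency': 1}
```

Keeping the violation counts even for dropped or merely flagged rows is the design choice that matters here: it turns data quality into a metric that can be monitored over time instead of rows that silently disappear before reaching a model.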

The Platform Approach

We built the Data Intelligence Platform to be able to address this diverse and growing set of challenges. Among the most critical features for engineering teams are:

- Delta Lake: Whether data is structured or unstructured, the open source storage format means it no longer matters what type of information the company is trying to ingest. Delta Lake helps businesses improve data quality and allows for easy and secure sharing with external partners. And now, with Delta Lake UniForm breaking down the barriers between Delta Lake, Apache Iceberg and Apache Hudi, enterprises can keep even tighter control of their assets.

- Delta Live Tables: A declarative ETL framework that helps engineering teams simplify both streaming and batch workloads, in Python or SQL, while lowering costs.

- Databricks Workflows: A simple, reliable orchestration solution for data and AI that provides engineering teams with enhanced control flow capabilities, advanced observability to monitor and visualize workflow execution, and serverless compute options for smart scaling and efficient task execution.

- Unity Catalog: With Unity Catalog, data engineering and governance teams benefit from an enterprise-wide data catalog with a single interface to manage permissions, centralize auditing, automatically track data lineage down to the column level, and share data across platforms, clouds and regions.
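Column-level lineage is essentially a directed graph from each derived column to the columns it was computed from, so "where did this value come from?" and "what breaks if this column changes?" become graph traversals. The hypothetical sketch below shows that idea in plain Python. It is not how Unity Catalog is implemented or exposed (the article notes that Unity Catalog tracks lineage automatically); the `LineageGraph` class and the three-part column names are invented for illustration.

```python
from collections import defaultdict


class LineageGraph:
    """Hypothetical column-level lineage: maps each column to the columns it is derived from."""

    def __init__(self):
        self._parents = defaultdict(set)

    def record(self, target, sources):
        """Record that column `target` is computed from the columns in `sources`."""
        self._parents[target].update(sources)

    def upstream(self, column):
        """Return every column that `column` depends on, directly or transitively."""
        seen = set()
        stack = [column]
        while stack:
            current = stack.pop()
            # .get() avoids inserting empty entries into the defaultdict
            for parent in self._parents.get(current, ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

    def downstream(self, column):
        """Return every recorded column that depends on `column` (impact analysis)."""
        return {c for c in list(self._parents) if column in self.upstream(c)}


lineage = LineageGraph()
lineage.record("silver.sales.amount_usd", ["bronze.sales.amount", "bronze.fx.rate"])
lineage.record("gold.store_revenue.total_usd", ["silver.sales.amount_usd"])

print(sorted(lineage.upstream("gold.store_revenue.total_usd")))
# ['bronze.fx.rate', 'bronze.sales.amount', 'silver.sales.amount_usd']
```

Tracking lineage per column rather than per table is what lets a question like "which Gold metrics are affected by a bad exchange-rate feed?" return only the columns that actually read `bronze.fx.rate`.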

To learn more about how to adapt your company’s engineering team to the needs of the AI era, check out the “Big Book of Data Engineering.”