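Baoyue Reading Guide Summary

1. Keywords: Nue framework, Anthropic, OpenAI, AI API, UX development

2. Summary: This article introduces the open source UX framework Nue and its features, prompt caching support in Anthropic’s AI API and what it is for, the new Structured Outputs feature in OpenAI’s API and its advantages, and software engineers’ views of AI as something like autocomplete or a junior programmer.

3. Main content:

– Nue framework
  – An open source web framework for UX developers
  – In beta and designed to be simpler
  – Aimed at several kinds of developers and related roles
  – Version 1.0 includes a global design system, an improved CSS stack and more
  – Offers an installation guide and a tutorial

– Anthropic’s Claude
  – Its AI API supports prompt caching
  – Frequently used context can be cached between API calls, reducing cost and latency
  – Applies to a range of scenarios

– OpenAI
  – The API adds Structured Outputs
  – Ensures model-generated output matches the JSON schema supplied by the developer
  – Offered in two forms, with the SDKs updated to support it
  – Lists some use cases and limitations

– Software engineers’ views of AI
  – Survey results show developers are not very impressed
  – Respondents compare it to autocomplete, a junior programmer or an intern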

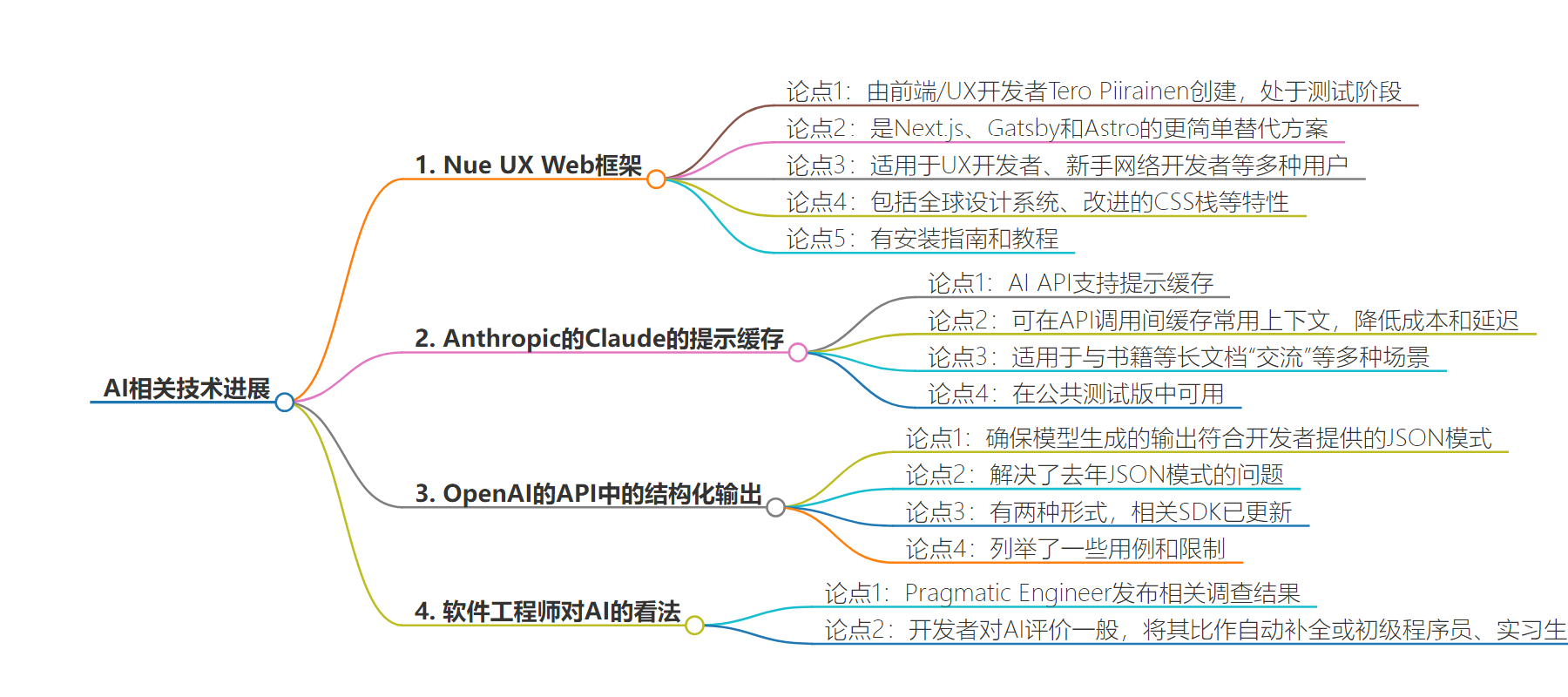

Mind map:

Article URL: https://thenewstack.io/a-nue-ux-web-framework-plus-anthropic-openai-boost-ai-apis/

Source: thenewstack.io

Author: Loraine Lawson

Published: 2024/8/16 15:54

Language: English

Word count: 907

Estimated reading time: 4 minutes

Score: 91

Tags: Web framework, UX development, AI API, Anthropic, OpenAI

The original article follows


Nue is an open source web framework for UX developers by frontend/UX developer Tero Piirainen. It’s now available in beta and is designed to be a “ridiculously simpler alternative” to Next.js, Gatsby and Astro, writes its creator.

“What used to take a React specialist and an absurd amount of JavaScript can now be done by a UX developer and a small amount of CSS,” Piirainen stated in an announcement Thursday.

It’s designed for UX developers, obviously, but he also listed other potential users: beginner web developers, experienced JS developers who are frustrated with the React stack, designers, teachers and parents.

Nue 1.0 includes a global design system, which lets UX developers quickly create different designs by using exactly the same markup between projects, according to the documentation.

It also has an improved CSS stack with a CSS theming system that supports hot-reloading, CSS inlining, error reporting and automatic dependency management.

In addition, Lightning CSS is enabled by default, library folders can be included on pages with a new include property, and a new exclude property strips unneeded assets from the request to lighten the payload.

The Nue site includes a three-step installation guide and a tutorial.

Anthropic’s Claude Introduces Prompt Caching

Anthropic’s AI API now supports prompt caching, which lets developers cache frequently used context between API calls. It is aimed at cases where developers have a large amount of prompt context that they want to refer to repeatedly across many requests.

“With prompt caching, customers can provide Claude with more background knowledge and example outputs — all while reducing costs by up to 90% and latency by up to 85% for long prompts,” the company stated.

The Anthropic documentation explains how prompt caching works. When a request is sent with prompt caching enabled, the system first checks if the prompt prefix is already cached from a recent query. If it’s found, then the system uses the cached version; if it’s not, then the full prompt is processed and the prefix cached for future use.
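The lookup described above can be sketched as a toy model. This is not Anthropic’s implementation: the `PrefixCache` class, the hashing scheme and the five-minute lifetime for "recent" entries are all assumptions made for illustration.

```python
import hashlib
import time


class PrefixCache:
    """Toy model of a prompt-prefix cache; all names here are hypothetical."""

    def __init__(self, ttl_seconds=300.0):
        # ttl_seconds is an assumed lifetime that defines a "recent" query.
        self.ttl_seconds = ttl_seconds
        self._last_used = {}  # prefix hash -> time the prefix was last seen

    def process(self, prefix, question, now=None):
        """Return ("hit" or "miss", number of characters processed in full)."""
        now = time.monotonic() if now is None else now
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        last = self._last_used.get(key)
        hit = last is not None and now - last <= self.ttl_seconds
        # A hit refreshes the entry; a miss stores the prefix for future use.
        self._last_used[key] = now
        if hit:
            return "hit", len(question)
        return "miss", len(prefix) + len(question)


cache = PrefixCache()
book = "Call me Ishmael. " * 1000
first = cache.process(book, "Who narrates?", now=0.0)
second = cache.process(book, "Where does it start?", now=10.0)
stale = cache.process(book, "Who is Ahab?", now=1000.0)
```

In this sketch the first call is a miss and pays for the whole prefix, the second call reuses it, and the third arrives after the assumed lifetime has passed and is processed in full again.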

As it turns out, it also reduces costs.
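A rough back-of-the-envelope calculation shows where the savings come from. The price multipliers below (a cache write billed at 1.25 times the base input price, a cache read at 0.10 times), the base price of $3 per million tokens and the token counts are assumptions for illustration, not figures from the article.

```python
def input_cost(prefix_tokens, question_tokens, calls, base_price_per_mtok,
               cached, write_multiplier=1.25, read_multiplier=0.10):
    """Estimate input cost in dollars for `calls` requests sharing one prefix.

    The multipliers are assumed values; check current pricing before relying on them.
    """
    question_total = question_tokens * calls
    if cached:
        # First call writes the prefix to the cache; later calls read it back.
        prefix_total = prefix_tokens * (write_multiplier + read_multiplier * (calls - 1))
    else:
        # Without caching, every call pays full price for the prefix.
        prefix_total = prefix_tokens * calls
    return (prefix_total + question_total) * base_price_per_mtok / 1_000_000


uncached = input_cost(100_000, 100, calls=10, base_price_per_mtok=3.0, cached=False)
with_cache = input_cost(100_000, 100, calls=10, base_price_per_mtok=3.0, cached=True)
savings = 1 - with_cache / uncached
```

Under these assumptions the saving grows with the number of calls that reuse the prefix, because the one-time write premium is spread over more cheap reads.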

As for uses, prompt caching makes it possible to “talk” to books or other long documents, which happens to be my personal favorite AI use case. With prompt caching, developers can bring books into the knowledge base by embedding the document into the prompt and letting users ask questions related to it.
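A request for this "talk to a book" pattern might be shaped roughly as follows. The sketch only builds the request body and sends nothing. The `build_book_request` helper is hypothetical, and the model name, the `cache_control` field and the beta header reflect the public beta as I understand it, so treat them as assumptions and check the current Anthropic documentation.

```python
def build_book_request(book_text, question, model="claude-3-5-sonnet-20240620"):
    """Build a Messages API request body that marks the book text as cacheable."""
    return {
        "model": model,
        "max_tokens": 1024,
        "system": [
            {"type": "text", "text": "Answer questions using only the book below."},
            {
                "type": "text",
                "text": book_text,
                # Assumed beta syntax: everything up to this block is the cached prefix.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes between calls, so the prefix stays identical.
        "messages": [{"role": "user", "content": question}],
    }


# Header the public beta required; an assumption if the feature has since changed.
BETA_HEADERS = {"anthropic-beta": "prompt-caching-2024-07-31"}

body_a = build_book_request("<full text of the book>", "Who is the narrator?")
body_b = build_book_request("<full text of the book>", "Where does the story begin?")
```

Keeping the book in the system prompt and the user question in `messages` means consecutive requests share an identical prefix, which is what makes a cache hit possible.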

But there are other uses as well, including creating conversational agents, coding assistants, large document processing or when there’s a detailed instruction set.

The blog post includes tables that show the latency improvements early customers saw with prompt caching. The feature is available in public beta for Claude 3.5 Sonnet and Claude 3 Haiku.

OpenAI Adds Structured Outputs in the API

This week, OpenAI added Structured Outputs to its API. The feature is designed to ensure model-generated outputs exactly match JSON schemas provided by developers, the company stated. It’s now generally available in the API.

This resolves a problem with the JSON mode introduced last year to improve model reliability. JSON mode generated valid JSON outputs but did not guarantee that the models’ response would conform to a particular schema.
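The gap between "valid JSON" and "conforms to a schema" can be illustrated with a small check. The `conforms` helper below is a hypothetical validator that covers only a tiny subset of JSON Schema, and both replies are invented examples rather than real model output.

```python
import json


def conforms(value, schema):
    """Check a value against a tiny subset of JSON Schema (object, string, integer)."""
    kind = schema["type"]
    if kind == "string":
        return isinstance(value, str)
    if kind == "integer":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, int) and not isinstance(value, bool)
    if kind == "object":
        if not isinstance(value, dict):
            return False
        props = schema.get("properties", {})
        if any(name not in value for name in schema.get("required", [])):
            return False
        if schema.get("additionalProperties") is False and set(value) - set(props):
            return False
        return all(conforms(value[k], props[k]) for k in value if k in props)
    raise ValueError(f"unsupported type: {kind}")


PERSON_SCHEMA = {
    "type": "object",
    "properties": {"name": {"type": "string"}, "age": {"type": "integer"}},
    "required": ["name", "age"],
    "additionalProperties": False,
}

# Both replies parse as JSON, but only the second one follows the schema.
reply_a = json.loads('{"name": "Ada", "years": "thirty-six"}')
reply_b = json.loads('{"name": "Ada", "age": 36}')
ok_a = conforms(reply_a, PERSON_SCHEMA)
ok_b = conforms(reply_b, PERSON_SCHEMA)
```

JSON mode alone guarantees only that the parse step succeeds; the schema check is the part developers previously had to enforce themselves with validation and retries.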

“Generating structured data from unstructured inputs is one of the core use cases for AI in today’s applications,” OpenAI noted, but developers have had to use open source tools, prompting and retry requests to work with LLM limitations in this area. “Structured Outputs solves this problem by constraining OpenAI models to match developer-supplied schemas and by training our models to better understand complicated schemas.”

The new model scored a perfect 100% on the company’s evaluation of complex JSON schema following, compared with less than 40% for an earlier GPT model without Structured Outputs, the company stated.

This capability is available in two forms: as function calling and as a new option for the response_format parameter. The Python and Node SDKs have also been updated with native support for Structured Outputs.
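The two forms can be compared side by side as request bodies. This sketch only builds dictionaries and calls no API. The helper names and the calendar-event schema are hypothetical, and the field layout (`strict`, `json_schema`, `tools`) and model name reflect my understanding of the Chat Completions API at launch, so verify them against the OpenAI documentation.

```python
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "date": {"type": "string"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    # Assumed strict-mode requirements: every field required, no extra fields.
    "required": ["title", "date", "attendees"],
    "additionalProperties": False,
}


def via_response_format(user_text, model="gpt-4o-2024-08-06"):
    """Form 1: the model's reply itself must match the schema."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "calendar_event", "strict": True, "schema": EVENT_SCHEMA},
        },
    }


def via_function_call(user_text, model="gpt-4o-2024-08-06"):
    """Form 2: the arguments of a tool call must match the schema."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
        "tools": [{
            "type": "function",
            "function": {
                "name": "create_event",
                "description": "Create a calendar event.",
                "strict": True,
                "parameters": EVENT_SCHEMA,
            },
        }],
    }


text = "Alice and Bob are meeting for lunch on Friday."
format_body = via_response_format(text)
tool_body = via_function_call(text)
```

The response_format form suits cases where the structured object is the answer shown to the user, while the function-calling form suits cases where the model is driving a tool in the application.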

Some use cases for this cited by OpenAI are:

- Dynamically generating user interfaces based on the user’s intent;

- Separating a final answer from supporting reasoning or additional commentary;

- Extracting structured data from unstructured data.
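As an illustration of the second use case, a schema can give the reasoning and the final answer separate fields so an application can show only the answer. The schema, the `Answer` class and the sample reply below are invented for this sketch and are not taken from OpenAI’s post.

```python
import json
from dataclasses import dataclass

# Hypothetical schema that keeps reasoning and the final answer in separate fields.
REASONING_SCHEMA = {
    "type": "object",
    "properties": {
        "reasoning": {"type": "array", "items": {"type": "string"}},
        "final_answer": {"type": "string"},
    },
    "required": ["reasoning", "final_answer"],
    "additionalProperties": False,
}


@dataclass
class Answer:
    reasoning: list
    final_answer: str


def parse_answer(raw):
    """Parse a reply that is assumed to already conform to REASONING_SCHEMA."""
    data = json.loads(raw)
    return Answer(reasoning=list(data["reasoning"]), final_answer=data["final_answer"])


# Invented reply for the equation 8x + 7 = -23.
sample = json.dumps({
    "reasoning": ["Subtract 7 from both sides: 8x = -30", "Divide both sides by 8"],
    "final_answer": "x = -3.75",
})
answer = parse_answer(sample)
shown_to_user = answer.final_answer
```

Because the schema guarantees both fields exist, the application can log `answer.reasoning` for debugging and display only `shown_to_user` without any string parsing.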

The blog post outlines how OpenAI achieved this and explains alternative approaches to the problem. It also lists the limitations of Structured Outputs, including that it allows only a subset of JSON schema. There’s also documentation for implementing Structured Outputs.

Survey Respondent: AI Like ‘Auto Complete’


While we’re on the topic of AI, the Pragmatic Engineer published the results of an AI survey on how software engineers are using large language models. The site shared the results in their newsletter and blog. The survey was completed by 211 tech professionals, which is not a lot, but the results are fun to read. It turns out, developers are not that impressed with AI, with respondents comparing it to autocomplete or being paired with a junior programmer.

One respondent even compared it to an intern.

“It’s like having a really eager, fast intern as an assistant, who’s really good at looking stuff up, but has no wisdom or awareness,” the anonymous respondent wrote.
