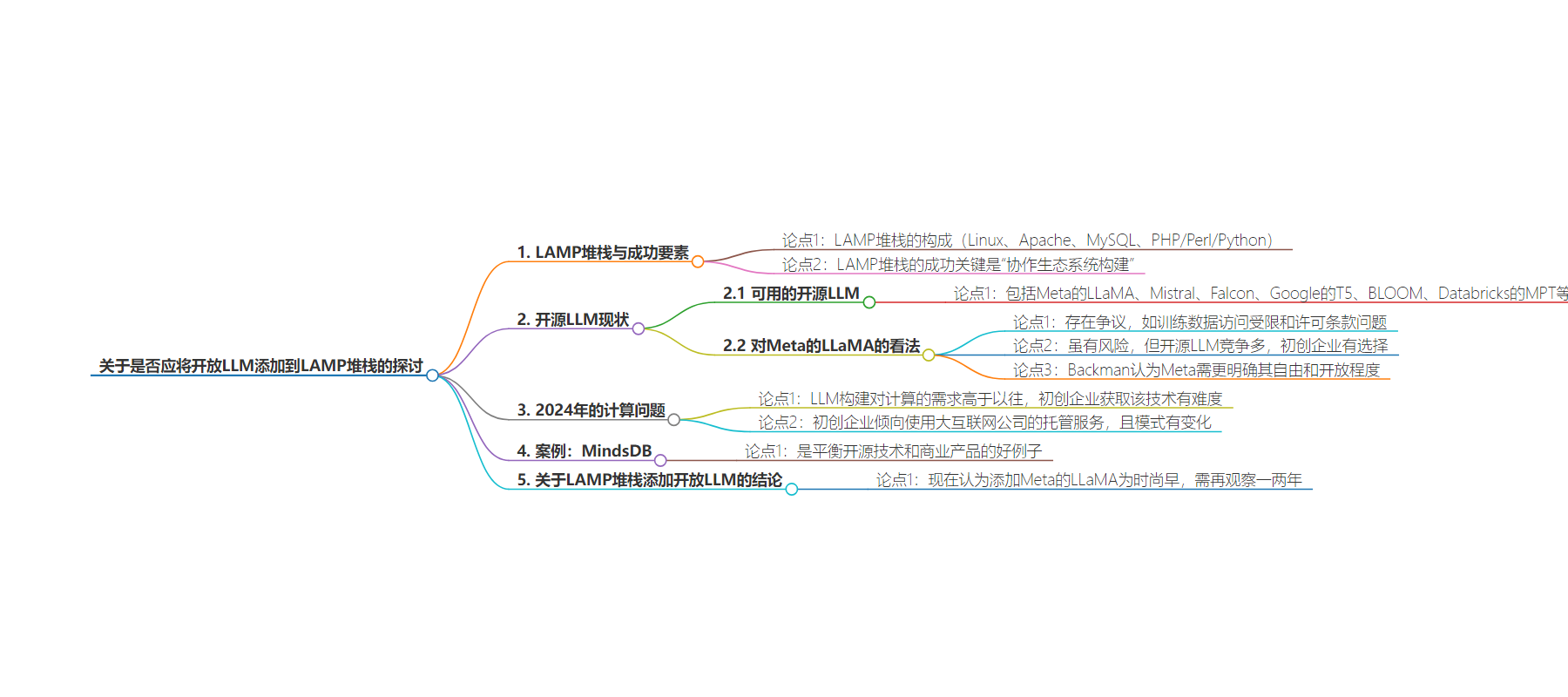

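Baoyue reading guide summary

1. Keywords: LAMP stack, open source, LLM, AI, collaborative ecosystem

2. Summary: The article asks whether an open source LLM should be added to the LAMP stack. It covers the factors behind the LAMP stack's success, examines the current state of open source LLMs (their openness, community collaboration, and compute requirements), and uses MindsDB as an example of balancing open source with a commercial business.

3. Main points:

– Background of the LAMP stack and the factors behind its success

– An acronym for Linux, Apache, MySQL, and PHP/Perl/Python; it drove the growth of the web

– “Collaborative ecosystem building” was the key to its success

– The current state of open source LLMs

– Includes models such as Meta's LLaMA; their openness and collaboration are disputed

– There are risks, but competition is strong, so startups have choices

– The compute problem

– Building advanced AI models is compute-intensive, which makes the technology harder for startups to access

– Case study: MindsDB

– Balances open source technology with a commercial product

– Conclusion on whether to add an open source LLM to the LAMP stack

– It is too early to endorse Meta's LLaMA; another year or two of observation is needed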

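Article URL: https://thenewstack.io/should-the-lamp-stack-add-an-open-llm-like-metas-llama/

Source: thenewstack.io

Author: Richard MacManus

Published: 2024/8/12 15:09

Language: English

Word count: 1,280

Estimated reading time: 6 minutes

Score: 90

Tags: LAMP stack, open source large language models, Meta's LLaMA, AI in web development, open source community

The original article follows.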

Patrik Backman knows a thing or two about open source software and the impact of the LAMP stack on the early web. He was a co-founder of MariaDB and before that the director of software engineering at MySQL, the original “M” in the LAMP stack — although many argue that MariaDB is the “M” these days. Backman is now a general partner at European VC firm, OpenOcean, and continues to be involved in open source technologies (he’s still on the board of MariaDB). Recently, he’s been on the lookout for open source generative AI products that could be added to the LAMP stack.

The LAMP stack, in case you’ve been living under an online rock, takes its name from an acronym for Linux, Apache, MySQL, and PHP/Perl/Python. The term was coined at the end of 1998 to denote a set of open source solutions for building web applications. The LAMP stack was what led to the Dot Com boom and the Web 2.0 revolution that followed, because it enabled startups to leverage low-cost, open technologies to build new products.

Backman, who was along for the ride with MySQL and then MariaDB, told me that “collaborative ecosystem building” was a key part of what made the LAMP stack successful.

“In the early days, [we] thought that it’s natural to have all these open source events and so forth, to both meet with the key people from these other initiatives and projects, but also collaborate on packaging things well together, open documentation, open development principles, open bug reports, and development plans.”

Perhaps the biggest question around the LAMP stack now, and whether AI fits into this template, is whether the current open source solutions for AI are even capable of community building and collaboration. For example, before we even think about adding Meta’s LLaMA models to the LAMP stack — LAMPL? — we first need to figure out whether they’re open enough. And secondly, can Meta play well with the open source community?

Can Developers Trust Open Source LLMs?

Meta’s LLaMA models aren’t the only open source large language models available. Some of the other prominent ones include Mistral, Falcon, Google’s T5, BLOOM, and Databricks’ MPT models (previously known as MosaicML MPT). There are many more listed on Hugging Face’s open LLM leaderboard.

Meta’s models are probably the best-known and most widely used open source LLMs, but they’re also the most controversial. Some have claimed that the LLaMA models aren’t truly open source, because of limited access to training data and other issues, such as licensing terms that encourage development within Meta’s ecosystem (rather than allowing unrestricted use and modification, as is typical of open source software). Meta also imposes commercial restrictions on LLaMA model use. I asked Backman what he thinks of Meta’s LLaMA.

Firstly, Backman agrees with Mark Zuckerberg that the future of AI will be open source. “The technologies around AI are moving at an enormous speed and to innovate in the best way, the open source approach is the winning one,” he told me.

But does he think there is a danger of startups building on LLaMA, given the limitations of the license and Meta’s control over it?

Backman replied that although there are such risks, ultimately he thinks there is enough open source LLM competition — he specifically mentioned Mistral AI from France — and so startups have plenty of choices.

“I think there will be many open source players competing, which also restricts what Meta / Facebook can do with LLaMA,” he said. “I do think that […] for it to really succeed, they [Meta] will maybe need to be a bit clearer on the freedom and the openness of the initiative. But, of course, the community needs everyone participating and working […] to put tougher requirements and requests on the openness side.”

On a podcast earlier this year, Backman made it clear that he thinks companies running open source projects are entitled to create whatever license they feel is best for their business. “I think what should be recognized, and understood, and respected is that, indeed, anyone building software, even providing a free and open-source software, they should be allowed to have a credible and sustainable business model in order for them to be able to maintain and further develop it,” he told the Open Source Underdogs podcast. “But, then, it’s about what kind of balance you want to strike with what is free and what is commercial, and that’s where the license comes in.”

Compute an Issue in 2024

Even if we added an open LLM to the LAMP stack, there is a big question about whether startups can easily access this technology, due to the massive amount of compute that LLMs require.

“Of course, now for building advanced AI models, the compute side is way more demanding than before,” said Backman. He suggested that access to compute, and the ability to afford it, makes it trickier for a startup than (for example) standing up a free Apache server on a Linux machine and plugging in MySQL, back in the day.

I asked whether the startups he deals with tend to use managed services from the big internet companies for their compute needs. He replied that they do, but that the pattern has changed from five to ten years ago. Previously, startups tended to pick one cloud provider over the others, he said, but these days they will pit the providers against each other.

“Now, there’s kind of a knowledge that, hey, you have to be able to support them [different cloud providers] in parallel, to be able to pit them against each other and [have them] compete with each other, so that you keep prices low, you get credits, and this and that support from them.”

An Example: MindsDB

One of the startups Backman’s VC firm has invested in is MindsDB, a self-described “AI data automation platform.” Like a lot of recent enterprise-focused AI products, the goal of MindsDB is to help organizations meld generative AI technology with their own in-house data.

“If you develop AI models that use your own proprietary data, then it’s the best platform out there,” said Backman, adding that MindsDB has had over 300,000 deployments so far and is “growing very fast.”

Backman contends that MindsDB is a good example of a startup striking the balance between open source technologies and commercial offerings.

“It’s a balance of providing a great stack — open source, freely available, good documentation, good use cases, good examples, and so forth — while [also] having a commercial cloud offering that we […] then build a business around.”

The LAMP Question

Returning to the question I posed at the start of this article: should we add an open LLM to the LAMP stack?

Based on what I learned from Patrik Backman and what we currently know about the LLaMA license, I think it’s too early to give Meta the benefit of the doubt. But let’s give it another year or two to see if LLaMA matures as an open source solution, or whether one of its competitors emerges to become a LAMP-worthy product.
