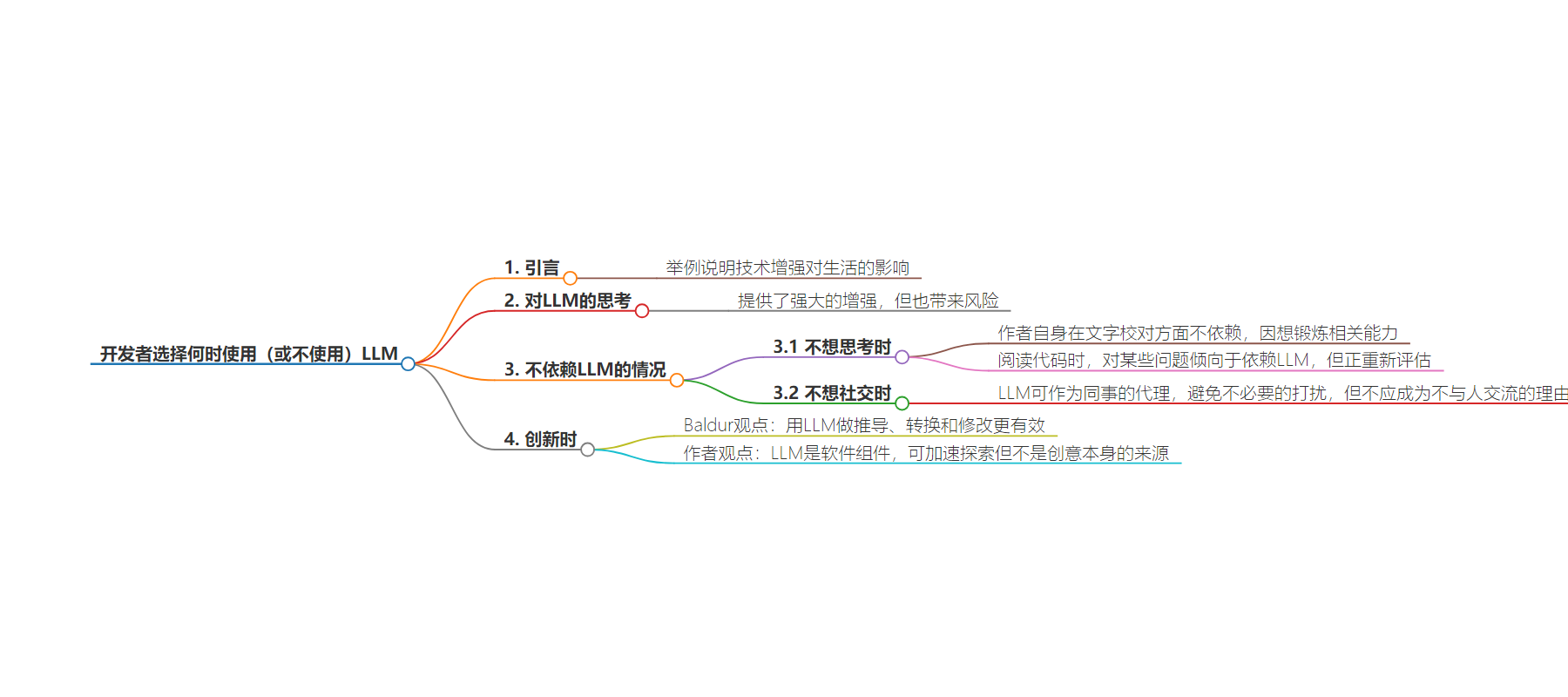

包阅 Reading Guide Summary

1. Keywords: LLMs, augmentation, perils, thinking, creativity

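2. Summary: This article explores when developers should and should not use LLMs. It notes that LLMs bring augmentation along with perils, such as lazy thinking. It examines LLM use in three situations (when you don't want to think, when you don't want to be social, and when you need to be creative) and stresses using LLMs judiciously rather than depending on them too heavily.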

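3. Main points:

– Opens with the phone-navigation example to introduce the topic of technological augmentation

– Notes that LLMs bring augmentation along with an array of perils

– Argues against going straight to a search engine or an LLM for an answer; think first

– Uses Martin Luther King as an example of the power of the unaugmented mind

– Describes the author's own use of LLMs for proofreading and for reading code

– Explains that LLM-backed developer tools can spare coworkers from interruption, but should not become a reason to avoid social contact

– Cites the view that generative AI is best used not for generation but for derivation, conversion, and modification

– The author regards LLMs as software components; the creative idea came from him, not from the LLMs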

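Article URL: https://thenewstack.io/choosing-when-to-use-or-not-use-llms-as-a-developer/

Source: thenewstack.io

Author: Jon Udell

Published: 2024/8/7 21:00

Language: English

Word count: 1357

Estimated reading time: 6 minutes

Score: 87

Tags: large language models, developer tools, cognitive augmentation, AI ethics, creative process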

The original article follows.

“My daughter will never know what it’s like to be lost.” I forget who said that about 10 years ago, but the message sank in and resonates more deeply as we are ever more profoundly augmented by tech. The speaker was referring, of course, to the fact that almost nobody younger than 15 (now 25) is ever without the handheld GPS wayfinding device that we anachronistically call a phone.

Of course, zooming and panning the small screen of a mobile device is a terrible way to visualize paths through a city or landscape. Our brains are built to absorb patterns from much larger visual fields. A small screen can’t compete with a real map that you can unfold and scan in a holistic way. Nor does following turn-by-turn directions help you build the mental map of a region that enables you to visualize relationships among arbitrary locations. That’s why, if the stakes for getting lost are low — or when getting lost is actually the goal! — I like to leave the phone in my pocket and practice dead reckoning.

In a similar vein, I’ve long been annoyed by the snarky “Let me google that for you” (now, of course, also “Let me GPT that for you”) response to a question. There’s a kind of shaming going on here. We are augmented with superpowers, people imply, so you’re an idiot to not use them.

Well sure, we can always look things up. But going there directly is a conversation killer. Can’t we pause, put our heads together and consider what inboard knowledge we may hold individually, or can collectively synthesize, before consulting the outboard brains?

Here’s my favorite tale about the power of the unaugmented mind. In “Behind the Dream,” Clarence Jones (Martin Luther King’s lawyer and adviser) reveals that the “Letter from Birmingham Jail” was written without access to books.

“What amazed me was that there was absolutely no reference material for Martin to draw upon. There he was pulling quote after quote from thin air. The Bible, yes, as might be expected from a Baptist minister, but also British prime minister William Gladstone, Mahatma Gandhi, William Shakespeare, and St. Augustine.”

He adds:

“Martin could remember exact phrases from several of his unrelated speeches and discover a new way of linking them together as if they were all parts of a singular ever-evolving speech. And he could do it on the fly.”

MLK’s power flowed in part from his ability to reconfigure a well-stocked working memory and scan it like a big map. He’d arguably have been far less effective if he had to consult outboard sources serially in small context windows.

Fast-forward to now. LLMs offer a level of augmentation that I wasn’t sure I’d live to see. They also bring an array of perils, many of which are ably documented in Baldur Bjarnason’s fiercely critical “The Intelligence Illusion,” including the one I’m addressing here:

“We’re using the AI tools for cognitive assistance. This means that we are specifically using them to think less.”

Full disclosure: Baldur and I speak often, though not much about AI because we are far apart on the kinds of benefits that I claim to regularly experience and have been documenting in this column. But on this key point we agree. And, mea culpa, I have sometimes found myself getting lazy about exercising the muscle between my ears. So, when not to LLM?

When You Don’t Want To Think

I’ve written a lot of code over the years, but I am first and foremost a writer of prose. I’m also a spectacularly good copy editor because I rarely fail to spot misspellings and errors of punctuation and grammar. This can be an annoying superpower because even a few such unignorable errors interrupt my flow when reading for business or pleasure. It means, though, that spellchecking is one form of augmentation I’ve never come to rely on.

LLMs make pretty good proofreaders, because they grok higher-order patterns. But I don’t rely on them for that purpose either. I want to keep exercising my proofing and copy-editing muscles. And rereading prose for which I’m responsible — mine or that of a colleague — is just intrinsically valuable. There are always ways to improve a piece of writing.

I’m wired differently when it comes to reading code. A bug caused by inconsistent spelling of a variable doesn’t jump out at me in the same way that misspellings in prose do. Neither does a logical error. I’ve noticed that many of my more code-oriented colleagues catch misspellings, and structural problems, more readily in code than they do in prose. Because reading code doesn’t come as easily to me as to others, I’ve tended to lean on LLMs for help. Now I’m reevaluating.

For certain things, the LLM is a clear win. If I’m looking at an invalid blob of JSON that won’t even parse, there’s no reason to avoid augmentation. My brain isn’t a fuzzy parser — I’m just not wired to see that kind of problem, and that isn’t likely to change with effort and practice. But if there are structural problems with code, I need to think about them before reaching for assistance. That’s a skill that can improve with effort and practice.
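To make the "invalid blob of JSON" case concrete, here is a minimal sketch in Python using only the standard library's `json` module. It shows the kind of report a parser gives when a blob won't parse. The helper name `locate_json_error` and the sample blob are hypothetical illustrations, not something from the column. Note that the reported position is where the parser gave up, which is not always where the mistake was made.

```python
import json
from typing import Optional


def locate_json_error(text: str) -> Optional[str]:
    """Describe the first syntax error in text, or return None if it parses.

    Hypothetical helper for illustration; it only wraps json.loads.
    """
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        lines = text.splitlines()
        # err.lineno and err.colno are 1-based positions of the failure.
        bad_line = lines[err.lineno - 1] if err.lineno <= len(lines) else ""
        pointer = " " * (err.colno - 1) + "^"
        return (
            f"{err.msg} at line {err.lineno}, column {err.colno}\n"
            f"{bad_line}\n{pointer}"
        )
    return None


# Hypothetical sample: a comma is missing between the two array items.
blob = '{"name": "Ada",\n "langs": ["python" "sql"]}'

if __name__ == "__main__":
    print(locate_json_error(blob))
```

Whether to read such a report yourself or hand the blob to an assistant is the judgment call the paragraph above describes.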

When You Don’t Want To Be Social

We all know that knowledge workers, and especially software developers, need to achieve mental flow supported by rich context that’s hard to assemble and tragically vulnerable to interruption. Because LLM-backed developer tools like Cody and Unblocked mine local knowledge — your code, your documentation — they can serve as proxies for coworkers, and thus shields that protect them from interruption. Even if you haven’t tried these specialized tools, you’ve likely achieved the same effect relative to the world knowledge marshaled by ChatGPT and Claude. It’s a real and important benefit.

But let’s not go overboard. Programmers are stereotypically not the most social people. When LLMs help us avoid unnecessarily interrupting others’ flow? Great. When they provide one more reason not to talk to people we should be talking to? Not great.

When You Need To Be Creative

Baldur nails the paradox:

“The most productive way to use generative AI is to not use it as generative AI.”

Instead, he argues we should use it “primarily for derivation, conversion, and modification.” Here too we agree. The uses I’ve described in this column — to which I ascribe more value than does Baldur — belong in that category.

The hybrid solution I arrived at in my last column, Human Insight + LLM Grunt Work = Creative Publishing Solution, was, on the other hand, if not completely novel, then at least rare enough to not appear in the literature absorbed (at that time) by the language models. I claim it was the kind of creative act that transcends derivation, conversion, and modification.

It’s true that my use of LLMs to help implement the idea was, arguably, another form of creativity. For me, LLMs are software components, and my strongest superpower in the technical realm has always been finding novel ways to use and remix such components. Learning to work effectively with this new breed of insanely powerful pattern recognizers and transformers affords plenty of scope for that kind of innovation.

But the idea itself didn’t, and wouldn’t, come directly from my team of assistants. They played a supporting role, by accelerating my exploration of conversion strategies that ultimately proved to be dead ends. When the idea came to me, though, it did not appear on a screen in a conversation with Claude or ChatGPT: It appeared in my head while hiking up a mountain.
