包阅导读总结

1.

关键词:Mistral AI、语言模型、Apache 2.0 许可、性能优势、模型应用

2.

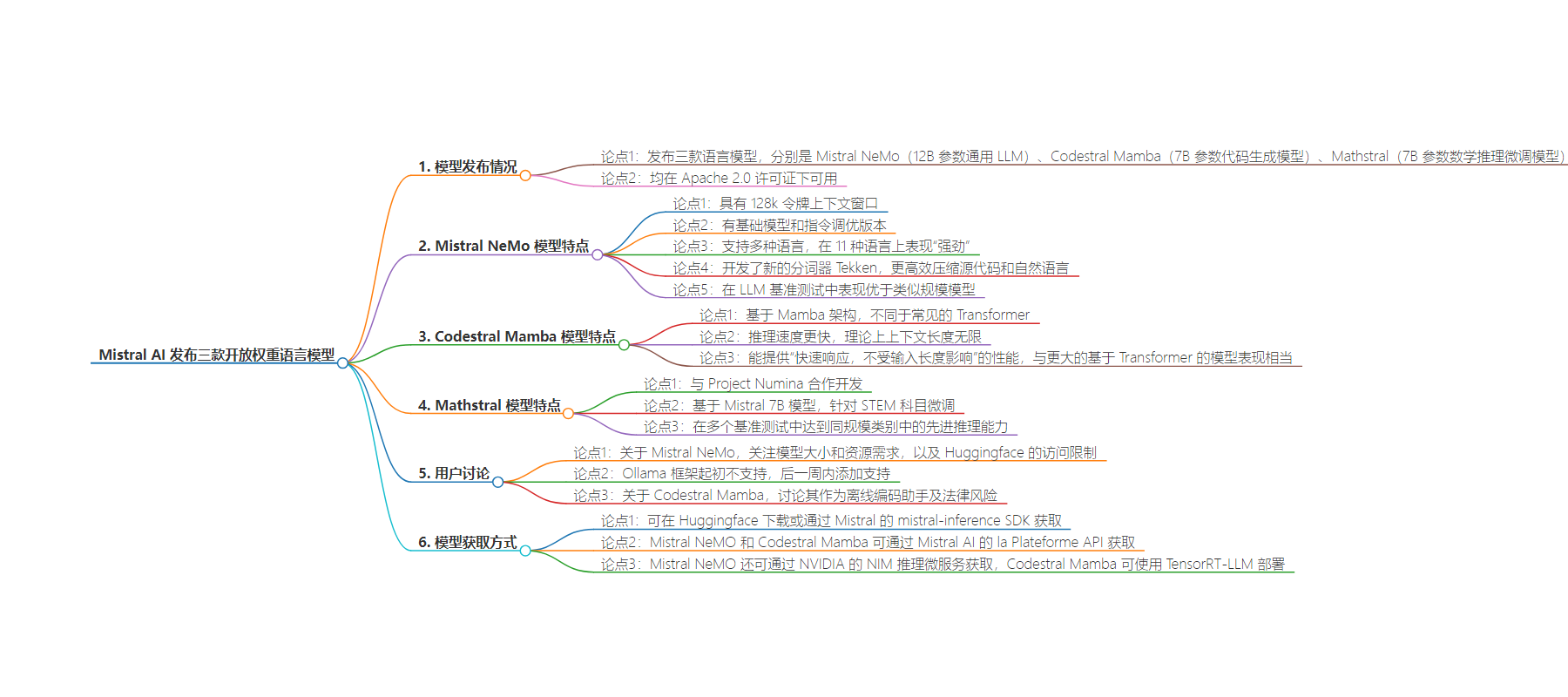

总结:Mistral AI 发布了三款开放权重语言模型,包括 Mistral NeMo、Codestral Mamba 和 Mathstral,均在 Apache 2.0 许可下可用,具有各自特点和优势,模型可通过多种方式获取和部署。

3.

主要内容:

– Mistral AI 发布三款语言模型

– Mistral NeMo:12B 参数通用 LLM,128k 令牌上下文窗口,有基础和指令调优版本,支持多语言,新 tokenizer,在多项基准测试中表现出色

– Codestral Mamba:7B 参数代码生成模型,基于 Mamba 架构,推理速度快,理论上上下文长度无限

– Mathstral:7B 参数,与 Project Numina 合作,针对数学和推理微调,在多项基准测试中表现良好

– 模型特点与优势

– NeMo 在多项测试中超越同类规模模型

– Mamba 模型推理速度快,能快速响应不同输入长度

– Mathstral 在其规模类别中推理能力达到先进水平

– 模型获取与部署

– 可在 Huggingface 下载或通过 Mistral 的 SDK

– NeMo 和 Mamba 可通过 Mistral AI 的 API

– NeMo 还可通过 NVIDIA 的服务,Mamba 可使用 TensorRT-LLM 部署

思维导图:

文章来源:infoq.com

作者:Anthony Alford

发布时间:2024/8/6 0:00

语言:英文

总字数:565字

预计阅读时间:3分钟

评分:88分

标签:语言模型,Mistral AI,代码生成,数学推理,Mamba 架构

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Mistral AI released three open-weight language models: Mistral NeMo, a 12B parameter general-purpose LLM; Codestral Mamba, a 7B parameter code-generation model; and Mathstral, a 7B parameter model fine-tuned for math and reasoning. All three models are available under the Apache 2.0 license.

Mistral AI calls NeMo their “new best small model.” The model has a 128k token context window and is available in both a base model and an instruct-tuned version. Mistral NeMO supports multiple languages, with “strong” performance on 11 languages including Chinese, Japanese, Arabic, and Hindi. Mistral developed a new tokenizer for the model, called Tekken, which has more efficient compression of source code and natural language. On LLM benchmarks such as MMLU and Winogrande, Mistral NeMO outperforms similarly-sized models, including Gemma 2 9B and Llama 3 8B.

Codestral Mamba is based on the Mamba architecture, an alternative to the more common Transformer that most LLMs are derived from. Mamba models offer faster inference than Transformers and theoretically infinite context length. Mistral touts their model’s ability to provide users with “quick responses, irrespective of the input length” and performance “on par” with larger Transformer-based models such as CodeLlama 34B.

Mathstral was developed in collaboration with Project Numina, a non-profit organization dedicated to fostering AI for mathematics. It is based on the Mistral 7B model and is fine-tuned for performance in STEM subjects. According to Mistral AI, Mathstral “achieves state-of-the-art reasoning capacities in its size category” on several benchmarks, including 63.47% on MMLU and 56.6% on MATH.

In a discussion on Hacker News about Mistral NeMo, one user pointed out that:

[The model features] an improvement at just about everything, right? Large context, permissive license, should have good perf. The one thing I can’t tell is how big 12B is going to be (read: how much VRAM/RAM is this thing going to need). Annoyingly and rather confusingly for a model under Apache 2.0, [Huggingface] refuses to show me files unless I login and “You need to agree to share your contact information to access this model”…though if it’s actually as good as it looks, I give it hours before it’s reposted without that restriction, which Apache 2.0 allows.

Other users pointed out that, at release time, the model was not supported by the popular Ollama framework, because Mistral NeMo used a new tokenizer. However, the Ollama devs added support for NeMo in less than a week.

Hacker News users also discussed Codestral Mamba, speculating whether it would be a good solution for an “offline” or locally hosted coding assistant. One user wrote:

I don’t have a gut feel for how much difference the Mamba arch makes to inference speed, nor how much quantisation is likely to ruin things, but as a rough comparison Mistral-7B at 4 bits per param is very usable on CPU. The issue with using any local models for code generation comes up with doing so in a professional context: you lose any infrastructure the provider might have for avoiding regurgitation of copyright code, so there’s a legal risk there. That might not be a barrier in your context, but in my day-to-day it certainly is.

The new models are available for download on Huggingface or via Mistral’s mistral-inference SDK. Mistral NeMO and Codestral Mamba are available via Mistral AI’s la Plateforme API. Mistral NeMO is further available via NVIDIA’s NIM inference microservice, and Codestral Mamba can be deployed using TensorRT-LLM.