包阅导读总结

1. 关键词:Kubernetes、AI 推理、可扩展性、资源优化、容错性

2. 总结:本文介绍了使用 Kubernetes 进行 AI 推理的优势,包括可扩展性、资源优化、性能优化、可移植性和容错性,强调其对满足 AI 推理需求的重要性。

3. 主要内容:

– 可扩展性

– 三种原生自动缩放机制:Horizontal Pod Autoscaler(HPA)、Vertical Pod Autoscaler(VPA)和 Cluster Autoscaler(CA)。

– HPA 根据指标缩放运行应用或模型的 Pod 数量。

– VPA 调整 Pod 中容器的资源需求和限制。

– CA 调整集群的计算资源池。

– 资源优化

– 有效分配资源。

– 详细控制资源的“限制”和“请求”。

– 利用自动缩放节省成本。

– 性能优化

– HPA、VPA 和 CA 有助于改进推理性能。

– 可使用额外工具控制和预测性能。

– 可移植性

– 容器化技术将模型和应用及其依赖打包成可移植容器。

– 支持多环境,包括多云和混合环境。

– 容错性

– Pod 级和节点级的容错机制。

– 支持滚动更新。

– 就绪和活性探针。

– 集群自愈。

– 结论

– 强调组织采用 Kubernetes 处理大规模 AI 推理的重要性。

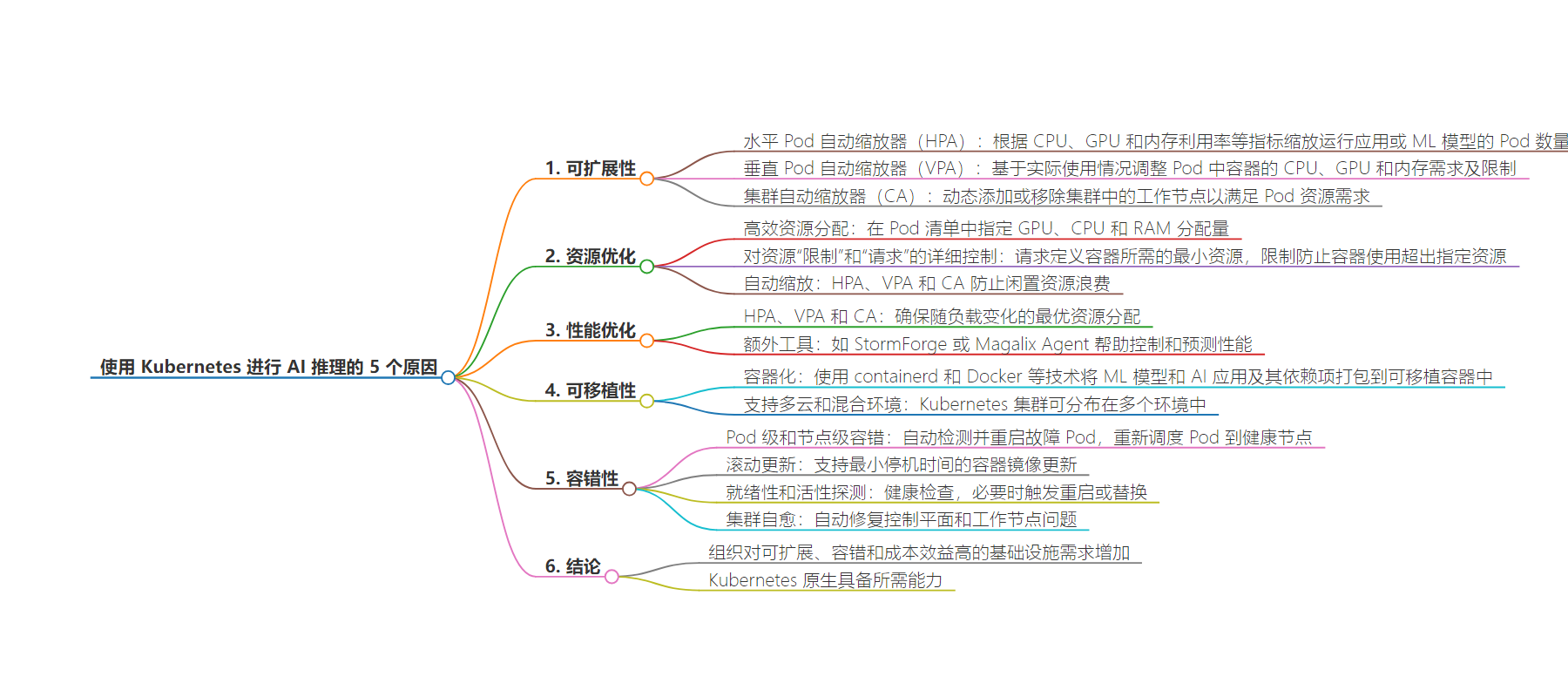

思维导图:

文章地址:https://thenewstack.io/5-reasons-to-use-kubernetes-for-ai-inference/

文章来源:thenewstack.io

作者:Zulyar Ilakhunov

发布时间:2024/7/23 13:25

语言:英文

总字数:1101字

预计阅读时间:5分钟

评分:90分

标签:Kubernetes,AI 推理,可扩展性,资源优化,性能优化

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Many of the key features of Kubernetes naturally fit the needs of AI inference, whether it’s AI-enabled microservices or ML models, almost as if they were invented for the purpose. Let’s look at these features and how they benefit inference workloads.

1. Scalability

Scalability for AI-enabled applications and ML models ensures they can handle as much load as needed, such as the number of concurrent user requests. Kubernetes has three native autoscaling mechanisms, each benefiting scalability: Horizontal Pod Autoscaler (HPA), Vertical Pod Autoscaler (VPA) and Cluster Autoscaler (CA).

- Horizontal Pod Autoscaler scales the number of pods running applications or ML models based on various metrics such as CPU, GPU and memory utilization. When demand increases, such as a spike in user requests, HPA scales resources up. When the load decreases, HPA scales the resources down.

- Vertical Pod Autoscaler adjusts the CPU, GPU, and memory requirements and limits for containers in a pod based on their actual usage. By changing

limitsin a pod specification, you can control the amount of specific resources the pod can receive. It’s useful for maximizing the utilization of each available resource on your node. - Cluster Autoscaler adjusts the total pool of compute resources available across the cluster to meet workload demands. It dynamically adds or removes worker nodes from the cluster based on the resource needs of the pods. That’s why CA is essential for inferencing large ML models with a large user base.

Here are the key benefits of K8s scalability for AI inference:

2. Resource Optimization

By thoroughly optimizing resource utilization for inference workloads, you can provide them with the appropriate amount of resources. This saves you money, which is particularly important when renting often-expensive GPUs. The key Kubernetes features that allow you to optimize resource usage for inference workloads are efficient resource allocation, detailed control over limits and requests, and autoscaling.

- Efficient resource allocation: You can allocate a specific amount of GPU, CPU and RAM to pods by specifying it in a pod manifest. However, only NVIDIA accelerators currently support time-slicing and multi-instance partitioning of GPUs. If you use Intel or AMD accelerators, pods can only request entire GPUs.

- Detailed control over resource “limits” and “requests”:The

requestsdefine the minimum resources a container needs, whilelimitsprevent a container from using more than the specified resources. This provides granular control over computing resources. - Autoscaling: HPA, VPA and CA prevent wasting money on idle resources. If you configure these features correctly, you won’t have any idle resources at all.

With these Kubernetes capabilities, your workloads receive the computing power they need and no more. Since renting a mid-range GPU in the cloud can cost $1 to $2 per hour, you save a significant amount of money in the long run.

3. Performance Optimization

While AI inference is typically less resource-intensive than training, it still requires GPU and other computing resources to run efficiently. HPA, VPA and CA are the key contributors to Kubernetes’ ability to improve inference performance. They ensure optimal resource allocation for AI-enabled applications even as the load changes. However, you can use additional tools to help you control and predict the performance of your AI workloads, such as StormForge or Magalix Agent.

Overall, Kubernetes’ elasticity and ability to fine-tune resource usage allows you to achieve optimal performance for your AI applications, regardless of their size and load.

4. Portability

It’s critical that AI workloads, such as ML models, are portable. This allows you to run them consistently across environments without worrying about infrastructure differences, saving you time and, consequently, money. Kubernetes enables portability primarily through two built-in features: containerization and compatibility with any environment.

- Containerization: Kubernetes uses containerization technologies, like containerd and Docker, to package ML models and AI-enabled applications, along with their dependencies, into portable containers. You can then use those containers on any cluster, in any environment, and even with other container orchestration tools.

- Support for multicloud and hybrid environments: Kubernetes clusters can be distributed across multiple environments, including public clouds, private clouds and on-premises infrastructure. This gives you flexibility and reduces vendor lock-in.

Here are the key benefits of K8s portability:

- Consistent ML model deployments across different environments

- Easier migration and updates of AI workloads

- Flexibility to choose cloud providers or on-premises infrastructure

5. Fault Tolerance

Infrastructure failures and downtime when running AI inference can lead to significant accuracy degradation, unpredictable model behavior or simply a service outage. This is unacceptable for many AI-enabled applications, including safety-critical applications such as robotics, autonomous driving and medical analytics. Kubernetes’ self-healing and fault tolerance features help prevent these problems.

- Pod-level and node-level fault tolerance: If a pod goes down or doesn’t respond, Kubernetes automatically detects the issue and restarts the pod. This ensures that the application remains available and responsive. If a node running a pod fails, Kubernetes automatically reschedules the pod to a healthy node.

- Rolling updates: Kubernetes supports rolling updates, so you can update your container images with minimal downtime. This lets you quickly deploy bug fixes or model updates without disrupting the running inference service.

- Readiness and liveliness probes: These probes are health checks that detect when a container is not ready to receive traffic or has become unhealthy, triggering a restart or replacement if necessary.

- Cluster self-healing: K8s can automatically repair control plane and worker node problems, like replacing failed nodes or restarting unhealthy components. This helps maintain the overall health and availability of the cluster running your AI inference.

Here are the key benefits of K8s fault tolerance:

- Increased resiliency of AI-enabled applications by keeping them highly available and responsive

- Minimal downtime and disruption when things go wrong

- Increased user satisfaction by making applications and models highly available and more resilient to unexpected infrastructure failures

Conclusion

As organizations continue to integrate AI into their applications, use large ML models and face dynamic loads, adopting Kubernetes as a foundational technology is critical. As a managed Kubernetes provider, we’ve seen an increasing demand for scalable, fault-tolerant and cost-effective infrastructure that can handle AI inference at scale. Kubernetes is a tool that natively delivers all of these capabilities.

Want to learn more about accelerating AI with Kubernetes? Explore this Gcore ebook.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.