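Baoyue Reading Guide Summary

1. Keywords: Meta Llama 3.1, Workers AI, Cloudflare, open-source models, function calling

2. Summary:

As one of Meta's launch partners, Cloudflare made the Llama 3.1 8B model available on Workers AI on day one. The model is free to use until it graduates out of beta, and it adds higher precision, multilingual support, and function calling, helping developers build applications more efficiently.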

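3. Main points:

– The Meta Llama 3.1 8B model is now available on Workers AI

– Cloudflare supports the open-source community; its strategy is to provide a developer experience and toolkit that help people build applications with open-source models

– As a Meta launch partner, Cloudflare made Llama 3.1 8B available to all Workers AI users on day one; you can run it by swapping the model ID or try it in the Playground

– Features of the Llama 3.1 8B model

– Strong performance across many areas

– Higher precision and support for 8 languages; with multilingual support built in, prompts and responses can be written directly in those languages

– Native function calling that generates structured JSON output without a fine-tuned variant; Workers AI also offers embedded function calling

– Usage notes

– Follow Meta's Acceptable Use Policy and License

– See the developer documentation to get started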

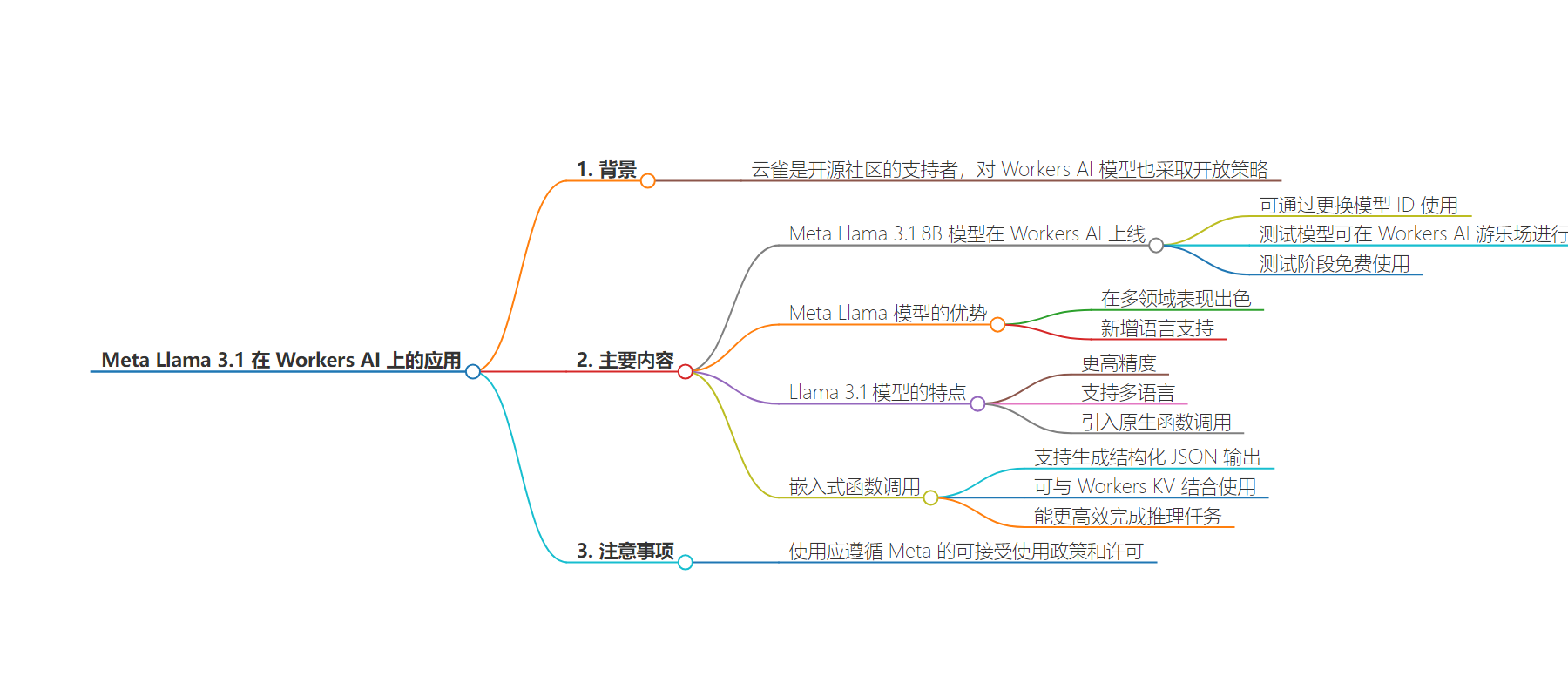

Mind map:

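Article URL: https://blog.cloudflare.com/meta-llama-3-1-available-on-workers-ai

Source: blog.cloudflare.com

Author: Michelle Chen

Published: 2024/7/23 16:15

Language: English

Word count: 563

Estimated reading time: 3 minutes

Score: 92

Tags: AI models, Cloudflare Workers AI, Meta Llama 3.1, open source, function calling

Original article follows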


At Cloudflare, we’re big supporters of the open-source community – and that extends to our approach for Workers AI models as well. Our strategy for our Cloudflare AI products is to provide a top-notch developer experience and toolkit that can help people build applications with open-source models.

We’re excited to be one of Meta’s launch partners to make their newest Llama 3.1 8B model available to all Workers AI users on Day 1. You can run their latest model by simply swapping out your model ID to @cf/meta/llama-3.1-8b-instruct or test out the model on our Workers AI Playground. Llama 3.1 8B is free to use on Workers AI until the model graduates out of beta.
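As a rough illustration of what "swapping out your model ID" can look like, the sketch below keeps the model ID in one constant so that upgrading is a one-line change. The `askLlama` helper and the `fakeAi` stand-in are hypothetical names introduced here, and the sketch assumes that a non-streaming text generation call resolves to an object with a `response` string; in a real Worker you would pass `env.AI` instead of the stand-in.

```javascript
// The only line that changes when switching models (ID taken from the post).
const MODEL_ID = "@cf/meta/llama-3.1-8b-instruct";

// Hypothetical helper: `ai` is assumed to behave like the Workers AI binding (env.AI).
async function askLlama(ai, prompt) {
  const result = await ai.run(MODEL_ID, {
    messages: [{ role: "user", content: prompt }],
  });
  // Assumption: a non-streaming call resolves to { response: string }.
  return result.response;
}

// Stand-in binding so the sketch runs outside a Worker; it simply echoes its input.
const fakeAi = {
  run: async (model, { messages }) => ({
    response: `[${model}] ${messages[0].content}`,
  }),
};

askLlama(fakeAi, "Hello").then((text) => console.log(text));
```

Passing the binding in as a parameter, rather than reading `env.AI` directly, is just a convenience that makes the helper easy to exercise without deploying.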

Meta’s Llama collection of models has consistently shown high-quality performance in areas like general knowledge, steerability, math, tool use, and multilingual translation. Workers AI is excited to continue to distribute and serve the Llama collection of models on our serverless inference platform, powered by our globally distributed GPUs.

The Llama 3.1 model is particularly exciting, as it is released in a higher precision (bfloat16), incorporates function calling, and adds support across 8 languages. Having multilingual support built in means that you can use Llama 3.1 to write prompts and receive responses directly in languages like English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai. Expanding model understanding to more languages means that your applications can reach more of the world, and it’s all possible with just one model.

```javascript
// stream: true returns the response incrementally as a stream
const answer = await env.AI.run('@cf/meta/llama-3.1-8b-instruct', {
  stream: true,
  messages: [{
    "role": "user",
    "content": "Qu'est-ce que c'est le verlan en français?"
  }],
});
```

Llama 3.1 also introduces native function calling (also known as tool calls), which allows LLMs to generate structured JSON outputs that can then be fed into different APIs. This means that function calling is supported out of the box, without the need for a fine-tuned variant of Llama that specializes in tool use. Having this capability built in means that you can use one model across various tasks.
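To make "structured JSON outputs that can be fed into different APIs" a little more concrete, here is a minimal sketch of routing one tool call to a local handler. The tool-call shape (`{ name, arguments }`), the `dispatchToolCall` helper, and the `getWeather` handler are all assumptions made for illustration; they are not taken from the Workers AI API, and a real handler would call an actual service.

```javascript
// Hypothetical helper: routes one model-generated tool call to a local handler.
// Assumes the call looks like { name: string, arguments: object | JSON string }.
function dispatchToolCall(toolCall, handlers) {
  const known = Object.prototype.hasOwnProperty.call(handlers, toolCall.name);
  if (!known || typeof handlers[toolCall.name] !== "function") {
    throw new Error(`No handler registered for tool "${toolCall.name}"`);
  }
  // Arguments may arrive as a JSON string or as an object; accept both forms.
  const args =
    typeof toolCall.arguments === "string"
      ? JSON.parse(toolCall.arguments)
      : toolCall.arguments ?? {};
  return handlers[toolCall.name](args);
}

// Hypothetical handler registry with placeholder logic.
const handlers = {
  getWeather: ({ city }) => ({ city, forecast: "unknown" }),
};

// Illustrative tool call, shaped the way a model might emit it.
const exampleCall = { name: "getWeather", arguments: '{"city":"Paris"}' };
const result = dispatchToolCall(exampleCall, handlers);
console.log(JSON.stringify(result));
```

Because the model only produces the name and arguments, the application stays in control of which functions exist and what they are allowed to do; unknown tool names are rejected rather than guessed at.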

Workers AI recently announced embedded function calling, which is now usable with Meta Llama 3.1 as well. Our embedded function calling gives developers a way to run their inference tasks far more efficiently than traditional architectures, leveraging Cloudflare Workers to reduce the number of requests that need to be made manually. It also makes use of our open-source ai-utils package, which helps you orchestrate the back-and-forth requests for function calling and provides other helper methods that can automatically generate tool schemas. Below is an example function call to Llama 3.1 with embedded function calling that then stores key-value pairs in Workers KV.

```javascript
import { runWithTools } from "@cloudflare/ai-utils";

// Runs inside a Worker handler, where `env` exposes the AI and KV bindings.
const response = await runWithTools(env.AI, "@cf/meta/llama-3.1-8b-instruct", {
  messages: [{ role: "user", content: "Greet the user and ask them a question" }],
  tools: [{
    name: "Store in memory",
    description: "Store everything that the user talks about in memory as a key-value pair.",
    parameters: {
      type: "object",
      properties: {
        key: {
          type: "string",
          description: "The key to store the value under.",
        },
        value: {
          type: "string",
          description: "The value to store.",
        },
      },
      required: ["key", "value"],
    },
    // Called when the model decides to use this tool.
    function: async ({ key, value }) => {
      await env.KV.put(key, value);
      return JSON.stringify({
        success: true,
      });
    }
  }]
})
```

We’re excited to see what you build with these new capabilities. As always, use of the new model should be conducted with Meta’s Acceptable Use Policy and License in mind. Take a look at our developer documentation to get started!