Baoyue Reading Guide Summary

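1. Keywords: MLOps, GitHub Actions, Arm64 runners, machine learning, automation

2. Summary: This article explores how to use GitHub Actions and Arm64 runners to optimize MLOps pipelines. It highlights their advantages in improving efficiency, lowering costs, and boosting performance, and covers why MLOps matters, the relevant optimization configurations, and the real-world impact.

3. Main content: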

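– Introduces MLOps and its importance in machine learning
  – MLOps connects model development and deployment, and needs automation to reduce errors
  – ML projects have many stages; managing them manually is time-consuming and error-prone
– Explains CI and deployment in MLOps
  – CI/CD pipelines are the core, automatically integrating new data and code changes
  – A well-defined pipeline can automatically retrain and redeploy models
– Describes the performance gains of Arm64 runners
  – Built on Arm architecture, with cost and energy-efficiency advantages
  – Optimize performance, cost, and scalability for ML tasks
  – Arm's PyTorch optimizations reach performance comparable to traditional systems
– Introduces automating MLOps workflows with Actions
  – Custom workflows can be created directly in a GitHub repository
  – Can automate data processing, model training, and other tasks
  – Offers integration, parallelism, and customization benefits
– Describes building an efficient MLOps pipeline
  – Covers stages such as project setup, data handling, and model training
  – Considers advanced configurations for optimizing workflows
  – Gives tips for improving efficiency
– Mentions real-world impact and case studies
  – Reports show reduced training times, cost savings, and more
– Emphasizes the importance of continuous improvement and iteration
  – Includes monitoring, feedback, updates, and collaboration
– Summarizes the benefits of the integration and encourages readers to explore it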

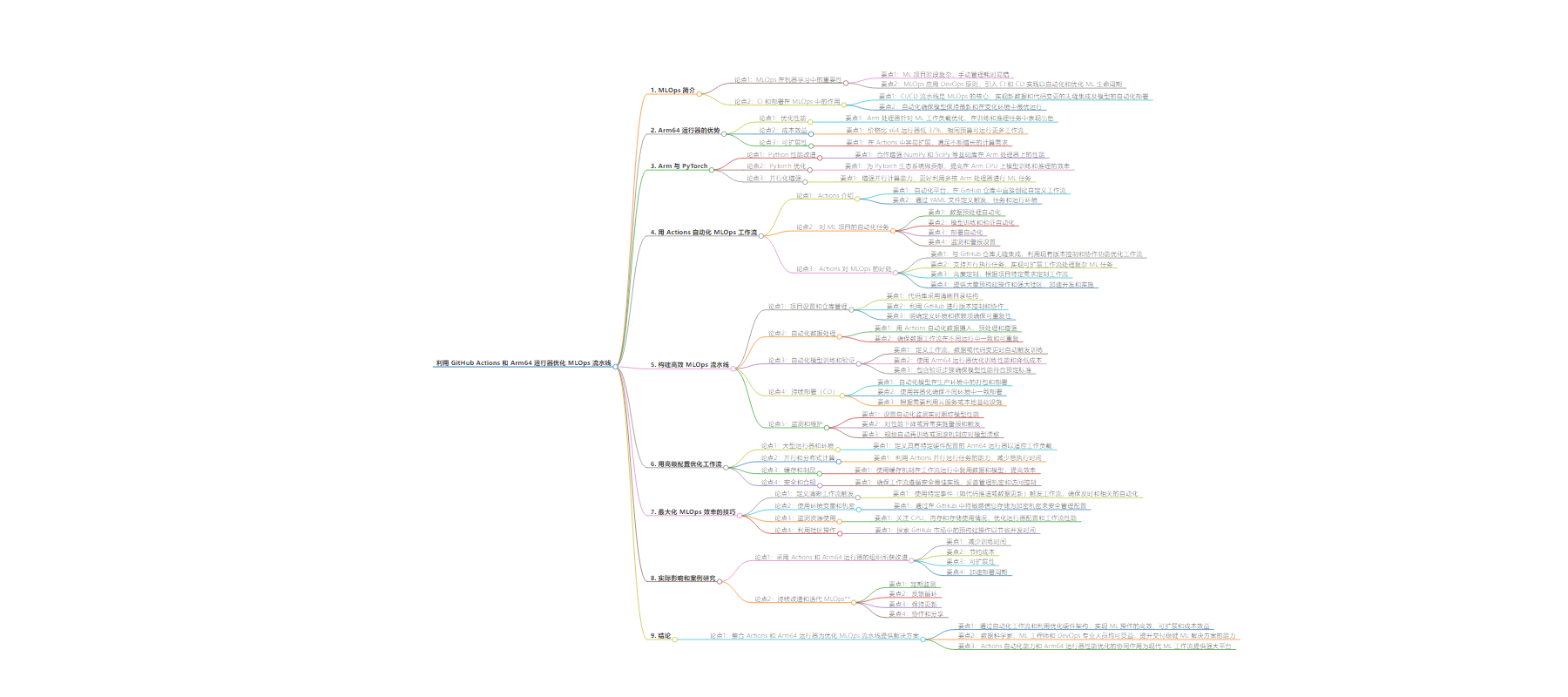

Mind map:

Source: github.blog

Author: Scott Arbeit

Published: 2024/9/11 16:00

Language: English

Word count: 1,237

Estimated reading time: 5 minutes

Rating: 87

Tags: MLOps, GitHub Actions, Arm64, machine learning, CI/CD

The original article follows:


In today’s rapidly evolving field of machine learning (ML), the efficiency and reliability of deploying models into production environments have become as crucial as the models themselves. Machine Learning Operations, or MLOps, bridges the gap between developing machine learning models and deploying them at scale, ensuring that models are not only built effectively but also maintained and monitored to deliver continuous value.

One of the key enablers of an efficient MLOps pipeline is automation, which minimizes manual intervention and reduces the likelihood of errors. GitHub Actions on Arm64 runners, now generally available, offers a powerful way to automate and streamline machine learning workflows when combined with PyTorch. In this post, we’ll explore how integrating Actions with Arm64 runners can enhance your MLOps pipeline, improve performance, and reduce costs.

The significance of MLOps in machine learning

ML projects often involve multiple complex stages, including data collection, preprocessing, model training, validation, deployment, and ongoing monitoring. Managing these stages manually can be time-consuming and error-prone. MLOps applies the principles of DevOps to machine learning, introducing practices like Continuous Integration (CI) and Continuous Deployment (CD) to automate and streamline the ML lifecycle.

Continuous integration and deployment in MLOps

CI/CD pipelines are at the heart of MLOps. They enable the seamless integration of new data and code changes and automate the deployment of models into production. With a robust CI/CD pipeline defined in Actions workflows, models can be retrained and redeployed automatically whenever new data becomes available or the codebase is updated. This automation keeps models up to date and helps them continue to perform well in changing environments.
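As a minimal sketch of that trigger logic, the helper below decides whether a push should start retraining based on the list of changed files. It assumes a repository layout with training data under `data/` and model code under `src/model/`; both paths, and the pattern list itself, are hypothetical. Actions can express a simple version of this filter declaratively with `on.push.paths` in the workflow file, so a script like this is only worth having when the decision needs logic a glob filter cannot express.

```python
from fnmatch import fnmatch

# Hypothetical path patterns; adjust them to your repository layout.
# Note: fnmatch's "*" also matches "/", so "data/*" covers nested files.
RETRAIN_PATTERNS = ("data/*", "src/model/*", "requirements*.txt")


def needs_retraining(changed_files, patterns=RETRAIN_PATTERNS):
    """Return True if any changed file matches a retraining pattern."""
    return any(
        fnmatch(path, pattern) for path in changed_files for pattern in patterns
    )


if __name__ == "__main__":
    changed = ["README.md", "data/train/2024-09.csv"]
    print(needs_retraining(changed))
```

A documentation-only push (for example, `README.md` alone) returns `False`, so the expensive training job can be skipped.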

Enhancing performance with Arm64 runners

Arm64 runners are GitHub-hosted runners that use Arm architecture, providing a cost-effective and energy-efficient environment for running workflows. They are particularly well suited to ML tasks for the following reasons:

- Optimized performance: Arm processors have been increasingly optimized for ML workloads, offering competitive performance in training and inference tasks.

- Cost efficiency: Arm64 runners are priced 37% lower than GitHub’s x64-based runners, allowing you to get more workflow runs within the same budget.

- Scalability: Arm64 runners scale easily within Actions, letting you handle growing computational demands.
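The 37% price difference translates directly into extra runs per budget. If each run costs 37% less, the same budget buys 1 / (1 - 0.37), or roughly 1.59 times, as many runs of equal length. The sketch below works through that arithmetic. The per-minute price, budget, and run length are hypothetical placeholders, not GitHub's published rates. It also assumes a workflow takes the same wall-clock time on both architectures, which will not hold for every workload.

```python
def runs_within_budget(budget, price_per_minute, minutes_per_run):
    """Number of whole workflow runs a budget covers at a given per-minute price."""
    cost_per_run = price_per_minute * minutes_per_run
    return int(budget // cost_per_run)


# Hypothetical inputs for illustration only; substitute your actual rates.
X64_PRICE = 0.016        # dollars per minute (placeholder, not a real rate)
ARM64_DISCOUNT = 0.37    # Arm64 priced 37% lower, per the article
ARM64_PRICE = X64_PRICE * (1 - ARM64_DISCOUNT)

BUDGET = 500.0           # dollars per month (placeholder)
MINUTES_PER_RUN = 30     # assumes equal run time on both architectures

x64_runs = runs_within_budget(BUDGET, X64_PRICE, MINUTES_PER_RUN)
arm_runs = runs_within_budget(BUDGET, ARM64_PRICE, MINUTES_PER_RUN)
print(f"x64: {x64_runs} runs, Arm64: {arm_runs} runs, ratio {arm_runs / x64_runs:.2f}")
```

If Arm64 runs turn out slower for a given job, scale `MINUTES_PER_RUN` per architecture before comparing. The ratio only reflects price, not throughput.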

Arm ❤️ PyTorch

In recent years, Arm has invested significantly in optimizing machine learning libraries and frameworks for Arm architecture. For instance:

- Python performance improvements: collaborations to enhance the performance of foundational libraries like NumPy and SciPy on Arm processors.

- PyTorch optimization: contributions to the PyTorch ecosystem, improving the efficiency of model training and inference on Arm CPUs.

- Parallelization enhancements: improvements in parallel computing capabilities, enabling better utilization of multi-core Arm processors for ML tasks.

These optimizations mean that ML workflows on Arm64 runners can now achieve performance comparable to traditional x86 systems while offering better cost and energy efficiency.
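Because the same workflow may run on both x64 and Arm64 runners, it can help to log which architecture a job actually landed on before training starts. The helper below is a hypothetical sketch using only the standard library. `platform.machine()` typically reports `aarch64` on Arm64 Linux and `arm64` on macOS. `os.cpu_count()` gives a starting point for sizing a framework's thread pool, for example with PyTorch's `torch.set_num_threads`.

```python
import os
import platform

# Machine strings commonly reported for 64-bit Arm (Linux and macOS respectively).
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine=None):
    """Return True if the given (or current) machine string denotes 64-bit Arm."""
    machine = machine if machine is not None else platform.machine()
    return machine.lower() in ARM64_NAMES


def describe_runner():
    """Summarize the host so the workflow log records where training ran."""
    return {
        "machine": platform.machine(),
        "arm64": is_arm64(),
        "cpus": os.cpu_count() or 1,  # cpu_count() can return None
    }


if __name__ == "__main__":
    print(describe_runner())
```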

Automating MLOps workflows with Actions

Actions is an automation platform that allows you to create custom workflows directly in your GitHub repository. By defining workflows in YAML files, you can specify triggers, jobs, and the environment in which these jobs run. For ML projects, Actions can automate tasks such as:

- Data preprocessing: automate the steps required to clean and prepare data for training.

- Model training and validation: run training scripts automatically when new data is pushed or when changes are made to the model code.

- Deployment: automatically package and deploy models to production environments upon successful training and validation.

- Monitoring and alerts: set up workflows to monitor model performance and send alerts when certain thresholds are breached.
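For the monitoring and alerts task, one common pattern is a scheduled workflow step that runs a check script and exits with a non-zero status when a threshold is breached. A failing step fails the job, which GitHub then surfaces through its usual failure notifications. The sketch below assumes the model's recent accuracy scores are already available as a list; how they are collected is out of scope. The 0.90 threshold and five-evaluation window are hypothetical.

```python
import sys
from statistics import mean

ACCURACY_THRESHOLD = 0.90  # hypothetical minimum acceptable accuracy
WINDOW = 5                 # number of most recent evaluations to average


def breaches_threshold(scores, threshold=ACCURACY_THRESHOLD, window=WINDOW):
    """Return True if the mean of the last `window` scores falls below threshold."""
    if not scores:
        return False  # nothing to evaluate yet
    recent = scores[-window:]
    return mean(recent) < threshold


def main(scores):
    """Print a status line and return a process exit code (1 means alert)."""
    if breaches_threshold(scores):
        print(f"ALERT: rolling accuracy below {ACCURACY_THRESHOLD}", file=sys.stderr)
        return 1  # non-zero exit fails the workflow step
    print("Model performance within threshold")
    return 0
```

In a workflow step, the script would finish with `sys.exit(main(scores))` so the exit code reaches the runner. Averaging over a window rather than alerting on a single evaluation is a design choice that trades reaction speed for fewer false alarms.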

Actions offers several key benefits for MLOps. It integrates seamlessly with your GitHub repository, building on existing version control and collaboration features. It supports parallel job execution, enabling scalable workflows that can handle complex machine learning tasks. Its high degree of customization lets you tailor workflows to the specific needs of your project across every stage of the ML lifecycle. Finally, a large library of prebuilt actions and an active community help accelerate development and implementation.

Building an efficient MLOps pipeline

An efficient MLOps pipeline leveraging Actions and Arm64 runners involves several key stages:

- Project setup and repository management:
  - Organize your codebase with a clear directory structure.
  - Use GitHub for version control and collaboration.
  - Define environments and dependencies explicitly to ensure reproducibility.
- Automated data handling:
  - Use Actions to automate data ingestion, preprocessing, and augmentation.
  - Ensure that data workflows are consistent and reproducible across runs.
- Automated model training and validation:
  - Define workflows that automatically trigger model training on data or code changes.
  - Use Arm64 runners to optimize training performance and reduce costs.
  - Incorporate validation steps to ensure model performance meets predefined criteria.
- Continuous deployment (CD):
  - Automate the packaging and deployment of models into production environments.
  - Use containerization for consistent deployment across environments.
  - Leverage cloud services or on-premises infrastructure as needed.
- Monitoring and maintenance:
  - Set up automated monitoring to track model performance in real time.
  - Implement alerts and triggers for performance degradation or anomalies.
  - Plan for automated retraining or rollback mechanisms in response to model drift.
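The validation step in the training stage can act as a gate in front of deployment. The job proceeds only if the newly trained model meets a minimum bar and does not regress against the model currently in production. This is a minimal sketch under assumed conventions. The metric name, the 0.85 floor, and the 0.01 regression tolerance are hypothetical. The metrics are passed in as plain dictionaries rather than read from any particular tracking tool.

```python
MIN_ACCURACY = 0.85    # hypothetical absolute floor
MAX_REGRESSION = 0.01  # hypothetical tolerated drop versus production


def passes_gate(candidate, baseline=None, metric="accuracy"):
    """Decide whether a candidate model may be promoted.

    `candidate` and `baseline` are dicts of evaluation metrics; `baseline`
    is None when no model has been deployed yet. Returns (ok, reason).
    """
    score = candidate[metric]
    if score < MIN_ACCURACY:
        return False, f"{metric} {score:.3f} below floor {MIN_ACCURACY}"
    if baseline is not None and score < baseline[metric] - MAX_REGRESSION:
        return False, f"{metric} {score:.3f} regressed from {baseline[metric]:.3f}"
    return True, "candidate approved"


if __name__ == "__main__":
    ok, reason = passes_gate({"accuracy": 0.91}, {"accuracy": 0.90})
    print(ok, reason)
```

Checking both an absolute floor and a relative regression bound catches two different failures: a model that is simply bad, and one that is acceptable in isolation but worse than what is already serving traffic.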

Optimizing workflows with advanced configurations

To further enhance your MLOps pipeline, consider the following advanced configurations:

- Larger runners and environments: define Arm64 runners with specific hardware configurations suited to your workload.

- Parallel and distributed computing: utilize Actions’ ability to run jobs in parallel, reducing overall execution time.

- Caching and artifacts: use caching mechanisms to reuse data and models across workflow runs, improving efficiency.

- Security and compliance: ensure that workflows adhere to security best practices, managing secrets and access controls appropriately.
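Caching works best when the cache key changes exactly when its inputs change. The `actions/cache` action takes a `key` input that is usually built with the `hashFiles()` expression. The Python sketch below shows the same idea for a hypothetical preprocessing cache. It hashes the contents of the files that determine the cached output, so an unchanged dataset and dependency list reuse the previous result. The key prefix and file names are placeholders.

```python
import hashlib
import tempfile
from pathlib import Path


def cache_key(paths, prefix="preprocessed-data"):
    """Build a deterministic cache key from the contents of the given files.

    Files are processed in sorted order so the key does not depend on the
    order in which paths are listed. Missing files raise FileNotFoundError.
    """
    digest = hashlib.sha256()
    for path in sorted(Path(p) for p in paths):
        file_hash = hashlib.sha256(path.read_bytes()).hexdigest()
        # One line per file: name plus content hash, so renames also change the key.
        digest.update(f"{path}:{file_hash}\n".encode())
    return f"{prefix}-{digest.hexdigest()[:16]}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp, "train.csv")
        reqs = Path(tmp, "requirements.txt")
        data.write_text("x,y\n1,2\n")
        reqs.write_text("torch\n")
        first = cache_key([data, reqs])
        data.write_text("x,y\n1,3\n")  # changing the data changes the key
        print(first != cache_key([data, reqs]))
```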

Real-world impact and case studies

Organizations adopting Actions with Arm64 runners have reported significant improvements:

- Reduced training times: leveraging Arm optimizations in ML frameworks leads to faster model training cycles.

- Cost savings: lower power consumption and efficient resource utilization result in reduced operational costs.

- Scalability: ability to handle larger datasets and more complex models without proportional increases in cost or complexity.

- Continuous deployment (CD): faster deployment cycles, enabling quicker iteration and time-to-market for ML solutions.

Embracing continuous improvement

MLOps is not a one-time setup but an ongoing practice of continuous improvement and iteration. To maintain and enhance your pipeline:

- Regular monitoring: continuously monitor model performance and system metrics to proactively address issues.

- Feedback loops: incorporate feedback from production environments to refine models and workflows.

- Stay updated: keep abreast of advancements in tools like Actions and developments in Arm architecture optimizations.

- Collaborate and share: engage with the community to share insights and learn from others’ experiences.

Conclusion

Integrating Actions with Arm64 runners presents a compelling solution for organizations looking to streamline their MLOps pipelines. By automating workflows and leveraging optimized hardware architectures, you can achieve greater efficiency, scalability, and cost-effectiveness in your ML operations.

Whether you’re a data scientist, ML engineer, or DevOps professional, embracing these tools can significantly enhance your ability to deliver robust ML solutions. The synergy between Actions’ automation capabilities and Arm64 runners’ performance optimizations offers a powerful platform for modern ML workflows.

Ready to transform your MLOps pipeline? Start exploring Actions and Arm64 runners today, and unlock new levels of efficiency and performance in your ML projects.


Written by Scott Arbeit, Senior Technical Architect, GitHub