Baoyue Reading Guide Summary

1. Keywords: Intel MPI Library, HPC performance, Google Cloud, VM families, communication optimization

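2. Summary: This article describes how the Intel MPI Library boosts high performance computing (HPC) performance on Google Cloud. Google Cloud's VM families are well suited to HPC workloads, and the Intel MPI Library has been optimized for third-generation VMs and Titanium. Tests show that it can significantly improve HPC application performance.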

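3. Main points:

– High performance computing (HPC) plays a major role across industries, for example by accelerating product design through simulation.

– HPC relies on large numbers of computing elements working together and communicating via the Message Passing Interface (MPI).

– Google Cloud offers VM families suited to HPC workloads, such as H3.

– These VMs feature advanced technologies and functions and are optimized by Intel software tools.

– The Intel Infrastructure Processing Unit (IPU) E2000 offloads networking from the CPU.

– The Intel MPI Library implements the MPI API standard and is based on the open-source MPICH project.

– Version 2021.11 is tuned for specific libfabric providers, takes advantage of processor capabilities, and supports newer systems and applications.

– Siemens Simcenter STAR-CCM+ is used as a case study to test the performance of the new Intel MPI Library.

– Most test scenarios achieve good speedups; only the smallest benchmark stops scaling, because of its small problem size. Some tests even show superlinear scaling.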

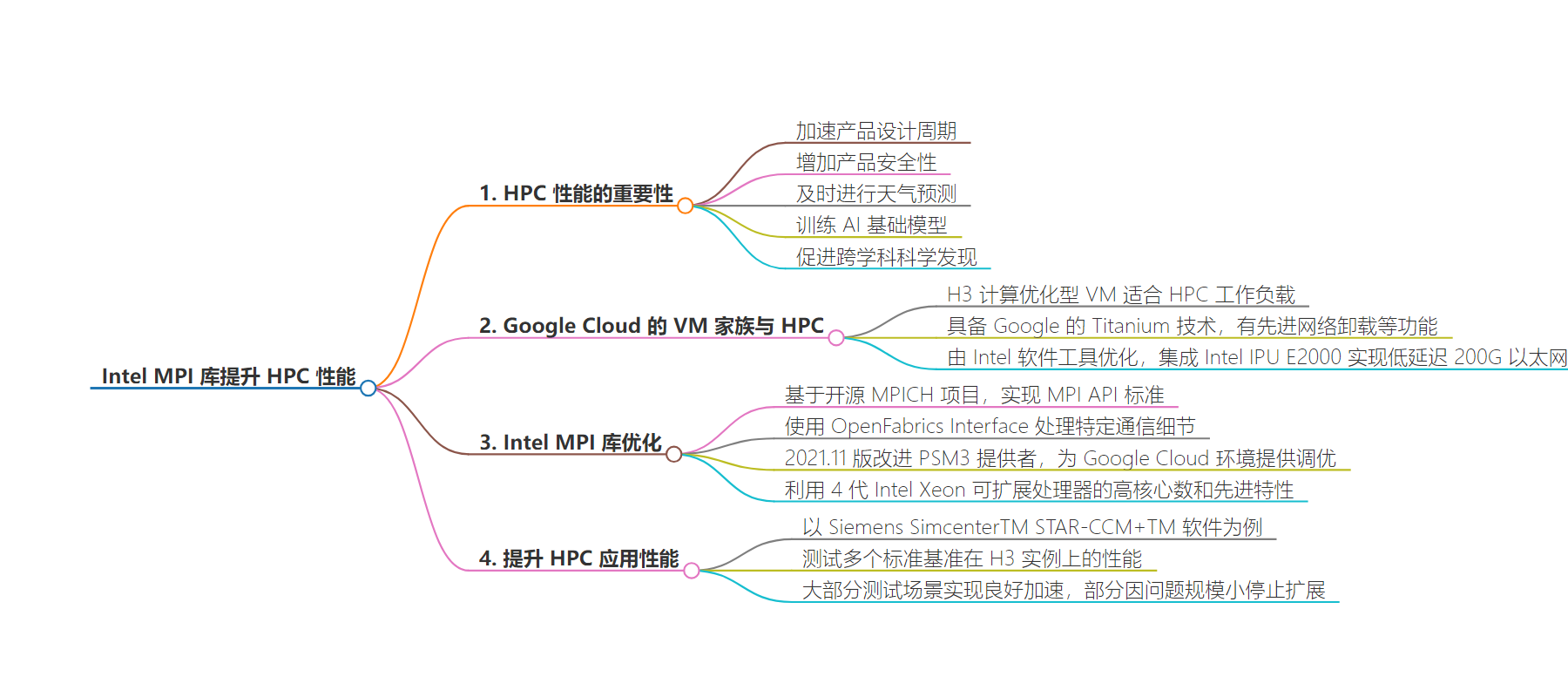


Article URL: https://cloud.google.com/blog/topics/hpc/how-the-intel-mpi-library-boosts-hpc-performance/

Source: cloud.google.com

Authors: Mansoor Alicherry, Todd Rimmer

Published: 2024/8/13 0:00

Language: English

Word count: 917

Estimated reading time: 4 minutes

Score: 86

Tags: High performance computing, Intel MPI Library, Google Cloud, Simcenter STAR-CCM+, performance optimization

The original article follows:


High performance computing (HPC) is central to fueling innovation across industries. Through simulation, HPC accelerates product design cycles, increases product safety, delivers timely weather predictions, enables training of AI foundation models, and unlocks scientific discoveries across disciplines, to name but a few examples. HPC tackles these computationally demanding problems by employing large numbers of computing elements (servers or virtual machines) that work in tight orchestration with one another and communicate via the Message Passing Interface (MPI). In this blog, we show how we boosted HPC performance on Google Cloud using the Intel® MPI Library.

Google Cloud offers a wide range of VM families that cater to demanding workloads, including H3 compute-optimized VMs, which are ideal for HPC workloads. These VMs feature Google’s Titanium technology for advanced network offloads and other functions, and are optimized by Intel software tools to bring together the latest innovations in computing, networking, and storage into one platform. In third-generation VMs such as H3, C3, C3D, or A3, the Intel Infrastructure Processing Unit (IPU) E2000 offloads networking from the CPU onto a dedicated device, securely enabling low-latency 200 Gbps Ethernet. Further, integrated support for Titanium in the Intel MPI Library brings the benefits of network offload to HPC workloads such as molecular dynamics, computational geoscience, weather forecasting, front-end and back-end Electronic Design Automation (EDA), Computer Aided Engineering (CAE), and Computational Fluid Dynamics (CFD). The latest version of the Intel MPI Library is included in the Google Cloud HPC VM Image.

MPI Library optimized for 3rd gen VMs and Titanium

Intel MPI Library is a multi-fabric message-passing library that implements the MPI API standard. It’s a commercial-grade MPI implementation based on the open-source MPICH project, and it uses the OpenFabrics Interface (OFI, aka libfabric) to handle fabric-specific communication details. Various libfabric providers are available, each optimized for a different set of fabrics and protocols.

Version 2021.11 of the Intel MPI Library specifically improves the PSM3 provider and provides tunings for the PSM3 and OFI/TCP providers for the Google Cloud environment, including the Intel IPU E2000. The Intel MPI Library 2021.11 also takes advantage of the high core counts and advanced features available on 4th Generation Intel Xeon Scalable Processors and supports newer Linux OS distributions and newer versions of applications and libraries. Taken together, these improvements unlock additional performance and application features on 3rd generation VMs with Titanium.
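Selecting a provider is mostly a matter of launch configuration. The Python sketch below shows how a job might be pointed at one of the two providers named above. It assembles the environment and `mpirun` command line for an Intel MPI launch but does not run anything. `I_MPI_FABRICS`, `I_MPI_OFI_PROVIDER`, and the `-n`, `-ppn`, and `-f` launcher options are standard Intel MPI settings (check the Developer Reference for your version). The helper `build_launch`, the hostfile name, and the rank counts are hypothetical. The sketch does not reproduce the Google Cloud-specific tunings that ship in 2021.11.

```python
from typing import Dict, List, Tuple

# libfabric provider names for the two providers tuned in Intel MPI 2021.11.
SUPPORTED_PROVIDERS = ("psm3", "tcp")


def build_launch(
    app: List[str],
    total_ranks: int,
    ranks_per_node: int,
    hostfile: str,
    provider: str = "psm3",
) -> Tuple[Dict[str, str], List[str]]:
    """Return (environment overrides, argv) for an Intel MPI job.

    Hypothetical helper: it only assembles the launch and never executes it.
    """
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"unsupported provider: {provider!r}")
    if total_ranks <= 0 or ranks_per_node <= 0:
        raise ValueError("rank counts must be positive")
    if total_ranks % ranks_per_node:
        raise ValueError("total_ranks must be a multiple of ranks_per_node")

    env = {
        # Shared memory between ranks on the same VM, OFI (libfabric) between VMs.
        "I_MPI_FABRICS": "shm:ofi",
        # Ask Intel MPI to load this specific libfabric provider.
        "I_MPI_OFI_PROVIDER": provider,
    }
    argv = [
        "mpirun",
        "-n", str(total_ranks),       # total number of MPI ranks
        "-ppn", str(ranks_per_node),  # ranks placed on each VM
        "-f", hostfile,               # file listing the VMs' hostnames
        *app,
    ]
    return env, argv
```

As a usage example, `build_launch(["./app"], 176, 88, "hosts.txt")` describes a two-VM run with one rank per core. This assumes 88 cores per H3 VM, which follows from the article's 2,816 cores across 32 VMs. A caller could then pass `argv` to `subprocess.run` with `env={**os.environ, **env}`. Switching to `provider="tcp"` changes only the provider variable.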

Boosting HPC application performance

Applications like Siemens Simcenter™ STAR-CCM+™ software shorten time-to-solution through parallel computing. For example, if doubling the computational resources solves the same problem in half the time, the parallel scaling is 100% efficient, and the speedup is 2x compared to the run with half the resources. In practice, a speedup of 2x per doubling may not be achieved for a variety of reasons, such as the application not exposing enough parallelism, or overhead from inter-node communication. An improved communication library directly reduces the latter.
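The scaling arithmetic in this paragraph can be written down directly. The sketch below defines speedup as baseline time divided by scaled time. It defines parallel efficiency as achieved speedup divided by the ideal ratio of resources. It also adds a toy runtime model that is an assumption for illustration only: Amdahl's law covers limited parallelism, and a communication term grows with log2 of the VM count. The function names, the model, and its parameters are hypothetical. They do not describe how STAR-CCM+ or Intel MPI actually behave.

```python
import math


def speedup(t_base: float, t_scaled: float) -> float:
    """How many times faster the scaled run is than the baseline run."""
    return t_base / t_scaled


def parallel_efficiency(t_base: float, n_base: int, t_scaled: float, n_scaled: int) -> float:
    """Achieved speedup divided by the ideal speedup n_scaled / n_base (1.0 = perfect)."""
    return speedup(t_base, t_scaled) / (n_scaled / n_base)


def modeled_time(t1: float, n: int, serial_fraction: float, comm_cost_per_level: float) -> float:
    """Toy model (assumption): runtime of a fixed-size problem on n VMs.

    serial_fraction: share of the single-VM work that cannot be parallelized
        (the "not exposing enough parallelism" case, i.e. Amdahl's law).
    comm_cost_per_level: time added per doubling of VMs, a stand-in for
        inter-node communication overhead such as a tree-shaped reduction.
    """
    compute = t1 * (serial_fraction + (1.0 - serial_fraction) / n)
    comm = comm_cost_per_level * math.log2(n)  # log2(1) == 0: no comm on one VM
    return compute + comm
```

With `serial_fraction=0` and `comm_cost_per_level=0`, going from one VM to two halves the modeled runtime, so efficiency is exactly 1.0. Any positive communication cost pulls efficiency below 1.0. Lowering that cost, which is what a faster communication library does, moves efficiency back toward 1.0.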

To demonstrate the performance improvements of the new Intel MPI Library, Google and Intel tested Simcenter STAR-CCM+ with several standard benchmarks on H3 instances. The figure shows five standard benchmarks scaled up to 32 VMs (2,816 cores). Good speedups are achieved throughout the tested scenarios. Only the smallest benchmark (LeMans_Poly_17M) stops scaling beyond 16 VMs. Its small problem size leaves too little work per core, and faster communication cannot remove that limit. In some benchmarks (LeMans_100M_Coupled and AeroSuvSteadyCoupled106M), superlinear scaling can even be observed at some VM counts. This is likely because the aggregate cache grows with the number of VMs, so a larger share of each VM's portion of the problem fits in cache.
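The sketch below makes "stops scaling" and "superlinear" concrete. It takes runtimes measured at several VM counts and reports, for each count:

- the core count
- the speedup and parallel efficiency relative to the smallest run
- whether efficiency exceeds 1.0 (superlinear)
- whether runtime still dropped compared with the previous VM count

The figure of 88 cores per VM is derived from the text (2,816 cores across 32 VMs). The function and field names are hypothetical, and no benchmark results are reproduced here.

```python
from dataclasses import dataclass
from typing import Dict, List

# 88 cores per H3 VM, implied by "32 VMs (2,816 cores)".
CORES_PER_VM = 2816 // 32


@dataclass
class ScalingPoint:
    vms: int
    cores: int
    speedup: float        # relative to the smallest VM count measured
    efficiency: float     # speedup / ideal speedup; > 1.0 means superlinear
    superlinear: bool
    still_scaling: bool   # runtime dropped versus the previous VM count


def analyze(runtimes: Dict[int, float]) -> List[ScalingPoint]:
    """Summarize a strong-scaling series given {vm_count: runtime_seconds}."""
    if not runtimes:
        raise ValueError("need at least one measurement")
    counts = sorted(runtimes)
    base_vms, base_t = counts[0], runtimes[counts[0]]
    points: List[ScalingPoint] = []
    prev_t = None
    for n in counts:
        t = runtimes[n]
        s = base_t / t
        eff = s / (n / base_vms)
        points.append(ScalingPoint(
            vms=n,
            cores=n * CORES_PER_VM,
            speedup=s,
            efficiency=eff,
            superlinear=eff > 1.0,
            still_scaling=prev_t is None or t < prev_t,
        ))
        prev_t = t
    return points
```

In this framing, a small benchmark like LeMans_Poly_17M would show `still_scaling=False` past 16 VMs. The cache effect described above would show up as `superlinear=True` at some VM counts.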