包阅导读总结

1.

关键词:GKE、Cluster Autoscaler、基础设施、自动缩放、性能提升

2.

总结:本文介绍了 GKE 中 Cluster Autoscaler 的新改进,包括目标副本计数跟踪、快速同质扩展、减少 CPU 浪费等,通过基准测试展示了显著的性能提升,且无需复杂配置,体现了 GKE 致力于优化用户体验。

3.

主要内容:

– GKE 一直默默创新优化基础设施

– 虽改进不常占据头条,但带来性能提升和用户体验改善

– Cluster Autoscaler 的新特性

– 目标副本计数跟踪,加速扩展,消除 GPU 自动缩放延迟,该功能将开源

– 快速同质扩展,提升相同 Pod 扩展速度

– 减少 CPU 浪费,更快决策,避免不必要延迟

– 内存优化,提高整体效率

– 基准测试结果

– 对比 GKE 1.27 和 1.29 版本

– 包括基础设施和工作负载层面的多种场景测试

– 如 Autopilot 通用 5k 副本部署、繁忙批处理集群、10 副本 GPU 测试、应用端用户延迟测试等

– 结果显示 GKE 1.29 版本在各方面均有显著提升

– 结论

– GKE 致力于优化用户体验

– 鼓励用户保持版本更新,关注 GKE 发展

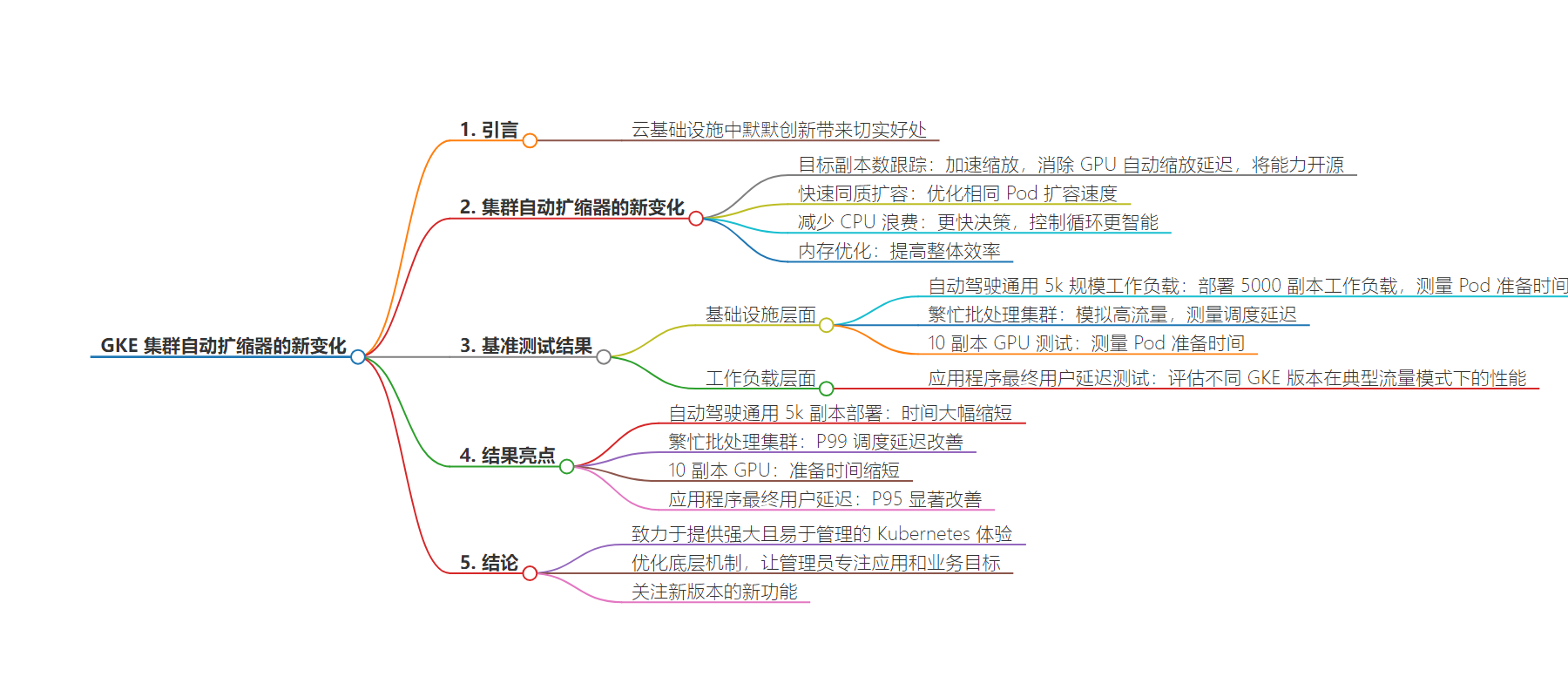

思维导图:

文章地址:https://cloud.google.com/blog/products/containers-kubernetes/whats-new-with-gke-cluster-autoscaler/

文章来源:cloud.google.com

作者:Daniel K??obuszewski,Roman Arcea

发布时间:2024/6/24 0:00

语言:英文

总字数:697字

预计阅读时间:3分钟

评分:88分

标签:容器与 Kubernetes,GKE,开发人员与实践者,集群自动扩展器,自动扩展

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

In the world of cloud infrastructure, sometimes the most impactful features are the ones you never have to think about. When it comes to Google Kubernetes Engine (GKE), we have a long history of quietly innovating behind the scenes, optimizing the invisible gears that keep your clusters running smoothly. These enhancements might not always grab the headlines, but they deliver tangible benefits in the form of improved performance, reduced latency, and a simpler user experience.

Today, we’re shining a spotlight on some of these “invisible” GKE advancements, particularly in the realm of infrastructure autoscaling. Let’s dive into how recent changes in the Cluster Autoscaler (CA) can significantly enhance your workload performance without requiring any additional configuration on your part.

What’s new with Cluster Autoscaler

The GKE team has been hard at work refining the Cluster Autoscaler, the component responsible for automatically adjusting the size of your node pools based on demand. Here’s a breakdown of some key improvements:

-

Target replica count tracking: This feature accelerates scaling when you add several Pods simultaneously (think new deployments or large resizes). It also eliminates a previous 30-second delay that affected GPU autoscaling. This capability is headed to open-source so that the entire community can benefit from improved Kubernetes performance.

-

Fast homogeneous scale-up: If you have numerous identical pods, this optimization speeds up the scaling process by efficiently bin-packing Pods onto nodes.

-

Less CPU waste: The CA now makes decisions faster, which is especially noticeable when you need multiple scale-ups across different node pools. Additionally, CA is smarter about when to run its control loop, avoiding unnecessary delays.

-

Memory optimization: Although not directly visible to the user, the CA has also undergone memory optimizations that contribute to its overall efficiency.

Benchmarking results

To demonstrate the real-world impact of these changes, we conducted a series of benchmarks across two GKE versions (1.27 and 1.29) and scenarios:

Infrastructure-level:

-

Autopilot generic 5k scaled workload: We deployed a 5,000-replica workload on Autopilot and measured the time it took for all pods to become ready.

-

Busy batch cluster: We simulated a high-traffic batch cluster by creating 100 node pools and deploying multiple 20-replica jobs at regular intervals. We then measured the scheduling latency.

-

10-replica GPU test: A 10-replica GPU deployment was used to measure the time for all pods to become ready.

Workload-level:

-

Application end-user latency test: We employed a generic web application that responds predictably to an API call with defined response and latency when not under load. Using a standard load testing framework (Locust), we assessed the performance of various GKE versions under a typical traffic pattern that prompts GKE to scale with both HPA and NAP. We measured the P50 and P95 end-user latency with the application scaled on CPU with an HPA CPU target of 50%.

Results highlights (Illustrative)

|

Scenario |

Metric |

GKE v1.27 (baseline) |

GKE v1.29 |

|---|---|---|---|

|

Autopilot generic 5k replica deployment |

Time-to-ready |

7m 30s |

3m 30s (55% improvement) |

|

Busy batch cluster |

P99 scheduling latency |

9m 38s |

7m 31s (20% improvement) |

|

10-replica GPU |

Time-to-ready |

2m 40s |

2m 09s (20% improvement) |

|

Application end-user latency |

Application response latency as measured by the end user. P50 and P95 in seconds. |

P50: 0.43s P95: 3.4s |

P50: 0.4s P95: 2.7s (P95: 20% improvement) |

(Note: These results are illustrative and will vary based on your specific workload and configuration).

Significant improvements, such as reducing the deployment time of 5k Pods by half or enhancing application response latency at the 95th percentile by 20%, typically necessitate intensive optimization efforts or overprovisioned infrastructure. The new changes to Cluster Autoscaler stand out by delivering these gains without requiring complex configurations, idle resources, or overprovisioning.

Conclusion

At Google Cloud, we’re committed to making your Kubernetes experience not just powerful, but also effortless to manage and use. By optimizing underlying mechanisms such as Cluster Autoscaler, we help GKE administrators focus on their applications and business goals, with the confidence that their clusters nare scaling efficiently and reliably.

Each new GKE version brings a number of new capabilities, either visible or invisible, so make sure to stay up to date with the latest releases. And stay tuned for more insights into how we’re continuing to evolve GKE to meet the demands of modern cloud-native applications!