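Baoyue Reading Guide Summary

1. Keywords:

- Data Intelligence

- Azure Databricks

- Microsoft Fabric

- Architecture

- Analytics

2. Summary:

This article introduces an end-to-end data intelligence architecture that combines Azure Databricks and Microsoft Fabric. It covers data ingestion, processing, enrichment, and serving, describes the related platforms and components, and outlines potential uses in modernizing data architectures, real-time analytics, and AI applications.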

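3. Main content:

- Architecture overview

  - Data ingestion

    - Ingest streaming data from Azure Event Hubs into Delta Lake tables

    - Ingest unstructured and semi-structured data from Data Lake Storage Gen2

    - Ingest data from relational databases

  - Data processing

    - Process batch and streaming data using Delta Live Tables and the Photon Engine

    - Layer data following the medallion architecture

  - Data storage

    - Store data in a specific format in Azure Data Lake Gen2

  - Data enrichment

    - Perform data analysis, model training, and model management

    - Deploy and monitor AI models

    - Serve ad-hoc analytics and BI

- Related platforms

  - Databricks platform

    - Unified workflow orchestration, compute layer, and data governance

  - Azure platform

    - Identity management, cost management, monitoring, and more

- Components

- Scenario details

  - Shows how to combine Azure Databricks and Power BI to democratize data and AI

  - Meets enterprise needs with a unified Lakehouse foundation governed by Unity Catalog

- Potential use cases

  - Modernize legacy data architectures

  - Support real-time analytics use cases

  - Build production-grade Gen AI applications

  - Empower business leaders to gain insights from data

  - Securely share or monetize data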

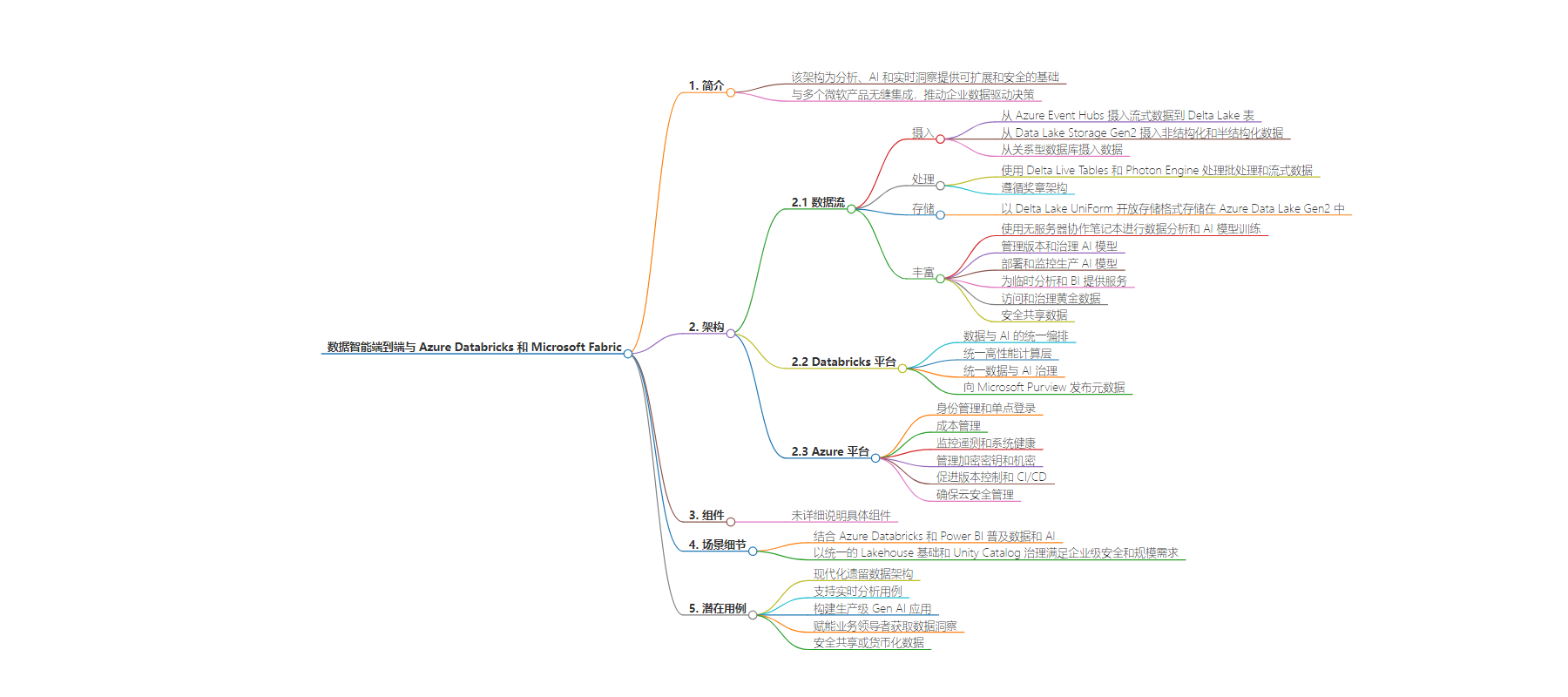

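Mind map:

Source: techcommunity.microsoft.com

Author: katiecummiskey

Published: 2024/9/4 2:35

Language: English

Total word count: 688

Estimated reading time: 3 minutes

Score: 86

Tags: Data Analytics and AI, Azure Databricks, Microsoft Fabric, Delta Lake, Real-Time Analytics

The original content follows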


This Azure Architecture Blog was written in conjunction with Isaac Gritz, Senior Solutions Architect at Databricks.

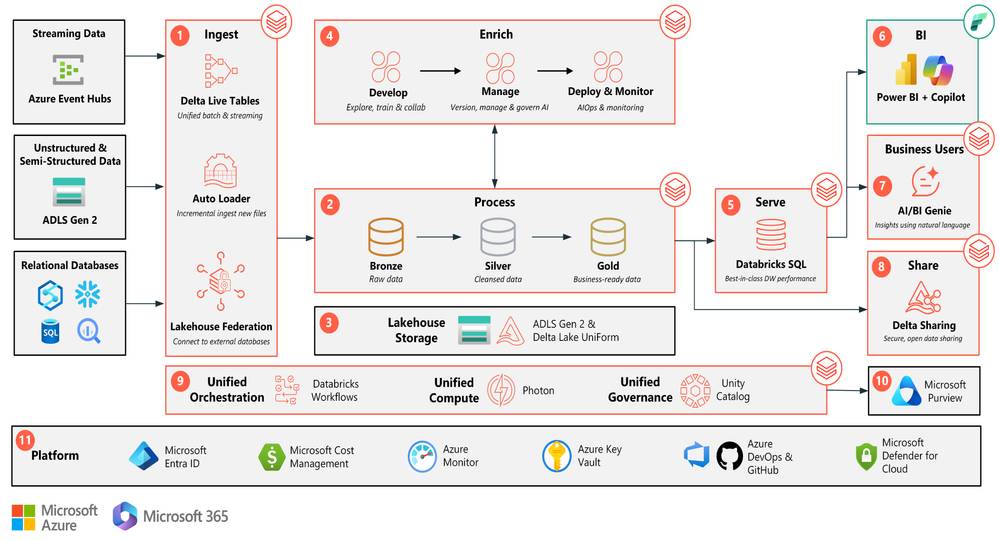

The Data Intelligence End-to-End Architecture provides a scalable, secure foundation for analytics, AI, and real-time insights across both batch and streaming data. The architecture seamlessly integrates with Power BI and Copilot in Microsoft Fabric, Microsoft Purview, Azure Data Lake Storage Gen2, and Azure Event Hubs, empowering data-driven decision-making across the enterprise.

Architecture

Dataflow

- Ingestion:

  - Ingest raw streaming data from Azure Event Hubs using Delta Live Tables into Delta Lake tables, ensuring governance through Unity Catalog.

  - Incrementally ingest unstructured and semi-structured data from Data Lake Storage Gen2 using Auto Loader into Delta Lake, maintaining consistent governance through Unity Catalog.

  - Seamlessly connect to and ingest data from relational databases using Lakehouse Federation into Delta Lake, ensuring unified governance across all data sources.

- Process both batch and streaming data at scale using Delta Live Tables and the highly performant Photon Engine following the medallion architecture:

  - Bronze: raw data for retention and auditability

  - Silver: cleansed, filtered, and joined data

  - Gold: business-ready data either in a dimensional model or aggregated

- Store all data in Delta Lake UniForm’s open storage format with Azure Data Lake Storage Gen2, supporting Delta Lake, Iceberg, and Hudi for cross-ecosystem compatibility.

- Enrich:

  - Perform exploratory data analysis, collaborate in real time, and train AI models using serverless, collaborative notebooks.

  - Manage versions and govern AI models, features, and vector indexes using MLflow, Feature Store, Unity Catalog, and Vector Search.

  - Deploy and monitor production AI models and Compound AI Systems with support for batch and real-time deployment through Model Serving and Lakehouse Monitoring.

- Serve ad-hoc analytics and BI at high concurrency directly from your data lake using Databricks SQL Serverless.

- Data analysts generate reports and dashboards using Power BI and Copilot within Microsoft Fabric.

- Gold data is accessed and governed live via a published Power BI Semantic Model connected to Unity Catalog and Databricks SQL.

- Business users can use Databricks AI/BI Genie to unlock natural language insights from their data.

- Securely share data with external customers or partners using Delta Sharing, an open protocol that ensures compatibility and security across various data consumers.

- Databricks Platform

  - Unified orchestration for Data & AI with Databricks Workflows

  - Unified, performant compute layer with the Photon Engine

  - Unified Data & AI governance with Unity Catalog

  - Publish metadata from Unity Catalog to Microsoft Purview for visibility across your data estate.

- Azure Platform

  - Identity management and single sign-on (SSO) via Microsoft Entra ID

  - Manage costs and billing via Microsoft Cost Management

  - Monitor telemetry and system health via Azure Monitor

  - Manage encrypted keys and secrets via Azure Key Vault

  - Facilitate version control and CI/CD via Azure DevOps and GitHub

  - Ensure cloud security management via Microsoft Defender for Cloud
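
As a rough illustration of the medallion layering described in the dataflow above (Bronze, Silver, Gold), here is a minimal plain-Python sketch. It deliberately does not use Delta Live Tables, Auto Loader, or any Databricks API. The sample records, the field names (`order_id`, `region`, `amount`), and the function names are all hypothetical and stand in for whatever a real pipeline would process.

```python
from collections import defaultdict

# Hypothetical raw events, standing in for records arriving from a stream or file drop.
RAW_EVENTS = [
    {"order_id": "1", "region": "emea", "amount": "120.50"},
    {"order_id": "2", "region": "amer", "amount": "80.00"},
    {"order_id": "2", "region": "amer", "amount": "80.00"},         # duplicate delivery
    {"order_id": "3", "region": "emea", "amount": "not-a-number"},  # malformed amount
    {"order_id": "4", "region": "EMEA", "amount": "30.00"},
]


def to_bronze(raw_events):
    """Bronze: keep every record as received, tagged with an ingestion sequence number."""
    return [dict(event, _ingest_seq=i) for i, event in enumerate(raw_events)]


def to_silver(bronze_rows):
    """Silver: cleanse values, filter out malformed rows, and drop duplicate orders."""
    seen_orders = set()
    silver = []
    for row in bronze_rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # malformed rows remain available in bronze for auditing
        if row["order_id"] in seen_orders:
            continue  # keep only the first delivery of each order
        seen_orders.add(row["order_id"])
        silver.append(
            {
                "order_id": row["order_id"],
                "region": row["region"].upper(),  # normalize region codes
                "amount": amount,
            }
        )
    return silver


def to_gold(silver_rows):
    """Gold: business-ready aggregate, here the total amount per region."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


bronze = to_bronze(RAW_EVENTS)
silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

The point of the sketch is the separation of concerns: bronze never discards anything, so it can serve retention and audit needs, while silver and gold can be recomputed from it if cleansing or aggregation rules change.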

Components

This solution uses the following components:

Scenario Details

This solution demonstrates how you can leverage the Azure Databricks Data Intelligence Platform combined with Power BI to democratize data and AI while meeting the need for enterprise-grade security and scale. The architecture achieves this by starting with an open, unified Lakehouse foundation governed by Unity Catalog. The Data Intelligence Engine then leverages the uniqueness of an organization’s data to provide a simple, robust, and accessible solution for ETL, data warehousing, and AI, so organizations can deliver data products more quickly and easily.

Potential Use Cases

This approach can be used to:

- Modernize a legacy data architecture by combining ETL, data warehousing, and AI to create a simpler and future-proof platform.

- Power real-time analytics use cases such as e-commerce recommendations, predictive maintenance, and supply chain optimization at scale.

- Build production-grade Gen AI applications such as AI-driven customer service agents, personalization, and document automation.

- Empower business leaders within an organization to gain insights from their data without a deep technical skillset or custom-built dashboards.

- Securely share or monetize data with partners and customers.