包阅导读总结

1.

关键词:Kubernetes、GenAI、Workloads、Storage、Frameworks

2.

总结:本文探讨了 Kubernetes 对 GenAI 的支持,包括其为运行 GenAI 工作负载提供的价值,如提供关键功能、支持 GPU 启用、部署模型和推理引擎、满足数据和存储需求等,强调其作为 GenAI 平台的优势。

3.

主要内容:

– Kubernetes 与 GenAI 概述:

– GenAI 快速发展,应用广泛。

– Kubernetes 拥有诸多特性,迎来 10 周年。

– Kubernetes 适合 GenAI 的原因:

– 提供多种关键能力,如工作负载调度、存储等。

– 方便模型共享存储和数据库高可用。

– 在 Kubernetes 上启用 GPU:

– 上游 Kubernetes 通过框架支持多种 GPU。

– 第三方集成可简化管理。

– 部署模型和推理引擎:

– 训练后的模型需下载到 Kubernetes 环境。

– 可用 Helm 部署推理引擎。

– 数据和存储:

– 需共享存储加载大语言模型。

– RAG 框架可补充模型数据。

– 应用可能需要自身数据存储。

– RAG 框架:

– 可添加新数据补充训练模型。

– 向量数据常存储在数据库,需持久存储。

– 结论:Kubernetes 为 GenAI 提供众多优势。

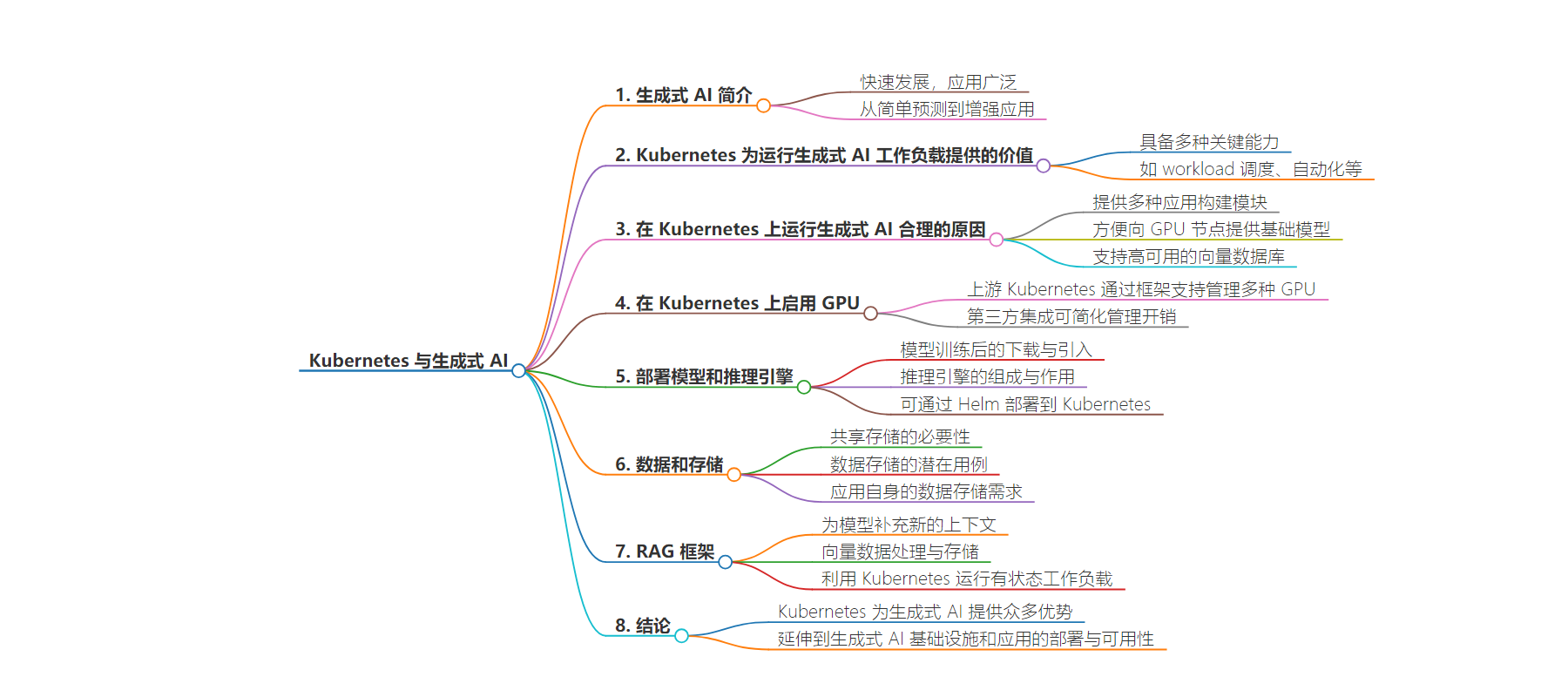

思维导图:

文章地址:https://thenewstack.io/kubernetes-for-genai-why-it-makes-so-much-sense/

文章来源:thenewstack.io

作者:Ryan Wallner

发布时间:2024/6/26 14:48

语言:英文

总字数:1288字

预计阅读时间:6分钟

评分:86分

标签:赞助商-戴尔科技,赞助帖子-贡献

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Generative AI (or GenAI) is quickly evolving, becoming essential to many organizations. It’s moved beyond simple prediction to enhancing applications with code completion, automation, deep knowledge and expertise. Whether your use case is web-based chat, customer service, documentation search, content generation, image manipulation, infrastructure troubleshooting or countless other functions, GenAI promises to help us become more efficient problem solvers.

Kubernetes, which recently marked its 10th birthday, offers valuable features for running GenAI workloads. Over the years, Kubernetes and the cloud native community have been improving, integrating and automating numerous infrastructure layers to make the lives of admins, developers and operations professionals easier.

GenAI can take advantage of this work to build frameworks that work well on top of Kubernetes. For example, the Operator Framework is already being used to adopt GenAI within Kubernetes, as it allows building applications in an automated and scalable way.

Let’s look a little deeper at why Kubernetes makes a great home for building GenAI workloads.

Why Generative AI on Kubernetes Makes Sense

Kubernetes provides building blocks for any type of application. It provides workload scheduling, automation, observability, persistent storage, security, networking, high availability, node labeling and other capabilities that are crucial for GenAI and other applications.

Take, for instance, making a foundational GenAI model like Google‘s Gemma or Meta’s Llama2 available to worker nodes with graphics processing units (GPUs). Kubernetes’ built-in Container Storage Interface (CSI) driver mechanisms make it much simpler to expose persistent shared storage for a model so that inference engines can quickly load it to the GPU’s core memory.

Another example is running a vector database like Chroma within a retrieval-augmented generation (RAG) pipeline. Databases often need to remain highly available, and Kubernetes’ built-in scheduling capability coupled with CSI drivers can enable vector databases to move to different workers in the Kubernetes cluster. This is critical in case of node, network, zone and other failures, as it keeps your pipelines up and running with access to the embeddings.

Whether you’re looking at observability, networking or much more, Kubernetes is a suitable place for GenAI applications because of its “batteries included” architecture.

Enabling GPUs on Kubernetes

Upstream Kubernetes supports managing Intel, AMD and NVIDIA GPUs through its device plugin framework, as long as an administrator has provisioned and installed the necessary hardware and drivers to the nodes.

This, along with third-party integrations via plugins and operators, sets up Kubernetes with the essential building blocks needed for enabling GenAI workloads.

Vendor support, such as the Intel Device Plugins Operator and NVIDIA GPU Operator, can also help simplify administrative overhead. For instance, the NVIDIA GPU Operator helps manage the driver, CUDA runtime and container toolkit installation and life cycle without having to perform them separately.

Deploying Models and Inference Engines

Enabling GPUs on a Kubernetes cluster is only a small part of the full GenAI puzzle. GPUs are needed to run GenAI models on Kubernetes; however, the full infrastructure layer includes other elements such as shared storage, inference engines, serving layers, embedding models, web apps and batch jobs that are needed to run a GenAI application.

Once a model is trained and available, the model needs to be downloaded and pulled into the Kubernetes environment. Many of the foundation models can be downloaded from Hugging Face, then loaded into the serving layer, which is part of the inference server or engine.

An inference engine or server, such as NVIDIA Triton Inference Server and Hugging Face Text Generation Interface (TGI), is made up of software that interfaces with pretrained models. It loads and unloads models, handles requests to the model, returns results, monitors logs and versions, and more.

Inference engines and serving layers do not have to be run on Kubernetes, but that’s what I will focus on here. You can deploy Hugging Face TGI to Kubernetes via Helm, a Kubernetes application package manager. This Helm chart from Substratus AI is an example of how to deploy and make TGI available to a Kubernetes environment using a simple configuration file to define the model and GPU-labeled nodes.

Data and Storage

Several types of data storage are required for running models and GenAI architectures, outside of the raw datasets that are fed into the training process.

For one, it is not realistic to replicate large language models (LLMs), which can be gigabytes to terabytes in size, after they’re downloaded to an environment. A better approach is using shared storage, such as a performant shared file system like a Network File System (NFS). This enables a model to be loaded into shared storage and mounted to any node that may need to load and serve the model on an available GPU.

Another potential use case for data storage is running a RAG framework to supplement running models with external or more recent sources. RAG frameworks often use vectorized data and vector databases, and a block storage-based Persistent Volume (PV) and Persistent Volume Claim (PVC) in Kubernetes can improve availability of the vector database.

Lastly, the application utilizing the model may need its own persistence to store user data, sessions and more. This will be highly dependent on the application and its data storage requirements. For example, a chatbot may store a specific user’s recent prompt queries to save the history for lookback.

RAG Frameworks

Another deployment scenario is implementing RAG or a context augmentation framework using tools such as LlamaIndex or Langchain. Deployed foundational models are typically trained on datasets at a point in time, and RAG or context augmentation can add additional context to an LLM. These frameworks add a step in the query process that can take newly sourced data and feed it and the user query to the LLM.

For example, a model trained on a company’s documents can implement a RAG framework to add newly sourced documents created after the model was trained to add context for a query. Data in a RAG framework is usually loaded and then processed into smaller chunks (called vectors) and stored in embeddings within a vector database, such as Chroma, PGVector or Milvus. These embeddings can represent diverse types of data including text, audio and images.

RAG frameworks can retrieve relevant information from the embeddings, and the model can use them as additional context in its generative response. Vector data is often more condensed and smaller than the model, but it can still benefit from using persistent storage.

Using Kubernetes to run stateful workloads is nothing new, Existing projects such as Postgres can add the PGVector extension to a Postgres cluster deployed via CloudNativePG using a PVC. PVCs enable high availability of persistent locations for databases, which allow data to move around a Kubernetes cluster. This can be important for the health of the RAG framework in case of failures or pod life cycle events.

Conclusion

Kubernetes provides a GenAI toolbox that supports compute scheduling, third-party operators, storage integrations, GPU enablement, security frameworks, monitoring and logging, application life cycle management and more. These are all considerable tactical advantages to using Kubernetes as a platform for GenAI.

In the end, using Kubernetes as the platform for your GenAI application extends the advantages it provides for operators, engineers, DevOps professionals and application developers to the deployment and usability of GenAI infrastructure and applications.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.