包阅导读总结

1. 关键词:Self-service、Sampling、BigQuery、Data Science、Limitations

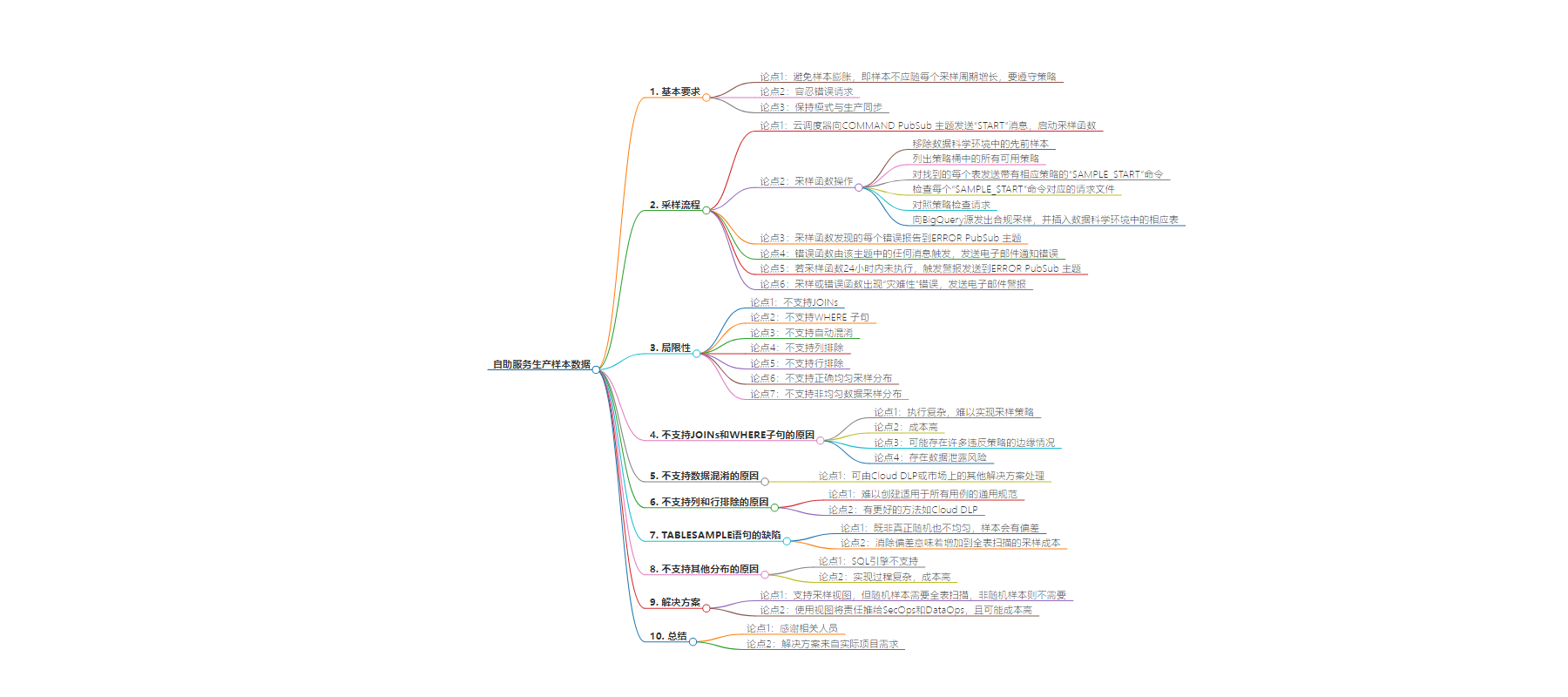

2. 总结:本文介绍了 Self-service 生产样本数据的流程及相关要求,包括避免样本膨胀、容忍错误请求、保持模式同步等,还列举了采样的局限性,如不支持 JOIN、WHERE 等操作,并说明了原因和替代方案,最后提到了解决方案设计及感谢相关人员。

3. 主要内容:

– Self-service 生产样本数据

– 需确保避免样本膨胀等问题

– 采样流程

– Cloud Scheduler 启动采样

– 采样函数执行一系列操作

– 报告错误

– 错误处理及超时警报

– 局限性

– 不支持 JOIN、WHERE 等操作,因复杂、昂贵且易违规

– 不支持自动混淆、列和行排除等,可用 Cloud DLP 等替代

– TABLESAMPLE 非真正随机均匀,有偏差

– 不支持其他数据采样分布

– 视图可作为 workaround,但随机采样需全表扫描

– 解决方案设计及致谢

思维导图:

文章来源:cloud.google.com

作者:Gustavo Kuhn Andriotti

发布时间:2024/7/8 0:00

语言:英文

总字数:2293字

预计阅读时间:10分钟

评分:85分

标签:BigQuery,数据采样,Google Cloud,DevOps,数据科学

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Figure 4 might seem like overkill, but it isn’t. We need to make sure that:

-

Sample inflation doesn’t happen, i.e., your sample should not grow with each sampling cycle. Meaning, policies must be respected.

-

You must be tolerant of faulty requests.

-

Keep the schemas in sync with production.

In detail, the sampler has the following flow:

-

Cloud Scheduler puts a message

STARTinto theCOMMANDPubSub topic. It tells the sampler function to start the sampling. -

The sampler function will do the following:

-

Remove all previous samples in the Data Science environment.

-

List all available policies in the policy bucket.

-

For each table it finds, send a

SAMPLE_STARTcommand with the corresponding policy. -

For each

SAMPLE_STARTcommand, it checks if there is a corresponding request file. They are in the request bucket. -

The request is checked against the policy.

-

A compliant sampling is issued to the BigQuery source. It is inserted in the corresponding table in the Data Science Environment.

-

Each error the sampler function finds, it reports into the

ERRORPubSub topic. -

The error function is triggered by any message in this topic. It sends an email informing about the error.

-

Assume that the sampler function isn’t executed within 24h. Then it triggers an alert that is sent to the

ERRORPubSub topic. -

If there is a “catastrophic” error in either the sampling or error functions, it sends an email alert.

Limitations

We are going to address each point in detail in the following sections. For reference, here is a short rundown of things we aren’t supporting:

-

JOINs of any kind -

WHEREclauses -

Auto-obfuscation (the data is auto-anonymized before inserting the sample)

-

Column exclusion

-

Row exclusion

-

Correct uniform sampling distribution

-

Non-uniform data sampling distributions (such as Gaussian, Power, and Pareto)

Wait, some seem pretty straightforward, why not “future work”?

We are going to “explain ourselves”. The “NOT”s do fall, mostly, in one of the categories:

We address each item in the laundry list in the sections that follow.

JOINs and WHEREs, really?

Unfortunately, yes. The issue with JOINs and WHEREs is that they are too complex to implement to enforce a sampling policy. Here is a simple example:

-

Table

TYPE_OF_AIRCRAFT, which is a simple ID for a specific aircraft, for example, Airbus A320 neo has IDABC123. -

Table

FLIGHT_LEG, which is a single flight on a specific day, for example, London Heathrow to Berlin at 14:50 Sunday. -

Table

PASSENGER_FLIGHT_LEGprovides which passenger is sitting where in a particularFLIGHT_LEG.

You can now construct a query that joins all of these tables together. You can ask all passengers flying in a particular aircraft type on a particular day. In this case, to honor the policies, we have to do the following:

-

Execute the query.

-

Verify how much data from each particular table is being pulled through it.

-

Start capping based on the “allowances”.

This process will be:

-

Hard to implement without a SQL AST.

-

Probably very expensive for you. Therefore, we will execute and then “trim” (you are paying for the full query).

-

Can have many edge cases that violate the policies.

-

Risk of data exfiltration.

But I need to obfuscate data, please

A lot of people do need obfuscation, we know. This topic alone is addressed by Cloud DLP. There are also many (more) capable solutions in the market that you can use for it. See the blog post: Take charge of your data: using Cloud DLP to de-identify and obfuscate sensitive information.

Column and row exclusion sounds simple

We agree that column and row exclusions are simple, and it is even easier (and safer) to deal with using views or Cloud DLP. The reason we don’t do it here is because it is a difficult use case to create a generic specification that works for all use cases. Also, there are much better approaches like Cloud DLP. It all depends on the reason why you want to drop columns or rows.

Wait, is it not really uniform either?

Except for views, we rely on TABLESAMPLE statements. The reason is cost. A truly random sample means using the ORDER BY RAND() strategy, which requires a full table scan. With TABLESAMPLE statements, you are only paying a bit more than the amount of data you want. Let us go deeper.

The caveat on TABLESAMPLE statements

This technique allows us to sample a table without having to read it all. But there is a huge CAVEAT using TABLESAMPLE. It is neither truly random nor uniform. Your sample will have the bias in your table blocks. Here is how it works, according to the documentation:

The following example reads approximately 20% of the data blocks from storage and then randomly selects 10% of the rows in those blocks:

SELECT * FROM dataset.my_table TABLESAMPLE SYSTEM (20 PERCENT)

WHERE rand() < 0.1

An example is always easier. Let us build one with a lot of skewness to show what TABLESAMPLE does. Imagine that your table has a single integer column. Now picture that your blocks have the following characteristics:

|

Block ID |

Average |

Distribution |

Description |

|

1 |

10 |

Single value |

All values are 10 |

|

2 |

9 |

Single value |

All values are 9 |

|

3 |

5 |

Uniform from 0 to 10 |

|

|

4 |

4 |

Uniform from -1 to 9 |

|

|

5 |

0 |

Uniform from -5 to 5 |

At this point, we are interested in looking at what happens to the average of your sample when using TABLESAMPLE. For simplicity, assume:

TABLESAMPLE will sample 40% of the blocks and you will get two blocks. Let us look at your average. Let us assume that blocks with Block ID 1 and 2 were selected. This means that your sample average is now 9.5. Even if you use the downsampling that is suggested in the documentation, you will still end up with a biased sample. Simply put, if your blocks have bias, your sample has it too.

Again, removing the potential bias means increasing the sampling costs to a full table scan.

I need other distributions, why not?

There are several reasons why not. The main reason is that other distributions aren’t supported by the SQL engine. There is no workaround for the missing feature. The only way to have it is to implement it. Here is where things get complicated. Fair warning, if your stats are rusty, it is going to be rough.

All the statements below are based on the following weird property of the cumulative distribution function (CDF):

For a given distribution its CDF is continuously distributed.

Source and a lecture if you feel like it.

For it to work, you will need to do the following:

-

Get all data on the target column (which is being the target of the distribution).

-

Compute the column’s CDF.

-

Randomly/uniformly sample the CDF.

-

Translate the above to a row number/ID.

-

Put the rows in the sample.

This process can be done, but has some implications, such as the following:

-

You will need a full table scan.

-

You will have to have a “beefier” instance to hold all of the data (think billions of rows), and you will have to compute the CDF.

This means that you will be paying for the following:

We decided it isn’t worth the cost.

Views: the workaround

We do support sampling views. This means that you can always pack your “special sauce” in them and let the sampler do its job. But views don’t support BigQuery’s TABLESAMPLE statement. This means that random samples need a full table scan using the ORDER BY RAND() strategy. The full table scan doesn’t happen on non-random samples.

Feels like you cheated

Yes, you are right, we cheated. The workaround using views pushes the liability onto SecOps and DataOps, who will need to define compliant views and sample policies. Also, it can be costly, because querying the view is like executing the underlying query and sampling it. Be very careful especially with the random samples from views due to the full table scan nature of it on views.

Solution design

We settled around a very simple solution that has the following components:

Now that you know what is under the hood, let us do it: check out the code here and happy hacking.

We owe a big “thank you” to the OPSD project team from SWISS and Lufthansa Group. This post came from a real necessity within the project to have fresh PROD samples and to be compliant with SecOps. We want to especially thank Federica Lionetto for co-writing this post, Yaroslav Khanko for reviewing the security aspects, Ziemowit Kupracz for the terraform code review, and Regula Gantenbein for the CI/CD integration.