Quick-Read Summary

1. Keywords: Multimodal search, NLP, BigQuery, embeddings, Cloud Storage

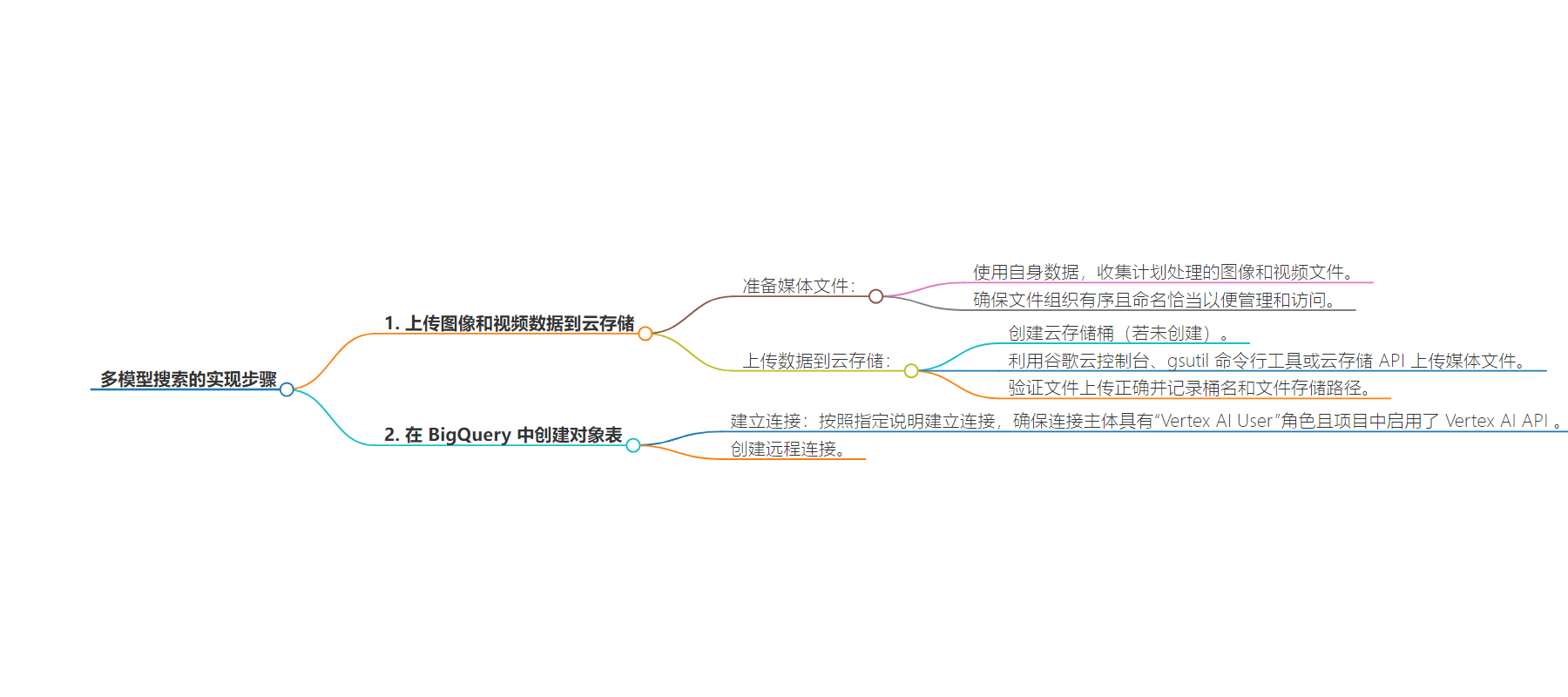

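2. Summary: This article describes the steps for implementing a similar multimodal search solution, including uploading image and video data to Cloud Storage and creating an object table in BigQuery. It also covers the requirements for preparing the media files and establishing a connection.

3. Main content: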

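– Upload image and video data to Cloud Storage

– Prepare the media files: use your own data and make sure the files are organized and named appropriately.

– Create a Cloud Storage bucket and upload the media files, using any of several upload methods. After uploading, verify the files and record the bucket details.

– Create an object table in BigQuery

– The table points to the source image and video files in the Cloud Storage bucket and is read-only.

– Before creating it, establish a connection, and make sure the connection's principal has the “Vertex AI User” role and that the Vertex AI API is enabled in the project.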


Source: cloud.google.com

Author: Layolin Jesudhass

Published: 2024/8/26 0:00

Language: English

Word count: 1002

Estimated reading time: 5 minutes

Score: 91

Tags: multimodal search, NLP, BigQuery, embeddings, Google Cloud

Original article:


To implement a similar solution, follow the steps below.

Steps 1 – 2: Upload image and video data to Cloud Storage

Upload all image and video files to a Cloud Storage bucket. For this demo, we downloaded some images and videos from Google Search; they are available on GitHub. If you use these sample files, be sure to remove the README.md file before uploading them to your Cloud Storage bucket.

Prepare your media files:

- If you’re using your own data, collect all the image and video files you plan to work with.
- Ensure the files are organized and named appropriately for easy management and access.
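The preparation step can be sketched with the Python standard library. This is a minimal sketch, assuming that "image and video files" means the extensions in `MEDIA_EXTENSIONS` (an assumption; adjust it for your data). It walks a local folder recursively, keeps only media files (so the sample repository's `README.md` is excluded, since `.md` is not a media extension), and flags names containing spaces, which are easy to mis-quote in shell commands. Both function names are hypothetical.

```python
from pathlib import Path

# Assumed set of image and video extensions; extend it to match your own data.
MEDIA_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".mp4", ".mov", ".avi", ".mkv"}


def collect_media_files(root: str) -> list[Path]:
    """Return all image/video files under `root` (recursively), sorted by path.

    Non-media files such as README.md are skipped because their extension
    is not in MEDIA_EXTENSIONS. Extension matching is case-insensitive.
    """
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in MEDIA_EXTENSIONS
    )


def names_needing_cleanup(files: list[Path]) -> list[str]:
    """Return file names that contain spaces and may be worth renaming before upload."""
    return [f.name for f in files if " " in f.name]
```

For example, `collect_media_files("./media")` returns the paths you would upload, and `names_needing_cleanup(...)` on that result lists candidates for renaming.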

Upload data to Cloud Storage:

- Create a Cloud Storage bucket, if you haven’t already.
- Upload your media files into the bucket. You can use the Google Cloud console, the `gsutil` command-line tool, or the Cloud Storage API.
- Verify that the files are uploaded correctly, and note the bucket’s name and the path where the files are stored (e.g., `gs://your-bucket-name/your-files`).
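The create, upload, and verify steps can be expressed as `gsutil` invocations. The sketch below only builds the argument lists, so they can be reviewed or dry-run before anything touches your project. It assumes `gsutil` is installed and authenticated; the helper name is hypothetical, and the bucket name, prefix, file list, and location are placeholders you supply.

```python
def build_upload_commands(bucket: str, prefix: str, files: list[str], location: str) -> list[list[str]]:
    """Return argv lists that create a bucket, upload files into gs://bucket/prefix/, and list them.

    bucket:   target bucket name (placeholder, e.g. "your-bucket-name")
    prefix:   folder-like path inside the bucket (e.g. "your-files")
    files:    local file paths to upload
    location: bucket location, e.g. "us-central1" (ideally matching your BigQuery dataset)
    """
    if not files:
        raise ValueError("no files to upload")
    prefix = prefix.strip("/")
    dest = f"gs://{bucket}/{prefix}/" if prefix else f"gs://{bucket}/"
    return [
        # Create the bucket; skip this command if the bucket already exists.
        ["gsutil", "mb", "-l", location, f"gs://{bucket}"],
        # Copy all files in parallel (-m); the trailing "/" makes dest act as a folder.
        ["gsutil", "-m", "cp", *files, dest],
        # Long listing (-l) shows each object's size and timestamp, for verification.
        ["gsutil", "ls", "-l", dest],
    ]
```

To execute, pass each list to `subprocess.run(cmd, check=True)`; `shlex.join(cmd)` prints a copy-pasteable shell line for a dry run. Because files are copied flat into one prefix, two local files with the same name in different folders would overwrite each other.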

Step 3: Create an object table in BigQuery

Create an object table in BigQuery to point to your source image and video files in the Cloud Storage bucket. Object tables are read-only tables over unstructured data objects that reside in Cloud Storage. You can learn about other use cases for BigQuery object tables here.
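An object table is created with a `CREATE EXTERNAL TABLE ... WITH CONNECTION` statement whose options set `object_metadata = 'SIMPLE'` and list the Cloud Storage `uris`. The hypothetical helper below is a sketch that renders that DDL; the table, connection, and URI values in the example are placeholders, and the connection must already exist, per the requirements described next.

```python
def object_table_ddl(table: str, connection: str, uris: list[str]) -> str:
    """Render BigQuery DDL for an object table over Cloud Storage objects.

    table:      fully qualified table, "project.dataset.table"
    connection: connection ID, "project.location.connection_id"
    uris:       Cloud Storage URIs; a "*" wildcard selects many objects
    """
    for uri in uris:
        # Reject non-GCS URIs and quotes that would break the SQL string literal.
        if not uri.startswith("gs://") or "'" in uri:
            raise ValueError(f"unsupported URI: {uri!r}")
    uri_list = ", ".join(f"'{u}'" for u in uris)
    return (
        f"CREATE EXTERNAL TABLE `{table}`\n"
        f"WITH CONNECTION `{connection}`\n"
        "OPTIONS (\n"
        "  object_metadata = 'SIMPLE',\n"
        f"  uris = [{uri_list}]\n"
        ");"
    )
```

For example, `object_table_ddl("my-project.media.media_objects", "my-project.us.media-conn", ["gs://your-bucket-name/your-files/*"])` yields a statement you could run in the BigQuery console or with `bq query --use_legacy_sql=false`. Keep the dataset, connection, and bucket in compatible locations.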

Before you create the object table, establish a connection, as described here. Ensure that the connection’s principal has the ‘Vertex AI User’ role and that the Vertex AI API is enabled for your project.
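The connection prerequisites map to a handful of `gcloud` and `bq` commands. The hypothetical helpers below build them as argument lists: enable the Vertex AI API (plus the BigQuery Connection API, which creating a connection also requires), create a Cloud resource connection, show it so you can read off its service account, and grant that principal the Vertex AI User role (`roles/aiplatform.user`). Project, location, connection ID, and service-account values are placeholders.

```python
def connection_setup_commands(project: str, location: str, connection_id: str) -> list[list[str]]:
    """Return argv lists that enable the required APIs and create a Cloud resource connection."""
    return [
        # Vertex AI API (required by the article) and BigQuery Connection API (for bq mk --connection).
        ["gcloud", "services", "enable", "aiplatform.googleapis.com",
         "bigqueryconnection.googleapis.com", f"--project={project}"],
        ["bq", "mk", "--connection", f"--location={location}", f"--project_id={project}",
         "--connection_type=CLOUD_RESOURCE", connection_id],
        # Prints connection details, including the service account that acts as its principal.
        ["bq", "show", "--connection", f"{project}.{location}.{connection_id}"],
    ]


def grant_vertex_user_command(project: str, service_account: str) -> list[str]:
    """Return the argv that grants the Vertex AI User role to the connection's service account."""
    return ["gcloud", "projects", "add-iam-policy-binding", project,
            f"--member=serviceAccount:{service_account}", "--role=roles/aiplatform.user"]
```

The service account to pass to `grant_vertex_user_command` is the `serviceAccountId` value in the `bq show --connection` output.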

Create remote connection