Baoyue Reading Guide: Summary

1.

Keywords: Spring AI, Groq, AI inference engine, configuration, performance

2.

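Summary: This article introduces Spring AI's support for Groq, a fast AI inference engine. It covers obtaining an API key, adding dependencies, and configuring environment variables, and it provides code examples and key considerations that show how Groq integrates into Spring applications and what advantages it brings.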

3.

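Main content:

– Spring AI and Groq integration

– Introduces Groq as a fast AI inference engine that supports tool/function calling

– Uses Groq's OpenAI-compatible API for seamless integration

– Configuration and dependencies

– Obtain a Groq API key

– Add the Spring AI OpenAI starter dependency

– Set environment variables or configure application.properties

– Code examples

– Shows a simple REST controller with endpoints for generating a single response and a streamed response

– Provides an example of using Groq function calling with Spring AI

– Key considerations

– Covers tool/function calling support, API compatibility, model selection, multimodal limitations, and performance

– Conclusion

– Highlights the possibilities and benefits of the integration, and encourages exploring it while keeping up with the documentation as things change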

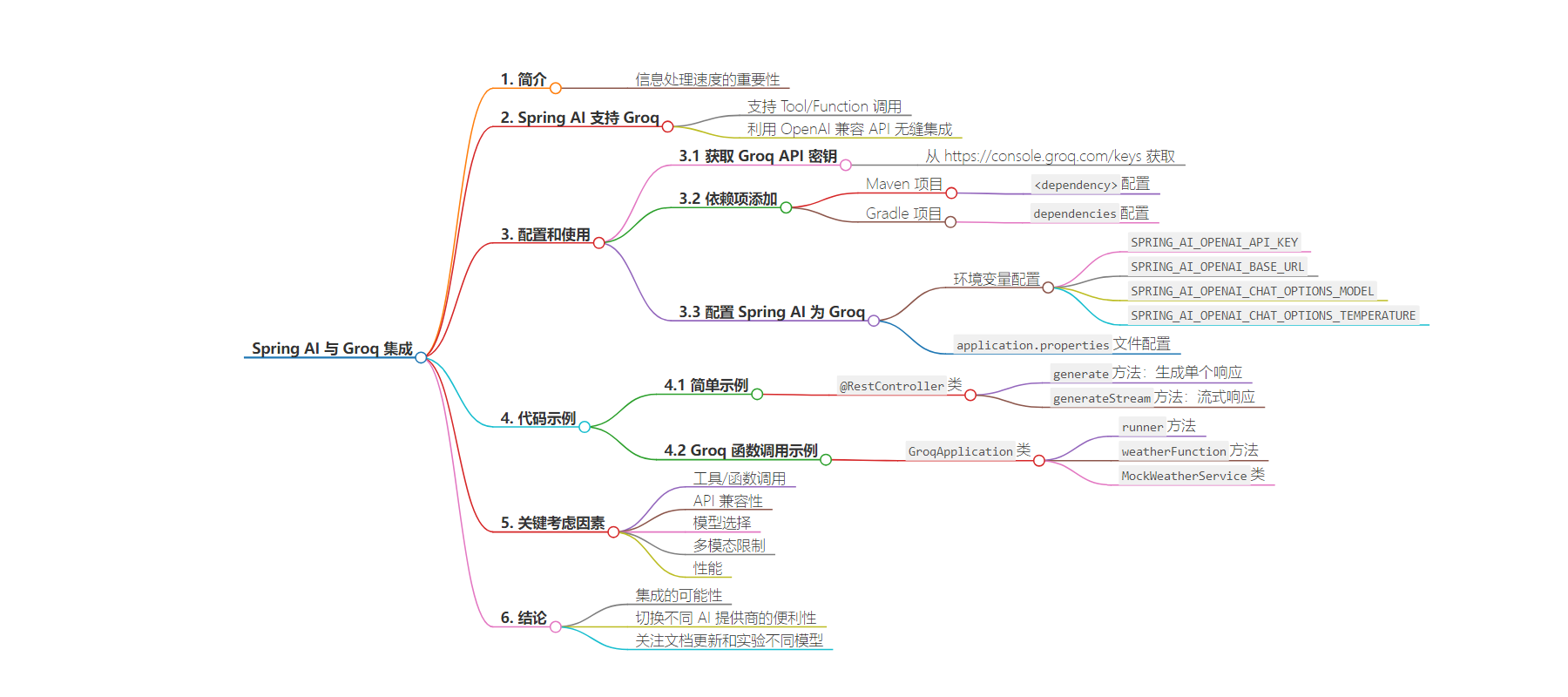

Mind map:

Article URL: https://spring.io/blog/2024/07/31/spring-ai-with-groq-a-blazingly-fast-ai-inference-engine

Source: spring.io

Author: Christian Tzolov

Published: 2024/7/31 0:00

Language: English

Word count: 830

Estimated reading time: 4 minutes

Rating: 81

Tags: Spring AI, Groq, AI inference engine, OpenAI API, Java

The original article follows


Faster information processing not only informs – it transforms how we perceive and innovate.

Spring AI, a powerful framework for integrating AI capabilities into Spring applications, now offers support for Groq – a blazingly fast AI inference engine with support for Tool/Function calling.

Leveraging Groq’s OpenAI-compatible API, Spring AI integrates with Groq seamlessly by adapting its existing OpenAI chat client. This approach enables developers to harness Groq’s high-performance models through the familiar Spring AI API.

We’ll explore how to configure and use the Spring AI OpenAI chat client to connect with Groq. For detailed information, consult the Spring AI Groq documentation and related tests.

Groq API Key

To interact with Groq, you’ll need to obtain a Groq API key from https://console.groq.com/keys.

Dependencies

Add the Spring AI OpenAI starter to your project.

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-openai-spring-boot-starter</artifactId>
</dependency>
```

For Gradle, add this to your `build.gradle`:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-openai-spring-boot-starter'
}
```

Ensure you’ve added the Spring Milestone and Snapshot repositories, as well as the Spring AI BOM.

Configuring Spring AI for Groq

To use Groq with Spring AI, we need to configure the OpenAI client to point to Groq’s API endpoint and use Groq-specific models.

Add the following environment variables to your project:

```shell
export SPRING_AI_OPENAI_API_KEY=<INSERT GROQ API KEY HERE>
export SPRING_AI_OPENAI_BASE_URL=https://api.groq.com/openai
export SPRING_AI_OPENAI_CHAT_OPTIONS_MODEL=llama3-70b-8192
```

Alternatively, you can add these to your `application.properties` file:

```properties
spring.ai.openai.api-key=<GROQ_API_KEY>
spring.ai.openai.base-url=https://api.groq.com/openai
spring.ai.openai.chat.options.model=llama3-70b-8192
spring.ai.openai.chat.options.temperature=0.7
```

Key points:

- The `api-key` is set to one of your Groq keys.
- The `base-url` is set to Groq’s API endpoint: `https://api.groq.com/openai`.
- The `model` is set to one of Groq’s available models.

For the complete list of configuration properties, consult the Groq chat properties documentation.
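To make the `base-url` choice concrete, here is a plain-JDK sketch (no Spring AI involved) of the kind of OpenAI-style chat request the client ends up sending to Groq. It assumes the OpenAI client appends `/v1/chat/completions` to the configured base URL, which is why `base-url` stops at `/openai`. The class and method names are hypothetical, and the hand-built JSON body is for illustration only.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class GroqRequestSketch {

    // Assumption: the OpenAI-compatible client appends this path to the configured base URL.
    static final String CHAT_COMPLETIONS_PATH = "/v1/chat/completions";

    // Builds (but does not send) an OpenAI-style chat completion request.
    static HttpRequest buildChatRequest(String baseUrl, String apiKey, String model, String userMessage) {
        // Minimal escaping for illustration; real code should use a JSON library.
        String escaped = userMessage.replace("\\", "\\\\").replace("\"", "\\\"");
        String body = String.format(
                "{\"model\": \"%s\", \"messages\": [{\"role\": \"user\", \"content\": \"%s\"}]}",
                model, escaped);
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + CHAT_COMPLETIONS_PATH))
                .header("Authorization", "Bearer " + apiKey) // the Groq API key goes here
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildChatRequest(
                "https://api.groq.com/openai", "GROQ_API_KEY_PLACEHOLDER", "llama3-70b-8192", "Tell me a joke");
        // Prints: POST https://api.groq.com/openai/v1/chat/completions
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Because only the base URL, key, and model differ from a regular OpenAI setup, the same client code can target Groq purely through configuration.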

Code Example

Now that we’ve configured Spring AI to use Groq, let’s look at a simple example of how to use it in your application.

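```java
import java.util.Map;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

@RestController
public class ChatController {

    private final ChatClient chatClient;

    // The injected builder is auto-configured from the OpenAI starter, which the properties point at Groq.
    @Autowired
    public ChatController(ChatClient.Builder builder) {
        this.chatClient = builder.build();
    }

    // Blocking call: returns the complete response at once.
    @GetMapping("/ai/generate")
    public Map<String, String> generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        String response = chatClient.prompt().user(message).call().content();
        return Map.of("generation", response);
    }

    // Streaming call: emits content chunks as they arrive.
    @GetMapping("/ai/generateStream")
    public Flux<String> generateStream(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        return chatClient.prompt().user(message).stream().content();
    }
}
```

In this example, we’ve created a simple REST controller with two endpoints: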

- `/ai/generate`: Generates a single response to a given prompt.
- `/ai/generateStream`: Streams the response, which can be useful for longer outputs or real-time interactions.
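To exercise the controller from plain Java, a client only needs to URL-encode the prompt into the `message` query parameter. The sketch below uses the JDK’s `java.net.http` client; `ChatEndpointClient` is a hypothetical name, and it assumes the application runs locally on Spring Boot’s default port 8080.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class ChatEndpointClient {

    // Builds the /ai/generate URI, URL-encoding the prompt into the "message" query parameter.
    static URI generateUri(String baseUrl, String message) {
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/ai/generate?message=" + encoded);
    }

    // Sends a blocking GET and returns the raw JSON body, e.g. {"generation":"..."}.
    // Requires the Spring Boot application to be running at baseUrl.
    static String generate(HttpClient client, String baseUrl, String message)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(generateUri(baseUrl, message)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        // Only builds the URI, so this runs without a server; call generate(...) against a live app.
        // Prints: http://localhost:8080/ai/generate?message=Tell+me+a+joke
        System.out.println(generateUri("http://localhost:8080", "Tell me a joke"));
    }
}
```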

Groq API endpoints support tool/function calling when you select one of the models that supports it.

You can register custom Java functions with your ChatModel, and the Groq model can then intelligently choose to output a JSON object containing the arguments to call one or more of the registered functions. This is a powerful technique for connecting LLM capabilities with external tools and APIs.
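Conceptually, the framework keeps a registry of named functions; when the model replies with a tool call, it looks up the function by name and applies it to the supplied arguments. The plain-Java sketch below illustrates only that dispatch step. It is not Spring AI’s implementation, and it assumes the JSON arguments have already been deserialized into a record; `ToolCall`, `REGISTRY`, and `dispatch` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class ToolDispatchSketch {

    record WeatherRequest(String location, String unit) {}

    record WeatherResponse(double temp, String unit) {}

    // Hypothetical: a tool call as the model might request it (function name plus arguments).
    // Real tool calls arrive as JSON, which the framework deserializes for you.
    record ToolCall(String name, WeatherRequest arguments) {}

    // Registry of callable functions keyed by name (Spring AI resolves them by bean name).
    static final Map<String, Function<WeatherRequest, WeatherResponse>> REGISTRY = Map.of(
            "weatherFunction",
            req -> new WeatherResponse(req.location().contains("Amsterdam") ? 20 : 25, req.unit()));

    // Executes each requested call; the results would be sent back to the model.
    static List<WeatherResponse> dispatch(List<ToolCall> calls) {
        List<WeatherResponse> results = new ArrayList<>();
        for (ToolCall call : calls) {
            Function<WeatherRequest, WeatherResponse> fn = REGISTRY.get(call.name());
            if (fn == null) {
                throw new IllegalArgumentException("Unknown function: " + call.name());
            }
            results.add(fn.apply(call.arguments()));
        }
        return results;
    }

    public static void main(String[] args) {
        // The model may request several calls in one turn, e.g. one per city.
        List<WeatherResponse> results = dispatch(List.of(
                new ToolCall("weatherFunction", new WeatherRequest("Amsterdam", "C")),
                new ToolCall("weatherFunction", new WeatherRequest("Paris", "C"))));
        results.forEach(System.out::println);
    }
}
```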

Here’s a simple example of how to use Groq function calling with Spring AI:

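```java
import java.util.function.Function;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Description;

@SpringBootApplication
public class GroqApplication {

    public static void main(String[] args) {
        SpringApplication.run(GroqApplication.class, args);
    }

    @Bean
    CommandLineRunner runner(ChatClient.Builder chatClientBuilder) {
        return args -> {
            var chatClient = chatClientBuilder.build();
            var response = chatClient.prompt()
                    .user("What is the weather in Amsterdam and Paris?")
                    .functions("weatherFunction") // reference by bean name.
                    .call()
                    .content();
            System.out.println(response);
        };
    }

    // The @Description text tells the model what the function does.
    @Bean
    @Description("Get the weather in location")
    public Function<WeatherRequest, WeatherResponse> weatherFunction() {
        return new MockWeatherService();
    }

    // Declared at this level so the weatherFunction() signature can refer to them.
    public record WeatherRequest(String location, String unit) {}

    public record WeatherResponse(double temp, String unit) {}

    // Mock implementation: returns fixed temperatures instead of calling a real weather API.
    public static class MockWeatherService implements Function<WeatherRequest, WeatherResponse> {

        @Override
        public WeatherResponse apply(WeatherRequest request) {
            double temperature = request.location().contains("Amsterdam") ? 20 : 25;
            return new WeatherResponse(temperature, request.unit());
        }
    }
}
```

In this example, when the model needs weather information, it will automatically call the `weatherFunction` bean. Here the bean is a mock that returns fixed values; a real implementation could fetch live weather data instead.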

The expected response looks like this: “The weather in Amsterdam is currently 20 degrees Celsius, and the weather in Paris is currently 25 degrees Celsius.”

Read more about OpenAI Function Calling.

Key Considerations

When using Groq with Spring AI, keep the following points in mind:

- Tool/Function Calling: Groq supports tool/function calling. Check Groq’s documentation for the models recommended for tool use.

- API Compatibility: The Groq API is not fully compatible with the OpenAI API. Be aware of potential differences in behavior or features.

- Model Selection: Ensure you’re using one of the Groq-specific models.

- Multimodal Limitations: Currently, Groq doesn’t support multimodal messages.

- Performance: Groq is known for its fast inference times. You may notice improved response speeds compared to other providers, especially for larger models.
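To check the performance claim against your own workload, you can time calls to different models or providers side by side. The following is a minimal plain-Java sketch; `LatencyComparison` and the supplier entries are hypothetical stand-ins for real `ChatClient` calls, and because a single run is noisy, it reports the median over several runs.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

public class LatencyComparison {

    // Result of a single call plus how long it took.
    record Timed<T>(T result, Duration elapsed) {}

    // Runs the call once and measures elapsed time with the monotonic nanoTime clock.
    static <T> Timed<T> time(Supplier<T> call) {
        long start = System.nanoTime();
        T result = call.get();
        return new Timed<>(result, Duration.ofNanos(System.nanoTime() - start));
    }

    // Runs the call several times and returns the median duration (upper median for even counts).
    static <T> Duration median(Supplier<T> call, int runs) {
        if (runs <= 0) {
            throw new IllegalArgumentException("runs must be positive");
        }
        List<Duration> samples = new ArrayList<>();
        for (int i = 0; i < runs; i++) {
            samples.add(time(call).elapsed());
        }
        Collections.sort(samples);
        return samples.get(samples.size() / 2);
    }

    public static void main(String[] args) {
        // Hypothetical stand-ins: in a real app each supplier would wrap something like
        // chatClient.prompt().user("Tell me a joke").call().content() for a different model.
        Map<String, Supplier<String>> candidates = new LinkedHashMap<>();
        candidates.put("model-a", () -> "response A");
        candidates.put("model-b", () -> "response B");

        candidates.forEach((name, call) ->
                System.out.printf("%s: median %d ms over 5 runs%n", name, median(call, 5).toMillis()));
    }
}
```

Latency is only half the picture; logging each `result()` next to its timing also lets you compare output quality across models.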

Conclusion

Integrating Groq with Spring AI opens up new possibilities for developers looking to leverage high-performance AI models in their Spring applications. By repurposing the OpenAI client, Spring AI makes it straightforward to switch between different AI providers, allowing you to choose the best solution for your specific needs.

As you explore this integration, remember to stay updated with the latest documentation from both Spring AI and Groq, as features and compatibility may evolve over time.

We encourage you to experiment with different Groq models and compare their performance and outputs to find the best fit for your use case.

Happy coding, and enjoy the speed and capabilities that Groq brings to your AI-powered Spring applications!