Baoyue Reading Guide Summary

1.

Open source AI, risk mitigation strategies, PAI, GitHub, responsible practices

2.

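Today, the Partnership on AI (PAI) published a report on risk mitigation strategies for the open foundation model value chain, an important step for responsible practices in the open source AI value chain. GitHub took part in this work as part of its commitment to a vibrant and responsible open source ecosystem, and the report has significant implications for policy and practice.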

3.

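– Advancing responsible practices for open source AI

– PAI published the report "Risk Mitigation Strategies for the Open Foundation Model Value Chain", offering guidance to actors across the value chain

– The report is based on a workshop co-hosted by GitHub and PAI

– GitHub supports a vibrant and responsible open source ecosystem

– The platform hosts a large number of AI-related open source projects

– GitHub works to make this innovation more accessible and understandable, and evaluates and updates its platform policies

– GitHub takes part in efforts to address AI risks and educates policymakers about open source AI

– The report is an important resource for policy and practice, helping policymakers better understand how roles and responsibilities are distributed in the creation of AI systems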

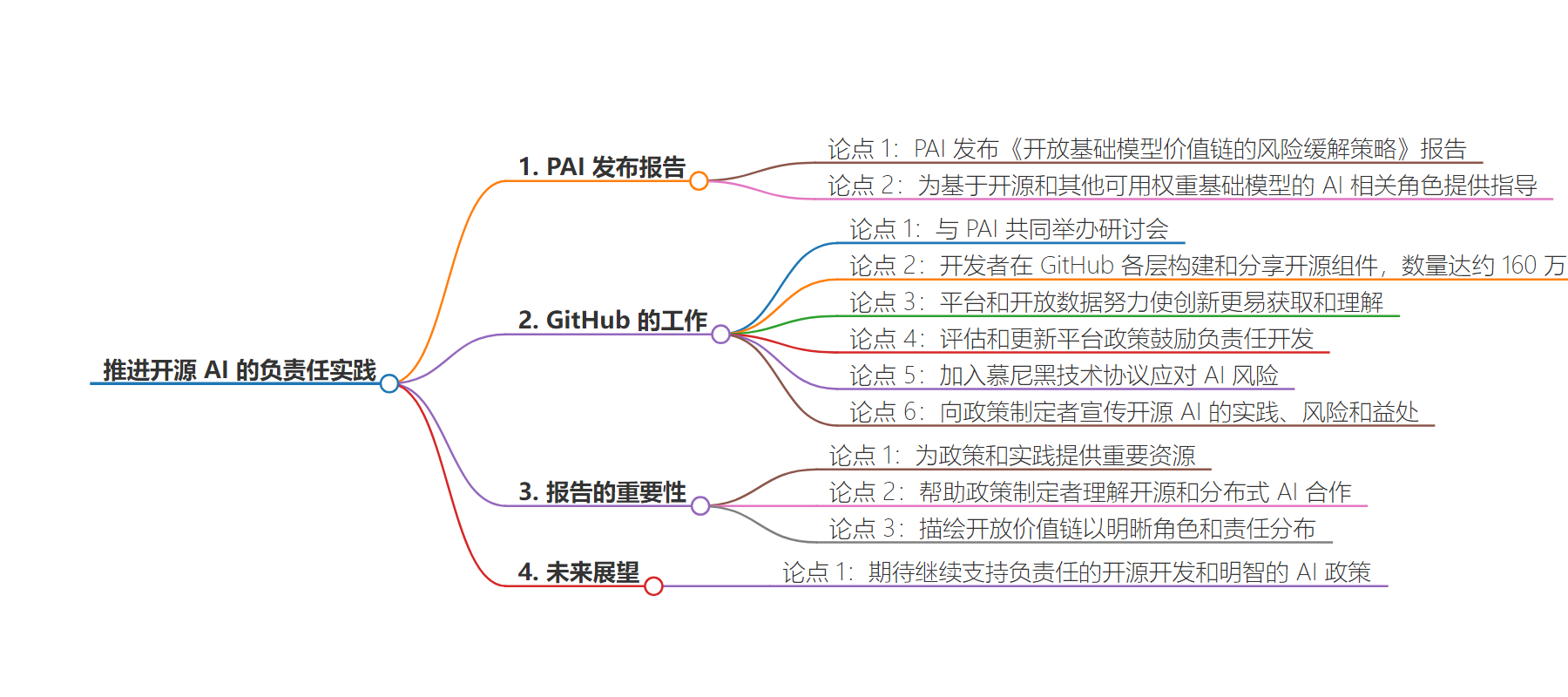

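Mind map:

Article URL: https://github.blog/2024-07-11-advancing-responsible-practices-for-open-source-ai/

Source: github.blog

Author: Peter Cihon

Published: 2024/7/11 21:26

Language: English

Word count: 319

Estimated reading time: 2 minutes

Rating: 89

Tags: Open source AI, risk mitigation, AI governance, GitHub, Partnership on AI

The original article follows: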


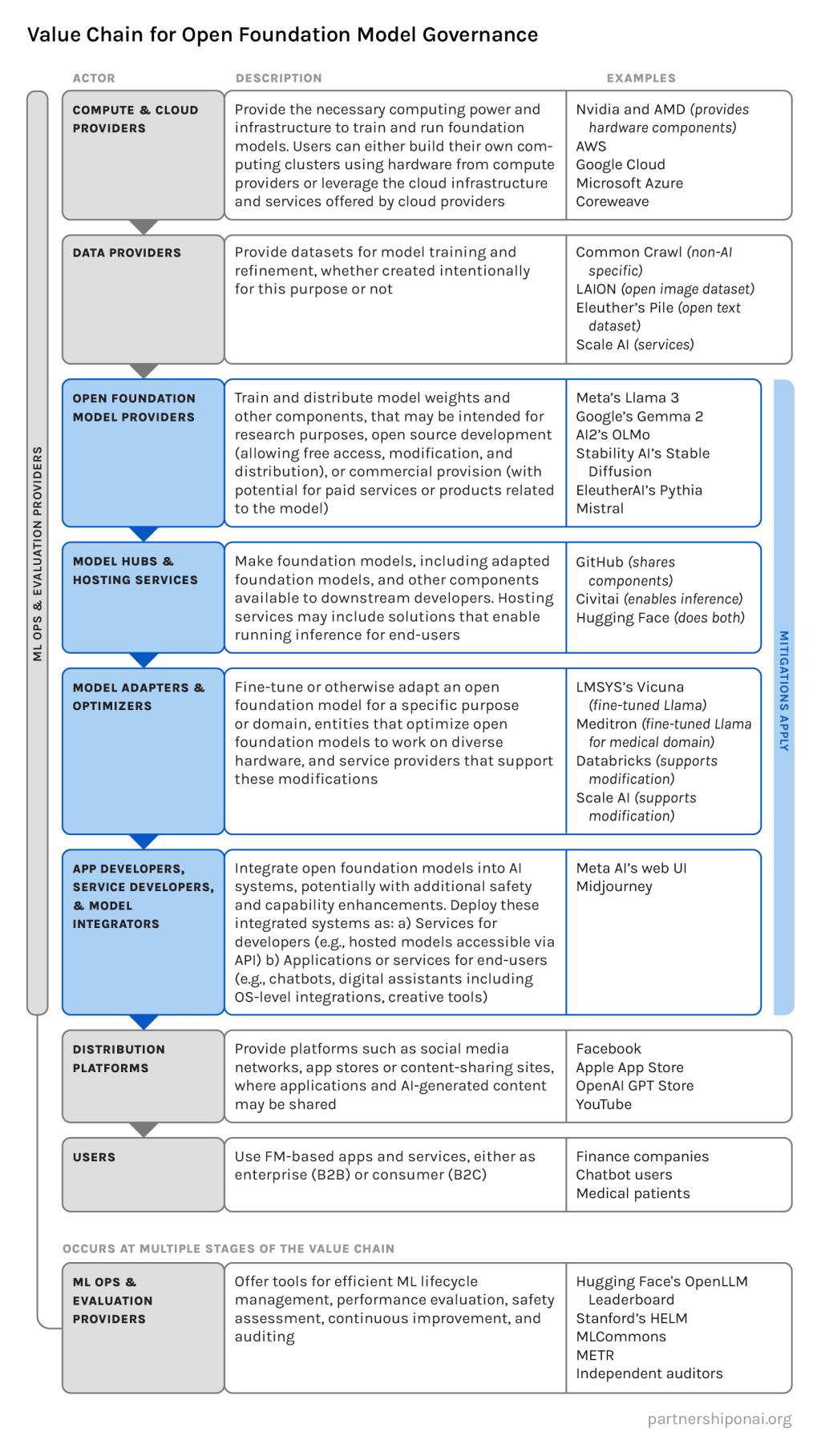

Today, the Partnership on AI (PAI) published a report, Risk Mitigation Strategies for the Open Foundation Model Value Chain. The report provides guidance for actors building, hosting, adapting, and serving AI that relies on open source and other weights-available foundation models. It is an important step forward for responsible practices in the open source AI value chain.

The report is based on a workshop that GitHub recently co-hosted with PAI, as part of our work to support a vibrant and responsible open source ecosystem. Developers build and share open source components at every level of the AI stack on GitHub, amounting to some 1.6 million repositories. These projects range from foundational frameworks like PyTorch, to agent orchestration software like LangChain, to models like Grok and responsible AI tooling like AI Verify. Our platform and open data efforts work to make this innovation more accessible and understandable to developers, researchers, and policymakers alike. We evaluate and periodically update our platform policies to encourage responsible development, and we recently joined the Munich Tech Accord to address AI risks in this year's elections. We work to educate policymakers on the practices, risks, and benefits of open source AI, including in the United States to inform implementation of the Biden Administration's Executive Order and in the EU to secure an improved AI Act.

Reports like Risk Mitigation Strategies for the Open Foundation Model Value Chain are important resources to inform policy and practice. Policymakers often have a better understanding of vertically integrated AI stacks and the governance affordances of API access than they do of open source and distributed AI collaborations. In addition to beginning to consolidate best practices, the report delineates the open value chain (as pictured below) to provide policymakers a clearer understanding of the distribution of roles and responsibilities in the creation of AI systems today. We look forward to continuing to support responsible open source development and informed AI policy.