Baoyue Reading Guide: Summary

1. `GenAI`、`customer support`、`Vertex AI`、`automation`、`proof of concept`

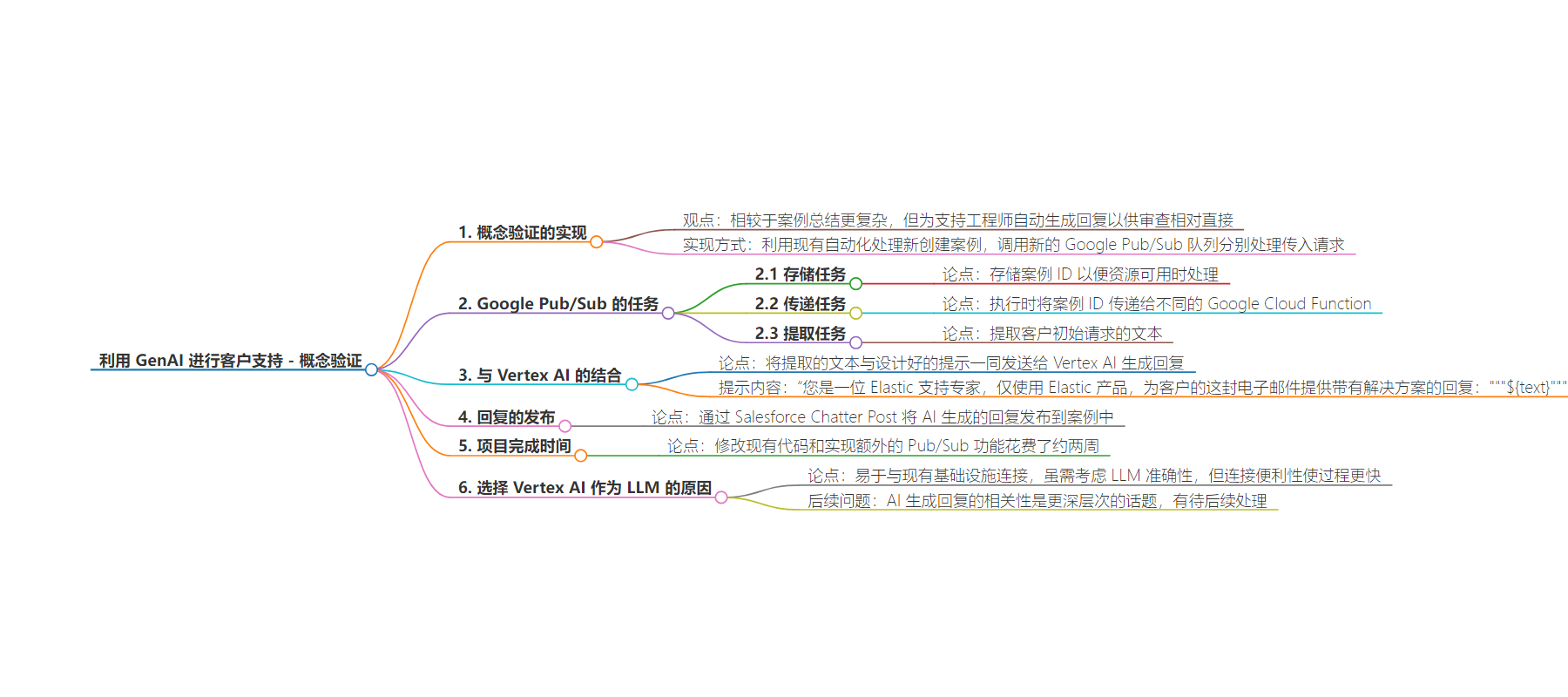

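2. This article describes building a GenAI proof of concept for customer support: requests are handled through an existing automation and a new Google Pub/Sub queue, Vertex AI generates a reply, and the reply is posted to the case. The work took about two weeks, and Vertex AI was chosen because it was easy to connect to the existing infrastructure.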

3.

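– Building a GenAI proof of concept for customer support

– Somewhat more complex than case summaries, but automating a reply for support engineers to review was relatively straightforward

– An existing automation handles newly created cases and calls a new Google Pub/Sub queue to process the requests separately

– The Case ID is stored in the queue until resources are available

– On execution, the Case ID is passed to a Cloud Function that extracts the text of the customer's initial request

– The text is sent to Vertex AI, combined with a prompt, to generate a reply

– The reply is posted to the case via a Salesforce Chatter Post

– Vertex AI was chosen as the LLM because it was easy to connect to the existing infrastructure; the work took about two weeks, and questions such as reply relevance were left to tackle next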

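Article URL: https://www.elastic.co/blog/genai-customer-support-building-proof-of-concept

Source: elastic.co

Author: Chris Blaisure

Published: 2024/8/9 2:27

Language: English

Total word count: 1981

Estimated reading time: 8 minutes

Score: 81

Tags: Generative AI, Customer Support, Elasticsearch, Google Cloud Platform, Salesforce

The original content follows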

While a little more complex than case summaries, automating a reply for our support engineers to review was relatively straightforward. We leveraged an existing automation for all newly created cases and called a new Google Pub/Sub queue to handle all the incoming requests separately. The Pub/Sub performed the following tasks:

1. It stored the Case ID in the queue for when resources were available.

2. On execution, it passed the Case ID to a different Google Cloud Function that would extract only the customer’s initial request as text.

3. The retrieved text would then be automatically sent to Vertex AI combined with the following engineered prompt:

You are an expert Elastic Support Engineer, using only Elastic products, provide a \

response with resolution to this email by a customer:

"""

${text}

"""

4. The AI-generated response was posted to the case via a Salesforce Chatter Post.
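
The four steps above can be sketched roughly as follows. This is a minimal illustration, not the code Elastic ran: the function names, the `{ caseId }` payload shape, and the three injected helpers (`fetchInitialRequest`, `generateReply`, `postToCase`) are all hypothetical stand-ins for the Cloud Function, the Vertex AI call, and the Salesforce Chatter Post. The only details taken as given are the prompt wording from the article and the fact that Pub/Sub delivers message data base64-encoded.

```javascript
// Sketch only: every function and field name here is hypothetical.

// Wraps the customer's initial request in the prompt quoted in the article.
// The trailing backslash is a line continuation, so the first two lines
// of the template join into a single sentence.
function buildPrompt(text) {
  return `You are an expert Elastic Support Engineer, using only Elastic products, provide a \
response with resolution to this email by a customer:
"""
${text}
"""`;
}

// Pub/Sub message data arrives base64-encoded. The JSON payload shape
// { caseId: "..." } is an assumption made for this sketch.
function decodeCaseId(message) {
  const json = Buffer.from(message.data, "base64").toString("utf8");
  return JSON.parse(json).caseId;
}

// Steps 2 to 4: extract the initial request, generate a draft, post it.
// `deps` holds the external calls so that they can be stubbed out.
async function draftReplyForCase(message, deps) {
  const caseId = decodeCaseId(message);
  const text = await deps.fetchInitialRequest(caseId);
  const reply = await deps.generateReply(buildPrompt(text));
  await deps.postToCase(caseId, reply);
  return reply;
}

// Usage with in-memory stubs in place of the real services.
const posted = [];
const stubs = {
  fetchInitialRequest: async (caseId) => `My cluster is red (case ${caseId})`,
  generateReply: async (prompt) => `Draft reply for a ${prompt.length}-character prompt`,
  postToCase: async (caseId, body) => {
    posted.push({ caseId, body });
  },
};
const sampleMessage = {
  data: Buffer.from(JSON.stringify({ caseId: "case-001" })).toString("base64"),
};
draftReplyForCase(sampleMessage, stubs).then((reply) => console.log(reply));
```

Passing the external calls in as `deps` keeps the flow testable without credentials; in a real deployment each stub would presumably be replaced by the corresponding service client.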

Again, this was a simple approach to capturing an initial draft reply, and it was scalable for the subset of cases we were looking at. Modifying our existing code and adding the Pub/Sub functionality took a few extra days, and the whole effort took us roughly two weeks to complete.

Using Vertex AI as our LLM for this proof of concept was an easy decision. We knew we would have plenty to think about related to LLM accuracy (see below), but the ease of connecting it with our existing infrastructure made this process much quicker. Much like search, the relevance of an AI-generated response is a deeper conversation and something we knew we would tackle next.