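Baoyue Reading Guide Summary

1. Keywords: LLM, chat interface, heterogeneous data sources, Azure, natural language

2. Summary: This article explores using large language models (LLMs) to build chat interfaces that draw on heterogeneous data sources. It introduces the author's related work and a proof of concept built with Azure OpenAI and other Azure capabilities, including examples and steps for interacting with CSV files and databases.

3. Main points:

– Building LLM chat interfaces poses challenges

– The author uses Azure OpenAI and other capabilities for integration and research

– The goal is to give architects and enthusiasts a foundation for exploring the potential of Azure AI

– Steps for interacting with a CSV file

  – Define the required variables, such as API keys

  – Upload the file and create a data frame

  – Create an LLM

  – Create an agent that uses the CSV and the LLM

  – Chat with the agent

– Steps for interacting with databases (such as SQL DB and Cosmos DB)

  – Load the connection variables

  – Create an Azure OpenAI client and a model response

  – Read SQL with pandas to get the query result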

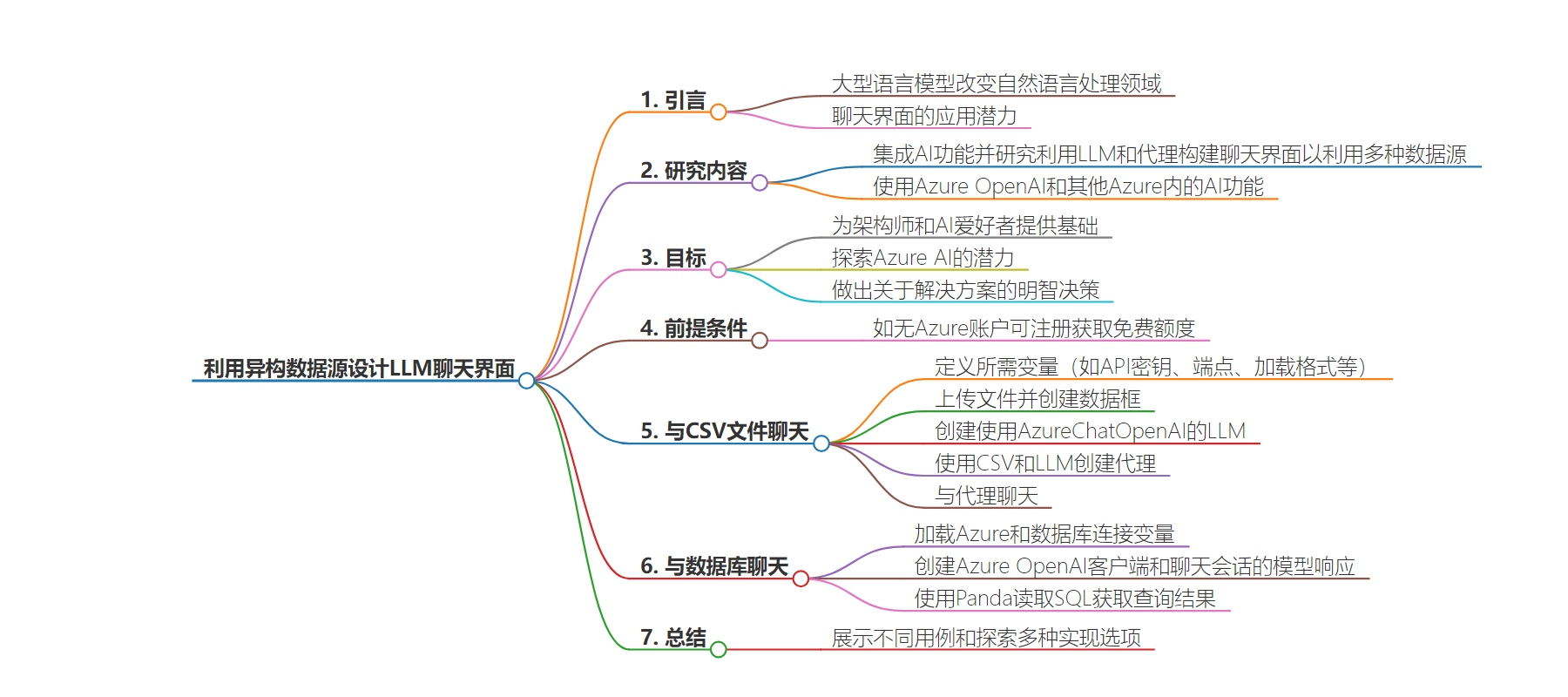

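Mind map:

Article URL: https://thenewstack.io/designing-llm-chat-interfaces-to-leverage-heterogeneous-data-sources/

Source: thenewstack.io

Author: Deepak Jayablalan

Published: 2024/7/12 20:08

Language: English

Total word count: 2571

Estimated reading time: 11 minutes

Score: 85

Tags: LLM chat interface design, Azure OpenAI, heterogeneous data sources, natural language processing, AI agents

The original article follows

This content was reposted on a user's recommendation to share knowledge and viewpoints. If it infringes your rights, please contact us to have it removed. Contact email: media@ilingban.com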

Large language models (LLMs) have changed the game for natural language processing in recent years, enabling developers to build sophisticated chat interfaces that converse much like humans. These interfaces have potential applications in customer service, virtual assistants, training and education, and entertainment platforms. However, building useful LLM chat interfaces is not without its complications and challenges.

I have been working on integrating AI functionality and researching how chat interfaces can be built to navigate and use diverse data sources with LLMs and agents. For this proof of concept (POC), I used Azure OpenAI and other AI capabilities within Azure. It demonstrates various use cases, design patterns, and implementation options.

The goal is to provide a foundation for architects and AI enthusiasts to explore the potential of Azure AI and make informed decisions about solution approaches. The use cases draw on diverse data sources such as SQL DB, Cosmos DB, CSV files, and combinations of multiple data sources. The project aims not only to showcase different use cases but also to explore various implementation options.

Pre-requisites:

If you don’t have an Azure account set up, you can set one up here with some free credits.

Chat With CSV:

Below is an example that shows how a natural language interface can be built on any CSV file using an LLM and agents. With the sample code, users can upload a preprocessed CSV file, ask questions about the data, and get answers from the AI model.

You can find the complete file for chat_with_CSV here.

Step 1: Define the required variables like API keys, API endpoints, loading formats, etc

I have used environment variables. You can have them in a config file or define them in the same file.

    import os

    import pandas as pd

    # Map each supported file extension to the pandas reader that loads it
    file_formats = {
        "csv": pd.read_csv,
        "xls": pd.read_excel,
        "xlsx": pd.read_excel,
        "xlsm": pd.read_excel,
        "xlsb": pd.read_excel,
    }

    # Azure OpenAI settings, read from environment variables
    aoai_endpoint = os.environ["AZURE_OPENAI_ENDPOINT"]
    aoai_api_key = os.environ["AZURE_OPENAI_API_KEY"]
    deployment_name = os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"]
    aoai_api_version = os.environ["AZURE_OPENAI_API_VERSION"]  # "2023-05-15"
    aoai_api_type = os.environ["AZURE_OPENAI_API_TYPE"]
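Reading each variable with `os.environ[...]` raises a bare `KeyError` at the first missing name. As a minimal sketch, assuming you would rather see every missing setting at once, a small helper can check them up front. The helper name `load_settings` and the `REQUIRED_VARS` tuple are illustrative and not part of the original sample.

```python
import os

# Variable names used in Step 1; adjust to match your own deployment.
REQUIRED_VARS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "AZURE_OPENAI_API_VERSION",
    "AZURE_OPENAI_API_TYPE",
)


def load_settings(env=None):
    """Return the required settings as a dict, or raise listing every missing name.

    `env` defaults to os.environ; passing a plain dict makes the helper easy to test.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}
```

The same function works unchanged if the values come from a config file that has been parsed into a dictionary.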

Step 2: Upload the file and create a dataframe

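    # st is Streamlit (import streamlit as st); clear_submit and load_data
    # are helpers defined elsewhere in the sample file
    uploaded_file = st.file_uploader(
        "Upload a Data file",
        type=list(file_formats.keys()),
        help="Supports CSV and Excel files!",
        on_change=clear_submit,
    )

    if uploaded_file:
        df = load_data(uploaded_file)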
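The `load_data` helper is not shown in this excerpt. A minimal sketch of what it might look like, assuming it picks a pandas reader from `file_formats` based on the file extension (the body below is an illustration, not the author's implementation):

```python
import io
import os

import pandas as pd

file_formats = {
    "csv": pd.read_csv,
    "xls": pd.read_excel,
    "xlsx": pd.read_excel,
    "xlsm": pd.read_excel,
    "xlsb": pd.read_excel,
}


def load_data(uploaded_file):
    """Load an uploaded file into a DataFrame using the reader for its extension.

    Assumes `uploaded_file` is a file-like object with a `.name` attribute,
    which is what Streamlit's uploader returns.
    """
    ext = os.path.splitext(uploaded_file.name)[1][1:].lower()
    reader = file_formats.get(ext)
    if reader is None:
        raise ValueError(f"Unsupported file format: {ext!r}")
    return reader(uploaded_file)


# Usage with an in-memory CSV standing in for an upload
sample = io.BytesIO(b"city,sales\nOslo,10\nLima,7\n")
sample.name = "sales.csv"
df = load_data(sample)
```

Dispatching through the dictionary keeps the list of accepted upload types and the list of readers in one place.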

Step 3: Create an LLM using AzureChatOpenAI

For this, we need to import AzureChatOpenAI from langchain_openai and use the parameters below.

    from langchain_openai import AzureChatOpenAI

    llm = AzureChatOpenAI(
        azure_endpoint=aoai_endpoint,
        openai_api_key=aoai_api_key,
        temperature=0,
        azure_deployment=deployment_name,
        openai_api_version=aoai_api_version,
        streaming=True,
    )

- azure_endpoint: The Azure endpoint, including the resource.

- openai_api_key: A unique identifier used to authenticate and control access to OpenAI’s APIs.

- openai_api_version: The service APIs are versioned using the api-version query parameter. All versions follow the YYYY-MM-DD date structure.

- streaming: A boolean, False by default, that indicates whether results are streamed.

- temperature: A parameter that controls the randomness of the output generated by the AI model. A lower temperature results in more predictable and conservative outputs. A higher temperature allows for more creativity and diversity in the responses. It’s a way to tune the balance between randomness and determinism in the model’s output.

- azure_deployment: A model deployment, set here from deployment_name. If given, the base client URL is set to include /deployments/{azure_deployment}. Note: this means you won’t be able to use non-deployment endpoints.

You can add more parameters if required; the details are in this link.
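To make the temperature parameter concrete: sampling temperature is commonly described as dividing the model's raw token scores (logits) by the temperature before applying a softmax. The sketch below uses made-up logits for three candidate tokens. It illustrates the general technique and is not Azure OpenAI's internal code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: all mass on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the highest-scoring token, while higher temperatures spread it across the alternatives.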

Step 4: Creating an agent using CSV and LLM

For this, we need to import create_pandas_dataframe_agent from langchain_experimental.agents and AgentType from langchain.agents.

    from langchain.agents import AgentType
    from langchain_experimental.agents import create_pandas_dataframe_agent

    pandas_df_agent = create_pandas_dataframe_agent(
        llm,
        df,
        verbose=True,
        agent_type=AgentType.OPENAI_FUNCTIONS,
        handle_parsing_errors=True,
        number_of_head_rows=df.shape[0],  # send all the rows to the LLM
    )

The pandas agent is a LangChain agent created with the create_pandas_dataframe_agent function, which takes the following inputs and parameters:

- A language model (LLM) as input.

- A pandas data frame (the CSV data) as input.

- verbose: If the agent is returning Python code, it can help to examine that code to understand what’s going wrong. Setting verbose=True when creating the agent should print out the generated Python code.

- agent_type: Initializes the agent with the OPENAI_FUNCTIONS agent type. This creates an agent that uses OpenAI function calling to communicate its decisions about what actions to take.

- handle_parsing_errors: Occasionally, the LLM cannot determine what step to take because its output is not formatted correctly for the output parser. By default, the agent raises an error in this case, but you can control this behavior with handle_parsing_errors.

- number_of_head_rows: The number of rows from the data frame to include in the prompt. It is set to df.shape[0] here so that all rows are sent to the LLM.

Step 5: Chatting with Agent

For this step, we need to import StreamlitCallbackHandler from langchain.callbacks.

When the run method is called on the pandas agent with the input message from the prompt and the callback arguments, it goes through a sequence of steps to generate an answer.

    from langchain.callbacks import StreamlitCallbackHandler

    with st.chat_message("assistant"):
        st_cb = StreamlitCallbackHandler(st.container(), expand_new_thoughts=False)
        response = pandas_df_agent.run(st.session_state.messages, callbacks=[st_cb])
        st.session_state.messages.append({"role": "assistant", "content": response})
        st.write(response)

    if __name__ == "__main__":
        main()

Initially, the agent identifies the task and selects the appropriate action to retrieve the required information from the data frame. After that, it observes the output, combines the observations, and generates the final answer.
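The cycle of choosing an action, observing the result, and producing a final answer can be sketched without any LLM at all. In the sketch below, `scripted_model` is a hypothetical stand-in that returns canned decisions, and the single tool sums a column of a tiny in-memory table. A real agent would ask the LLM for each decision and run generated pandas code instead.

```python
def column_sum(rows, column):
    """Tool: add up one numeric column of a list-of-dicts table."""
    return sum(row[column] for row in rows)


TOOLS = {"column_sum": column_sum}


def scripted_model(question, observations):
    """Hypothetical stand-in for the LLM: pick an action first, then answer."""
    if not observations:
        return {"action": "column_sum", "args": {"column": "sales"}}
    return {"final_answer": f"Total sales: {observations[-1]}"}


def run_agent(question, rows, model=scripted_model, max_steps=5):
    """Loop: ask the model for a step, run the tool, feed back the observation."""
    observations = []
    for _ in range(max_steps):
        decision = model(question, observations)
        if "final_answer" in decision:
            return decision["final_answer"]
        tool = TOOLS[decision["action"]]
        observations.append(tool(rows, **decision["args"]))
    raise RuntimeError("Agent did not finish within max_steps")


rows = [{"city": "Oslo", "sales": 10}, {"city": "Lima", "sales": 7}]
answer = run_agent("What are the total sales?", rows)
```

The `max_steps` cap is a design choice in this sketch so that a model that never produces a final answer cannot loop forever.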

Chat with Database:

The sample code below shows how a natural language interface can be built on structured data such as SQL DB and on NoSQL data such as Cosmos DB, using Azure OpenAI’s capabilities. It can serve as a SQL programmer’s assistant: the objective is to generate SQL code (SQL Server) that retrieves the answer to a natural language query.

You can find the entire file for chat_with_DB here.

Structured Data Like SQL DB:

Step 1: Load the Azure and Database connection variables

I have used environment variables; you can keep them in a config file or define them in the same file.

    import os

    from dotenv import load_dotenv

    # Load environment variables
    load_dotenv("credentials.env")

    # Azure OpenAI settings
    aoai_endpoint = os.environ["AZURE_OPENAI_ENDPOINT"]
    aoai_api_key = os.environ["AZURE_OPENAI_API_KEY"]
    deployment_name = os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"]
    aoai_api_version = os.environ["AZURE_OPENAI_API_VERSION"]
    aoai_api_version_For_COSMOS = "2023-08-01-preview"  # Cosmos DB uses a different API version
    aoai_embedding_deployment = os.environ["AZURE_EMBEDDING_MODEL"]

    # SQL Server connection settings
    sql_server_name = os.environ["SQL_SERVER_NAME"]
    sql_server_db = os.environ["SQL_SERVER_DATABASE"]
    sql_server_username = os.environ["SQL_SERVER_USERNAME"]
    sql_Server_pwd = os.environ["SQL_SERVER_PASSWORD"]
    SQL_ODBC_DRIVER_PATH = os.environ["SQL_ODBC_DRIVER_PATH"]

    # Cosmos DB (MongoDB API) connection settings
    COSMOS_MONGO_CONNECTIONSTRING = os.environ["COSMOS_MONGO_CONNECTIONSTRING"]
    COSMOS_MONGO_DBNAME = os.environ["COSMOS_MONGO_DBNAME"]
    COSMOS_MONGO_CONTAINER = os.environ["COSMOS_MONGO_CONTAINER"]
    COSMOS_MONGO_API = os.environ["COSMOS_MONGO_API"]
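The SQL Server values above are typically combined into a single ODBC connection string. The sketch below shows one common way to assemble it, as an assumption about how such a connection could be built rather than the author's code. The function name and the example values are hypothetical.

```python
def build_odbc_connection_string(driver, server, database, username, password):
    """Assemble an ODBC connection string from its parts.

    The driver and password are wrapped in braces so characters such as ';'
    are taken literally; a literal '}' inside a value is escaped by doubling it.
    """

    def quote(value):
        return "{" + value.replace("}", "}}") + "}"

    parts = [
        ("DRIVER", quote(driver)),
        ("SERVER", server),
        ("DATABASE", database),
        ("UID", username),
        ("PWD", quote(password)),
    ]
    return ";".join(f"{key}={value}" for key, value in parts)


# Hypothetical values for illustration only
conn_str = build_odbc_connection_string(
    driver="ODBC Driver 18 for SQL Server",
    server="example.database.windows.net",
    database="sales",
    username="reader",
    password="p;ss}word",
)
```

A string like this could then be handed to an ODBC client library such as pyodbc via `pyodbc.connect(conn_str)`.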

Step 2: Create an Azure OpenAI client and a model response for the chat conversation

For this, we need the Python library openai. Once it is installed, import openai and use your API secret key to run the following.

To create the client, we use AzureOpenAI from openai.

- api_version: A valid Azure OpenAI API version, such as the API version you selected when you imported the API.

- api_key: A unique identifier used to authenticate and control access to OpenAI’s APIs.

    import openai

    client = openai.AzureOpenAI(
        base_url=f"{aoai_endpoint}/openai/deployments/{deployment_name}/",
        api_key=aoai_api_key,
        api_version="2023-12-01-preview",
    )

    response = client.chat.completions.create(
        model=deployment_name,  # The deployment name you chose when you deployed the ChatGPT or GPT-4 model
        messages=messages,
        temperature=0,
        max_tokens=2000,
    )

Once the client is created, the Chat Completions API takes the user prompt and generates the SQL query for the natural language question.

- model: OpenAI uses the model keyword argument to specify which model to use. Azure OpenAI has the concept of unique model deployments, so when you use Azure OpenAI, model should refer to the deployment name you chose when you deployed the model. See the model endpoint compatibility table for details on which models work with the Chat API.

- max_tokens: The maximum number of tokens that can be generated in the chat completion. The total length of input tokens and generated tokens is limited by the model’s context length.

- temperature: The sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic. We generally recommend altering this or top_p but not both.

- messages: A list of messages comprising the conversation so far.

If you want, you can add more params based on the requirement.
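As a rough illustration of the `messages` parameter for SQL generation, the sketch below builds a system message that carries the table schema and a user message with the question, then strips an optional Markdown fence from the model's reply. The prompt wording, the schema text, and both helper names are assumptions for illustration, not the author's prompt.

```python
FENCE = "`" * 3  # Markdown code fence marker


def build_sql_messages(schema, question):
    """Return a chat `messages` list asking the model for a single SQL query."""
    system = (
        "You are a SQL Server assistant. Using only the tables below, "
        "reply with one SQL query and nothing else.\n\n" + schema
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def extract_sql(reply):
    """Remove a surrounding Markdown fence (with optional 'sql' tag) if present."""
    text = reply.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()
        # Drop the opening fence line and the closing fence line, if any
        lines = lines[1:-1] if lines[-1].strip() == FENCE else lines[1:]
        text = "\n".join(lines).strip()
    return text


messages = build_sql_messages(
    "Table orders(id INT, amount DECIMAL)",  # hypothetical schema
    "What is the total order amount?",
)
```

Putting the schema in the system message keeps it fixed across turns, while each new question is appended as a user message.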

Step 3: Use pandas read_sql to get the query result

pandas.read_sql(sql, con) reads a SQL query or database table into a data frame. Here it returns a pandas data frame containing the results of the query run.

    def run_sql_query(aoai_sqlquery):
        """
        Run the generated SQL query on SQL Server and retrieve the output.

        Input: AOAI completion (SQL query)
        Output: pandas DataFrame containing the results of the query run
        """
        conn = connect_sql_server()
        df = pd.read_sql(aoai_sqlquery, conn)
        return df
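`pandas.read_sql` also works with sqlite3 connections, so the pattern can be tried locally before pointing it at SQL Server. The sketch below swaps in an in-memory SQLite database as a stand-in, and passes the connection as an argument instead of calling `connect_sql_server`. The table, rows, and query are made up for illustration.

```python
import sqlite3

import pandas as pd


def run_sql_query(sql, conn):
    """Run a SQL query on the given connection and return a DataFrame."""
    return pd.read_sql(sql, conn)


# In-memory SQLite database standing in for SQL Server
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 7.5)])
conn.commit()

df = run_sql_query("SELECT SUM(amount) AS total FROM orders", conn)
```

Taking the connection as a parameter makes the function easy to exercise against a test database.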

NoSQL Like Cosmos DB:

Step 1: Create an Azure OpenAI client

To create the client, we use AzureOpenAI from openai.

- api_version: A valid Azure OpenAI API version, such as the API version you selected when you imported the API.

- api_key: A unique identifier used to authenticate and control access to OpenAI’s APIs.

    client = openai.AzureOpenAI(
        base_url=f"{aoai_endpoint}/openai/deployments/{deployment_name}/extensions/",
        api_key=aoai_api_key,
        api_version=aoai_api_version_For_COSMOS,
    )

Step 2: Create a model response for the chat conversation

Once the client is created, the Chat Completions API takes the user prompt and generates the query, along with the response, for the natural language question. Add further parameters as your requirements dictate.

Be sure to include the “extra_body” parameter when using Cosmos as the data source.

    # Inside the function that sends the chat request
    return client.chat.completions.create(
        model=deployment_name,
        messages=[{"role": m["role"], "content": m["content"]} for m in messages],
        stream=True,
        extra_body={
            "dataSources": [
                {
                    "type": "AzureCosmosDB",
                    "parameters": {
                        "connectionString": COSMOS_MONGO_CONNECTIONSTRING,
                        "indexName": index_name,
                        "containerName": COSMOS_MONGO_CONTAINER,
                        "databaseName": COSMOS_MONGO_DBNAME,
                        "fieldsMapping": {
                            "contentFieldsSeparator": "\n",
                            "contentFields": ["text"],
                            "filepathField": "id",
                            "titleField": "description",
                            "urlField": None,
                            "vectorFields": ["embedding"],
                        },
                        "inScope": "true",
                        "roleInformation": "You are an AI assistant that helps people find information from retrieved data",
                        "embeddingEndpoint": f"{aoai_endpoint}/openai/deployments/{aoai_embedding_deployment}/embeddings/",
                        "embeddingKey": aoai_api_key,
                        "strictness": 3,
                        "topNDocuments": 5,
                    },
                }
            ]
        },
    )
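Because the call sets `stream=True`, it returns an iterator of chunks rather than a single response. Below is a minimal sketch of assembling the text, assuming each chunk exposes `choices[0].delta.content` as in the OpenAI Python SDK's chat streaming responses. The preview extensions endpoint may shape its chunks differently, so treat the attribute path as an assumption. The `SimpleNamespace` objects are stand-ins so the example runs without a network call.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Concatenate the text deltas from a stream of chat completion chunks."""
    pieces = []
    for chunk in chunks:
        if not chunk.choices:  # some chunks carry no choices; skip them
            continue
        delta = chunk.choices[0].delta
        content = getattr(delta, "content", None)
        if content:
            pieces.append(content)
    return "".join(pieces)


def fake_chunk(text):
    """Stand-in for an SDK chunk object, for illustration only."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


stream = [
    SimpleNamespace(choices=[]),
    fake_chunk("Hello"),
    fake_chunk(None),
    fake_chunk(", world"),
]
text = collect_stream(stream)
```

In a chat UI, each piece would usually be written to the screen as it arrives instead of being joined at the end.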

Chat with Multiple Data Sources:

This POC shows various implementation patterns for building chat interfaces using Azure AI services and Orchestrators on multiple data sources.

You can find the entire file for chatting with multiple data sources here.

Step 1: Define the Azure and connection variables

    import os

    from dotenv import load_dotenv

    load_dotenv("credentials.env")

    # Azure OpenAI settings
    aoai_endpoint = os.environ["AZURE_OPENAI_ENDPOINT"]
    aoai_api_key = os.environ["AZURE_OPENAI_API_KEY"]
    deployment_name = os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"]
    aoai_api_version = os.environ["AZURE_OPENAI_API_VERSION"]

    # SQL Server connection settings
    sql_server_name = os.environ["SQL_SERVER_NAME"]
    sql_server_db = os.environ["SQL_SERVER_DATABASE"]
    sql_server_username = os.environ["SQL_SERVER_USERNAME"]
    sql_Server_pwd = os.environ["SQL_SERVER_PASSWORD"]

    # Blob storage settings
    blob_connection_string = os.environ["BLOB_CONNECTION_STRING"]
    blob_sas_token = os.environ["BLOB_SAS_TOKEN"]

    # Cosmos DB settings
    azure_cosmos_endpoint = os.environ["AZURE_COSMOSDB_ENDPOINT"]
    azure_cosmos_db = os.environ["AZURE_COSMOSDB_NAME"]
    azure_cosmos_container = os.environ["AZURE_COSMOSD_CONTAINER"]
    azure_cosmos_connection = os.environ["AZURE_COSMOSDB_CONNECTION_STRING"]

Step 2: Create an LLM using AzureChatOpenAI

For this, we need to import AzureChatOpenAI from langchain_openai and use the parameters below.

    from langchain_openai import AzureChatOpenAI

    # Set LLM (cb_manager is a callback manager defined elsewhere in the sample)
    llm = AzureChatOpenAI(
        azure_endpoint=aoai_endpoint,
        openai_api_key=aoai_api_key,
        azure_deployment=deployment_name,
        openai_api_version=aoai_api_version,
        streaming=False,
        temperature=0.5,
        max_tokens=1000,
        callback_manager=cb_manager,
    )

Step 3: Util Methods

DocSearchAgent, BingSearchAgent, SQLSearchAgent, and ChatGPTTool are all defined in the project's utils code.

    # Initialize our Tools/Experts
    text_indexes = [azure_search_covid_index]  # , "cogsrch-index-csv"]
    doc_search = DocSearchAgent(
        llm=llm,
        indexes=text_indexes,
        k=10,
        similarity_k=4,
        reranker_th=1,
        sas_token=blob_sas_token,
        name="docsearch",
        verbose=False,
    )  # callback_manager=cb_manager

    www_search = BingSearchAgent(llm=llm, k=5, verbose=False)
    sql_search = SQLSearchAgent(llm=llm, k=10, verbose=False)
    chatgpt_search = ChatGPTTool(llm=llm)  # callback_manager=cb_manager
    tools = [www_search, sql_search, doc_search, chatgpt_search]  # csv_search,

Step 4: Create an agent and execute it

For this, we need to import AgentExecutor and create_openai_tools_agent from langchain.agents.

Also, to run the agent, import RunnableWithMessageHistory from langchain_core.runnables.history.

    from langchain.agents import AgentExecutor, create_openai_tools_agent
    from langchain_core.runnables import ConfigurableFieldSpec
    from langchain_core.runnables.history import RunnableWithMessageHistory

    agent = create_openai_tools_agent(llm, tools, CUSTOM_CHATBOT_PROMPT)
    agent_executor = AgentExecutor(agent=agent, tools=tools)
    brain_agent_executor = RunnableWithMessageHistory(
        agent_executor,
        get_session_history,
        input_messages_key="question",
        history_messages_key="history",
        history_factory_config=[
            ConfigurableFieldSpec(
                id="user_id",
                annotation=str,
                name="User ID",
                description="Unique identifier for the user.",
                default="",
                is_shared=True,
            ),
            ConfigurableFieldSpec(
                id="session_id",
                annotation=str,
                name="Session ID",
                description="Unique identifier for the conversation.",
                default="",
                is_shared=True,
            ),
        ],
    )

create_openai_tools_agent creates an agent that uses OpenAI tools. Create an agent executor by passing in the agent and tools, then run the agent using RunnableWithMessageHistory. RunnableWithMessageHistory must always be called with a config that contains the appropriate parameters for the chat message history factory.

Step 5: Agent Executor is invoked with prompt and config

    response = brain_agent_executor.invoke({"question": prompt}, config=config)["output"]
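The `get_session_history` factory and the `config` passed to `invoke` are not shown in this excerpt. RunnableWithMessageHistory reads the values for the fields declared in `history_factory_config` from `config["configurable"]` and passes them to the factory. The sketch below shows one possible shape for both. `SimpleHistory` is a hypothetical stand-in for a LangChain chat message history class, and the IDs are made up.

```python
class SimpleHistory:
    """Stand-in for a chat message history: keeps messages in a list."""

    def __init__(self):
        self.messages = []

    def add_message(self, message):
        self.messages.append(message)


_store = {}  # maps (user_id, session_id) to that conversation's history


def get_session_history(user_id, session_id):
    """Return the history for this user and session, creating it on first use."""
    key = (user_id, session_id)
    if key not in _store:
        _store[key] = SimpleHistory()
    return _store[key]


# Values for the two ConfigurableFieldSpec ids go under "configurable"
config = {"configurable": {"user_id": "user-123", "session_id": "session-abc"}}

history = get_session_history(**config["configurable"])
history.add_message({"role": "user", "content": "Hello"})
```

Keying the store on both IDs keeps each user's conversations separate, so the same user can hold several independent sessions.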
