包阅导读总结

1. 关键词:Transformer 架构、音乐推荐、YouTube、用户行为、上下文

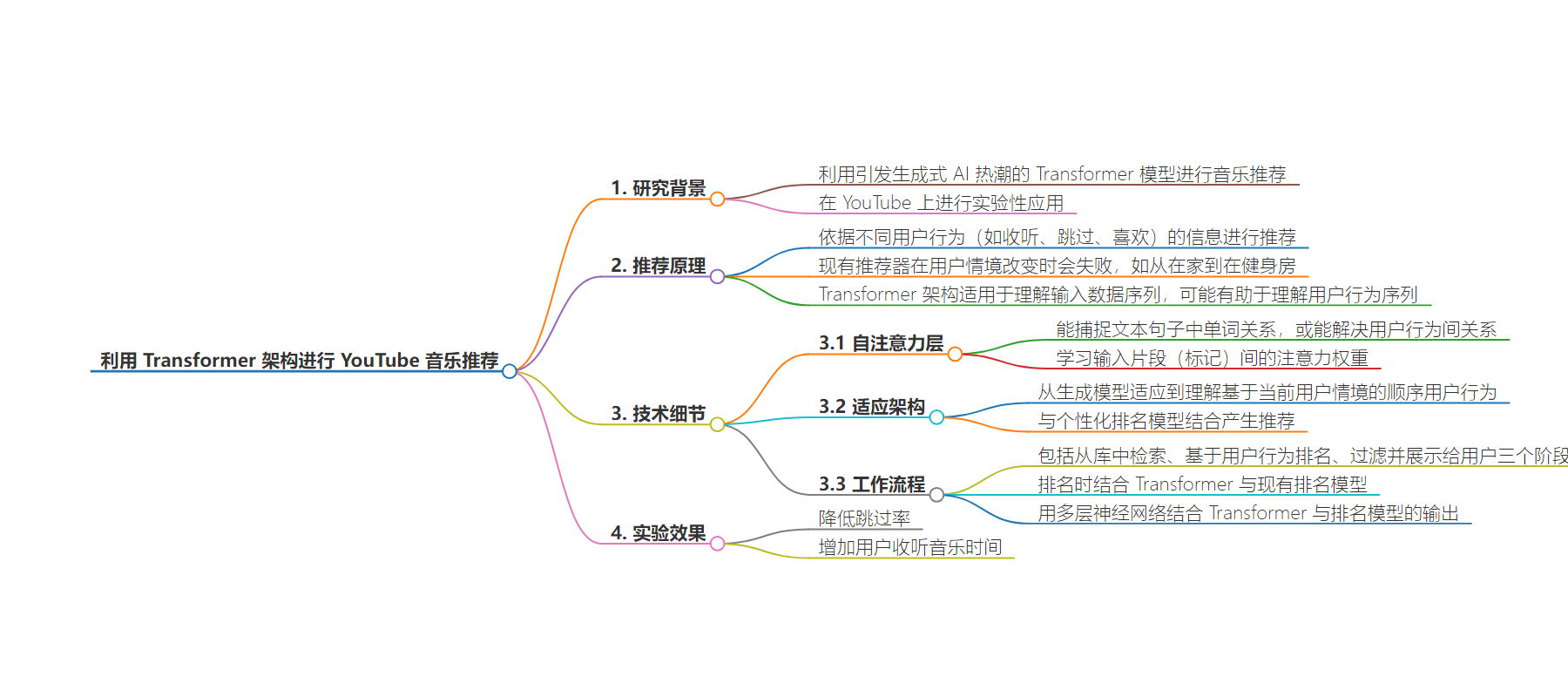

2. 总结:谷歌利用 Transformer 架构进行 YouTube 音乐推荐实验,旨在理解用户行为序列和上下文以更好预测偏好,能应对用户情境变化,初期实验显示推荐效果有所提升。

3. 主要内容:

– 谷歌将引发生成式 AI 热潮的 Transformer 模型用于音乐推荐,在 YouTube 上进行实验。

– 推荐器利用用户听、跳过、喜欢等行为信息来推荐。

– 现有推荐器在用户情境变化时会失败,如从在家听到在健身房听。

– Transformer 架构适合处理输入数据序列,能理解用户行为序列与上下文关系。

– 自注意力层可捕获文本单词关系,或能解决用户行为关系。

– 谷歌旨在基于当前用户情境,让 Transformer 架构理解用户行为序列,并与个性化排名模型结合推荐。

– 推荐系统包含从库中检索、基于用户行为排名、过滤展示给用户三个阶段,排名时结合 Transformer 和现有模型,初期实验效果良好。

思维导图:

文章来源:infoq.com

作者:Sergio De Simone

发布时间:2024/9/6 0:00

语言:英文

总字数:613字

预计阅读时间:3分钟

评分:90分

标签:音乐推荐,Transformer模型,YouTube,AI应用,用户上下文

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Google has described an approach to use transformer models, which ignited the current generative AI boom, for music recommendation. This approach, which is currently being applied experimentally on YouTube, aims to build a recommender that can understand sequences of user actions when listening to music to better predict user preferences based on their context.

A recommender leverages the information conveyed by different user actions, such as listening, skipping, or liking a piece, which is then used to make recommendations about items the user could be likely interested in.

A typical scenario where current music recommenders would fail, say Google researchers, is when a user’s context changes, e.g., from home listening to gym listening. This context change can produce a shift in their music preferences towards a different genre or rhythm, e.g., from relaxing to upbeat music. Trying to take such contextual changes into account makes the task of recommendation systems much harder, say Google researchers, since they need to understand user actions in the user’s current context.

This is where the transformer architecture may help, they believe, since it is especially suited to making sense of sequences of input data, as shown by NLP and more generally large language models (LLMs). Google researchers are confident that the transformer architecture may show the same ability to make sense of sequences of user actions as they do of language based on the user’s context.

The self-attention layers capture the relationship between words of text in a sentence, which suggests that they might be able to resolve the relationship between user actions as well. The attention layers in transformers learn attention weights between the pieces of input (tokens), which are akin to word relationships in the input sentence.

Google researchers aim at adapting the transformer architecture from generative models to understanding sequential user actions based on the current user context. This understanding is then blended with personalized ranking models to produce a recommendation. To explain how user actions may have different meaning depending on the context, the researchers depict a user listening to music at the gym who might prefer more upbeat music. They would normally skip that kind of music when at home, so this action should get a lower attention weight when at the gym. In other words, the recommender applies different attention weights in the user context versus the global user’s listening history.

We still utilize their previous music listening, while recommending upbeat music that is close to their usual music listening. In effect, we are learning which previous actions are relevant in the current task of ranking music, and which actions are irrelevant.

As a short summary of how it works, Google’s transformer-based recommender follows the typical structure of recommendation system and is comprised of three different phases: retrieving items from a corpus or library, ranking them based on user actions, and filtering them to show a reduced selection to the user. While ranking items, the system combines a transformer with an existing ranking model. Each track is associated to a vector called track embedding which is used both for the transformer and the model. Signals associated to user actions and track metadata are projected on to a vector of the same length, so they can be manipulated just like track embeddings. For example, when providing inputs to the transformer the user-action embedding and the music-track embedding are simply added together to generate a token. Finally, the output of the transformer is combined with that of the ranking model using a multi-layer neural network.

According to Google’s researchers, initial experiments show an improvement of the recommender, measured as a reduction in skip-rate and an increase in time users spend listening to music.