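Baoyue Reading Guide: Summary

1. Keywords: AI deployment, business value, technical risk, data quality, continuous evaluation

2. Summary: This article stresses the importance of ensuring that AI delivers business value throughout the adoption cycle. It calls for setting clear objectives, safeguarding data quality, selecting suitable algorithms, emphasizing human-AI collaboration and ethical considerations, and monitoring and evaluating continuously in order to deploy AI successfully.

3. Main points: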

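– AI deployment and business value:

– Speed matters, but ensuring that AI investments deliver the expected business value without adding risk matters more.

– Quantifying the business value of IT investments is difficult, but not impossible.

– Goal setting and monitoring:

– Define clear objectives and outcomes for the AI implementation, starting with a small-scale, core use case.

– Set realistic metrics, monitor continuously, and select reliable IT partners.

– Data quality and learning:

– Ensure high-quality, diverse data for training and optimization, and avoid leaking sensitive data.

– Regularly update and refine AI systems to adapt to change.

– Algorithm selection and models:

– Select appropriate algorithms and models for the business use case.

– Human-AI collaboration and ethics:

– Combine human expertise with AI capabilities to support decision-making, and avoid full autonomy.

– Address bias, privacy, and transparency issues in AI development and deployment.

– Monitoring and evaluation:

– Regularly assess AI performance and impact to ensure the expected outcomes are achieved.

– The market offers many AI solutions; evaluate technology and security when choosing among them.

– Keys to successful implementation:

– AI is not a set-it-and-forget-it tool; it requires ongoing management and optimization.

– Regular evaluation helps improve and replicate what works.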

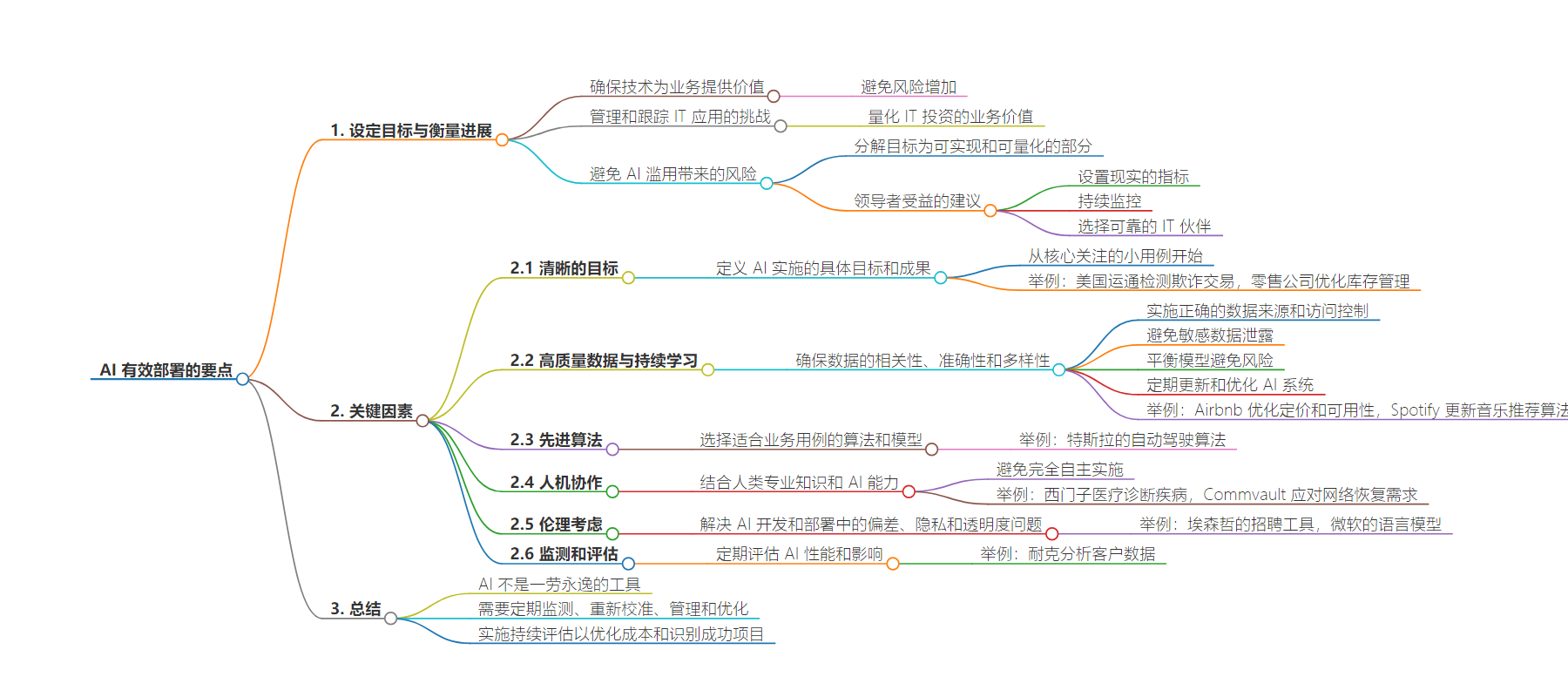

Mind map:

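Article URL: https://thenewstack.io/set-goals-and-measure-progress-for-effective-ai-deployment/

Source: thenewstack.io

Author: Vidya Shankaran

Published: 2024/7/1 20:50

Language: English

Total word count: 1533

Estimated reading time: 7 minutes

Score: 86

Tags: AI deployment, business value, data quality, continuous learning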

The original article follows


One of the most essential parts of the AI adoption cycle is often the most overlooked: ensuring the technology provides value to the business.

The current AI frenzy is turning even the most risk-averse companies into digital vanguards set on taking advantage of powerful new applications as quickly as possible. While speed is undoubtedly critical, so is ensuring that AI investments deliver the intended business value without compounding the company’s reputational, financial, or security risk exposure.

As the number of IT priorities continues to grow, managing and tracking the various custom and SaaS applications has become increasingly challenging. Though accurately quantifying the business value of IT investments may seem difficult, it is not impossible. Like any technology adoption, embracing AI requires thorough evaluation and due diligence to ensure successful implementation.

The history of human evolution shows that any tool has the potential to be turned into a weapon; it just depends on who uses it and why. This is even more true today, when cyberthreats are increasingly created by malicious actors wielding their own forms of AI. Without sufficient oversight in AI adoption, this inherent risk of misuse can compound an organization’s risk exposure even further. While measuring the business outcome might seem overwhelming, it helps to break each goal down into achievable and quantifiable bytes (pun intended).

While hashing out those goals, leaders would also benefit from the following recommendations: setting realistic metrics, continuous monitoring, and selecting reliable IT partners who can best help them meet those targets.

Key Factor 1: Clear objectives: Define specific goals and outcomes for AI implementation. Identifying what the business intends to achieve with AI is the first critical step. It helps to start small with a suitable use case that is a core concern for the organization. Success can then be measured in tangible outcomes, which makes it easier to scale the implementation to broader use cases across the organization.

For example, as stated in this article, one of American Express’s critical business objectives was to detect fraudulent transactions using AI, aiming to reduce financial losses by 30%.

Similarly, a retail company could use AI to optimize inventory management, aiming to reduce stockouts and overstocking by 20%. These provide a starting point to measure the “what,” with key decisions as a takeaway to drive desired business outcomes.
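A percentage target like these is only measurable if a baseline is recorded before the AI system goes live. The following is a minimal sketch of that arithmetic, not a method described in the article; the function names and the stockout figures are hypothetical and chosen purely for illustration.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Return the percentage drop from a pre-deployment baseline to the current value."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100.0


def meets_target(baseline: float, current: float, target_pct: float) -> bool:
    """True when the observed reduction reaches the target percentage."""
    return percent_reduction(baseline, current) >= target_pct


# Hypothetical figures: 250 stockout events per quarter before, 190 after.
reduction = percent_reduction(250, 190)
print(f"Reduction: {reduction:.1f}%")
print("20% target met:", meets_target(250, 190, target_pct=20.0))
```

Recording the baseline and the target as explicit inputs keeps the goal auditable: the same check can be rerun each quarter as new figures arrive.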

Key Factor 2: Need for high-quality data and continuous learning: Ensure access to relevant, accurate, and diverse data for training and optimization. An AI deployment is only as good as the quality of the data used to train its models. This is where it becomes critical for organizations to use the correct data sources and to implement the necessary access controls and guardrails. Further, organizations should confirm that sensitive data is not being fed into the data pipelines; this will help avoid sensitive data leakage incidents.

This facet is critical and a formidable goal to achieve, as organizations must now find the right balance to prevent their large language models (LLMs) from becoming echo chambers while safeguarding against risks of LLM poisoning, sensitive data leakage, prompt injection attacks, and potential AI hallucinations.

These risks have also given rise to various solutions that provide data firewalls for AI LLMs. The broad spectrum of data firewall solutions now enables IT leaders to make selections based on the best fit for their use cases.
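To illustrate the earlier point about keeping sensitive data out of data pipelines, here is a deliberately simplified sketch of a redaction step that runs before text reaches a training or prompt pipeline. It is an assumption-laden illustration, not how any commercial data firewall works: the `PATTERNS` table and the `redact` function are hypothetical, and the two regular expressions cover only obvious email addresses and card-like digit runs.

```python
import re

# Illustrative patterns only; a real deployment would need a vetted, much broader set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> tuple[str, int]:
    """Replace each match with a [LABEL] placeholder and count the replacements."""
    total = 0
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        total += count
    return text, total


clean, hits = redact("Contact jane.doe@example.com, card 4111 1111 1111 1111.")
print(clean)
print("redactions:", hits)
```

Returning the redaction count alongside the cleaned text makes it possible to log how often sensitive values appear in a given source, which is itself a useful data-quality signal.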

We thought it would be interesting to see what ChatGPT 4.0 thought the most appropriate definition of AI LLM poisoning was, and the following is its response:

AI LLM poisoning refers to the malicious manipulation of LLM training data to intentionally introduce biases, errors, or harmful behavior into the model’s output. This can occur through various means, including:

Data poisoning: Tampering with the training data to manipulate the model’s performance or introduce specific biases.

Adversarial examples: Crafting input data designed to cause the model to produce an incorrect or misleading output.

Model inversion attacks: Exploiting the model’s relationships to extract sensitive information from the training data.

One example of a business that realizes value by continuously improving the quality of data fed into its LLM is Airbnb. The company leverages user data to optimize pricing and availability, increasing host revenue by 15%. The more accurate the user data, the more reliable and predictable revenues it can drive for platform users and subscribers.

It is critical to regularly update and refine AI systems to adapt to changing environments. This is achieved by ensuring that the data feeding into the LLMs is kept up-to-date and relevant; in some cases, even live data is used.

Interestingly, Spotify continuously updates its music recommendation algorithms to adapt to changing user preferences and listening habits. Similarly, streaming platforms continuously update their recommendation algorithms to adapt to ever-changing viewer preferences and purchasing behavior. These platforms leverage user data to create personalized recommendations, drive viewership based on viewers’ interests, and thereby increase user engagement.

Key Factor 3: Advanced algorithms: Select appropriate algorithms and models for the business use case being considered. AI does not conform to “one size fits all.” Each use case requires relevant algorithms and models to be engaged.

For example, Tesla uses advanced computer vision models in its autonomous driving system to help improve safety and driver assistance. This is unique to Tesla’s use case and very different from the previous examples of AI’s benefits for other businesses.

Key Factor 4: Human-AI collaboration: Combining human expertise and AI capabilities to augment decision-making is an essential tenet in responsible AI principles. The current age of AI adoption should be considered a “coming together of humans and technology.” Humans will continue to be the custodians and stewards of data, which ties into Key Factor 2 about the need for high-quality data, as humans can help curate the relevant data sets to train an LLM.

This is critical, and the “human-in-the-loop” facet should be embedded in all AI implementations to avoid completely autonomous implementations. Apart from data curation, this allows humans to take more meaningful actions when equipped with relevant insights, thus achieving better business outcomes.
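One common way to embed a human in the loop is to escalate low-confidence model outputs to a person before any action is taken, while still sampling the confident ones for spot checks. The sketch below assumes a model that reports a confidence score between 0 and 1; the `Prediction` class, the `route` function, the 0.9 threshold, and the queue names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's self-reported score in [0, 1]


def route(pred: Prediction, threshold: float = 0.9) -> str:
    """Send uncertain predictions to a person; confident ones are only spot-checked."""
    return "spot_check" if pred.confidence >= threshold else "human_review"


preds = [Prediction("tx-1", "fraud", 0.97), Prediction("tx-2", "fraud", 0.62)]
queues = {"spot_check": [], "human_review": []}
for p in preds:
    queues[route(p)].append(p.item_id)
print(queues)
```

The threshold is a business decision rather than a technical constant: lowering it reduces reviewer workload, while raising it puts more decisions in front of a person.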

It would be worthwhile to note that Siemens Healthineers combines AI with human expertise to diagnose diseases more accurately and efficiently.

The same philosophy holds for cyber resiliency with Commvault. Our AI/ML engines help customers make more informed decisions when responding to cyber recovery requirements. The richness of context from Commvault’s anomaly detection capabilities is further enhanced by humans who can triage an event effectively and respond appropriately.

Key Factor 5: Ethical considerations: Addressing bias, privacy, and transparency in AI development and deployment is a pivotal metric in measuring its success. As with any technology, laying out guardrails and rules of engagement is core to this factor.

Enterprises such as Accenture implement measures to detect and prevent bias in their AI recruitment tools, helping to ensure fair hiring practices. Similarly, Microsoft implements measures to detect and mitigate bias in its AI language models to ensure fairness and inclusivity.

Key Factor 6: Monitoring and evaluation: Regularly assessing AI performance and impact to ensure desired outcomes helps an organization stay on track with its mission toward successful AI implementation. This is a slippery slope, as there is a general propensity to use qualitative assumptions that cannot be tied back to prescriptive quantifiable metrics.

Most organizations deploy AI-based cyber defense tools and expect never to have another security breach. Given the cybercrime realities of today’s world, that is quixotic at best.

However, companies like Nike have used AI to analyze customer data and measure the effectiveness of their personalized marketing campaigns, resulting in a 20% increase in sales — a tangible metric.

The remarkable advancement in AI is spawning a whole new generation of solutions competing for corporate buyers’ attention. The sudden and steady growth of AI solutions in the market, with AI-washed products thrown into the mix, makes it imperative for organizations to invest cycles in assessing each technology and its fit.

Further, with security becoming a core concern across all enterprises, knowing your supply-chain players in AI/ML solutions and their commitment to security also becomes a key selection criterion. Like the supply-chain ramifications of cybersecurity, choosing the right technology partners is paramount.

While AI is a powerful technology that promises to reinvent processes within every business, it’s not a set-it-and-forget-it tool. The technology requires regular monitoring, recalibration, curation, and management. Leaders would benefit from being willing to make course corrections in their IT journey. Regular mid-point evaluations help identify areas of improvement early on or templatize aspects that are working well for scaling success.

Implementing a continual evaluation approach facilitates long-term optimization of the Total Cost of Operations and Ownership and enables the identification of the most influential and viable projects. Such recognition aids in developing standardized protocols that can be replicated and scaled in other business areas.
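The regular monitoring and recalibration described above can be tied to a quantifiable trigger. As a rough sketch under stated assumptions, the check below compares the recent average of a tracked metric against the level recorded at launch; the function name, the weekly precision figures, the four-week window, and the 0.05 tolerance are hypothetical values to be replaced with an organization's own.

```python
from statistics import mean


def needs_recalibration(history: list[float], baseline: float,
                        window: int = 4, tolerance: float = 0.05) -> bool:
    """Flag when the mean of the last `window` scores is more than `tolerance` below baseline."""
    if len(history) < window:
        return False  # not enough observations to judge yet
    recent = mean(history[-window:])
    return recent < baseline - tolerance


# Hypothetical weekly precision scores for a deployed model.
weekly_precision = [0.91, 0.90, 0.88, 0.84, 0.83, 0.82]
print("Recalibrate:", needs_recalibration(weekly_precision, baseline=0.90))
```

Averaging over a window rather than reacting to a single reading avoids triggering a costly retraining cycle on one noisy week.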

A successful AI implementation requires a multidisciplinary approach, ongoing refinement, and a commitment to responsible AI practices. These factors are foundational for evolving into an AI-centric business.
