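Reading Guide Summary

1.

Keywords: Apple Foundation Models, Apple Intelligence, Language Models, Adapter Architecture, Safe Output

2.

Summary: Apple has published details of its new Apple Foundation Models (AFM), a family of large language models that power Apple Intelligence and come in two sizes. The article covers their development, performance evaluation, and safety measures, along with their results on various tasks and reactions from users.

3.

Main points: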

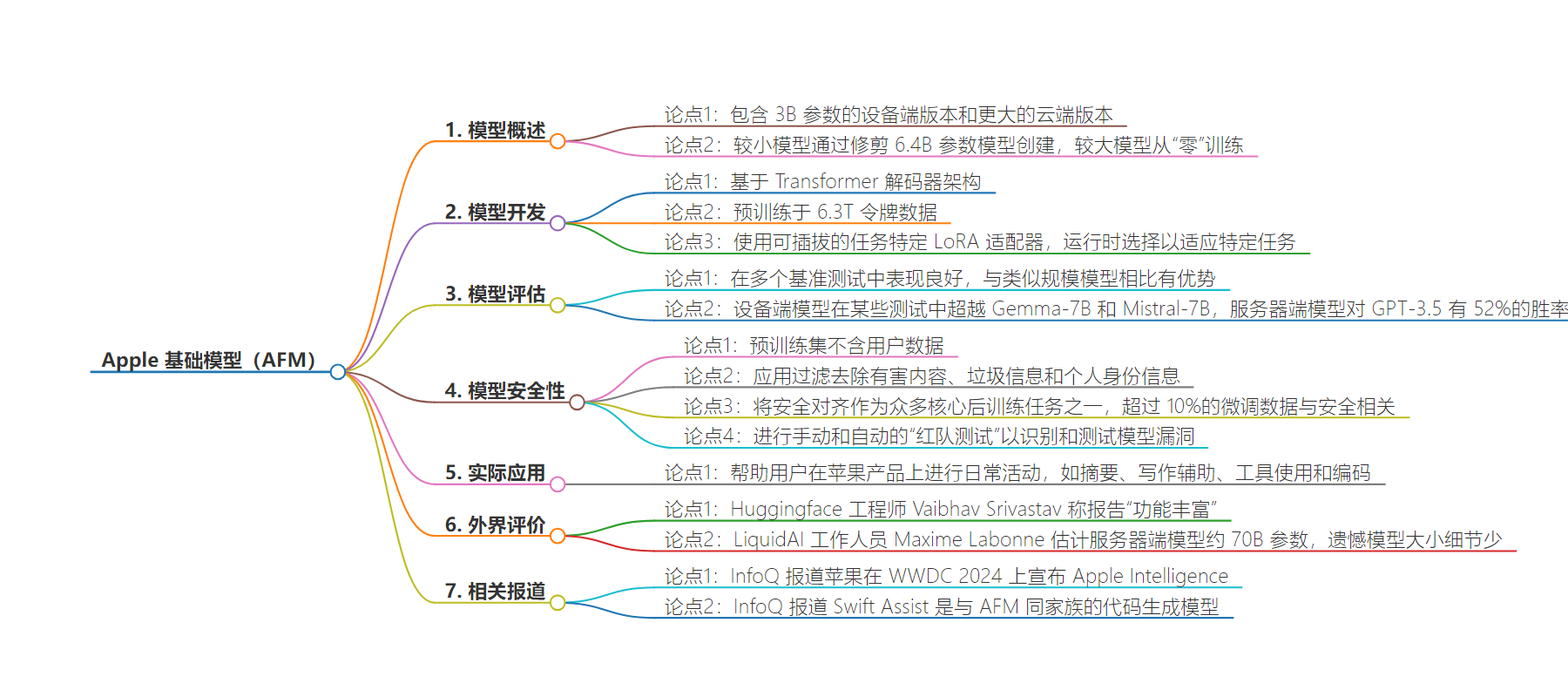

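– Apple Foundation Models release:

– Includes a 3B parameter on-device version and a larger cloud-based version.

– The smaller model was created by pruning; the larger model was trained from scratch.

– Model characteristics:

– Based on the Transformer decoder-only architecture and pre-trained on 6.3T tokens of data.

– Uses pluggable, task-specific LoRA adapters.

– The adapter architecture lets the model be modified on the fly for specific tasks.

– Performance and evaluation:

– Performed well on several benchmarks and compared favorably with similar-sized models.

– In side-by-side human evaluations, the on-device model outperformed some larger models, and the cloud version achieved competitive results.

– Safety measures:

– No user data was included in the pre-training set, and harmful content was filtered out.

– Safety was emphasized in the fine-tuning stage, with both manual and automated testing.

– User reactions:

– One commenter called the report feature-packed; another lamented the lack of detail on the size of the cloud model.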


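Source: infoq.com

Author: Anthony Alford

Published: 2024/8/27 0:00

Language: English

Word count: 542

Estimated reading time: 3 minutes

Score: 91

Tags: Apple Foundation Models, large language models, AI in consumer technology, model pruning, task-specific adapters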

The original article follows.


Apple published the details of their new Apple Foundation Models (AFM), a family of large language models (LLM) that power several features in their Apple Intelligence suite. AFM comes in two sizes: a 3B parameter on-device version and a larger cloud-based version.

The smaller model, AFM-on-device, was created by pruning a 6.4B parameter model; the larger model, known as AFM-server, was trained “from scratch,” but Apple did not disclose its size. Apple did release details of both models’ development: both are based on the Transformer decoder-only architecture and were pre-trained on 6.3T tokens of data. The models use pluggable task-specific LoRA adapters that are chosen at runtime to tailor model performance for specific tasks, such as proofreading or replying to email. Apple evaluated both models on several benchmarks, including instruction-following and mathematical reasoning, and found that they “compared favorably” with, and in some cases outperformed, similar-sized models such as Llama 3 or GPT-4. According to Apple:

“Our models have been created with the purpose of helping users do everyday activities across their Apple products, and developed responsibly at every stage and guided by Apple’s core values. We look forward to sharing more information soon on our broader family of generative models, including language, diffusion, and coding models.”

InfoQ recently covered Apple’s announcement of Apple Intelligence at their WWDC 2024 event. InfoQ also covered Swift Assist, a code generation model integrated with Xcode, which Apple describes as being part of the same family of generative AI models as AFM.

The adapter architecture allows AFM to be modified “on-the-fly” for specific tasks. The adapters are “small neural network modules” that plug into the self-attention and feed-forward layers of the base model. They are created by fine-tuning the base model with task-specific datasets. The adapter parameters are quantized to low bit-rates to save memory; the on-device adapters consume on the order of 10 MB, making them suitable for small embedded devices.
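To make the adapter idea concrete, here is a minimal pure-Python sketch of a LoRA-style adapter attached to a single frozen linear layer and selected by task name at call time. It is not Apple's implementation: the class names (`LoRAAdapter`, `AdaptedLinear`), the task name, the `alpha / rank` scaling, and all shapes and numbers are assumptions chosen for illustration, and a real model would attach such adapters to many layers. The `adapter_size_bytes` helper only shows the arithmetic behind why a low-rank, low-bit adapter is small.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


@dataclass
class LoRAAdapter:
    """Low-rank update B @ A added on top of a frozen weight matrix."""
    a: Matrix     # shape (rank, d_in): projects the input down to `rank` dims
    b: Matrix     # shape (d_out, rank): projects back up to the output size
    alpha: float  # scaling numerator (hypothetical hyperparameter)

    @property
    def rank(self) -> int:
        return len(self.a)

    def delta(self, x: Vector) -> Vector:
        """Return (alpha / rank) * B @ (A @ x)."""
        scale = self.alpha / self.rank
        return [scale * v for v in matvec(self.b, matvec(self.a, x))]


class AdaptedLinear:
    """A frozen linear layer whose adapter is picked per call by task name."""

    def __init__(self, weight: Matrix) -> None:
        self.weight = weight  # base weights are never modified
        self.adapters: Dict[str, LoRAAdapter] = {}

    def register(self, task: str, adapter: LoRAAdapter) -> None:
        self.adapters[task] = adapter

    def forward(self, x: Vector, task: Optional[str] = None) -> Vector:
        y = matvec(self.weight, x)
        if task is None:
            return y  # plain base-model behaviour
        d = self.adapters[task].delta(x)
        return [yi + di for yi, di in zip(y, d)]


def adapter_size_bytes(d_in: int, d_out: int, rank: int, bits_per_param: int) -> int:
    """Storage for one adapter's A and B matrices at a given bit width."""
    params = rank * (d_in + d_out)
    return (params * bits_per_param + 7) // 8  # round up to whole bytes


# Toy usage with made-up numbers.
layer = AdaptedLinear([[1.0, 0.0], [0.0, 1.0]])
layer.register("proofread", LoRAAdapter(a=[[1.0, 1.0]], b=[[1.0], [0.0]], alpha=2.0))
print(layer.forward([1.0, 2.0]))                    # base layer only
print(layer.forward([1.0, 2.0], task="proofread"))  # base layer plus adapter
print(adapter_size_bytes(2048, 2048, 16, 4))        # bytes for one hypothetical layer
```

Because the base weights stay untouched, switching tasks only changes which small pair of matrices is added in, which is what makes swapping adapters at runtime cheap.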

Apple took several steps to ensure AFM produced safe output. In addition to ensuring that no user data was included in their pre-training set, Apple applied filtering to remove harmful content, spam, and PII. In the fine-tuning stage, Apple treated “safety alignment as one of the many core post-training tasks” and more than 10% of the fine-tuning data was safety-related. They also performed manual and automated “red-teaming” to identify and test model vulnerabilities.

Apple evaluated AFM’s performance on a variety of benchmarks and compared the results to several baseline models, including GPT-4, Llama 3, and Phi-3. In tests where human judges ranked the outputs of two models side-by-side, AFM-on-device outperformed larger models Gemma-7B and Mistral-7B. AFM-server achieved “competitive” results, with a win-rate of 52% against GPT-3.5.
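As a rough illustration of how a win-rate can be derived from side-by-side human judgments, the sketch below tallies per-comparison verdicts. The label names, the `ties_count_half` option, and the sample data are all assumptions; the article does not say how Apple treated ties, so both conventions are shown.

```python
from collections import Counter
from typing import Iterable

VALID_LABELS = {"win", "loss", "tie"}


def win_rate(judgments: Iterable[str], ties_count_half: bool = False) -> float:
    """Fraction of side-by-side comparisons won by the model under test.

    Each judgment is "win", "loss", or "tie" from that model's point of view.
    With ties_count_half=True, a tie contributes half a win.
    """
    counts = Counter(judgments)
    unknown = set(counts) - VALID_LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments given")
    score = counts["win"] + (0.5 * counts["tie"] if ties_count_half else 0.0)
    return score / total


# Made-up verdicts, not Apple's data.
sample = ["win"] * 6 + ["loss"] * 3 + ["tie"]
print(f"{win_rate(sample):.0%}")
print(f"{win_rate(sample, ties_count_half=True):.0%}")
```

The two conventions can differ noticeably when ties are common, so a reported win-rate is easiest to interpret alongside the tie and loss rates.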

Ruoming Pang, the lead author of Apple’s technical report on AFM, posted on X:

“While these LMs are not chatbots, we trained them to have general purpose capabilities so that they can power a wide range of features including summarization, writing assistance, tool-use, and coding.”

Several other users posted their thoughts about AFM on X. Hugging Face engineer Vaibhav Srivastav summarized the report, calling it “quite feature packed” and saying he “quite enjoyed skimming through it.” Liquid AI Staff ML Scientist Maxime Labonne estimated that AFM-server might have ~70B parameters, but lamented that the paper had “almost no details” on this model’s size.