包阅导读总结

1. 关键词:AI、软件开发、管道编排、数据管理、事件驱动架构

2. 总结:本文探讨了 AI 在软件开发中的变革,强调了在融入 AI 时软件基础开发原则的重要性,重点阐述了数据管理、事件驱动架构、治理、LLM 输出控制、人机交互和编排等关键要素,指出编排对创建实用可靠的 AI 系统至关重要。

3. 主要内容:

– AI 与软件开发:AI 带来软件开发生态巨变,开发者角色转变,融入 AI 成为需求。

– 关键概念:

– 定义了 LLM、RAG、幻觉等相关术语。

– 基础要素:

– 数据是核心,要整合、管理不同类型数据,与现有系统集成。

– 向量数据库、数据端点暴露很重要。

– 事件驱动架构:AI 时代 API 端点通信规模剧增,事件驱动架构能应对,为 AI 代理提供通信网络。

– 治理和 API 管理:API 管理重要,控制数据访问,确保安全与盈利。

– 验证和控制 LLM 输出:LLM 可能产生错误信息,可控制数据和提示输入及输出处理。

– 人机交互和持续学习:人类元素关键,创建端点让人类验证,助系统学习改进。

– 编排的关键作用:包括集成、自动化、协调、监控优化,是有效可靠运行的关键。

– 结论:强调编排原则重要,开发者掌握编排是发挥 AI 潜力关键。

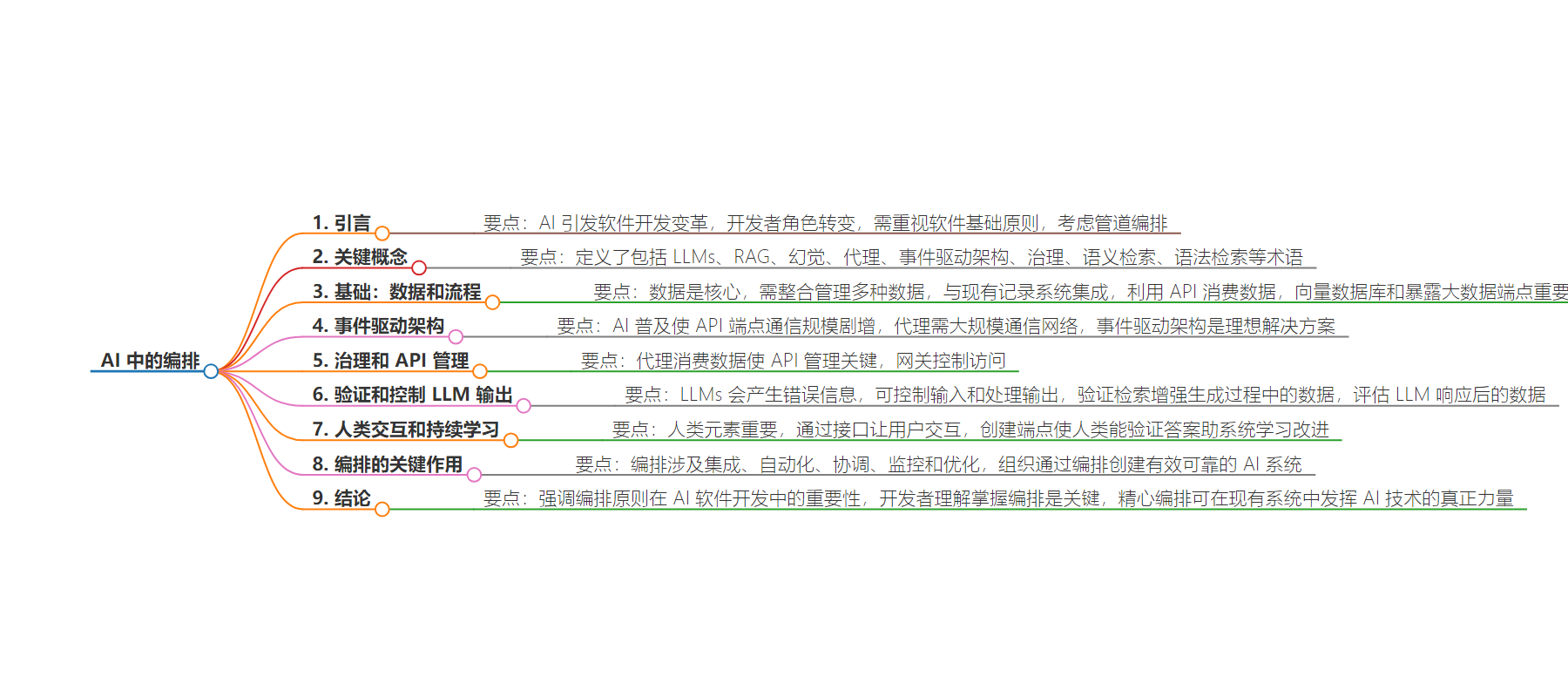

思维导图:

文章地址:https://thenewstack.io/yes-orchestration-is-for-ai-too/

文章来源:thenewstack.io

作者:Michael Bachman

发布时间:2024/7/2 13:24

语言:英文

总字数:1199字

预计阅读时间:5分钟

评分:81分

标签:人工智能,编排,大型语言模型,检索增强生成,代理

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

AI is creating a seismic shift in software development. Yet, as AI revolutionizes our approach, fundamental software development principles are more important than ever. The role of the developer is transforming, driven by market demands, executive mandates and requests from business operators to incorporate AI into software systems.

Among the many facets of AI and the software development lifecycle (SDLC) we could explore — such as security, AI-assisted programming (copilots), AIOps and model instruction tuning — there is another critical element to factor in: pipeline orchestration. To leverage large language models (LLMs) efficiently, effectively and practically, we must develop a comprehensive understanding of data management, processes and prompting techniques.

While this article isn’t exhaustive, it aims to provide a solid foundation for developing practical, AI-infused applications for both front- and back-office use cases.

Key Concepts

Before diving deeper, let’s define some essential terms:

- LLMs (large language models): Advanced AI models trained on vast amounts of text data, capable of understanding and generating human-like text.

- RAG (retrieval-augmented generation): A technique that combines information retrieval with text generation to produce more accurate and contextually relevant responses.

- Hallucinations: Instances where AI models generate false or nonsensical information.

- Agents: Autonomous programs that complete tasks based on goals, often utilizing LLMs for natural language understanding and generation.

- Event-driven architecture: A software design pattern where the flow of the program is determined by events such as user actions, sensor outputs or messages from other programs.

- Governance: The framework for managing, securing and controlling access to data and AI systems.

- Semantic retrieval: The process of finding information based on meaning and context rather than exact keyword matches.

- Syntactic retrieval: Information retrieval based on the structure and format of data rather than its meaning.

The Foundation: Data and Processes

Data is at the core of any practical AI implementation. The ability to integrate, manage and leverage various types of data is crucial. This includes structured data (relational databases), semi-structured data (document databases, key-value stores) and unstructured data (files, images, videos and audio).

Integrating AI with existing systems of record is critical for businesses. This involves creating, reading and persisting data across different storage systems. It’s also essential to expose and make accessible APIs for consuming data through frontend applications or services, connecting to backend datastores and creating processes that transform, filter, enrich and move data through pipelines to achieve desired outcomes.

Vector databases play a crucial role in storing and retrieving data for LLMs, particularly for semantic search and retrieval. Additionally, exposing large data endpoints from data lakes, warehouses and marts is necessary for AI systems to access and utilize enterprise data effectively.

Event-Driven Architecture

As AI becomes more prevalent, the scale of communication between API endpoints is growing at an astronomical rate. The current API economy is just the tip of the iceberg compared to what we can expect in an AI-infused future.

Enter AI agents: autonomous, often ephemeral programs that complete tasks based on goals, without explicit instructions on how to plan, reason or create rules. These agents, powered by language models, will be ubiquitous, getting and putting data in place, writing code, scanning, filtering and performing various actions. As agents proliferate, they will require massively scalable communication networks.

This is where event-driven architecture (EDA) comes into play. Evolved from the publish-subscribe (pub/sub) pattern, modern EDA offers robust capabilities for handling massive scale, guaranteeing message delivery, deduplicating messages and connecting to various endpoints. Some EDA implementations can even trace data lineage, scale to many nested topics and offer flexible data persistence options.

EDA provides the type of communication network that Internet of Things (IoT) devices use, and AI agents communicate similarly. As agents pop in and out of existence, they will require a robust data network for communication, making EDA an ideal solution.

Governance and API Management

As agents consume data from various sources, API management becomes critical. Gateways must control access to applications’ or endpoints’ data, managing not only who or what has access but also the amount and frequency of access. This is crucial for both monetization opportunities and defending against unauthorized data access.

Validating and Controlling LLM Outputs

LLMs are known to hallucinate, producing false or nonsensical information. For most organizations not developing their own foundation models, controlling the LLM’s response to prompts can be challenging. However, you can control the data and prompts fed to the LLM, as well as how the model’s output is processed and presented to the end user.

Validating data within a retrieval-augmented generation (RAG) process allows for harmonization, canonization and vector embedding of your corporate data before presenting it to the LLM. This approach helps ensure that when combining your proprietary data with the LLM’s knowledge, you maintain control over the inputs.

Evaluating data after the LLM responds is also possible and often advisable. Ranking agents can observe the answers given to a consumer before the information is presented, adding an additional layer of quality control.

Human Interaction and Continuous Learning

The human element remains crucial in AI ecosystems. Interfaces such as chatbots or frontend applications allow users to interact with the orchestrated systems around the LLM. By creating endpoints within processes that enable humans to validate answers, you can help the orchestrated systems learn and improve from external, human interactions.

The Critical Role of Orchestration

All these elements — data management, process control, event-driven communication, governance, validation and human interaction — require orchestration. An LLM on its own is not grounded; it needs a carefully orchestrated ecosystem to function effectively and reliably in real-world applications.

Orchestration involves:

- Integration: Connecting dissimilar applications, creating abstractions and exposing APIs for data consumption.

- Automation: Streamlining processes and reducing manual intervention in data and AI workflows.

- Coordination: Ensuring that different components of the AI system work together seamlessly.

- Monitoring and optimization: Continuously assessing system performance and making necessary adjustments.

By focusing on orchestration, organizations can create AI systems that are not only powerful but also practical, reliable and aligned with business objectives.

Conclusion

As we navigate the exciting frontier of AI in software development, it’s clear that the principles of orchestration are more critical than ever. The ability to effectively integrate, automate and coordinate various components of AI systems will distinguish successful implementations from those that fall short.

For developers, understanding and mastering orchestration is key to harnessing the full potential of AI. By grounding our approach in solid orchestration practices, we can create AI-infused applications that are not just innovative, but also practical and valuable for businesses across industries.

As you embark on your AI journey, remember that the true power of these technologies lies not in the models themselves, but in how we orchestrate them within our existing systems and processes. With careful attention to data management, communication architectures, governance and human interaction, we can build AI systems that will transform the way we work and create value.

Want to learn more? Register now for “Unleashing the Power of GenAI with Enterprise Low-Code Orchestration,” a free online webinar hosted by The New Stack with Boomi’s Michael Bachman as our special guest.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.