Baoyue Reading Guide Summary

1. Keywords: Lucene, Elasticsearch, BKD tree, low-cardinality fields, optimization

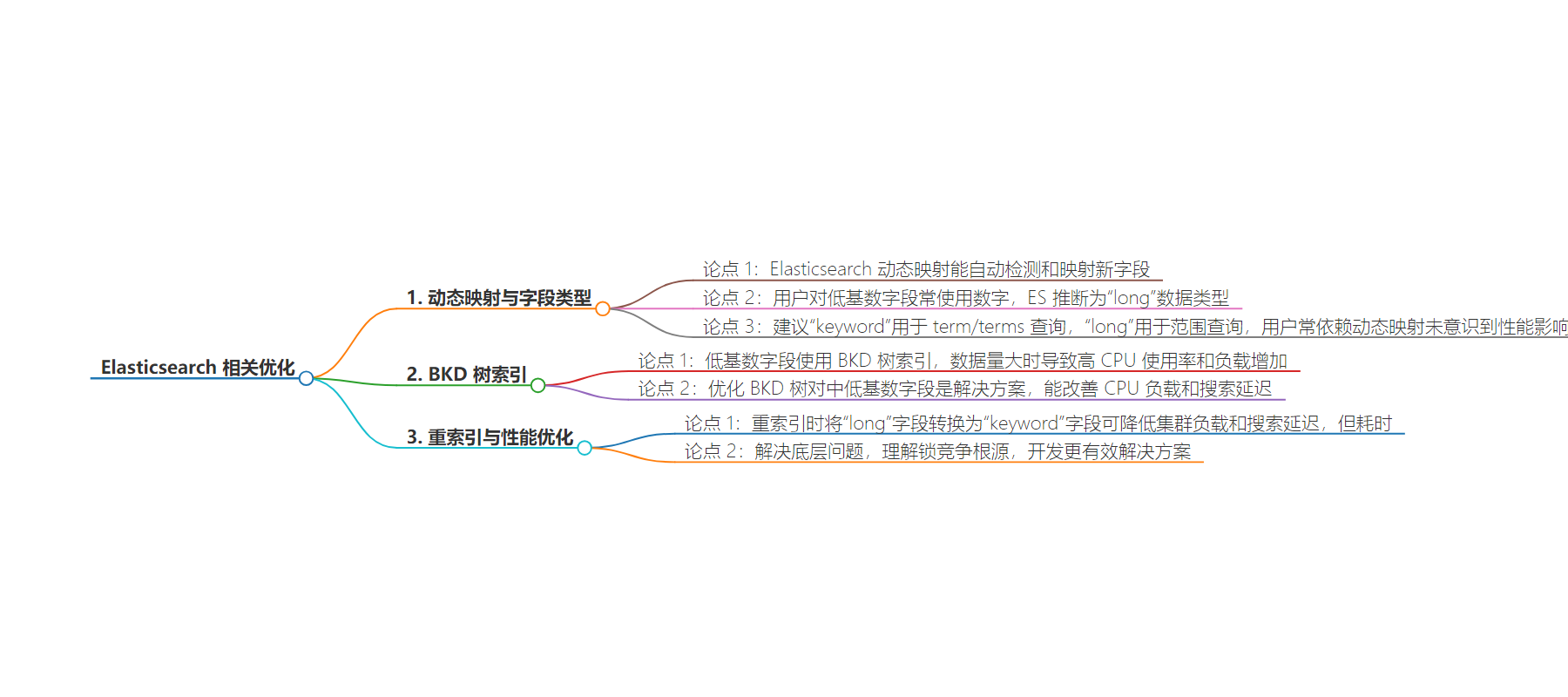

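2. Summary: Elasticsearch is built on Lucene. Its dynamic mapping handles low-cardinality fields poorly: inferring the “long” type for them leads to high CPU usage and increased load. Converting “long” to “keyword” improves this but is time-consuming. Optimizing the BKD tree for low- and medium-cardinality fields is the proposed solution, and understanding these points helps in resolving problems such as lock contention.

3. Main points:

– Elasticsearch is built on Lucene and has a dynamic mapping feature that automatically detects and maps new fields.

– Users often index low-cardinality fields (such as height and age) as numbers. These are inferred as the “long” data type and indexed with a BKD tree. As data volume grows, this leads to high CPU usage and increased load, especially during bulk data operations.

– Converting “long” to “keyword” during a reindex reduces cluster load and search latency, but it is time-consuming. Elastic recommends different types for different queries, yet users often rely on dynamic mapping without noticing the performance impact.

– Optimizing the BKD tree is the solution for low- and medium-cardinality fields. Understanding these points helps in resolving underlying problems such as lock contention.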

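Article URL: https://www.elastic.co/blog/optimizing-lucene-caching

Source: elastic.co

Author: George Kobar

Published: 2024/8/20 14:13

Language: English

Total word count: 1105

Estimated reading time: 5 minutes

Score: 91

Tags: Lucene, Elasticsearch, cache optimization, read-write locks, lock contention

The original content follows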

Elasticsearch, built on top of Lucene, can automatically detect and map new fields for users with its dynamic mapping feature. When users index low-cardinality fields, such as height and age, they often use numbers to represent these values. Elasticsearch infers these fields as the “long” data type and uses a BKD tree as the index for them. As the data volume grows, building the result set for low-cardinality fields can lead to high CPU usage and increased load.
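One way to avoid the inferred “long” type is to declare the mapping explicitly before indexing. The following is a minimal sketch, not taken from the original post: it only builds the JSON body that a create-index request would carry. The helper name `build_explicit_mapping` and the field names `age`, `height`, and `event_count` are hypothetical.

```python
import json


def build_explicit_mapping(keyword_fields, long_fields=()):
    """Build a create-index request body with explicit field types.

    Hypothetical helper for illustration: fields listed in keyword_fields
    are mapped as "keyword", fields in long_fields as "long".
    """
    properties = {}
    for name in keyword_fields:
        # Low-cardinality values queried by exact match.
        properties[name] = {"type": "keyword"}
    for name in long_fields:
        # Numeric values that are queried by range.
        properties[name] = {"type": "long"}
    return {"mappings": {"properties": properties}}


if __name__ == "__main__":
    # Assumed field names; print the body that would be sent when
    # creating the index.
    body = build_explicit_mapping(["age", "height"], ["event_count"])
    print(json.dumps(body, indent=2))
```

Because the mapping is set when the index is created, dynamic mapping no longer decides the type for these fields; any field not listed would still be mapped dynamically.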

This issue is compounded when the CPU is already heavily used by bulk data operations. Converting “long” fields to “keyword” fields during a reindex can significantly reduce cluster load and search latency, but the reindex itself can be time-consuming. Elastic recommends using “keyword” for term/terms queries and “long” for range queries. However, users who rely on dynamic mapping to select the type automatically often don’t realize the performance impact of using “long” for low-cardinality fields. Making BKD tree indexing more efficient for low- and medium-cardinality fields would therefore make a significant difference, addressing both CPU load and search latency.
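The reindex step and the two query styles can be sketched as plain request bodies. This is an illustrative sketch under stated assumptions: the index names `people_v1` and `people_v2` and the helper functions are hypothetical, and the destination index is assumed to already exist with the field mapped as “keyword”. The bodies follow the shape of the reindex API and the term and range queries in the Elasticsearch query DSL.

```python
import json


def build_reindex_body(source_index, dest_index):
    """Body for a reindex request that copies documents between indices."""
    return {"source": {"index": source_index}, "dest": {"index": dest_index}}


def build_term_query(field, value):
    """Exact-match query, suited to a field mapped as "keyword"."""
    # Keyword terms are strings, so the value is converted explicitly.
    return {"query": {"term": {field: {"value": str(value)}}}}


def build_range_query(field, gte, lte):
    """Range query, suited to a field mapped as "long"."""
    return {"query": {"range": {field: {"gte": gte, "lte": lte}}}}


if __name__ == "__main__":
    # Hypothetical index and field names.
    print(json.dumps(build_reindex_body("people_v1", "people_v2")))
    print(json.dumps(build_term_query("age", 30)))
    print(json.dumps(build_range_query("event_count", 10, 100)))
```

On a large index the reindex copies every document, which is where the time cost mentioned above comes from.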

By addressing these underlying issues, particularly in the context of Elasticsearch’s dynamic mapping and the use of “long” fields for low cardinality data, we can better understand the root causes of lock contention and develop more effective solutions.