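Baoyue Reading Guide Summary

1. `Kubernetes 1.31`, `PodAffinity`, `matchLabelKeys`, `mismatchLabelKeys`, `scheduling optimization`

2. In Kubernetes 1.31, the matchLabelKeys feature in PodAffinity moves to beta and is enabled by default. matchLabelKeys improves scheduling during rolling updates, and mismatchLabelKeys supports service isolation. These features are managed by SIG Scheduling, which welcomes feedback.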

3.

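– Kubernetes 1.29 introduced the new fields matchLabelKeys and mismatchLabelKeys in podAffinity and podAntiAffinity

– In 1.31 this feature moves to beta, and the corresponding feature gate is enabled by default

– matchLabelKeys

– Solves the problem that the scheduler cannot distinguish Pod versions during a rolling update

– An example shows how to use it

– mismatchLabelKeys

– Used for service isolation; an example illustrates the use case

– This feature is managed by Kubernetes SIG Scheduling, which welcomes participation and feedback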

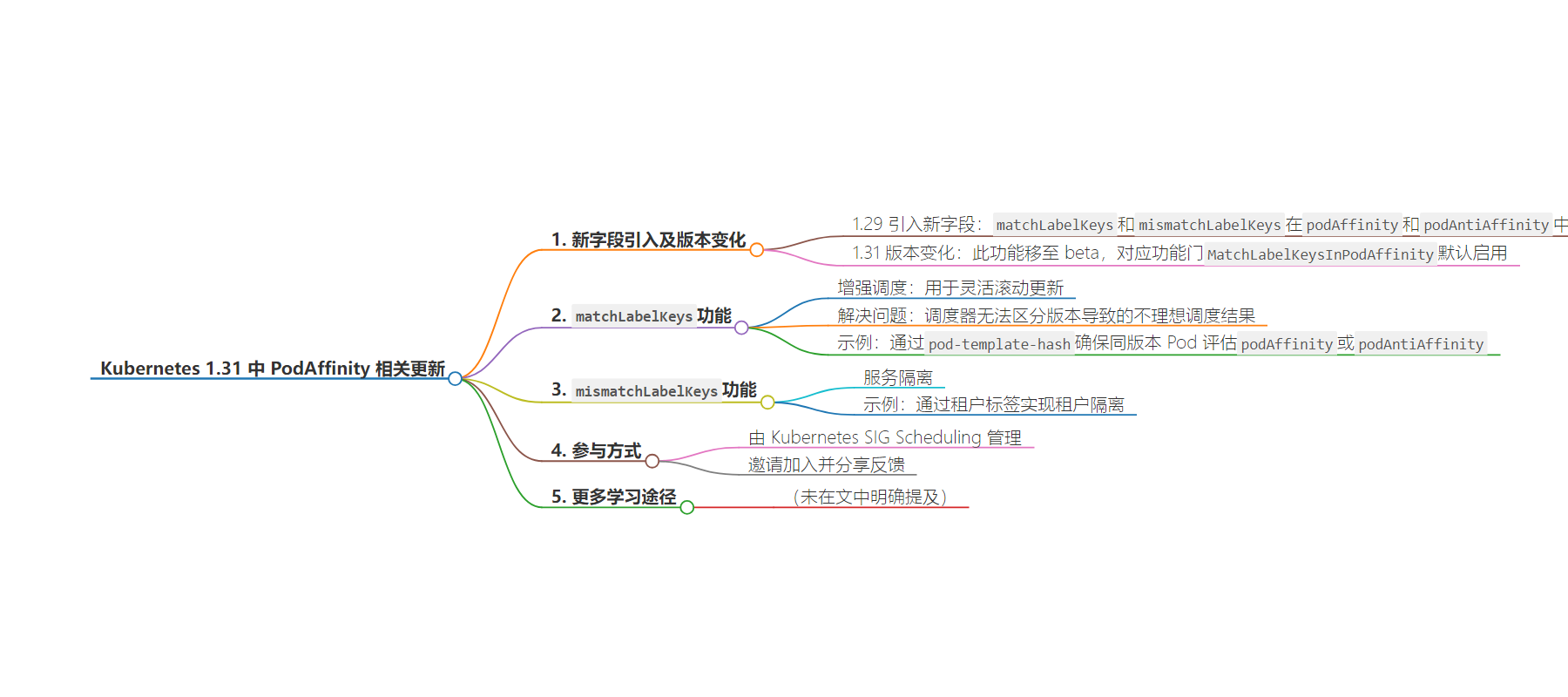

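Mind map:

Article URL: https://kubernetes.io/blog/2024/08/16/matchlabelkeys-podaffinity/

Source: kubernetes.io

Author: Kubernetes Blog

Published: 2024/8/16 0:00

Language: English

Total word count: 618

Estimated reading time: 3 minutes

Score: 87

Tags: Kubernetes, PodAffinity, scheduling, rolling updates, service isolation

The original article follows.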


Kubernetes 1.31: MatchLabelKeys in PodAffinity graduates to beta

By Kensei Nakada (Tetrate)

Kubernetes 1.29 introduced new fields matchLabelKeys and mismatchLabelKeys in podAffinity and podAntiAffinity.

In Kubernetes 1.31, this feature moves to beta and the corresponding feature gate (MatchLabelKeysInPodAffinity) gets enabled by default.

matchLabelKeys – Enhanced scheduling for versatile rolling updates

During a workload’s (e.g., Deployment) rolling update, a cluster may have Pods from multiple versions at the same time. However, the scheduler cannot distinguish between old and new versions based on the labelSelector specified in podAffinity or podAntiAffinity. As a result, it will co-locate or disperse Pods regardless of their versions.

This can lead to sub-optimal scheduling outcomes, for example:

- New version Pods are co-located with old version Pods (podAffinity), which will eventually be removed after rolling updates.
- Old version Pods are distributed across all available topologies, preventing new version Pods from finding nodes due to podAntiAffinity.

matchLabelKeys is a set of Pod label keys that addresses this problem. The scheduler looks up the values of these keys from the new Pod’s labels and combines them with labelSelector, so that podAffinity matches Pods that have the same key-value in labels.

By using the pod-template-hash label in matchLabelKeys, you can ensure that only Pods of the same version are evaluated for podAffinity or podAntiAffinity.
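The merge described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Kubernetes implementation: the function name `apply_match_label_keys` is hypothetical, affinity terms are modeled as plain dictionaries mirroring the manifest fields, and skipping keys that the Pod does not carry is an assumption of this sketch.

```python
import copy


def apply_match_label_keys(term, pod_labels):
    """Return a copy of an affinity term with matchLabelKeys folded into
    labelSelector as `key In (value)` requirements (hypothetical helper)."""
    merged = copy.deepcopy(term)
    expressions = merged.setdefault("labelSelector", {}).setdefault(
        "matchExpressions", []
    )
    for key in term.get("matchLabelKeys", []):
        # Assumption of this sketch: keys missing from the Pod's labels are skipped.
        if key in pod_labels:
            expressions.append(
                {"key": key, "operator": "In", "values": [pod_labels[key]]}
            )
    return merged


# Affinity term as it might appear in a Deployment's Pod template.
term = {
    "labelSelector": {
        "matchExpressions": [
            {"key": "app", "operator": "In", "values": ["database"]}
        ]
    },
    "topologyKey": "topology.kubernetes.io/zone",
    "matchLabelKeys": ["pod-template-hash"],
}

# Labels of the incoming Pod; "xyz" stands in for a real template hash.
merged_term = apply_match_label_keys(term, {"pod-template-hash": "xyz"})
for expr in merged_term["labelSelector"]["matchExpressions"]:
    print(expr)
```

The second printed requirement restricts the affinity to Pods that carry the same pod-template-hash value as the incoming Pod, which is how Pods from different versions of the workload end up being told apart.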

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: application-server
    ...
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - database
            topologyKey: topology.kubernetes.io/zone
            matchLabelKeys:
            - pod-template-hash

The above matchLabelKeys will be translated in Pods like:

    kind: Pod
    metadata:
      name: application-server
      labels:
        pod-template-hash: xyz
    ...
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - database
              - key: pod-template-hash # Added from matchLabelKeys; Only Pods from the same replicaset will match this affinity.
                operator: In
                values:
                - xyz
            topologyKey: topology.kubernetes.io/zone
            matchLabelKeys:
            - pod-template-hash

mismatchLabelKeys – Service isolation

mismatchLabelKeys is a set of Pod label keys, like matchLabelKeys. The scheduler looks up the values of these keys from the new Pod’s labels and merges them with labelSelector as key notin (value), so that podAffinity does not match Pods that have the same key-value in labels.
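As a rough illustration of the `key notin (value)` merge and its effect, the sketch below folds mismatchLabelKeys into a selector and then evaluates that selector against a few Pods. It is not the Kubernetes implementation: both function names are hypothetical, the `tenant` label and the Pod names are illustrative, and skipping keys that the incoming Pod does not carry is an assumption of this sketch.

```python
import copy


def apply_mismatch_label_keys(term, pod_labels):
    """Fold mismatchLabelKeys into labelSelector as `key NotIn (value)`
    requirements (hypothetical helper)."""
    merged = copy.deepcopy(term)
    expressions = merged.setdefault("labelSelector", {}).setdefault(
        "matchExpressions", []
    )
    for key in term.get("mismatchLabelKeys", []):
        # Assumption of this sketch: keys missing from the Pod's labels are skipped.
        if key in pod_labels:
            expressions.append(
                {"key": key, "operator": "NotIn", "values": [pod_labels[key]]}
            )
    return merged


def selector_matches(selector, labels):
    """Check a Pod's labels against every matchExpressions requirement."""
    for expr in selector.get("matchExpressions", []):
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


# Anti-affinity term before the merge.
anti_affinity_term = {
    "mismatchLabelKeys": ["tenant"],
    "labelSelector": {
        "matchExpressions": [{"key": "tenant", "operator": "Exists"}]
    },
    "topologyKey": "node-pool",
}
merged_term = apply_mismatch_label_keys(anti_affinity_term, {"tenant": "service-a"})

# Illustrative Pods already running in the cluster.
existing_pods = {
    "same-tenant": {"tenant": "service-a"},
    "other-tenant": {"tenant": "service-b"},
    "no-tenant": {"app": "monitoring"},
}
repelled = [
    name
    for name, labels in existing_pods.items()
    if selector_matches(merged_term["labelSelector"], labels)
]
print(repelled)  # ['other-tenant']
```

Only the Pod from a different tenant matches the merged selector. Used under podAntiAffinity, that means the incoming Pod is kept away from other tenants' Pods while Pods of its own tenant, and Pods with no tenant label, are not repelled.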

Suppose all Pods for each tenant get a tenant label via a controller or a manifest management tool like Helm.

Although the value of the tenant label is unknown when composing each workload’s manifest, the cluster admin wants to achieve exclusive 1:1 tenant-to-domain placement for tenant isolation.

mismatchLabelKeys works for this use case. By applying the following affinity globally using a mutating webhook, the cluster admin can ensure that the Pods from the same tenant will land on the same domain exclusively, meaning Pods from other tenants won’t land on the same domain.

    affinity:
      podAffinity:      # ensures the pods of this tenant land on the same node pool
        requiredDuringSchedulingIgnoredDuringExecution:
        - matchLabelKeys:
            - tenant
          topologyKey: node-pool
      podAntiAffinity:  # ensures only Pods from this tenant land on the same node pool
        requiredDuringSchedulingIgnoredDuringExecution:
        - mismatchLabelKeys:
            - tenant
          labelSelector:
            matchExpressions:
            - key: tenant
              operator: Exists
          topologyKey: node-pool

The above matchLabelKeys and mismatchLabelKeys will be translated to something like:

    kind: Pod
    metadata:
      name: application-server
      labels:
        tenant: service-a
    spec:
      affinity:
        podAffinity:      # ensures the pods of this tenant land on the same node pool
          requiredDuringSchedulingIgnoredDuringExecution:
          - matchLabelKeys:
              - tenant
            topologyKey: node-pool
            labelSelector:
              matchExpressions:
              - key: tenant
                operator: In
                values:
                - service-a
        podAntiAffinity:  # ensures only Pods from this tenant land on the same node pool
          requiredDuringSchedulingIgnoredDuringExecution:
          - mismatchLabelKeys:
              - tenant
            labelSelector:
              matchExpressions:
              - key: tenant
                operator: Exists
              - key: tenant
                operator: NotIn
                values:
                - service-a
            topologyKey: node-pool

Getting involved

These features are managed by Kubernetes SIG Scheduling.

Please join us and share your feedback. We look forward to hearing from you!