Baoyue Reading Guide Summary

1. Keywords: Apache Airflow, Cloud Composer, Alerting, Data Workflows, Google Cloud

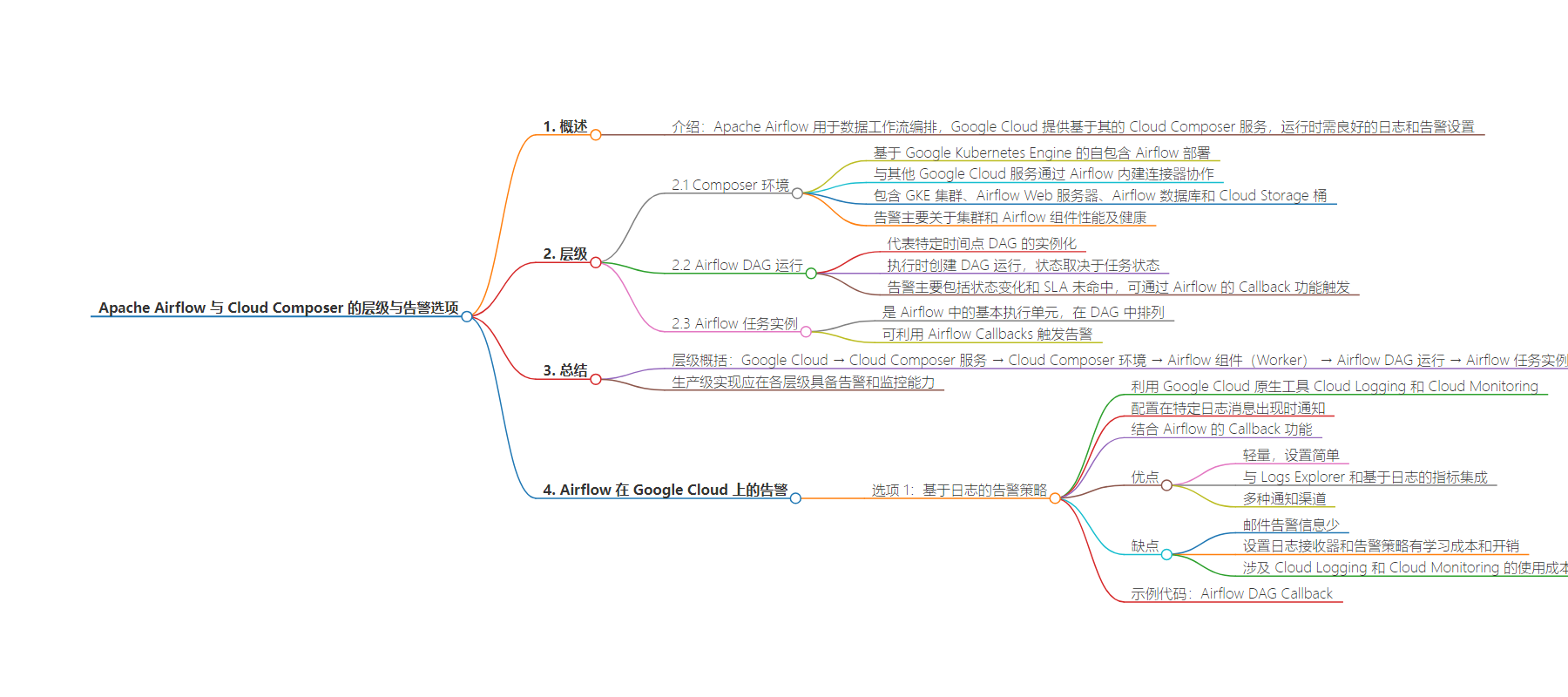

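2. Summary: This article introduces Apache Airflow and Google Cloud's Cloud Composer service, focusing on Cloud Composer's alerting hierarchy, which covers alerts at the environment, DAG run, and task instance levels. It also explores three alerting options at the Airflow DAG run and task level, such as log-based alerting policies, and analyzes their pros and cons.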

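3. Main content:

– Apache Airflow and Cloud Composer
  – Apache Airflow is used to orchestrate data workflows
  – Cloud Composer is a managed service built on Apache Airflow
– Cloud Composer alerting hierarchy
  – Composer environment: includes components such as the GKE cluster; alerts focus on performance and health
  – Airflow DAG runs: state changes and SLA misses can trigger alerts
  – Airflow task instances: callbacks can be used to trigger alerts
– Airflow alerting options on Google Cloud
  – Option 1: log-based alerting policies
    – Uses Google Cloud's Cloud Logging and Cloud Monitoring
    – Pros: lightweight, integrated, multiple notification channels
    – Cons: emails contain little information, setup has a learning curve, usage incurs costs
    – Sample code: includes steps such as defining a callback function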

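Source: cloud.google.com

Author: Christian Yarros

Published: 2024/8/20 0:00

Language: English

Word count: 1728 words

Estimated reading time: 7 minutes

Rating: 89

Tags: Apache Airflow, Cloud Composer, Google Cloud, data workflow orchestration, alerting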

The original article follows.

About

Apache Airflow is a popular tool for orchestrating data workflows. Google Cloud offers Cloud Composer, a fully managed workflow orchestration service built on Apache Airflow that enables you to author, schedule, and monitor pipelines. When running Cloud Composer, it’s important to have a robust logging and alerting setup to monitor your DAGs (Directed Acyclic Graphs) and minimize downtime in your data pipelines.

In this guide, we will review the hierarchy of alerting on Cloud Composer and the various alerting options available to Google Cloud engineers using Cloud Composer and Apache Airflow.

Getting started

Hierarchy of alerting on Cloud Composer

Composer environment

Cloud Composer environments are self-contained Airflow deployments based on Google Kubernetes Engine. They work with other Google Cloud services using connectors built into Airflow.

Cloud Composer provisions the Google Cloud services that run your workflows and all Airflow components. The main components of an environment are the GKE cluster, the Airflow web server, the Airflow database, and the Cloud Storage bucket. For more information, see the Cloud Composer environment architecture documentation.

Alerts at this level primarily concern the performance and health of the cluster and the Airflow components.

Airflow DAG Runs

A DAG Run is an object representing an instantiation of the DAG at a point in time. Any time the DAG is executed, a DAG Run is created and all tasks inside it are executed. The status of the DAG Run depends on the states of its tasks. Each DAG Run runs independently of the others, which means you can have many runs of a DAG at the same time.

Alerts at this level primarily consist of DAG Run state changes such as Success and Failure, as well as SLA Misses. Airflow’s Callback functionality can trigger code to send these alerts.
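DAG-level callbacks are passed to the `DAG` constructor. The sketch below assumes Airflow 2.4 or later (for the `schedule` argument and `EmptyOperator`); the DAG id and messages are hypothetical, each callback only writes a log line where a real setup would send a notification, and the `sla_miss_callback` signature shown is the Airflow 2.x one.

```python
import logging
from datetime import timedelta

log = logging.getLogger(__name__)


def dag_success_alert(context):
    """DAG-level on_success_callback; Airflow passes the run's context dict."""
    dag_run = context["dag_run"]
    message = f"DAG {dag_run.dag_id} run {dag_run.run_id} succeeded"
    log.info(message)  # placeholder for a real notification
    return message


def dag_failure_alert(context):
    """DAG-level on_failure_callback; 'reason' may be missing, so .get() is used."""
    dag_run = context["dag_run"]
    reason = context.get("reason", "unknown reason")
    message = f"DAG {dag_run.dag_id} run {dag_run.run_id} failed: {reason}"
    log.error(message)
    return message


def sla_miss_alert(dag, task_list, blocking_task_list, slas, blocking_tis):
    """sla_miss_callback takes positional arguments (Airflow 2.x), not a context dict."""
    message = f"SLA missed in DAG {dag.dag_id}: {task_list}"
    log.warning(message)
    return message


def build_dag():
    """Wire the callbacks into a DAG. Airflow is imported lazily here only so
    the callbacks above can be unit-tested without an Airflow installation."""
    import pendulum
    from airflow import DAG
    from airflow.operators.empty import EmptyOperator

    with DAG(
        dag_id="example_dag_callbacks",  # hypothetical DAG id
        start_date=pendulum.datetime(2024, 1, 1, tz="UTC"),
        schedule="@daily",
        catchup=False,
        on_success_callback=dag_success_alert,
        on_failure_callback=dag_failure_alert,
        sla_miss_callback=sla_miss_alert,
    ) as dag:
        # SLA misses are only detected for tasks that declare an sla.
        EmptyOperator(task_id="placeholder", sla=timedelta(hours=1))
    return dag
```

In a real DAG file you would typically import Airflow at the top and define the DAG at module level (or assign `dag = build_dag()`) so the scheduler can discover it.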

Airflow Task instances

A Task is the basic unit of execution in Airflow. Tasks are arranged into DAGs, and then have upstream and downstream dependencies set between them in order to express the order they should run in. Airflow tasks include Operators and Sensors.

Like DAG Runs, Airflow tasks can use Airflow callbacks to trigger code that sends alerts.
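Task-level callbacks can be attached to individual operators or, as in this sketch, to every task through `default_args`. The sketch assumes Airflow 2.4 or later; the DAG id, task ids, and the deliberately failing `exit 1` command are hypothetical and only there to exercise the callbacks and to show an upstream/downstream dependency declared with `>>`.

```python
import logging

log = logging.getLogger(__name__)


def task_failure_alert(context):
    """Task-level on_failure_callback; the context carries the TaskInstance."""
    ti = context["task_instance"]
    exception = context.get("exception")  # the error raised by the task, if any
    message = f"Task {ti.task_id} in DAG {ti.dag_id} failed: {exception!r}"
    log.error(message)
    return message


def task_retry_alert(context):
    """Task-level on_retry_callback; fires when a failed try will be retried."""
    ti = context["task_instance"]
    message = f"Task {ti.task_id} in DAG {ti.dag_id} is up for retry"
    log.warning(message)
    return message


# Callbacks in default_args apply to every task in the DAG;
# an individual operator can still override them with its own arguments.
DEFAULT_ARGS = {
    "retries": 1,
    "on_failure_callback": task_failure_alert,
    "on_retry_callback": task_retry_alert,
}


def build_dag():
    """Lazy Airflow import keeps the callbacks testable without Airflow."""
    import pendulum
    from airflow import DAG
    from airflow.operators.bash import BashOperator

    with DAG(
        dag_id="example_task_callbacks",  # hypothetical DAG id
        start_date=pendulum.datetime(2024, 1, 1, tz="UTC"),
        schedule=None,
        catchup=False,
        default_args=DEFAULT_ARGS,
    ) as dag:
        extract = BashOperator(task_id="extract", bash_command="echo extracting")
        # Fails on purpose so the retry and failure callbacks can be observed.
        load = BashOperator(task_id="load", bash_command="exit 1")
        extract >> load  # load runs downstream of extract
    return dag
```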

Summary

To summarize Airflow’s alerting hierarchy: Google Cloud → Cloud Composer Service → Cloud Composer Environment → Airflow Components (Worker) → Airflow DAG Run → Airflow Task Instance.

Any production-level implementation of Cloud Composer should have alerting and monitoring capabilities at each level in the hierarchy. Our Cloud Composer engineering team has extensive documentation around monitoring and alerting at the service/environment level.

Airflow Alerting on Google Cloud

Now, let’s consider three options for alerting at the Airflow DAG Run and Airflow Task level.

Option 1: Log-based alerting policies

Google Cloud offers native tools for logging and alerting within your Airflow environment. Cloud Logging centralizes logs from various sources, including Airflow, while Cloud Monitoring lets you set up alerting policies based on specific log entries or metrics thresholds.

You can configure an alerting policy to notify you whenever a specific message appears in your included logs. For example, if you want to know when an audit log records a particular data-access message, you can be notified as soon as that message appears. These are called log-based alerting policies. To learn more, see Configure log-based alerting policies in the Cloud Logging documentation.
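A log-based alerting policy can also be created programmatically through the Cloud Monitoring API (`projects.alertPolicies.create`). This sketch only builds the JSON request body in plain Python. The project ID, channel ID, display name, and the `AIRFLOW_ALERT` marker are hypothetical, and the filter assumes Composer's Airflow logs use the `cloud_composer_environment` resource type with the message in `textPayload`; both are worth confirming in Logs Explorer before relying on them.

```python
import json


def log_based_alert_policy(display_name, log_filter, channel_ids, project_id,
                           rate_limit_seconds=300):
    """Build an AlertPolicy request body for the Cloud Monitoring REST API.

    Log-based policies use a conditionMatchedLog condition and need a
    notification rate limit in alertStrategy.
    """
    return {
        "displayName": display_name,
        "combiner": "OR",
        "conditions": [
            {
                "displayName": f"{display_name} log match",
                "conditionMatchedLog": {"filter": log_filter},
            }
        ],
        "notificationChannels": [
            f"projects/{project_id}/notificationChannels/{channel_id}"
            for channel_id in channel_ids
        ],
        "alertStrategy": {
            "notificationRateLimit": {"period": f"{rate_limit_seconds}s"}
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    body = log_based_alert_policy(
        display_name="Airflow DAG failure",
        log_filter=(
            'resource.type="cloud_composer_environment" '
            'AND textPayload:"AIRFLOW_ALERT"'
        ),
        channel_ids=["1234567890"],
        project_id="my-project",
    )
    print(json.dumps(body, indent=2))
```

The printed JSON could be saved to a file and passed to `gcloud alpha monitoring policies create --policy-from-file=FILE`. The rate limit is always set because log-based conditions can match many entries in a short burst.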

These services combine nicely with the Airflow callback feature mentioned above. To accomplish this:

- Define a callback function and set it at the DAG or task level.
- Use Python’s native logging library to write a specific log message to Cloud Logging.
- Define a log-based alerting policy that is triggered by that log message and sends alerts to a notification channel.
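The first two steps might look like the sketch below: one callback, usable at the DAG or task level, that writes a single searchable line through Python's `logging` module. The `AIRFLOW_ALERT` marker, the key=value layout, and the helper names are hypothetical choices, and the filter string assumes the message reaches Cloud Logging in `textPayload`.

```python
import logging

# Hypothetical marker; the log-based alerting policy filters on this exact string.
ALERT_MARKER = "AIRFLOW_ALERT"

# A matching Cloud Logging filter (assumption: the message lands in textPayload).
ALERT_LOG_FILTER = f'textPayload:"{ALERT_MARKER}"'

log = logging.getLogger(__name__)


def format_alert(context, state):
    """Build one searchable key=value line from an Airflow callback context."""
    dag_run = context.get("dag_run")
    ti = context.get("task_instance")
    fields = {
        "dag_id": getattr(dag_run, "dag_id", None),
        "run_id": getattr(dag_run, "run_id", None),
        "task_id": getattr(ti, "task_id", None),
        "state": state,
    }
    # Fields missing from the context are skipped rather than printed as None.
    details = " ".join(f"{key}={value}" for key, value in fields.items() if value is not None)
    return f"{ALERT_MARKER} {details}"


def log_failure_alert(context):
    """Callback usable as on_failure_callback on a DAG or on a task."""
    message = format_alert(context, "failed")
    log.error(message)  # written through Python's standard logging module
    return message
```

Register it with `on_failure_callback=log_failure_alert` on the `DAG`, or in `default_args` for every task, and point the alerting policy's filter at the marker. In Airflow 2.x, DAG-level callbacks are typically run by the scheduler's DAG processor rather than on a worker, so a filter that matches the marker across all of the environment's Airflow logs is safer than one restricted to worker logs.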

Pros and cons

Pros:

- Lightweight, minimal setup: no third-party tools, no email server setup, and no additional Airflow providers required
- Integration with Logs Explorer and log-based metrics for deeper insights and historical analysis
- Multiple notification channel options

Cons:

- Email alerts contain minimal info
- Learning curve and overhead for setting up log sinks and alerting policies
- Costs associated with Cloud Logging and Cloud Monitoring usage

Sample code

Airflow DAG Callback: