包阅 Reading Guide Summary

1. `Vertex AI`、`Search Applications`、`RAG`、`Natural Language`、`Data Sources`

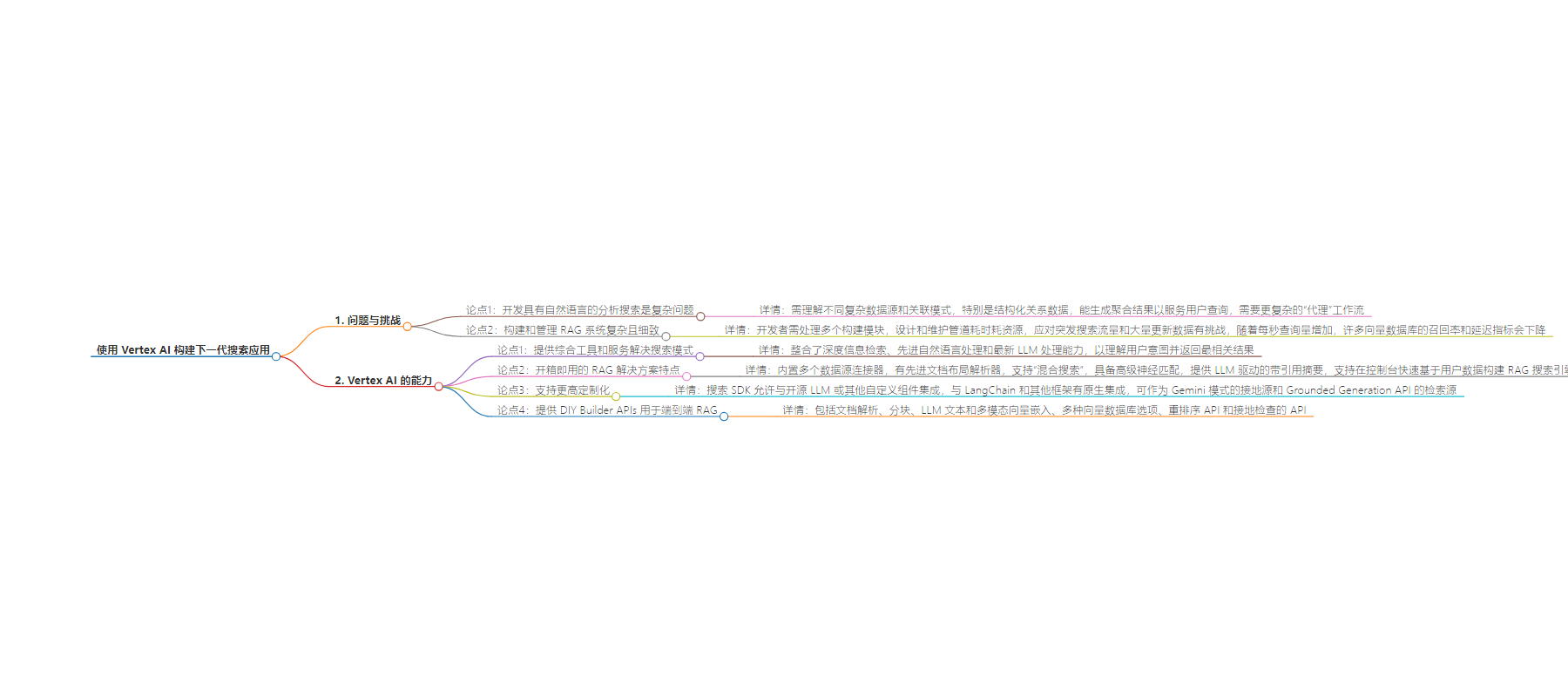

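2. This article describes how to build next-generation search applications with Vertex AI. It points out how complex analytical search with natural language is to develop, and highlights Vertex AI's strengths in addressing search patterns, including a range of tools and services and support for both out-of-the-box and highly customized solutions.

3.

– Vertex AI and next-generation search applications

– Analytical search with natural language is complex and requires a complex “agentic” workflow; LLM-powered RAG is one of the enabling components.

– Vertex AI advantages

– Provides a comprehensive set of tools and services for complex search patterns.

– Combines multiple technologies to understand user intent and return the most relevant results.

– Supports out-of-the-box and highly customizable RAG solutions.

– Has built-in connectors to many data sources, plus features such as an advanced document layout parser.

– Provides DIY Builder APIs for building end-to-end RAG solutions.

– Integration with other frameworks

– Integrates natively with frameworks such as LangChain, and can serve as a retrieval source for the new Grounded Generation API, among others.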

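Source: cloud.google.com

Author: UK/I AI Customer Engineering, Google Cloud

Published: 2024/9/9 0:00

Language: English

Total word count: 2549

Estimated reading time: 11 minutes

Score: 91

Tags: Vertex AI, search applications, generative AI, large language models, RAG

Original content follows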

Developing analytical search with natural language is a very complex problem. Understanding disparate complex data sources and associated schema, especially in the case of structured relational data, and being able to produce aggregated results to consistently serve user queries requires a more complex “agentic” workflow. LLM-powered RAG can be one of the enabling components or tools for such agents.

Part 2: Vertex AI: Powering the future of search

Vertex AI offers a comprehensive suite of tools and services to address the above search patterns.

Building and managing RAG systems can be complex and nuanced. Developers need to build and maintain several RAG building blocks: data connectors, data processing, chunking, annotating, vectorization with embeddings, indexing, query rewriting, retrieval, and reranking, along with LLM-powered summarization. Designing, building and maintaining this pipeline can be time- and resource-intensive. Scaling each component to handle bursty search traffic, and coping with a large corpus of varied and frequently updated data, can also be challenging. Speaking of scale, as queries per second ramp up, many vector databases degrade in both recall and latency.
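To make a few of these building blocks concrete, here is a minimal, self-contained sketch of chunking, vectorization, indexing and retrieval. It is a toy under stated assumptions, not how Vertex AI implements any of these steps: the "embedding" is a plain bag-of-words count standing in for a real embedding model, and all function names (`chunk_text`, `embed`, `build_index`, `retrieve`) are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (one simple chunking strategy)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    """Chunk every document and store (doc_id, chunk, vector) entries."""
    return [
        (doc_id, chunk, embed(chunk))
        for doc_id, text in docs.items()
        for chunk in chunk_text(text)
    ]


def retrieve(index, query: str, k: int = 3) -> list[tuple[float, str, str]]:
    """Brute-force retrieval: score every chunk and return the top k."""
    q = embed(query)
    scored = [(cosine(q, vec), doc_id, chunk) for doc_id, chunk, vec in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]
```

Even this toy version hints at the maintenance burden described above: each function is a separate component that a production system would need to scale, monitor and keep in sync as the corpus changes.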

Vertex AI Search leverages decades of Google’s expertise in information retrieval and brings together the power of deep information retrieval, state-of-the-art natural language processing, and the latest in LLM processing to understand user intent and return the most relevant results for the user. No matter where you are in the development journey, Vertex AI Search provides several options to bring your enterprise truth to life, from out-of-the-box to DIY RAG.

Why Vertex AI Search for out-of-the-box RAG:

The out-of-the-box solution based on Vertex AI Search brings Google-quality search to building end-to-end, state-of-the-art semantic and hybrid search applications, with features such as:

- Built-in connectors to several data sources: Cloud Storage, BigQuery, websites, Confluence, Jira, Salesforce, Slack and many more

- A state-of-the-art document layout parser that keeps chunks of data organized across pages, handles embedded tables, annotates embedded images, and tracks heading ancestry as metadata for each chunk

- “Hybrid search”: a combination of keyword (sparse) and LLM (dense) based embeddings to handle any user query. Sparse vectors tend to map words directly to numbers, while dense vectors are designed to better represent the meaning of a piece of text.

- Advanced neural matching between user queries and document snippets to help retrieve highly relevant and ranked results for the user. Neural matching allows a retrieval engine to learn the relationships between a query’s intentions and highly relevant documents, allowing Vertex AI Search to recognize the context of a query instead of relying on simple similarity search.

- LLM-powered summaries with citations that are designed to scale to your search traffic. Vertex AI Search supports custom LLM instruction templates, making it easy to create powerful, engaging search experiences with minimal effort.

- Support for building a RAG search engine grounded on the user’s own data in minutes from the console. Developers can also use the API to programmatically build and test the OOTB agent.
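The hybrid search item in the list above combines a keyword (sparse) ranking with an embedding (dense) ranking. The article does not say how Vertex AI Search merges the two, so the sketch below uses reciprocal rank fusion, a common general-purpose technique, purely as an illustrative assumption. The function name and the document IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a commonly used default.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword retriever and an embedding retriever.
sparse_ranking = ["doc_b", "doc_a", "doc_c"]
dense_ranking = ["doc_a", "doc_d", "doc_b"]

fused = reciprocal_rank_fusion([sparse_ranking, dense_ranking])
print(fused)  # ['doc_a', 'doc_b', 'doc_d', 'doc_c']
```

Documents that rank well in both lists (`doc_a`, `doc_b`) rise above documents found by only one retriever, which is the practical benefit of combining sparse and dense signals.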

Explore this notebook (Part I) to see the Vertex AI Agent Builder SDK in action.

For greater customization

The Vertex AI Search SDK further allows developers to integrate it with open-source LLMs or other custom components, tailoring the search pipeline to their specific needs. As mentioned above, building end-to-end RAG solutions can be complex; as such, developers might want to rely on Vertex AI Search as a grounding source for search results retrieval and ranking, and leverage custom LLMs for the guided summary. Vertex AI Search also provides grounding in Google Search.
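The split described here, with retrieval and ranking on one side and a custom LLM for the summary on the other, can be pictured with a small sketch. It only shows the glue step of turning retrieved snippets into a citation-friendly prompt. The `Snippet` type and `build_grounded_prompt` function are hypothetical, the prompt wording is an assumption, and the retrieval call and the LLM call are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    """One retrieved passage (hypothetical shape, not a Vertex AI type)."""
    source: str  # e.g. a document title or URI
    text: str


def build_grounded_prompt(question: str, snippets: list[Snippet]) -> str:
    """Assemble a prompt asking an LLM to answer only from numbered sources."""
    sources = "\n".join(
        f"[{i}] ({s.source}) {s.text}" for i, s in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Usage with made-up snippets; in practice these would come from the retriever.
prompt = build_grounded_prompt(
    "How many days of leave do new employees get?",
    [
        Snippet("hr-policy.pdf", "New employees receive 25 days of annual leave."),
        Snippet("faq.html", "Leave requests are submitted through the HR portal."),
    ],
)
```

The resulting string can be sent to whichever LLM the developer has chosen; numbering the sources is what lets the model's `[n]` citations be mapped back to documents.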

Find an example notebook for grounding Gemini responses in Part II here.

Developers might already be building their LLM application with frameworks like LangChain or LlamaIndex. Vertex AI Search has native integration with LangChain and other frameworks, allowing developers to retrieve search results, grounded generations, or both. It can also be linked as an available tool in the Vertex AI Gemini SDK. Likewise, Vertex AI Search can be a retrieval source for the new Grounded Generation API powered by Gemini “high-fidelity mode,” which is fine-tuned for grounded generation.

A notebook example for using Vertex AI Search from LangChain is in Part III here.

Vertex AI DIY Builder APIs for end-to-end RAG

Vertex AI provides the essential building blocks for developers who want to construct their own end-to-end RAG solutions. These include APIs for document parsing, chunking, LLM text and multimodal vector embeddings, versatile vector database options (Vertex AI Vector Search, AlloyDB, BigQuery Vector DB), reranking APIs, and grounding checks.
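Of these building blocks, grounding checks may be the least familiar. The sketch below illustrates the idea with a deliberately naive token-overlap heuristic: each sentence of an answer is marked as supported if enough of its words appear in some source passage. This is an assumption made for illustration only, not the method used by the Vertex AI grounding check; the function names and the 0.6 threshold are hypothetical.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(claim: str, sources: list[str]) -> float:
    """Fraction of the claim's tokens found in the best-matching source."""
    claim_tokens = _tokens(claim)
    if not claim_tokens:
        return 0.0
    return max(
        (len(claim_tokens & _tokens(src)) / len(claim_tokens) for src in sources),
        default=0.0,
    )


def check_grounding(
    answer: str, sources: list[str], threshold: float = 0.6
) -> list[tuple[str, bool]]:
    """Split the answer into sentences and flag each as supported or not."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [(s, support_score(s, sources) >= threshold) for s in sentences]


# Usage: the second sentence is not backed by the source passage.
sources = ["Vertex AI Search has built-in connectors to BigQuery and Cloud Storage."]
answer = "Vertex AI Search has connectors to BigQuery. It was released in 1995."
for sentence, supported in check_grounding(answer, sources):
    print(f"{'OK ' if supported else 'UNSUPPORTED'}  {sentence}")
```

A real grounding check would need to handle paraphrase and negation, which word overlap cannot; the sketch only conveys the shape of the input (an answer plus candidate sources) and of the output (a per-claim support verdict).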