包阅导读总结

1. 关键词:数据质量、Anomalo、自动化监测、机器学习、根因分析

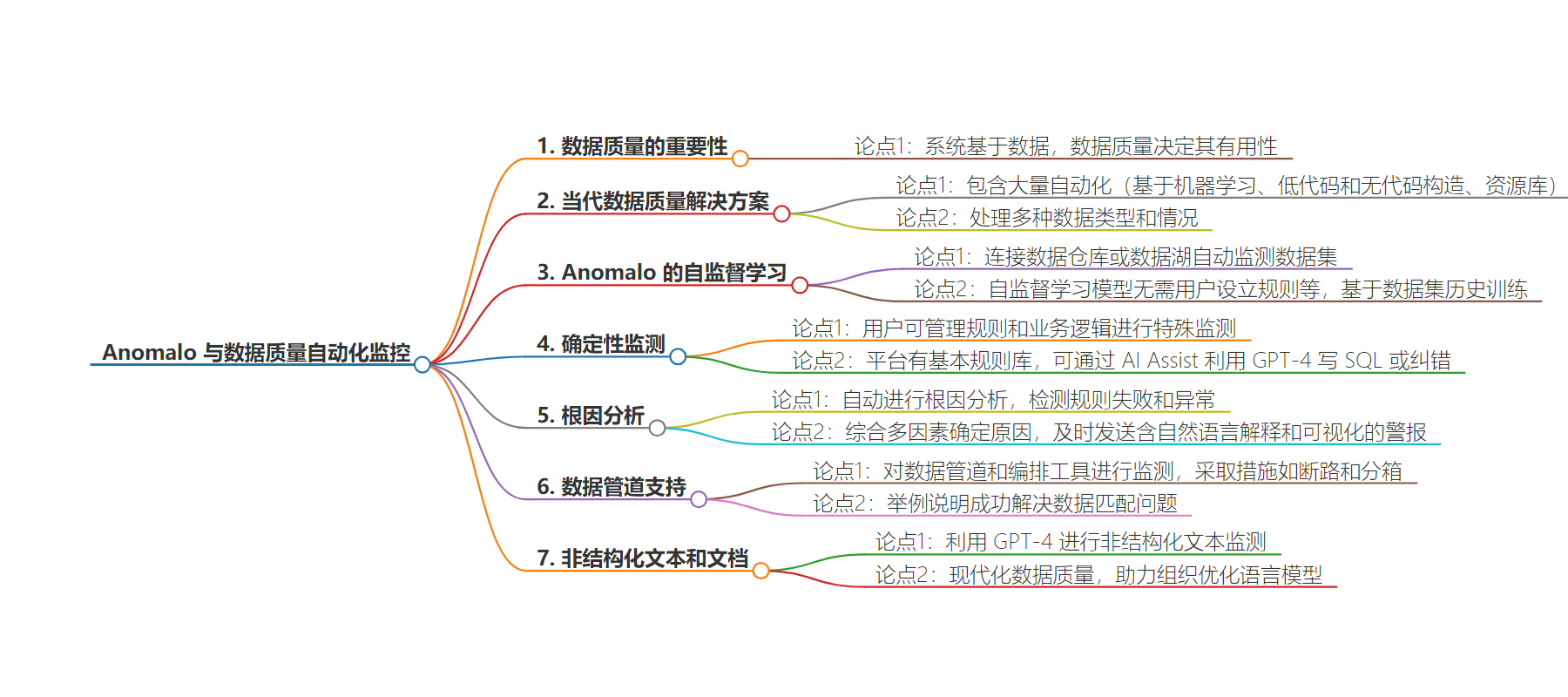

2. 总结:本文主要介绍了 Anomalo 在改善数据质量方面的作用,包括通过自动化和机器学习进行监测,对数据的准确性、及时性等进行评估,还能进行根因分析和支持数据管道等,且在非结构化文本方面有新功能。

3. 主要内容:

– 数据质量的重要性:系统基于数据,数据质量决定其有用性。

– Anomalo 的机器学习:可自动监测数据集,自监督学习模型基于数据集历史训练,能监测数据新鲜度、完整性等。

– 确定性监测:用户可为特定数据质量问题制定规则和业务逻辑,平台有基本规则库,AI Assist 可利用 GPT-4 写 SQL 或纠错。

– 根因分析:系统能自动分析数据问题的根因,通过及时警报和因素综合展示。

– 数据管道支持:对数据管道和工具进行监测,采取措施保证数据质量。

– 非结构化文本和文档:部署 GPT-4 用于监测,评估文档质量。

思维导图:

文章地址:https://thenewstack.io/the-new-face-of-data-quality-anomalo-and-automated-monitoring/

文章来源:thenewstack.io

作者:Jelani Harper

发布时间:2024/6/26 17:00

语言:英文

总字数:1066字

预计阅读时间:5分钟

评分:86分

标签:人工智能,数据,可观测性

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Whether applications, information systems and computational resources are customer-facing or internal, revenue-generating or otherwise, one fact will almost always remain true about them.

They’re all founded upon data and only as useful as their data assets are.

When that data’s accurate, timely, complete and of dependable quality, these systems can meet any number of business objectives. However, as Anomalo CEO Elliot Shmukler told The New Stack, “The problem is all of those things break if you’re doing things wrong. Or, if your data is late. Or, if it’s missing.”

Contemporary data quality solutions account for these and an almost unlimited number of additional variables that diminish an organization’s data quality — and the output of its data-driven endeavors.

Moreover, they do so with a staggering amount of automation (based on machine learning (ML), low code and no-code constructs, and libraries of resources). They provide this functionality for data flowing through data pipelines, data at rest, and structured, semi-structured and unstructured data (including unstructured text).

With capabilities to speedily implement root cause analysis via natural language and pictorial descriptions, such platforms “help data teams find these kinds of issues with their data before things break,” Shmukler added. “Before the dashboards are wrong. Before the ML models based on that data go off their rails. Before the wrong decisions are made.”

Self-Supervised Learning

Anomalo’s machine learning is instrumental for enabling organizations to implement data quality expediently and, in most cases, with nominal effort. The offering primarily works by connecting to data warehouses or data lakes to begin “automatically monitoring any dataset … that you care about,” Shmukler indicated.

Anomalo’s machine learning models, which largely involve self-supervised learning, monitor datasets without users instituting rules, writing code, or describing what quality data is. Although data-profiling techniques are also involved, the self-supervised learning models “train themselves on the history of the dataset, rather than any human-labeled data,” Shmukler said. Soon after datasets are selected, the platform begins monitoring for details like:

- Data freshness: This check determines if new data is coming in when it’s expected.

- Completeness: This metric assesses if the data has the right volume or is missing segments, columnar information or other characteristics.

- Distribution: Distribution shifts indicate if datasets contain new, anomalous values.

- Columnar correlations: Anomalo can determine if there are atypical changes in the correlations between columns in tables.

By analyzing these and other factors, Shmukler said, the system’s “out of the box checks find 85 to 90 percent of all possible issues without you having to tell us what to look for.”

Deterministic Monitoring

Users can administer rules and business logic for specialized, deterministic monitoring for the remaining 10 to 15 percent of data quality issues. A library of basic rules is available in the platform for organizations to “take three to four clicks to implement an easy, simple rule,” Shmukler said.

Examples include rules for checking columnar values to detect things like nulls appearing where they shouldn’t be, whether values are in the correct format, and differences between particular tables.

For customized use cases in which SQL is required, AI Assist, a recently released capability, utilizes GPT-4 to write SQL from natural language prompts. It can also correct code errors if users prefer writing their own SQL.

“With AI Assist you can just tell us what you’re trying to do, and we’ll write that SQL for you,” Shmukler said.

Organizations can even issue checks for an entire set of metrics, up to approximately 100 at a time, for different datasets, or for the same one. Thus, when monitoring activities from multiple customers in multiple regions, for example, users can employ this feature instead of creating individual checks for each metric for each customer in each region—an arduous, time-consuming process.

With this capability, Shmukler said, the platform “will automatically look at that collection of metrics as a whole and identify the most anomalous, track them over time, and build individual models to understand how it’s moving.”

Root Cause Analysis

The simplicity Anomalo provides for automatically monitoring data quality characterizes that for rapidly ascertaining root causes of data incidents. The system issues “automated root cause analysis when rules fail,” according to Shmukler, and when anomalies are detected. Several factors influence the determination of the cause of data issues, including data lineage, current developments and previous ones.

A synthesis of these factors is disseminated via timely alerts of issues that link back to the underlying system and “the root cause that we’ve computed,” Shmukler said. “There’s historical information so you can put this issue in context. All of those things are available to you, just a click away.”

Alerts incorporate natural language explanations of—and visualizations depicting—any problems with either data or data pipelines.

Data Pipeline Support

Anomalo extends its monitoring to data pipelines and orchestration tools to implement measures like circuit breakers, which stop pipelines from transmitting data of substandard quality, and binning, which separates quality data from poor quality data, allowing the former to continue through the pipeline.

Shmukler recounted a use case in which a real estate customer, receiving third-party data from numerous nationwide sources and governmental agencies, was struggling to match its listings price data with that of the correct tax data.

Anomalo’s root cause analysis not only successfully pinpointed where the poor quality data came from but, Shmukler noted, “also identified which geography within the dataset was showing that bad data.”

Unstructured Text and Documents

The capacity to implement data quality on sources by merely connecting to them, stop data pipelines based on the quality of the data they contain, and automate natural language and pictorial explanations of causes of data incidents is just the beginning for Anomalo.

The vendor, which was recognized as Databricks’ Emerging Partner of the Year, recently released a feature in which it deploys GPT-4 for unstructured text monitoring, allowing users to upload a corpus and “create summaries of the documents to assess their quality while looking at how rich is this content,” Shmukler said. “What grade level is it at? Are there duplicates?”

Such efforts are modernizing data quality’s overall utility by bringing it into the realm of generative AI. Anomalo is not only employing this technology, but also doing so for organizations to fine-tune, train and institute retrieval augmented generation (RAG) on their own language models.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.