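Reader's Digest Summary

1. Keywords: Meta, DCPerf, Hyperscale, Workloads, Benchmark

2. Summary: Social media giant Meta developed the DCPerf benchmark suite to measure the performance of hyperscale applications and released it under an open source license, hoping it will become an industry standard. DCPerf has advantages over traditional benchmarks, Meta has worked with CPU vendors to validate it, and Google has a similar internal test suite.

3. Main points:

– Constrained by the limits of standard data center benchmarks, Meta developed DCPerf to measure the performance of hyperscale applications

– These applications require large numbers of servers to run

– DCPerf is modeled on large applications currently in use at Meta

– Meta released DCPerf on GitHub under the MIT open source license

– It hopes the suite will be adopted by academia, the hardware industry and others

– Advantages of DCPerf over traditional benchmarks such as SPEC CPU

– It enables precise configuration choices and performance projections, and identifies performance problems in hardware and software

– It provides richer application software diversity and better coverage signals on platform performance

– Meta has worked with CPU vendors to validate DCPerf

– Google also has its own internal test suite, Fleetbench, to help understand hyperscale workloads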

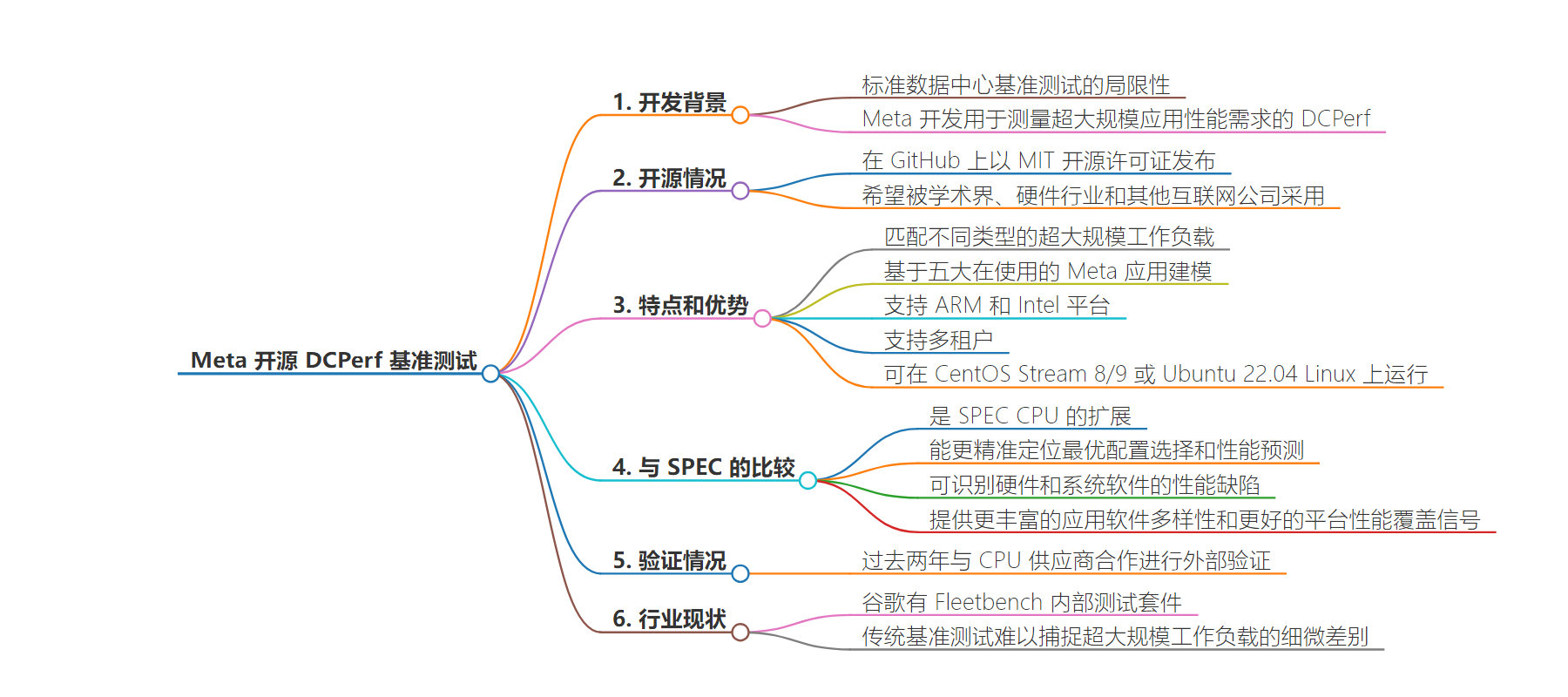

Mind map:

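Article URL: https://thenewstack.io/meta-open-sources-dcperf-a-benchmark-for-hyperscale-workloads/

Source: thenewstack.io

Author: Joab Jackson

Published: 2024/8/15 19:09

Language: English

Word count: 586

Estimated reading time: 3 minutes

Rating: 89

Tags: hyperscale computing, benchmarking, open source, data center, performance testing

The original article follows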

Stymied by the limits of the standard data center benchmarks, social media giant Meta, formerly Facebook, developed its own set of performance tests, called DCPerf, to measure the performance requirements of its hyperscale applications, those heavily used applications requiring hundreds or even thousands of servers to run.

The company has now released this benchmarking suite (on GitHub) under an MIT open source license, hoping it will be picked up by academia, the hardware industry and other Internet companies.

“As an open source benchmark suite, DCPerf has the potential to become an industry standard method to capture important workload characteristics of compute workloads that run in hyperscale data center deployments,” wrote Meta engineers Abhishek Dhanotia, Wei Su, Carlos Torres, Shobhit Kanaujia and Maxim Naumov in a blog post published Aug. 5.

Hyperscale computing is a different beast compared to traditional enterprise computing, or even supercomputer-based high performance computing (HPC). And it requires its own set of tests, the Meta engineers argued, given that data center-driven hyperscale computing takes up the largest market share of server deployments worldwide.

So it makes sense that hyperscale needs its own set of evaluation methodologies.

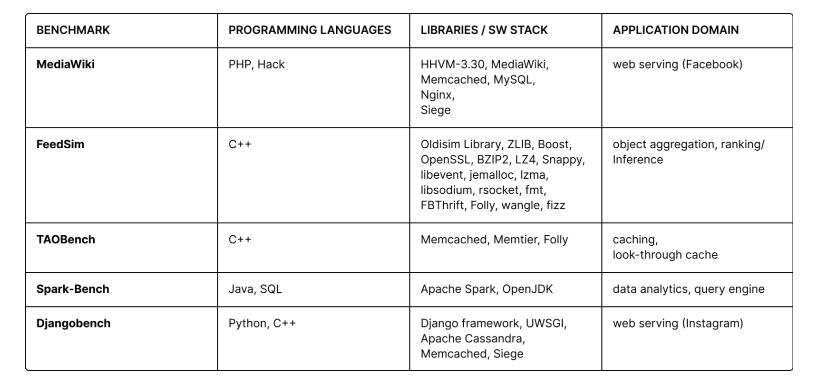

Different DCPerf benchmarks are based on different Meta workloads, each with a different mix of technologies. (Source: Meta)

DCPerf was designed to match different kinds of hyperscale workloads, with each of the five benchmarks modeled on a large Meta application currently in use.

The benchmarks support both ARM and Intel platforms and can support multi-tenancy for those cases where an application runs across multiple data centers. The testing software can run on either CentOS Stream 8/9 or Ubuntu 22.04 Linux.
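As a rough illustration of the operating system list above, the following sketch checks whether the contents of an `os-release` file describe one of the named distributions. It is not part of DCPerf, which may perform its own checks; the helper names `parse_os_release` and `is_listed_distro` and the sample file contents are assumptions made for this example.

```python
# Hypothetical helper, not taken from DCPerf: decide whether os-release text
# describes one of the distributions the article lists.
LISTED = {("centos", "8"), ("centos", "9"), ("ubuntu", "22.04")}


def parse_os_release(text: str) -> dict:
    """Parse KEY=VALUE lines in the os-release format into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def is_listed_distro(text: str) -> bool:
    """True for CentOS Stream 8/9 or Ubuntu 22.04, per the article's list."""
    fields = parse_os_release(text)
    distro = fields.get("ID", "").lower()
    version = fields.get("VERSION_ID", "")
    # CentOS Linux and CentOS Stream share ID=centos, so also check NAME.
    if distro == "centos" and "stream" not in fields.get("NAME", "").lower():
        return False
    return (distro, version) in LISTED


# Sample contents, assumed for illustration only.
ubuntu_sample = 'NAME="Ubuntu"\nID=ubuntu\nVERSION_ID="22.04"\n'
stream_sample = 'NAME="CentOS Stream"\nID="centos"\nVERSION_ID="9"\n'
legacy_sample = 'NAME="CentOS Linux"\nID="centos"\nVERSION_ID="8"\n'

print(is_listed_distro(ubuntu_sample))
print(is_listed_distro(stream_sample))
print(is_listed_distro(legacy_sample))
```

On a real machine, the same function could be fed the text of `/etc/os-release`.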

But What About SPEC?

To date, the industry standard for data center benchmarks has come from the Standard Performance Evaluation Corporation (SPEC). Meta itself uses SPEC’s CPU benchmark suite for processor evaluation.

DCPerf extends SPEC CPU, allowing the company to pinpoint optimal configuration choices, as well as make more accurate performance projections. It can even identify performance bugs in hardware and system software.

“DCPerf provides a much richer set of application software diversity and helps get better coverage signals on platform performance versus existing benchmarks such as SPEC CPU,” the engineers wrote. “Due to these benefits, we have also started using DCPerf to assist with our decision making process on which platforms to deploy in our data centers.”

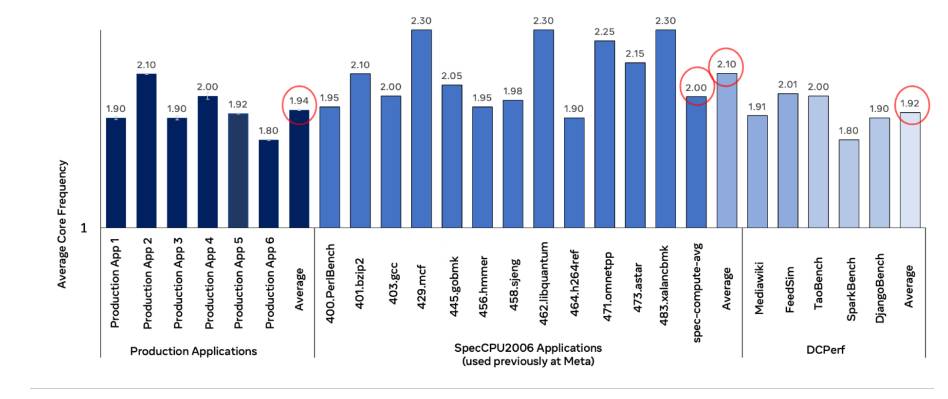

Production applications consume an average core frequency of 1.94. SPEC CPU 2006 estimated that number at 2.10, while DCPerf pegged it at 1.92. For a giant operation like Meta’s, more accurate predictions mean better estimates of how much hardware to buy. (Source: Meta)

In the blog post, the engineers showed how DCPerf provided more nuanced results in how well system-on-a-chip microarchitectures supported Meta’s applications.
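To put the three core-frequency figures quoted for Meta's chart side by side, here is a small sketch that computes each benchmark's signed error relative to the production value. Only the numbers 1.94, 2.10 and 1.92 come from the article; the function name `relative_error` and the choice of relative error as the metric are assumptions for illustration, not Meta's methodology.

```python
def relative_error(estimate: float, actual: float) -> float:
    """Signed error of an estimate, as a fraction of the actual value."""
    return (estimate - actual) / actual


# Figures quoted in the article (units are not stated there).
production = 1.94
estimates = {"SPEC CPU 2006": 2.10, "DCPerf": 1.92}

errors = {name: relative_error(value, production)
          for name, value in estimates.items()}

for name, err in errors.items():
    print(f"{name}: {err:+.1%}")
# SPEC CPU 2006: +8.2%
# DCPerf: -1.0%
```

Under this metric, the SPEC CPU 2006 estimate overshoots by roughly 8%, while the DCPerf estimate falls about 1% short.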

Meta has worked with the CPU vendors over the past two years to externally validate the suite.

Google Has a Benchmark, Too

Meta is not the only hyperscaler with its own internal testing suite.

Google has Fleetbench, which is a set of seven “microbenchmarks” designed to help CPU and system software builders better understand hyperscale workloads at Google.

“Traditional benchmarks often fall short in capturing the nuances of” hyperscale workloads, wrote Google researchers in a paper detailing Fleetbench. “Creating a public benchmark suite that is representative of the workloads used by actual warehouse-scale computers is challenging, as they typically run proprietary, non-public software that operates on confidential data.”
