Reading guide summary

1. Keywords: Hex-LLM, TPUs, Vertex AI Model Garden, LLM serving, optimization

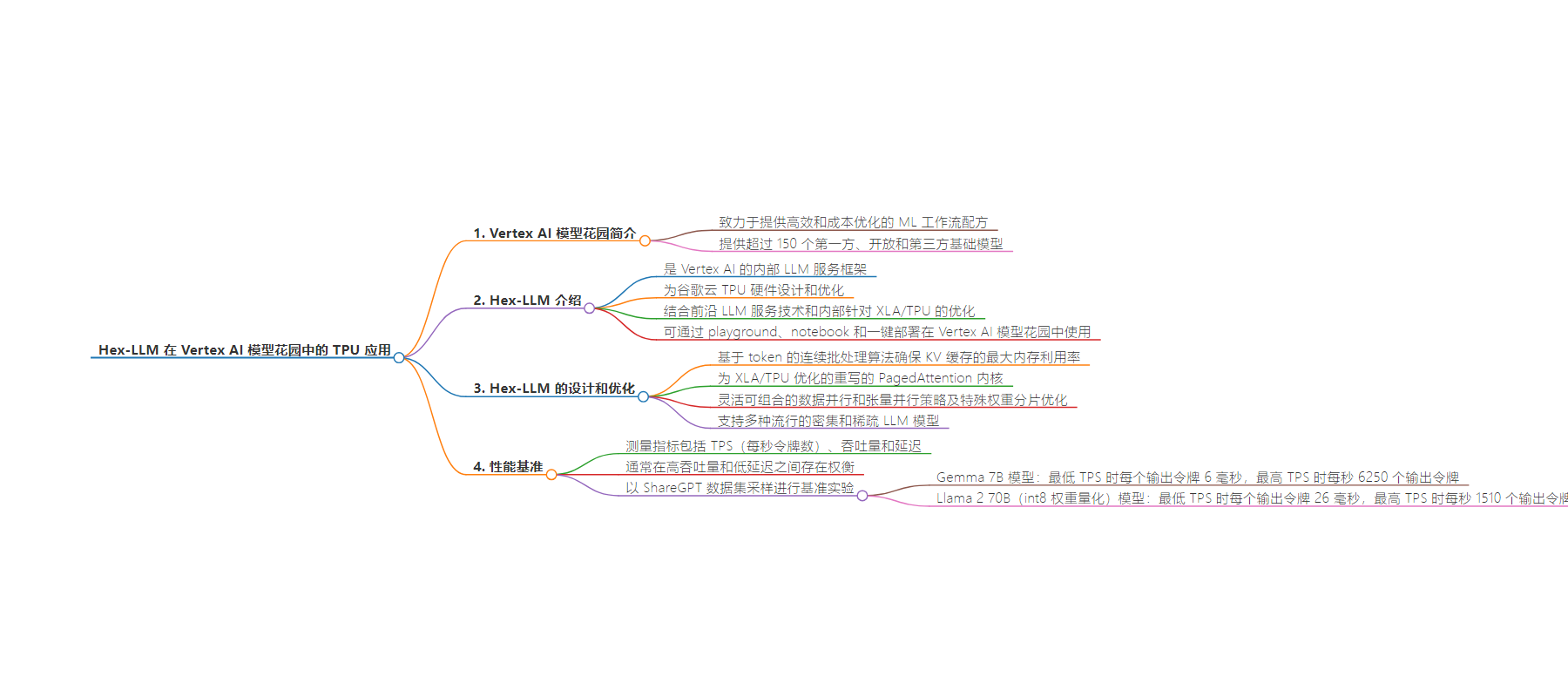

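2. Summary: Through Vertex AI Model Garden, Google Cloud provides efficient, cost-optimized ML workflows. Last year it introduced vLLM serving on GPUs, and it is now introducing Hex-LLM on TPUs. Hex-LLM is optimized for TPU hardware, combines recent serving techniques with in-house optimizations, supports a range of models, and delivers high throughput and low latency. Benchmark experiments show its performance on different models.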

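3. Main content:

- Introduction to Vertex AI Model Garden
  - Aims to provide efficient, cost-optimized ML workflow recipes, with more than 150 models.
- Launch of Hex-LLM
  - An in-house LLM serving framework optimized for Google's Cloud TPU hardware.
  - Combines techniques such as continuous batching and paged attention with in-house optimizations for XLA/TPU.
- Hex-LLM optimizations
  - Include a token-based continuous batching algorithm, a rewritten PagedAttention kernel, and more.
  - Supports a variety of popular dense and sparse LLMs.
- Performance tests
  - Explains what the TPS, throughput, and latency metrics mean.
  - Reports performance figures for Gemma 7B and Llama 2 70B on eight TPU v5e chips.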


Source: cloud.google.com

Authors: Xiang Xu, Pengchong Jin

Published: 2024/7/26 0:00

Language: English

Word count: 1071

Estimated reading time: 5 minutes

Rating: 91 points

Tags: LLM serving, Hex-LLM, TPU, Vertex AI Model Garden, Google Cloud

The original article follows.


With Vertex AI Model Garden, Google Cloud strives to deliver highly efficient and cost-optimized ML workflow recipes. Currently, it offers a selection of more than 150 first-party, open, and third-party foundation models. Last year, we introduced the popular open-source LLM serving stack vLLM on GPUs in Vertex AI Model Garden. Since then, we have seen rapid growth in serving deployments. Today, we are thrilled to introduce Hex-LLM, High-Efficiency LLM Serving with XLA, on TPUs in Vertex AI Model Garden.

Hex-LLM is Vertex AI’s in-house LLM serving framework that is designed and optimized for Google’s Cloud TPU hardware, which is available as part of AI Hypercomputer. Hex-LLM combines state-of-the-art LLM serving technologies, including continuous batching and paged attention, and in-house optimizations that are tailored for XLA/TPU, representing our latest high-efficiency and low-cost LLM serving solution on TPU for open-source models. Hex-LLM is now available in Vertex AI Model Garden via playground, notebook, and one-click deployment. We can’t wait to see how Hex-LLM and Cloud TPUs can help your LLM serving workflows.

Design and benchmarks

Hex-LLM is inspired by a number of successful open-source projects, including vLLM and FlashAttention, and incorporates the latest LLM serving technologies and in-house optimizations that are tailored for XLA/TPU.

The key optimizations in Hex-LLM include:

- A token-based continuous batching algorithm that ensures maximal memory utilization for KV caching.
- A complete rewrite of the PagedAttention kernel, optimized for XLA/TPU.
- Flexible and composable data parallelism and tensor parallelism strategies, with a special weight-sharding optimization to run large models efficiently on multiple TPU chips.
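To make the first optimization more concrete, here is a minimal sketch of what token-based continuous batching can look like. It is not Hex-LLM's implementation, which the post does not show. The names `Request`, `TokenBudgetScheduler`, and `max_tokens_in_flight` are hypothetical, and the "forward pass" is a stand-in that emits one token per running request. The idea illustrated is that requests are admitted by how many KV-cache tokens they may occupy rather than by a fixed batch size, and that a finished request frees its share immediately instead of waiting for the rest of the batch.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical request record; not a Hex-LLM type."""
    name: str
    prompt_tokens: int
    max_new_tokens: int
    generated: int = 0

    @property
    def reserved_tokens(self) -> int:
        # Worst-case KV-cache footprint of this request, counted in tokens.
        return self.prompt_tokens + self.max_new_tokens

    @property
    def done(self) -> bool:
        return self.generated >= self.max_new_tokens


class TokenBudgetScheduler:
    """Admits requests by token count rather than by a fixed batch size."""

    def __init__(self, max_tokens_in_flight: int):
        self.max_tokens_in_flight = max_tokens_in_flight
        self.waiting = deque()
        self.running = []

    def submit(self, request: Request) -> None:
        if request.reserved_tokens > self.max_tokens_in_flight:
            raise ValueError("request can never fit in the token budget")
        self.waiting.append(request)

    def tokens_in_flight(self) -> int:
        return sum(r.reserved_tokens for r in self.running)

    def step(self) -> list:
        """Run one decode step; return the names of requests that finished."""
        # Admit waiting requests in FIFO order while they fit in the budget.
        while self.waiting:
            head = self.waiting[0]
            if self.tokens_in_flight() + head.reserved_tokens > self.max_tokens_in_flight:
                break
            self.running.append(self.waiting.popleft())

        # Stand-in for the model forward pass: each running request emits one token.
        for r in self.running:
            r.generated += 1

        # Finished requests leave right away, freeing budget for the next step.
        finished = [r.name for r in self.running if r.done]
        self.running = [r for r in self.running if not r.done]
        return finished


def run_to_completion(scheduler: TokenBudgetScheduler) -> list:
    """Drive the scheduler until it is idle; return the finished names per step."""
    history = []
    while scheduler.waiting or scheduler.running:
        history.append(scheduler.step())
    return history
```

With a budget of 100 tokens and three requests reserving 42, 44, and 31 tokens, the third request waits until the first finishes and then joins the batch on the very next step, while the second is still decoding. A real server would typically track KV-cache pages rather than worst-case reservations; the sketch keeps the accounting deliberately simple.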

In addition, Hex-LLM supports a wide range of popular dense and sparse LLMs.

As the LLM field keeps evolving, we are also committed to bringing in more advanced technologies and the latest and greatest foundation models to Hex-LLM.

Hex-LLM delivers competitive performance with high throughput and low latency. We conducted benchmark experiments, and the metrics that we measured are explained as follows:

- TPS (tokens per second) is the average number of tokens an LLM server receives per second. Like QPS (queries per second), which measures the traffic of a general server, TPS measures the traffic of an LLM server, but at a finer granularity.
- Throughput measures how many tokens the server can generate in a given timespan at a specific TPS. It is a key metric for estimating the server's capacity to process concurrent requests.
- Latency measures the average time to generate one output token at a specific TPS. It estimates the end-to-end time spent on the server side for each request, including all queueing and processing time.
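One way to read the three definitions above is as simple aggregates over a per-request log. The sketch below is an interpretation, not the benchmark harness used for Hex-LLM. The `RequestLog` record and its field names are hypothetical. TPS is taken to mean input tokens received per second, and latency is computed as server-side time per output token, averaged over requests.

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    """Hypothetical per-request record; field names are illustrative."""
    arrival_s: float     # when the server received the request
    finish_s: float      # when the last output token was produced
    input_tokens: int
    output_tokens: int


def summarize(logs):
    """Return (tps, throughput, latency_ms_per_output_token) for one run."""
    start = min(r.arrival_s for r in logs)
    end = max(r.finish_s for r in logs)
    window_s = end - start

    # TPS: tokens received by the server per second (the offered load).
    tps = sum(r.input_tokens for r in logs) / window_s
    # Throughput: output tokens generated per second over the same window.
    throughput = sum(r.output_tokens for r in logs) / window_s
    # Latency: server-side time per output token, queueing included,
    # averaged over requests.
    per_request = [(r.finish_s - r.arrival_s) / r.output_tokens for r in logs]
    latency_ms = 1000.0 * sum(per_request) / len(per_request)
    return tps, throughput, latency_ms
```

Running the same summary at several offered loads gives one (throughput, latency) pair per TPS value, which is the raw material for a throughput-latency plot.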

Note that there is usually a tradeoff between high throughput and low latency. As TPS increases, both throughput and latency should increase. The throughput will saturate at a certain TPS, while the latency will continue to increase with higher TPS. Thus, given a particular TPS, we can measure a pair of throughput and latency metrics of the server. The resultant throughput-latency plot with respect to different TPS gives an accurate measurement of the LLM server performance.
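The saturation behaviour described in this paragraph can be read off a TPS sweep programmatically. The sketch below uses a hypothetical helper, `saturation_tps`, and a synthetic sweep whose numbers are invented purely for illustration; they are not Hex-LLM measurements. The helper returns the first TPS after which throughput grows by less than a chosen relative margin, which is an assumed and fairly crude definition of "saturated".

```python
def saturation_tps(sweep, min_gain=0.05):
    """Return the first TPS after which throughput grows by less than min_gain.

    sweep: list of (tps, throughput, latency_ms) tuples sorted by tps.
    Returns None if throughput is still growing at the end of the sweep.
    """
    for (tps_a, thr_a, _), (_, thr_b, _) in zip(sweep, sweep[1:]):
        if thr_b < thr_a * (1.0 + min_gain):
            return tps_a
    return None


# Synthetic sweep, invented for illustration only: (tps, throughput, latency_ms).
synthetic_sweep = [
    (100, 95, 10.0),
    (200, 190, 11.0),
    (400, 360, 14.0),
    (800, 400, 30.0),
    (1600, 405, 75.0),
]
```

In the synthetic data, throughput flattens between the last two points while latency more than doubles, which is the shape the paragraph describes: past saturation, extra load only adds queueing time.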

The data used to benchmark Hex-LLM is sampled from the ShareGPT dataset, a widely adopted dataset containing prompts and outputs of variable lengths.

In the following charts, we present the performance of Gemma 7B and Llama 2 70B (int8 weight quantized) models on eight TPU v5e chips:

- Gemma 7B model: 6 ms per output token at the lowest TPS, 6250 output tokens per second at the highest TPS.
- Llama 2 70B int8 model: 26 ms per output token at the lowest TPS, 1510 output tokens per second at the highest TPS.
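The two reported figures per model can be converted into more familiar rates with a little arithmetic. The sketch below uses only the numbers stated above. The `derive` helper is hypothetical, and both conversions rest on assumptions: that the per-token latency applies to a single request's decode stream, and that peak throughput can be attributed evenly across the eight chips.

```python
NUM_CHIPS = 8  # eight TPU v5e chips, as stated in the post

# (ms per output token at the lowest TPS, output tokens per second at the highest TPS)
reported = {
    "Gemma 7B": (6.0, 6250.0),
    "Llama 2 70B int8": (26.0, 1510.0),
}


def derive(ms_per_token, peak_tokens_per_s, num_chips=NUM_CHIPS):
    """Convert the two reported figures into per-stream and per-chip rates."""
    per_stream_tokens_per_s = 1000.0 / ms_per_token
    per_chip_tokens_per_s = peak_tokens_per_s / num_chips
    return per_stream_tokens_per_s, per_chip_tokens_per_s


for model, (ms, peak) in reported.items():
    stream, chip = derive(ms, peak)
    print(f"{model}: {stream:.2f} tokens/s per stream, {chip:.2f} tokens/s per chip")
```

Under those assumptions, Gemma 7B works out to roughly 167 tokens per second for a lightly loaded stream and about 781 tokens per second per chip at peak; Llama 2 70B int8 works out to roughly 38 and 189, respectively.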