Baoyue Reading Guide Summary

1. Keywords: AI regulation, innovation, outputs, copyright balance, existing laws

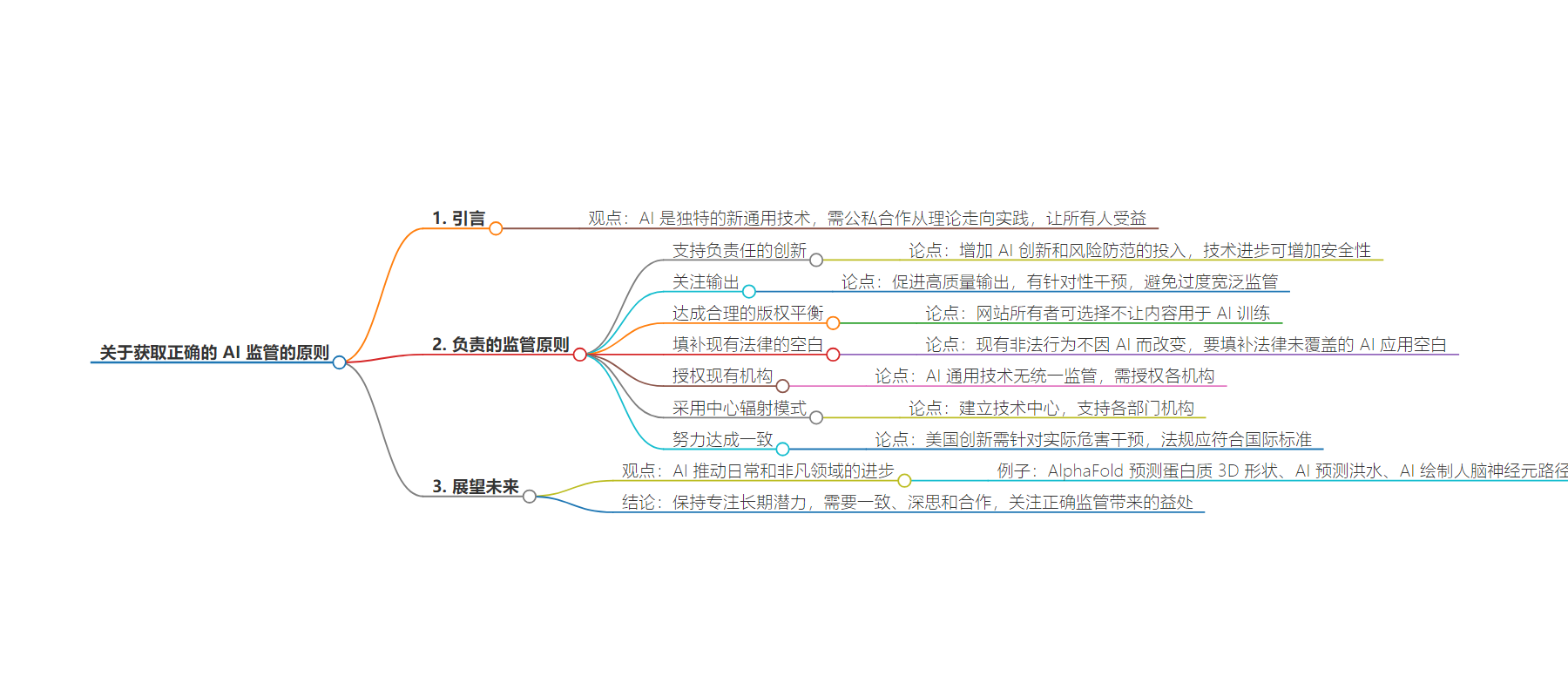

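2. Summary: AI is a new general-purpose technology, and realizing its potential requires public-private collaboration. The article proposes seven principles for responsible AI regulation, including supporting innovation, focusing on outputs, balancing copyright, and plugging gaps in existing laws, and stresses that AI can deliver more breakthroughs if regulation is done right.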

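3. Main content:

- AI's potential and collaboration: AI is a unique general-purpose technology; public and private stakeholders must collaborate to move from theory to practice so that everyone benefits.

- Principles for responsible regulation:

  - Support innovation: increase spending on both AI innovation and safeguards against risks; advances in technology can increase safety.

  - Focus on outputs: promote high-quality outputs and ground rules in real issues.

  - Strike a copyright balance: fair use of data advances science, while website owners can opt out of having their content used for AI training.

  - Plug gaps in existing laws: fill gaps where existing laws do not adequately cover AI.

  - Empower existing agencies: let each agency regulate according to its own needs.

  - Adopt a hub-and-spoke model: establish a center of technical expertise that supports sectoral agencies.

  - Strive for alignment: intervene at points of actual harm and align with international standards.

- AI's achievements and future: AI has already delivered many results, such as predicting protein shapes; staying focused on its long-term potential can bring more breakthroughs.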

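Article URL: https://blog.google/outreach-initiatives/public-policy/7-principles-for-getting-ai-regulation-right/

Source: blog.google

Author: Kent Walker

Published: 2024/6/26 10:00

Language: English

Word count: 1090

Estimated reading time: 5 minutes

Score: 88

Tags: AI regulation, responsible innovation, congressional bills, risk management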

The original text follows

AI is a unique tool, a new general-purpose technology. And as with the steam engine, electricity, or the internet, seizing its potential will require public and private stakeholders to collaborate to bridge the gap from AI theory to productive practice. Together, we can transition from the “wow” of AI to the “how” of AI, so that everyone, everywhere can benefit from AI’s opportunities.

Seven principles for responsible regulation

Companies in democracies have thus far led advances in AI capabilities and fundamental AI research. But we need to continue to aim high, focusing on future AI advances, because while America leads in some AI fields, we’re behind in others.

To complement scientific innovation, we’d suggest seven principles as the foundation of bold and responsible AI regulation:

- Support responsible innovation. The Senate’s Bipartisan AI Working Group starts its roadmap with a call for increased spending on both AI innovation and safeguards against known risks. That makes sense, because the goals are complementary. Advances in technology actually increase safety, helping us build more resilient systems. While new technology involves uncertainty, we can still incorporate good practices that build trust and don’t slow beneficial innovation.

- Focus on outputs. Let’s promote AI systems that generate high-quality outputs, while preventing or mitigating harms. Focusing on specific outputs lets regulators intervene in a focused way, rather than trying to manage fast-evolving computer science and deep-learning techniques. That approach grounds new rules in real issues, and helps avoid overbroad regulations that could short-circuit broadly beneficial AI advances.

- Strike a sound copyright balance. While fair use, copyright exceptions, and similar rules governing publicly available data unlock scientific advances and the ability to learn from prior knowledge, website owners should be able to use machine-readable tools to opt out of having content on their sites used for AI training.

- Plug gaps in existing laws. If something is illegal without AI, then it’s illegal with AI. We don’t need duplicative laws or reinvented wheels; we need to identify and fill gaps where existing laws don’t adequately cover AI applications.

- Empower existing agencies. There’s no one-size-fits-all regulation for a general-purpose technology like AI, any more than we have a Department of Engines, or one law to cover all uses of electricity. We instead need to empower agencies and make every agency an AI agency.

- Adopt a hub-and-spoke model. A hub-and-spoke model establishes a center of technical expertise at an agency like NIST that can advance government understanding of AI and support sectoral agencies, recognizing that issues in banking will differ from issues in pharmaceuticals or transportation.

- Strive for alignment. We’ve already seen dozens of frameworks and proposals to govern AI around the world, including more than 600 bills in U.S. states alone. Advancing American innovation requires intervention at points of actual harm, not blanket research inhibitors. And given the national and international scope of these scientific advances, regulation should reflect truly national approaches, aligned with international standards wherever possible.

Looking down the road

AI is driving advances from the everyday to the extraordinary, from improving tools you use every day (Google Search, Translate, Maps, Gmail, YouTube, and more) to changing the way we do science and tackle big societal challenges. Modern AI is not just a technological breakthrough, but a breakthrough in creating breakthroughs: a tool to make progress happen faster.

Think of Google DeepMind’s AlphaFold program, which has already predicted the 3D shapes of nearly all proteins known to science, and how they interact. Or using AI to forecast floods up to seven days in advance, providing life-saving alerts for 460 million people in 80 countries around the world. Or using AI to map the pathways of neurons in the human brain, revealing newly discovered structures and helping scientists understand fundamental processes such as thought, learning, and memory.

AI can drive more stunning breakthroughs like these, if we stay focused on its long-term potential.

That will take being consistent, thoughtful, and collaborative, and keeping our eyes on the benefits everyone stands to gain if we get it right.